mirror of

https://github.com/huggingface/diffusers.git

synced 2025-12-07 04:54:47 +08:00

Compare commits

12 Commits

modular-di

...

v0.19.2-pa

| SHA1 |
|---|
| 965e52ce61 |
| b1e52794a2 |
| c3e3a1ee10 |
| 9cde56a729 |
| c63d7cdba0 |
| aa4634a7fa |
| 0709650e9d |
| a9829164f4 |
| 49c95178ad |
| c2f755bc62 |
| 2fb877b66c |
| ef9824f9f7 |

@@ -39,8 +39,8 @@ Currently AutoPipeline support the Text-to-Image, Image-to-Image, and Inpainting

- [Stable Diffusion Controlnet](./api/pipelines/controlnet)
- [Stable Diffusion XL](./stable_diffusion/stable_diffusion_xl)
- [IF](./if)
- [Kandinsky](./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)
- [Kandinsky 2.2]()(./kandinsky)
- [Kandinsky](./kandinsky)
- [Kandinsky 2.2](./kandinsky)

## AutoPipelineForText2Image

@@ -105,6 +105,30 @@ One cheeseburger monster coming up! Enjoy!

<Tip>

We also provide an end-to-end Kandinsky pipeline, [`KandinskyCombinedPipeline`], which combines the prior pipeline and the text-to-image pipeline so you can run inference in a single step. You can create the combined pipeline with the [`~AutoPipelineForText2Image.from_pretrained`] method:

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained(
    "kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16
)
pipe.enable_model_cpu_offload()
```

Under the hood, it automatically loads both [`KandinskyPriorPipeline`] and [`KandinskyPipeline`]. To generate images, you no longer need to call both pipelines and pass the outputs of one to the other; you only need to call the combined pipeline once. You can set a separate `guidance_scale` and `num_inference_steps` for the prior pipeline with the `prior_guidance_scale` and `prior_num_inference_steps` arguments.

```python
prompt = "An alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(
    prompt=prompt,
    negative_prompt=negative_prompt,
    prior_guidance_scale=1.0,
    guidance_scale=4.0,
    height=768,
    width=768,
).images[0]
```

</Tip>

The Kandinsky model works extremely well with creative prompts. Here is some of the amazing art that can be created using the same process with different prompts.

```python

@@ -187,6 +211,34 @@ out.images[0].save("fantasy_land.png")

|

||||

|

||||

|

||||

|

||||

<Tip>

|

||||

|

||||

You can also use the [`KandinskyImg2ImgCombinedPipeline`] for end-to-end image-to-image generation with Kandinsky 2.1

|

||||

|

||||

```python

|

||||

from diffusers import AutoPipelineForImage2Image

|

||||

import torch

|

||||

import requests

|

||||

from io import BytesIO

|

||||

from PIL import Image

|

||||

import os

|

||||

|

||||

pipe = AutoPipelineForImage2Image.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

prompt = "A fantasy landscape, Cinematic lighting"

|

||||

negative_prompt = "low quality, bad quality"

|

||||

|

||||

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

|

||||

|

||||

response = requests.get(url)

|

||||

original_image = Image.open(BytesIO(response.content)).convert("RGB")

|

||||

original_image.thumbnail((768, 768))

|

||||

|

||||

image = pipe(prompt=prompt, image=original_image, strength=0.3).images[0]

|

||||

```

|

||||

</Tip>

|

||||

|

||||

### Text Guided Inpainting Generation

|

||||

|

||||

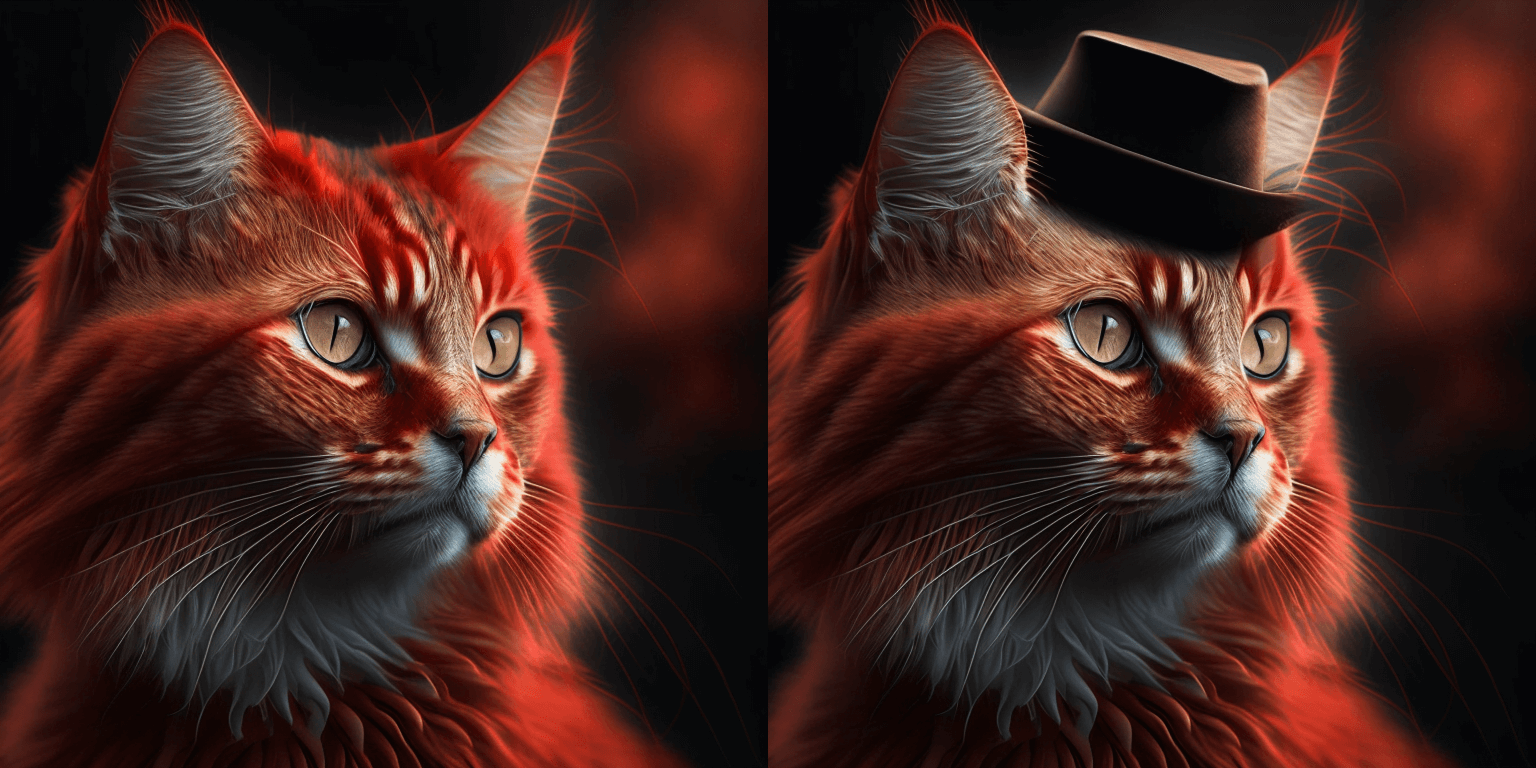

You can use [`KandinskyInpaintPipeline`] to edit images. In this example, we will add a hat to the portrait of a cat.

|

||||

@@ -231,6 +283,33 @@ image.save("cat_with_hat.png")

```

<Tip>

To use the [`KandinskyInpaintCombinedPipeline`] for end-to-end image inpainting, you can run the following code instead:

```python
import torch
from diffusers import AutoPipelineForInpainting

# `prompt`, `original_image`, and `mask` come from the previous inpainting example.
pipe = AutoPipelineForInpainting.from_pretrained("kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()
image = pipe(prompt=prompt, image=original_image, mask_image=mask).images[0]
```

</Tip>

🚨🚨🚨 __Breaking change for Kandinsky Mask Inpainting__ 🚨🚨🚨

We introduced a breaking change for the Kandinsky inpainting pipeline in the following pull request: https://github.com/huggingface/diffusers/pull/4207. Previously, we accepted a mask format where black pixels represent the masked-out area. This is inconsistent with all other pipelines in diffusers, so we have changed the mask format in Kandinsky to use white pixels instead.

Please update your inpainting code accordingly. If you are using Kandinsky inpainting in production, you now need to invert the mask:

```python
# For PIL input
import PIL.ImageOps
mask = PIL.ImageOps.invert(mask)

# For PyTorch and NumPy input
mask = 1 - mask
```
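To make the convention change concrete, here is a minimal NumPy sketch. It assumes a float mask with values in [0, 1], as the `1 - mask` line above implies; `convert_legacy_mask` is a hypothetical helper name, not a diffusers function.

```python
import numpy as np


def convert_legacy_mask(mask: np.ndarray) -> np.ndarray:
    """Hypothetical helper: flip an old-style Kandinsky mask to the new convention.

    Old convention: 0.0 (black) marks the area to inpaint.
    New convention: 1.0 (white) marks the area to inpaint.
    Assumes a float mask in [0, 1]; a uint8 mask in [0, 255] would need 255 - mask.
    """
    return 1.0 - mask


# A 4x4 legacy mask that asks to repaint the central 2x2 block.
legacy_mask = np.ones((4, 4), dtype=np.float32)
legacy_mask[1:3, 1:3] = 0.0

new_mask = convert_legacy_mask(legacy_mask)
print(new_mask)
```

Applying the conversion twice returns the original mask, so make sure it runs exactly once in your preprocessing.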

### Interpolate

The [`KandinskyPriorPipeline`] also comes with a utility function that lets you easily interpolate the latent space of different images and texts. Here is an example of how you can create an Impressionist-style portrait of your pet based on "The Starry Night".

@@ -11,7 +11,22 @@ specific language governing permissions and limitations under the License.

The Kandinsky 2.2 release includes robust new text-to-image models that support text-to-image generation, image-to-image generation, image interpolation, and text-guided image inpainting. The general workflow for these tasks with Kandinsky 2.2 is the same as in Kandinsky 2.1. First, use a prior pipeline to generate image embeddings from your text prompt, and then use one of the image decoding pipelines to generate the output image. The only difference is that in Kandinsky 2.2, the decoding pipelines no longer accept a `prompt` input; the image generation process is conditioned only on `image_embeds` and `negative_image_embeds`.

Let's look at an example of how to perform text-to-image generation using Kandinsky 2.2.

As with Kandinsky 2.1, the easiest way to perform text-to-image generation is to use the combined Kandinsky pipeline. The process is exactly the same as for Kandinsky 2.1; all you need to do is replace the Kandinsky 2.1 checkpoint with a Kandinsky 2.2 one.

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()

prompt = "An alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, height=768, width=768).images[0]
```

Now, let's look at an example where we run the prior pipeline and the text-to-image pipeline as separate steps. This way, we can understand what's happening under the hood and how Kandinsky 2.2 differs from Kandinsky 2.1.

First, let's create the prior pipeline and text-to-image pipeline with Kandinsky 2.2 checkpoints.

@@ -38,9 +38,25 @@ You can install the libraries as follows:

pip install transformers
pip install accelerate
pip install safetensors
```

### Watermarker

We recommend adding an invisible watermark to images generated by Stable Diffusion XL; this can help downstream applications identify whether an image is machine-synthesised. To do so, please install the [invisible-watermark library](https://pypi.org/project/invisible-watermark/) via:

```
pip install "invisible-watermark>=0.2.0"
```

If the `invisible-watermark` library is installed, the watermarker will be used **by default**.

If you have other provisions for generating or deploying images safely, you can disable the watermarker as follows:

```py
pipe = StableDiffusionXLPipeline.from_pretrained(..., add_watermarker=False)
```

### Text-to-Image

You can use SDXL as follows for *text-to-image*:

@@ -354,4 +354,52 @@ directly with [`~diffusers.loaders.LoraLoaderMixin.load_lora_weights`] like so:

lora_model_id = "sayakpaul/civitai-light-shadow-lora"
lora_filename = "light_and_shadow.safetensors"
pipeline.load_lora_weights(lora_model_id, weight_name=lora_filename)
```

```

### Supporting Stable Diffusion XL LoRAs trained using the Kohya-trainer

With this [PR](https://github.com/huggingface/diffusers/pull/4287), there should now be better support for loading Kohya-style LoRAs trained on Stable Diffusion XL (SDXL).

Here are some example checkpoints we tried out:

* SDXL 0.9:
    * https://civitai.com/models/22279?modelVersionId=118556
    * https://civitai.com/models/104515/sdxlor30costumesrevue-starlight-saijoclaudine-lora
    * https://civitai.com/models/108448/daiton-sdxl-test
    * https://filebin.net/2ntfqqnapiu9q3zx/pixelbuildings128-v1.safetensors
* SDXL 1.0:
    * https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/blob/main/sd_xl_offset_example-lora_1.0.safetensors

Here is an example of how to perform inference with these checkpoints in `diffusers`:

```python
from diffusers import DiffusionPipeline
import torch

base_model_id = "stabilityai/stable-diffusion-xl-base-0.9"
pipeline = DiffusionPipeline.from_pretrained(base_model_id, torch_dtype=torch.float16).to("cuda")
# Assumes `Kamepan.safetensors` has been downloaded into the current directory.
pipeline.load_lora_weights(".", weight_name="Kamepan.safetensors")

prompt = "anime screencap, glint, drawing, best quality, light smile, shy, a full body of a girl wearing wedding dress in the middle of the forest beneath the trees, fireflies, big eyes, 2d, cute, anime girl, waifu, cel shading, magical girl, vivid colors, (outline:1.1), manga anime artstyle, masterpiece, offical wallpaper, glint <lora:kame_sdxl_v2:1>"
negative_prompt = "(deformed, bad quality, sketch, depth of field, blurry:1.1), grainy, bad anatomy, bad perspective, old, ugly, realistic, cartoon, disney, bad propotions"
generator = torch.manual_seed(2947883060)
num_inference_steps = 30
guidance_scale = 7

image = pipeline(
    prompt=prompt, negative_prompt=negative_prompt, num_inference_steps=num_inference_steps,
    generator=generator, guidance_scale=guidance_scale
).images[0]
image.save("Kamepan.png")
```

`Kamepan.safetensors` comes from https://civitai.com/models/22279?modelVersionId=118556.

If you look carefully, the inference UX is identical to what we presented in the sections above.

Thanks to [@isidentical](https://github.com/isidentical) for helping us integrate this feature.

### Known limitations specific to the Kohya-styled LoRAs

* SDXL LoRAs that contain weights for both text encoders currently lead to weird results. We're actively investigating the issue.
* When images don't look similar to those produced by other UIs such as ComfyUI, there can be multiple reasons, as explained [here](https://github.com/huggingface/diffusers/pull/4287/#issuecomment-1655110736).
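The loader change in this diff treats a checkpoint as Kohya-style when every key starts with `lora_te_` or `lora_unet_`. Here is a minimal sketch of that prefix check on its own. The key names are made up for illustration, and `is_kohya_style` is a hypothetical helper rather than a diffusers function.

```python
def is_kohya_style(state_dict: dict) -> bool:
    """Sketch of the prefix check used to detect Kohya-style LoRA state dicts."""
    return all(k.startswith("lora_te_") or k.startswith("lora_unet_") for k in state_dict)


# Hypothetical key names, for illustration only.
kohya_keys = {
    "lora_unet_down_blocks_0_attentions_0_proj_in.lora_down.weight": None,
    "lora_unet_down_blocks_0_attentions_0_proj_in.alpha": None,
    "lora_te_text_model_encoder_layers_0_self_attn_k_proj.lora_up.weight": None,
}
diffusers_keys = {
    "unet.down_blocks.0.attentions.0.transformer_blocks.0.attn1.processor.to_k_lora.down.weight": None,
}

print(is_kohya_style(kohya_keys))  # True
print(is_kohya_style(diffusers_keys))  # False
```

Note that `all()` returns `True` for an empty dict, so an empty state dict would also pass this check by itself.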

@@ -4,6 +4,5 @@ transformers>=4.25.1

ftfy
tensorboard
Jinja2
invisible-watermark>=0.2.0
datasets
wandb

@@ -56,7 +56,7 @@ if is_wandb_available():

import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)
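These hunks raise the minimum version from `0.19.0.dev0` to the released `0.19.0`. Under PEP 440 ordering, a `.devN` pre-release sorts *before* its final release, so an install of `0.19.0.dev0` would now fail the check. The sketch below illustrates that ordering with a deliberately simplified parser that only understands `X.Y.Z` and `X.Y.Z.devN`. `parse_simple_version` and `check_min_version_sketch` are hypothetical names, and real code should use a full PEP 440 parser such as `packaging.version`.

```python
import re
from typing import Tuple

_SIMPLE_VERSION = re.compile(r"(\d+)\.(\d+)\.(\d+)(?:\.dev(\d+))?")


def parse_simple_version(text: str) -> Tuple[int, int, int, int, int]:
    """Parse "X.Y.Z" or "X.Y.Z.devN" into a sortable tuple (simplified, not full PEP 440)."""
    match = _SIMPLE_VERSION.fullmatch(text)
    if match is None:
        raise ValueError(f"Unsupported version string: {text!r}")
    major, minor, patch, dev = match.groups()
    # A final release must sort after every .devN of the same X.Y.Z,
    # so final releases get 1 in the fourth slot and dev releases get 0.
    if dev is None:
        return (int(major), int(minor), int(patch), 1, 0)
    return (int(major), int(minor), int(patch), 0, int(dev))


def check_min_version_sketch(installed: str, min_version: str) -> None:
    """Raise if `installed` is older than `min_version` (illustrative stand-in)."""
    if parse_simple_version(installed) < parse_simple_version(min_version):
        raise ImportError(f"A minimum version of {min_version} is required, but {installed} is installed.")


check_min_version_sketch("0.19.2", "0.19.0")  # passes silently
try:
    check_min_version_sketch("0.19.0.dev0", "0.19.0")
except ImportError as err:
    print(err)
```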

@@ -59,7 +59,7 @@ if is_wandb_available():

import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -58,7 +58,7 @@ if is_wandb_available():
import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.18.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -57,7 +57,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -4,4 +4,3 @@ transformers>=4.25.1
ftfy
tensorboard
Jinja2
invisible-watermark>=0.2.0
@@ -59,7 +59,7 @@ if is_wandb_available():
import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -36,7 +36,7 @@ from diffusers.utils import check_min_version

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

# Cache compiled models across invocations of this script.
cc.initialize_cache(os.path.expanduser("~/.cache/jax/compilation_cache"))

@@ -69,7 +69,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -924,10 +924,10 @@ def main(args):
else:
raise ValueError(f"unexpected save model: {model.__class__}")

lora_state_dict, network_alpha = LoraLoaderMixin.lora_state_dict(input_dir)
LoraLoaderMixin.load_lora_into_unet(lora_state_dict, network_alpha=network_alpha, unet=unet_)
lora_state_dict, network_alphas = LoraLoaderMixin.lora_state_dict(input_dir)
LoraLoaderMixin.load_lora_into_unet(lora_state_dict, network_alphas=network_alphas, unet=unet_)
LoraLoaderMixin.load_lora_into_text_encoder(
lora_state_dict, network_alpha=network_alpha, text_encoder=text_encoder_
lora_state_dict, network_alphas=network_alphas, text_encoder=text_encoder_
)

accelerator.register_save_state_pre_hook(save_model_hook)

@@ -58,7 +58,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -829,13 +829,13 @@ def main(args):
else:
raise ValueError(f"unexpected save model: {model.__class__}")

lora_state_dict, network_alpha = LoraLoaderMixin.lora_state_dict(input_dir)
LoraLoaderMixin.load_lora_into_unet(lora_state_dict, network_alpha=network_alpha, unet=unet_)
lora_state_dict, network_alphas = LoraLoaderMixin.lora_state_dict(input_dir)
LoraLoaderMixin.load_lora_into_unet(lora_state_dict, network_alphas=network_alphas, unet=unet_)
LoraLoaderMixin.load_lora_into_text_encoder(
lora_state_dict, network_alpha=network_alpha, text_encoder=text_encoder_one_
lora_state_dict, network_alphas=network_alphas, text_encoder=text_encoder_one_
)
LoraLoaderMixin.load_lora_into_text_encoder(
lora_state_dict, network_alpha=network_alpha, text_encoder=text_encoder_two_
lora_state_dict, network_alphas=network_alphas, text_encoder=text_encoder_two_
)

accelerator.register_save_state_pre_hook(save_model_hook)

@@ -52,7 +52,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -55,7 +55,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.18.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -54,7 +54,7 @@ if is_wandb_available():

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -33,7 +33,7 @@ from diffusers.utils import check_min_version

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -48,7 +48,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -78,7 +78,7 @@ else:

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -56,7 +56,7 @@ else:
# ------------------------------------------------------------------------------

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -30,7 +30,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

setup.py

@@ -233,7 +233,7 @@ install_requires = [

setup(
name="diffusers",
version="0.19.0.dev0", # expected format is one of x.y.z.dev0, or x.y.z.rc1 or x.y.z (no to dashes, yes to dots)
version="0.19.2", # expected format is one of x.y.z.dev0, or x.y.z.rc1 or x.y.z (no to dashes, yes to dots)
description="Diffusers",
long_description=open("README.md", "r", encoding="utf-8").read(),
long_description_content_type="text/markdown",
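The comment on the `version=` line spells out the accepted formats: `x.y.z.dev0`, `x.y.z.rc1`, or `x.y.z`, with dots rather than dashes. A small regex check that encodes that rule might look like the following. `is_valid_release_version` is a hypothetical helper, not part of the diffusers build.

```python
import re

# x.y.z, optionally followed by .devN or .rcN; dashes are rejected.
_RELEASE_VERSION = re.compile(r"\d+\.\d+\.\d+(?:\.dev\d+|\.rc\d+)?")


def is_valid_release_version(version: str) -> bool:
    return _RELEASE_VERSION.fullmatch(version) is not None


for candidate in ["0.19.0.dev0", "0.19.2", "0.19.0.rc1", "0.19.0-rc1", "0.19"]:
    print(candidate, is_valid_release_version(candidate))
```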

@@ -1,4 +1,4 @@

__version__ = "0.19.0.dev0"
__version__ = "0.19.2"

from .configuration_utils import ConfigMixin
from .utils import (

@@ -185,6 +185,11 @@ else:

StableDiffusionPix2PixZeroPipeline,
StableDiffusionSAGPipeline,
StableDiffusionUpscalePipeline,
StableDiffusionXLControlNetPipeline,
StableDiffusionXLImg2ImgPipeline,
StableDiffusionXLInpaintPipeline,
StableDiffusionXLInstructPix2PixPipeline,
StableDiffusionXLPipeline,
StableUnCLIPImg2ImgPipeline,
StableUnCLIPPipeline,
TextToVideoSDPipeline,

@@ -202,20 +207,6 @@ else:

VQDiffusionPipeline,
)

try:
if not (is_torch_available() and is_transformers_available() and is_invisible_watermark_available()):
raise OptionalDependencyNotAvailable()
except OptionalDependencyNotAvailable:
from .utils.dummy_torch_and_transformers_and_invisible_watermark_objects import * # noqa F403
else:
from .pipelines import (
StableDiffusionXLControlNetPipeline,
StableDiffusionXLImg2ImgPipeline,
StableDiffusionXLInpaintPipeline,
StableDiffusionXLInstructPix2PixPipeline,
StableDiffusionXLPipeline,
)

try:
if not (is_torch_available() and is_transformers_available() and is_k_diffusion_available()):
raise OptionalDependencyNotAvailable()
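The `try` / `except OptionalDependencyNotAvailable` / `else` blocks in this hunk export the real SDXL pipelines only when every optional dependency is importable. Otherwise, they fall back to dummy placeholder objects. Here is a stripped-down sketch of that pattern. The exception class, the availability helper, and the export names are illustrative stand-ins, not the diffusers implementations.

```python
import importlib.util
from typing import Iterable, List


class OptionalDependencyNotAvailable(Exception):
    """Stand-in for the exception used by the diffusers import guards."""


def is_module_available(name: str) -> bool:
    # find_spec returns None (without importing) when a top-level module is missing.
    return importlib.util.find_spec(name) is not None


def resolve_exports(required: Iterable[str], real: List[str], dummy: List[str]) -> List[str]:
    """Return the real export names if all `required` modules exist, else the dummy ones."""
    try:
        if not all(is_module_available(name) for name in required):
            raise OptionalDependencyNotAvailable()
    except OptionalDependencyNotAvailable:
        return dummy
    else:
        return real


print(resolve_exports(["json"], ["RealPipeline"], ["DummyPipeline"]))
print(resolve_exports(["surely_missing_module_xyz"], ["RealPipeline"], ["DummyPipeline"]))
```

In diffusers, the dummy placeholders are meant to produce an informative error when used. This sketch only models the selection step.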

@@ -12,6 +12,7 @@

# See the License for the specific language governing permissions and
# limitations under the License.
import os
import re
import warnings
from collections import defaultdict
from contextlib import nullcontext

@@ -56,7 +57,6 @@ UNET_NAME = "unet"

LORA_WEIGHT_NAME = "pytorch_lora_weights.bin"
LORA_WEIGHT_NAME_SAFE = "pytorch_lora_weights.safetensors"
TOTAL_EXAMPLE_KEYS = 5

TEXT_INVERSION_NAME = "learned_embeds.bin"
TEXT_INVERSION_NAME_SAFE = "learned_embeds.safetensors"

@@ -257,7 +257,7 @@ class UNet2DConditionLoadersMixin:

use_safetensors = kwargs.pop("use_safetensors", None)
# This value has the same meaning as the `--network_alpha` option in the kohya-ss trainer script.
# See https://github.com/darkstorm2150/sd-scripts/blob/main/docs/train_network_README-en.md#execute-learning
network_alpha = kwargs.pop("network_alpha", None)
network_alphas = kwargs.pop("network_alphas", None)

if use_safetensors and not is_safetensors_available():
raise ValueError(

@@ -322,7 +322,7 @@ class UNet2DConditionLoadersMixin:

attn_processors = {}
non_attn_lora_layers = []

is_lora = all("lora" in k for k in state_dict.keys())
is_lora = all(("lora" in k or k.endswith(".alpha")) for k in state_dict.keys())
is_custom_diffusion = any("custom_diffusion" in k for k in state_dict.keys())

if is_lora:
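The `is_lora` check now also accepts keys ending in `.alpha`. As a result, a state dict that carries Kohya-style alpha scalars alongside the LoRA weights is still recognized as a LoRA. The key names below are made up for illustration.

```python
def is_lora_old(keys):
    return all("lora" in k for k in keys)


def is_lora_new(keys):
    return all(("lora" in k or k.endswith(".alpha")) for k in keys)


keys = [
    "mid_block.attentions.0.transformer_blocks.0.attn1.processor.to_k_lora.down.weight",
    "mid_block.attentions.0.transformer_blocks.0.attn1.processor.alpha",  # hypothetical alpha key
]

print(is_lora_old(keys))  # False: the alpha key does not contain "lora"
print(is_lora_new(keys))  # True
```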

@@ -339,10 +339,25 @@ class UNet2DConditionLoadersMixin:

state_dict = {k.replace(f"{self.unet_name}.", ""): v for k, v in state_dict.items() if k in unet_keys}

lora_grouped_dict = defaultdict(dict)
for key, value in state_dict.items():
mapped_network_alphas = {}

all_keys = list(state_dict.keys())
for key in all_keys:
value = state_dict.pop(key)
attn_processor_key, sub_key = ".".join(key.split(".")[:-3]), ".".join(key.split(".")[-3:])
lora_grouped_dict[attn_processor_key][sub_key] = value

# Create another `mapped_network_alphas` dictionary so that we can properly map them.
if network_alphas is not None:
for k in network_alphas:
if k.replace(".alpha", "") in key:
mapped_network_alphas.update({attn_processor_key: network_alphas[k]})

if len(state_dict) > 0:
raise ValueError(
f"The state_dict has to be empty at this point but has the following keys \n\n {', '.join(state_dict.keys())}"
)

for key, value_dict in lora_grouped_dict.items():
attn_processor = self
for sub_key in key.split("."):
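The loop in this hunk regroups a flat LoRA state dict. Each key is split so that its last three dot-separated components (for example `to_k_lora.down.weight`) become the sub-key, and everything before them identifies the attention processor. An alpha value is attached to a processor when the alpha key, with `.alpha` removed, appears inside one of that processor's weight keys. Here is a standalone sketch with made-up keys; the exact alpha-key naming is an assumption, and `group_lora_keys` is a hypothetical name.

```python
from collections import defaultdict


def group_lora_keys(state_dict, network_alphas=None):
    """Sketch of the grouping loop: returns (grouped weights, per-processor alphas)."""
    grouped = defaultdict(dict)
    mapped_alphas = {}
    for key, value in state_dict.items():
        parts = key.split(".")
        processor_key, sub_key = ".".join(parts[:-3]), ".".join(parts[-3:])
        grouped[processor_key][sub_key] = value
        if network_alphas is not None:
            for alpha_key, alpha in network_alphas.items():
                # Substring match, as in the diff: "<prefix>.alpha" -> "<prefix>".
                if alpha_key.replace(".alpha", "") in key:
                    mapped_alphas[processor_key] = alpha
    return dict(grouped), mapped_alphas


prefix = "mid_block.attentions.0.transformer_blocks.0.attn1.processor"
weights = {
    f"{prefix}.to_k_lora.down.weight": "k_down",
    f"{prefix}.to_k_lora.up.weight": "k_up",
}
alphas = {f"{prefix}.alpha": 4.0}  # hypothetical alpha key naming

grouped, mapped = group_lora_keys(weights, alphas)
print(sorted(grouped[prefix]))  # ['to_k_lora.down.weight', 'to_k_lora.up.weight']
print(mapped)
```

Unlike the diff, this sketch does not pop keys out of the input dict, so it skips the "state_dict has to be empty" sanity check.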

@@ -352,13 +367,27 @@ class UNet2DConditionLoadersMixin:

# or add_{k,v,q,out_proj}_proj_lora layers.
if "lora.down.weight" in value_dict:
rank = value_dict["lora.down.weight"].shape[0]
hidden_size = value_dict["lora.up.weight"].shape[0]

if isinstance(attn_processor, LoRACompatibleConv):
lora = LoRAConv2dLayer(hidden_size, hidden_size, rank, network_alpha)
in_features = attn_processor.in_channels
out_features = attn_processor.out_channels
kernel_size = attn_processor.kernel_size

lora = LoRAConv2dLayer(
in_features=in_features,
out_features=out_features,
rank=rank,
kernel_size=kernel_size,
stride=attn_processor.stride,
padding=attn_processor.padding,
network_alpha=mapped_network_alphas.get(key),
)
elif isinstance(attn_processor, LoRACompatibleLinear):
lora = LoRALinearLayer(
attn_processor.in_features, attn_processor.out_features, rank, network_alpha
attn_processor.in_features,
attn_processor.out_features,
rank,
mapped_network_alphas.get(key),
)
else:
raise ValueError(f"Module {key} is not a LoRACompatibleConv or LoRACompatibleLinear module.")
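For the non-attention layers, the rank and output size come from the weight shapes. `lora.down.weight` has shape `(rank, in_features)` and `lora.up.weight` has shape `(out_features, rank)`, so `shape[0]` gives the rank and the output size, respectively. The `network_alpha` handed to the LoRA layer rescales the low-rank update by `alpha / rank`; as far as we know, diffusers' LoRA layers multiply the up-projection output by that factor, matching the kohya-ss `--network_alpha` convention mentioned in this diff. Here is a dependency-free sketch of the resulting weight update on nested lists; `lora_delta` is a hypothetical name.

```python
def lora_delta(down, up, network_alpha=None):
    """Dense weight update `(alpha / rank) * up @ down` for nested-list matrices.

    down: rank x in_features (like lora.down.weight)
    up:   out_features x rank (like lora.up.weight)
    """
    rank = len(down)  # down.shape[0]
    out_features = len(up)  # up.shape[0], the "hidden_size" in the diff
    in_features = len(down[0])
    scale = network_alpha / rank if network_alpha is not None else 1.0
    return [
        [scale * sum(up[o][r] * down[r][i] for r in range(rank)) for i in range(in_features)]
        for o in range(out_features)
    ]


down = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # rank 2, in_features 3
up = [[1.0, 1.0], [2.0, 0.0]]  # out_features 2, rank 2

print(lora_delta(down, up))  # [[1.0, 1.0, 0.0], [2.0, 0.0, 0.0]]
print(lora_delta(down, up, network_alpha=1.0))  # [[0.5, 0.5, 0.0], [1.0, 0.0, 0.0]]
```

With `network_alpha` equal to the rank, the scale is 1 and the update is unchanged. A smaller alpha damps the LoRA contribution.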

@@ -366,32 +395,64 @@ class UNet2DConditionLoadersMixin:

value_dict = {k.replace("lora.", ""): v for k, v in value_dict.items()}
lora.load_state_dict(value_dict)
non_attn_lora_layers.append((attn_processor, lora))
continue

rank = value_dict["to_k_lora.down.weight"].shape[0]
hidden_size = value_dict["to_k_lora.up.weight"].shape[0]

if isinstance(
attn_processor, (AttnAddedKVProcessor, SlicedAttnAddedKVProcessor, AttnAddedKVProcessor2_0)
):
cross_attention_dim = value_dict["add_k_proj_lora.down.weight"].shape[1]
attn_processor_class = LoRAAttnAddedKVProcessor
else:
cross_attention_dim = value_dict["to_k_lora.down.weight"].shape[1]
if isinstance(attn_processor, (XFormersAttnProcessor, LoRAXFormersAttnProcessor)):
attn_processor_class = LoRAXFormersAttnProcessor
# To handle SDXL.
rank_mapping = {}
hidden_size_mapping = {}
for projection_id in ["to_k", "to_q", "to_v", "to_out"]:
rank = value_dict[f"{projection_id}_lora.down.weight"].shape[0]
hidden_size = value_dict[f"{projection_id}_lora.up.weight"].shape[0]

rank_mapping.update({f"{projection_id}_lora.down.weight": rank})
hidden_size_mapping.update({f"{projection_id}_lora.up.weight": hidden_size})

if isinstance(
attn_processor, (AttnAddedKVProcessor, SlicedAttnAddedKVProcessor, AttnAddedKVProcessor2_0)
):
cross_attention_dim = value_dict["add_k_proj_lora.down.weight"].shape[1]
attn_processor_class = LoRAAttnAddedKVProcessor
else:
attn_processor_class = (
LoRAAttnProcessor2_0 if hasattr(F, "scaled_dot_product_attention") else LoRAAttnProcessor
cross_attention_dim = value_dict["to_k_lora.down.weight"].shape[1]
if isinstance(attn_processor, (XFormersAttnProcessor, LoRAXFormersAttnProcessor)):
attn_processor_class = LoRAXFormersAttnProcessor
else:
attn_processor_class = (
LoRAAttnProcessor2_0
if hasattr(F, "scaled_dot_product_attention")
else LoRAAttnProcessor
)

if attn_processor_class is not LoRAAttnAddedKVProcessor:
attn_processors[key] = attn_processor_class(
rank=rank_mapping.get("to_k_lora.down.weight"),
hidden_size=hidden_size_mapping.get("to_k_lora.up.weight"),
cross_attention_dim=cross_attention_dim,
network_alpha=mapped_network_alphas.get(key),
q_rank=rank_mapping.get("to_q_lora.down.weight"),
q_hidden_size=hidden_size_mapping.get("to_q_lora.up.weight"),
v_rank=rank_mapping.get("to_v_lora.down.weight"),
v_hidden_size=hidden_size_mapping.get("to_v_lora.up.weight"),
out_rank=rank_mapping.get("to_out_lora.down.weight"),
out_hidden_size=hidden_size_mapping.get("to_out_lora.up.weight"),
# rank=rank_mapping.get("to_k_lora.down.weight", None),
# hidden_size=hidden_size_mapping.get("to_k_lora.up.weight", None),
# q_rank=rank_mapping.get("to_q_lora.down.weight", None),
# q_hidden_size=hidden_size_mapping.get("to_q_lora.up.weight", None),
# v_rank=rank_mapping.get("to_v_lora.down.weight", None),
# v_hidden_size=hidden_size_mapping.get("to_v_lora.up.weight", None),
# out_rank=rank_mapping.get("to_out_lora.down.weight", None),
# out_hidden_size=hidden_size_mapping.get("to_out_lora.up.weight", None),
)
else:
attn_processors[key] = attn_processor_class(
rank=rank_mapping.get("to_k_lora.down.weight", None),
hidden_size=hidden_size_mapping.get("to_k_lora.up.weight", None),
cross_attention_dim=cross_attention_dim,
network_alpha=mapped_network_alphas.get(key),
)

attn_processors[key] = attn_processor_class(
hidden_size=hidden_size,
cross_attention_dim=cross_attention_dim,
rank=rank,
network_alpha=network_alpha,
)
attn_processors[key].load_state_dict(value_dict)
attn_processors[key].load_state_dict(value_dict)

elif is_custom_diffusion:
custom_diffusion_grouped_dict = defaultdict(dict)
for key, value in state_dict.items():
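The `# To handle SDXL.` block reads a separate rank and hidden size for each of the `to_k`, `to_q`, `to_v`, and `to_out` projections, rather than reusing the `to_k` values for all of them. It also takes `cross_attention_dim` from the `to_k` down weight. Here is a sketch of that bookkeeping with shape tuples standing in for tensors. The shapes are invented for illustration: a rank-8 `to_q` next to rank-4 projections, with a 2048-wide cross-attention input. `projection_dims` is a hypothetical name.

```python
def projection_dims(shapes):
    """Collect per-projection LoRA ranks and hidden sizes from weight shapes (sketch).

    `shapes` maps keys such as "to_q_lora.down.weight" to shape tuples.
    """
    rank_mapping, hidden_size_mapping = {}, {}
    for projection_id in ["to_k", "to_q", "to_v", "to_out"]:
        down_key = f"{projection_id}_lora.down.weight"
        up_key = f"{projection_id}_lora.up.weight"
        rank_mapping[down_key] = shapes[down_key][0]  # (rank, in_features)
        hidden_size_mapping[up_key] = shapes[up_key][0]  # (out_features, rank)
    cross_attention_dim = shapes["to_k_lora.down.weight"][1]
    return rank_mapping, hidden_size_mapping, cross_attention_dim


shapes = {
    "to_k_lora.down.weight": (4, 2048),
    "to_k_lora.up.weight": (640, 4),
    "to_q_lora.down.weight": (8, 640),
    "to_q_lora.up.weight": (640, 8),
    "to_v_lora.down.weight": (4, 2048),
    "to_v_lora.up.weight": (640, 4),
    "to_out_lora.down.weight": (4, 640),
    "to_out_lora.up.weight": (640, 4),
}
ranks, hidden_sizes, cross_dim = projection_dims(shapes)
print(ranks["to_q_lora.down.weight"], ranks["to_k_lora.down.weight"], cross_dim)  # 8 4 2048
```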

@@ -434,8 +495,10 @@ class UNet2DConditionLoadersMixin:

# set ff layers
for target_module, lora_layer in non_attn_lora_layers:
if hasattr(target_module, "set_lora_layer"):
target_module.set_lora_layer(lora_layer)
target_module.set_lora_layer(lora_layer)
# It should raise an error if we don't have a set lora here
# if hasattr(target_module, "set_lora_layer"):
# target_module.set_lora_layer(lora_layer)

def save_attn_procs(
self,

@@ -880,11 +943,11 @@ class LoraLoaderMixin:
kwargs (`dict`, *optional*):
See [`~loaders.LoraLoaderMixin.lora_state_dict`].
"""
state_dict, network_alpha = self.lora_state_dict(pretrained_model_name_or_path_or_dict, **kwargs)
self.load_lora_into_unet(state_dict, network_alpha=network_alpha, unet=self.unet)
state_dict, network_alphas = self.lora_state_dict(pretrained_model_name_or_path_or_dict, **kwargs)
self.load_lora_into_unet(state_dict, network_alphas=network_alphas, unet=self.unet)
self.load_lora_into_text_encoder(
state_dict,
network_alpha=network_alpha,
network_alphas=network_alphas,
text_encoder=self.text_encoder,
lora_scale=self.lora_scale,
)
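This hunk replaces a single `network_alpha` scalar with a `network_alphas` mapping. The removed Kohya conversion code further down shows why a scalar was limiting: it raised `ValueError("Network alpha is not consistent")` as soon as two modules disagreed. The sketch below contrasts the two behaviours on a hypothetical per-module alpha table; the key names are illustrative and `single_network_alpha` is a hypothetical re-expression of the removed loop, not a diffusers API.

```python
from typing import Dict, Optional


def single_network_alpha(alphas: Dict[str, float]) -> Optional[float]:
    """Old behaviour, sketched: collapse all alphas into one scalar or fail."""
    network_alpha = None
    for alpha in alphas.values():
        if network_alpha is None:
            network_alpha = alpha
        elif network_alpha != alpha:
            raise ValueError("Network alpha is not consistent")
    return network_alpha


# Hypothetical checkpoint whose modules were trained with different alphas.
alphas = {
    "unet.mid_block.attentions.0.proj_in.alpha": 8.0,
    "unet.mid_block.attentions.0.proj_out.alpha": 4.0,
}

# New behaviour: the mapping itself is passed along, so each module keeps its own alpha.
network_alphas = dict(alphas)
print(network_alphas["unet.mid_block.attentions.0.proj_out.alpha"])  # 4.0

try:
    single_network_alpha(alphas)
except ValueError as err:
    print(err)  # Network alpha is not consistent
```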

@@ -896,7 +959,7 @@ class LoraLoaderMixin:
**kwargs,
):
r"""
Return state dict for lora weights
Return state dict for lora weights and the network alphas.

<Tip warning={true}>

@@ -957,6 +1020,7 @@ class LoraLoaderMixin:
revision = kwargs.pop("revision", None)
subfolder = kwargs.pop("subfolder", None)
weight_name = kwargs.pop("weight_name", None)
unet_config = kwargs.pop("unet_config", None)
use_safetensors = kwargs.pop("use_safetensors", None)

if use_safetensors and not is_safetensors_available():

@@ -1018,53 +1082,158 @@ class LoraLoaderMixin:
else:
state_dict = pretrained_model_name_or_path_or_dict

# Convert kohya-ss Style LoRA attn procs to diffusers attn procs
network_alpha = None
if all((k.startswith("lora_te_") or k.startswith("lora_unet_")) for k in state_dict.keys()):
state_dict, network_alpha = cls._convert_kohya_lora_to_diffusers(state_dict)
network_alphas = None
if all(
(
k.startswith("lora_te_")
or k.startswith("lora_unet_")
or k.startswith("lora_te1_")
or k.startswith("lora_te2_")
)
for k in state_dict.keys()
):
# Map SDXL blocks correctly.
if unet_config is not None:
# use unet config to remap block numbers
state_dict = cls._map_sgm_blocks_to_diffusers(state_dict, unet_config)
state_dict, network_alphas = cls._convert_kohya_lora_to_diffusers(state_dict)

return state_dict, network_alpha
return state_dict, network_alphas

@classmethod
def load_lora_into_unet(cls, state_dict, network_alpha, unet):
def _map_sgm_blocks_to_diffusers(cls, state_dict, unet_config, delimiter="_", block_slice_pos=5):
is_all_unet = all(k.startswith("lora_unet") for k in state_dict)
new_state_dict = {}
inner_block_map = ["resnets", "attentions", "upsamplers"]

# Retrieves # of down, mid and up blocks
input_block_ids, middle_block_ids, output_block_ids = set(), set(), set()
for layer in state_dict:
if "text" not in layer:
layer_id = int(layer.split(delimiter)[:block_slice_pos][-1])
if "input_blocks" in layer:
input_block_ids.add(layer_id)
elif "middle_block" in layer:
middle_block_ids.add(layer_id)
elif "output_blocks" in layer:
output_block_ids.add(layer_id)
else:
raise ValueError("Checkpoint not supported")

input_blocks = {
layer_id: [key for key in state_dict if f"input_blocks{delimiter}{layer_id}" in key]
for layer_id in input_block_ids
}
middle_blocks = {
layer_id: [key for key in state_dict if f"middle_block{delimiter}{layer_id}" in key]
for layer_id in middle_block_ids
}
output_blocks = {
layer_id: [key for key in state_dict if f"output_blocks{delimiter}{layer_id}" in key]
for layer_id in output_block_ids
}

# Rename keys accordingly
for i in input_block_ids:
block_id = (i - 1) // (unet_config.layers_per_block + 1)
layer_in_block_id = (i - 1) % (unet_config.layers_per_block + 1)

for key in input_blocks[i]:
inner_block_id = int(key.split(delimiter)[block_slice_pos])
inner_block_key = inner_block_map[inner_block_id] if "op" not in key else "downsamplers"
inner_layers_in_block = str(layer_in_block_id) if "op" not in key else "0"
new_key = delimiter.join(
key.split(delimiter)[: block_slice_pos - 1]
+ [str(block_id), inner_block_key, inner_layers_in_block]
+ key.split(delimiter)[block_slice_pos + 1 :]
)
new_state_dict[new_key] = state_dict.pop(key)

for i in middle_block_ids:
key_part = None
if i == 0:
key_part = [inner_block_map[0], "0"]
elif i == 1:
key_part = [inner_block_map[1], "0"]
elif i == 2:
key_part = [inner_block_map[0], "1"]
else:
raise ValueError(f"Invalid middle block id {i}.")

for key in middle_blocks[i]:
new_key = delimiter.join(
key.split(delimiter)[: block_slice_pos - 1] + key_part + key.split(delimiter)[block_slice_pos:]
)
new_state_dict[new_key] = state_dict.pop(key)

for i in output_block_ids:
block_id = i // (unet_config.layers_per_block + 1)
layer_in_block_id = i % (unet_config.layers_per_block + 1)

for key in output_blocks[i]:
inner_block_id = int(key.split(delimiter)[block_slice_pos])
inner_block_key = inner_block_map[inner_block_id]
inner_layers_in_block = str(layer_in_block_id) if inner_block_id < 2 else "0"
new_key = delimiter.join(
key.split(delimiter)[: block_slice_pos - 1]
+ [str(block_id), inner_block_key, inner_layers_in_block]
+ key.split(delimiter)[block_slice_pos + 1 :]
)
new_state_dict[new_key] = state_dict.pop(key)

if is_all_unet and len(state_dict) > 0:
raise ValueError("At this point all state dict entries have to be converted.")
else:
# Remaining is the text encoder state dict.
for k, v in state_dict.items():
new_state_dict.update({k: v})

return new_state_dict
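A worked sketch of the index arithmetic in `_map_sgm_blocks_to_diffusers`, assuming `layers_per_block=2` (the value we believe SDXL's UNet config uses; treat it as an assumption). SGM numbers input blocks consecutively across the whole encoder, with one extra slot per stage for the strided `op` downsampler, hence the `+ 1` in the divisor; the `- 1` offset skips index 0, which we take to be the stem convolution. The helpers below are hypothetical and only re-express the hunk's logic on single keys.

```python
from typing import List, Tuple

# Mirrors `inner_block_map` in `_map_sgm_blocks_to_diffusers`.
INNER_BLOCK_MAP = ["resnets", "attentions", "upsamplers"]


def rename_input_block_key(
    key: str, layers_per_block: int, delimiter: str = "_", block_slice_pos: int = 5
) -> str:
    """Hypothetical helper: rename one SGM `input_blocks` key like the loop above."""
    parts = key.split(delimiter)
    i = int(parts[:block_slice_pos][-1])  # SGM input-block index
    block_id = (i - 1) // (layers_per_block + 1)
    layer_in_block_id = (i - 1) % (layers_per_block + 1)
    inner_block_id = int(parts[block_slice_pos])
    if "op" not in key:
        new_parts = [str(block_id), INNER_BLOCK_MAP[inner_block_id], str(layer_in_block_id)]
    else:
        # Strided "op" convolutions become the stage's single downsampler.
        new_parts = [str(block_id), "downsamplers", "0"]
    return delimiter.join(parts[: block_slice_pos - 1] + new_parts + parts[block_slice_pos + 1 :])


def middle_block_parts(i: int) -> List[str]:
    """SGM's middle block is resnet -> attention -> resnet."""
    if i == 0:
        return [INNER_BLOCK_MAP[0], "0"]
    if i == 1:
        return [INNER_BLOCK_MAP[1], "0"]
    if i == 2:
        return [INNER_BLOCK_MAP[0], "1"]
    raise ValueError(f"Invalid middle block id {i}.")


def output_block_target(i: int, inner_block_id: int, layers_per_block: int) -> Tuple[int, str, int]:
    """Return (up-block index, inner module list, position within that list)."""
    block_id = i // (layers_per_block + 1)
    layer_in_block_id = i % (layers_per_block + 1)
    # Upsamplers (inner id 2) always sit at position 0 of their list.
    layer = layer_in_block_id if inner_block_id < 2 else 0
    return block_id, INNER_BLOCK_MAP[inner_block_id], layer


print(rename_input_block_key("lora_unet_input_blocks_4_1_proj_in.lora_down.weight", 2))
# lora_unet_input_blocks_1_attentions_0_proj_in.lora_down.weight
print(rename_input_block_key("lora_unet_input_blocks_3_0_op.lora_down.weight", 2))
# lora_unet_input_blocks_0_downsamplers_0_op.lora_down.weight
print(output_block_target(2, 2, 2))  # (0, 'upsamplers', 0)
```

Note that the renamed keys still say `input_blocks`; the later `_convert_kohya_lora_to_diffusers` pass is what turns `input.blocks` into `down_blocks`.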

@classmethod
def load_lora_into_unet(cls, state_dict, network_alphas, unet):
"""
This will load the LoRA layers specified in `state_dict` into `unet`
This will load the LoRA layers specified in `state_dict` into `unet`.

Parameters:
state_dict (`dict`):
A standard state dict containing the lora layer parameters. The keys can either be indexed directly
into the unet or prefixed with an additional `unet` which can be used to distinguish between text
encoder lora layers.
network_alpha (`float`):
network_alphas (`Dict[str, float]`):
See `LoRALinearLayer` for more details.
unet (`UNet2DConditionModel`):
The UNet model to load the LoRA layers into.
"""

# If the serialization format is new (introduced in https://github.com/huggingface/diffusers/pull/2918),
# then the `state_dict` keys should have `self.unet_name` and/or `self.text_encoder_name` as
# their prefixes.
keys = list(state_dict.keys())

if all(key.startswith(cls.unet_name) or key.startswith(cls.text_encoder_name) for key in keys):
# Load the layers corresponding to UNet.
unet_keys = [k for k in keys if k.startswith(cls.unet_name)]
logger.info(f"Loading {cls.unet_name}.")
unet_lora_state_dict = {
k.replace(f"{cls.unet_name}.", ""): v for k, v in state_dict.items() if k in unet_keys
}
unet.load_attn_procs(unet_lora_state_dict, network_alpha=network_alpha)

# Otherwise, we're dealing with the old format. This means the `state_dict` should only
# contain the module names of the `unet` as its keys WITHOUT any prefix.
elif not all(
key.startswith(cls.unet_name) or key.startswith(cls.text_encoder_name) for key in state_dict.keys()
):
unet.load_attn_procs(state_dict, network_alpha=network_alpha)
unet_keys = [k for k in keys if k.startswith(cls.unet_name)]
state_dict = {k.replace(f"{cls.unet_name}.", ""): v for k, v in state_dict.items() if k in unet_keys}

if network_alphas is not None:
alpha_keys = [k for k in network_alphas.keys() if k.startswith(cls.unet_name)]
network_alphas = {
k.replace(f"{cls.unet_name}.", ""): v for k, v in network_alphas.items() if k in alpha_keys
}

else:
# Otherwise, we're dealing with the old format. This means the `state_dict` should only
# contain the module names of the `unet` as its keys WITHOUT any prefix.
warn_message = "You have saved the LoRA weights using the old format. To convert the old LoRA weights to the new format, you can first load them in a dictionary and then create a new dictionary like the following: `new_state_dict = {f'unet.{module_name}': params for module_name, params in old_state_dict.items()}`."
warnings.warn(warn_message)

# load loras into unet
unet.load_attn_procs(state_dict, network_alphas=network_alphas)
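To make the new key handling concrete: in the new serialization format every key carries a `unet` or `text_encoder` prefix, and only the `unet.`-prefixed entries (and, after this change, the matching `network_alphas` entries) are forwarded with the prefix stripped. A minimal sketch of that filtering on plain dicts, assuming `cls.unet_name == "unet"` and `cls.text_encoder_name == "text_encoder"`. `select_unet_lora` is a hypothetical name; the real method additionally warns on the old format and then calls `unet.load_attn_procs`.

```python
from typing import Dict, Optional, Tuple

UNET_NAME = "unet"  # assumed value of `cls.unet_name`
TEXT_ENCODER_NAME = "text_encoder"  # assumed value of `cls.text_encoder_name`


def select_unet_lora(
    state_dict: Dict[str, object],
    network_alphas: Optional[Dict[str, float]] = None,
) -> Tuple[Dict[str, object], Optional[Dict[str, float]], bool]:
    """Hypothetical helper: return (unet_state_dict, unet_alphas, is_new_format)."""
    keys = list(state_dict.keys())
    is_new_format = all(k.startswith(UNET_NAME) or k.startswith(TEXT_ENCODER_NAME) for k in keys)
    if not is_new_format:
        # Old format: keys are bare UNet module names, so everything is passed through.
        return state_dict, network_alphas, False

    prefix = f"{UNET_NAME}."
    # Like the original, `str.replace` strips every occurrence of "unet.", not only the leading one.
    unet_state_dict = {k.replace(prefix, ""): v for k, v in state_dict.items() if k.startswith(UNET_NAME)}
    if network_alphas is not None:
        network_alphas = {
            k.replace(prefix, ""): v for k, v in network_alphas.items() if k.startswith(UNET_NAME)
        }
    return unet_state_dict, network_alphas, True


state_dict = {
    "unet.mid_block.attentions.0.proj_in.lora.down.weight": "W_down",
    "text_encoder.text_model.encoder.layers.0.self_attn.to_q_lora.down.weight": "W_te",
}
alphas = {"unet.mid_block.attentions.0.proj_in.alpha": 8.0}
print(select_unet_lora(state_dict, alphas))
```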

@classmethod
def load_lora_into_text_encoder(cls, state_dict, network_alpha, text_encoder, prefix=None, lora_scale=1.0):
def load_lora_into_text_encoder(cls, state_dict, network_alphas, text_encoder, prefix=None, lora_scale=1.0):
"""
This will load the LoRA layers specified in `state_dict` into `text_encoder`

@@ -1072,7 +1241,7 @@ class LoraLoaderMixin:
state_dict (`dict`):
A standard state dict containing the lora layer parameters. The key should be prefixed with an
additional `text_encoder` to distinguish between unet lora layers.
network_alpha (`float`):
network_alphas (`Dict[str, float]`):
See `LoRALinearLayer` for more details.
text_encoder (`CLIPTextModel`):
The text encoder model to load the LoRA layers into.

@@ -1141,14 +1310,19 @@ class LoraLoaderMixin:
].shape[1]
patch_mlp = any(".mlp." in key for key in text_encoder_lora_state_dict.keys())

cls._modify_text_encoder(text_encoder, lora_scale, network_alpha, rank=rank, patch_mlp=patch_mlp)
cls._modify_text_encoder(
text_encoder,
lora_scale,
network_alphas,
rank=rank,
patch_mlp=patch_mlp,
)

# set correct dtype & device
text_encoder_lora_state_dict = {
k: v.to(device=text_encoder.device, dtype=text_encoder.dtype)
for k, v in text_encoder_lora_state_dict.items()
}

load_state_dict_results = text_encoder.load_state_dict(text_encoder_lora_state_dict, strict=False)
if len(load_state_dict_results.unexpected_keys) != 0:
raise ValueError(

@@ -1183,7 +1357,7 @@ class LoraLoaderMixin:
cls,
text_encoder,
lora_scale=1,
network_alpha=None,
network_alphas=None,
rank=4,
dtype=None,
patch_mlp=False,

@@ -1196,37 +1370,46 @@ class LoraLoaderMixin:
cls._remove_text_encoder_monkey_patch_classmethod(text_encoder)

lora_parameters = []
network_alphas = {} if network_alphas is None else network_alphas

for name, attn_module in text_encoder_attn_modules(text_encoder):
query_alpha = network_alphas.get(name + ".q.proj.alpha")
key_alpha = network_alphas.get(name + ".k.proj.alpha")
value_alpha = network_alphas.get(name + ".v.proj.alpha")
proj_alpha = network_alphas.get(name + ".out.proj.alpha")

for _, attn_module in text_encoder_attn_modules(text_encoder):
attn_module.q_proj = PatchedLoraProjection(
attn_module.q_proj, lora_scale, network_alpha, rank=rank, dtype=dtype
attn_module.q_proj, lora_scale, network_alpha=query_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(attn_module.q_proj.lora_linear_layer.parameters())

attn_module.k_proj = PatchedLoraProjection(
attn_module.k_proj, lora_scale, network_alpha, rank=rank, dtype=dtype
attn_module.k_proj, lora_scale, network_alpha=key_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(attn_module.k_proj.lora_linear_layer.parameters())

attn_module.v_proj = PatchedLoraProjection(
attn_module.v_proj, lora_scale, network_alpha, rank=rank, dtype=dtype
attn_module.v_proj, lora_scale, network_alpha=value_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(attn_module.v_proj.lora_linear_layer.parameters())

attn_module.out_proj = PatchedLoraProjection(
attn_module.out_proj, lora_scale, network_alpha, rank=rank, dtype=dtype
attn_module.out_proj, lora_scale, network_alpha=proj_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(attn_module.out_proj.lora_linear_layer.parameters())

if patch_mlp:
for _, mlp_module in text_encoder_mlp_modules(text_encoder):
for name, mlp_module in text_encoder_mlp_modules(text_encoder):
fc1_alpha = network_alphas.get(name + ".fc1.alpha")
fc2_alpha = network_alphas.get(name + ".fc2.alpha")

mlp_module.fc1 = PatchedLoraProjection(
mlp_module.fc1, lora_scale, network_alpha, rank=rank, dtype=dtype
mlp_module.fc1, lora_scale, network_alpha=fc1_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(mlp_module.fc1.lora_linear_layer.parameters())

mlp_module.fc2 = PatchedLoraProjection(
mlp_module.fc2, lora_scale, network_alpha, rank=rank, dtype=dtype
mlp_module.fc2, lora_scale, network_alpha=fc2_alpha, rank=rank, dtype=dtype
)
lora_parameters.extend(mlp_module.fc2.lora_linear_layer.parameters())
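The per-projection alphas looked up above are handed to `PatchedLoraProjection` as `network_alpha`. As we understand the LoRA layers in this release, a non-`None` alpha rescales the low-rank update by `network_alpha / rank`, and `lora_scale` then weights that update against the frozen projection; a key missing from `network_alphas` (`.get(...)` returning `None`) therefore means no alpha rescaling. The pure-Python sketch below illustrates that arithmetic on tiny matrices. `matvec` and `lora_forward` are hypothetical helpers, not diffusers APIs, and the alpha key names are illustrative.

```python
from typing import Dict, List, Optional

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(
    x: List[float],
    base_weight: Matrix,  # (out_features, in_features), the frozen projection
    down: Matrix,  # (rank, in_features)
    up: Matrix,  # (out_features, rank)
    lora_scale: float = 1.0,
    network_alpha: Optional[float] = None,
) -> List[float]:
    rank = len(down)
    base = matvec(base_weight, x)
    delta = matvec(up, matvec(down, x))
    if network_alpha is not None:
        # Kohya-style alpha: rescale the low-rank update by alpha / rank.
        delta = [d * network_alpha / rank for d in delta]
    return [b + lora_scale * d for b, d in zip(base, delta)]


x = [1.0, 2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 1.0]]  # rank 1
up = [[1.0], [0.0]]

network_alphas: Dict[str, float] = {"layers.0.q.proj.alpha": 0.5}
query_alpha = network_alphas.get("layers.0.q.proj.alpha")  # 0.5
missing_alpha = network_alphas.get("layers.0.k.proj.alpha")  # None -> no rescaling

print(lora_forward(x, identity, down, up, network_alpha=missing_alpha))  # [4.0, 2.0]
print(lora_forward(x, identity, down, up, network_alpha=query_alpha))  # [2.5, 2.0]
```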

@@ -1333,77 +1516,163 @@ class LoraLoaderMixin:
def _convert_kohya_lora_to_diffusers(cls, state_dict):
unet_state_dict = {}
te_state_dict = {}
network_alpha = None
unloaded_keys = []
te2_state_dict = {}
network_alphas = {}

for key, value in state_dict.items():
if "hada" in key or "skip" in key:
unloaded_keys.append(key)
elif "lora_down" in key:
lora_name = key.split(".")[0]
lora_name_up = lora_name + ".lora_up.weight"
lora_name_alpha = lora_name + ".alpha"
if lora_name_alpha in state_dict:
alpha = state_dict[lora_name_alpha].item()
if network_alpha is None:
network_alpha = alpha
elif network_alpha != alpha:
raise ValueError("Network alpha is not consistent")
# every down weight has a corresponding up weight and potentially an alpha weight
lora_keys = [k for k in state_dict.keys() if k.endswith("lora_down.weight")]
for key in lora_keys:
lora_name = key.split(".")[0]
lora_name_up = lora_name + ".lora_up.weight"
lora_name_alpha = lora_name + ".alpha"

if lora_name.startswith("lora_unet_"):
diffusers_name = key.replace("lora_unet_", "").replace("_", ".")
# if lora_name_alpha in state_dict:
# alpha = state_dict.pop(lora_name_alpha).item()
# network_alphas.update({lora_name_alpha: alpha})

if lora_name.startswith("lora_unet_"):
diffusers_name = key.replace("lora_unet_", "").replace("_", ".")

if "input.blocks" in diffusers_name:
diffusers_name = diffusers_name.replace("input.blocks", "down_blocks")
else:
diffusers_name = diffusers_name.replace("down.blocks", "down_blocks")

if "middle.block" in diffusers_name:
diffusers_name = diffusers_name.replace("middle.block", "mid_block")
else:
diffusers_name = diffusers_name.replace("mid.block", "mid_block")
if "output.blocks" in diffusers_name:
diffusers_name = diffusers_name.replace("output.blocks", "up_blocks")
else:
diffusers_name = diffusers_name.replace("up.blocks", "up_blocks")
diffusers_name = diffusers_name.replace("transformer.blocks", "transformer_blocks")
diffusers_name = diffusers_name.replace("to.q.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("to.k.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("to.v.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("to.out.0.lora", "to_out_lora")
diffusers_name = diffusers_name.replace("proj.in", "proj_in")
diffusers_name = diffusers_name.replace("proj.out", "proj_out")
if "transformer_blocks" in diffusers_name:
if "attn1" in diffusers_name or "attn2" in diffusers_name:
diffusers_name = diffusers_name.replace("attn1", "attn1.processor")
diffusers_name = diffusers_name.replace("attn2", "attn2.processor")
unet_state_dict[diffusers_name] = value
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict[lora_name_up]
elif "ff" in diffusers_name:
unet_state_dict[diffusers_name] = value
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict[lora_name_up]
elif any(key in diffusers_name for key in ("proj_in", "proj_out")):
unet_state_dict[diffusers_name] = value
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict[lora_name_up]

elif lora_name.startswith("lora_te_"):
diffusers_name = key.replace("lora_te_", "").replace("_", ".")
diffusers_name = diffusers_name.replace("text.model", "text_model")
diffusers_name = diffusers_name.replace("self.attn", "self_attn")
diffusers_name = diffusers_name.replace("q.proj.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("k.proj.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("v.proj.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("out.proj.lora", "to_out_lora")
if "self_attn" in diffusers_name:
te_state_dict[diffusers_name] = value
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict[lora_name_up]
elif "mlp" in diffusers_name:
# Be aware that this is the new diffusers convention and the rest of the code might
# not utilize it yet.
diffusers_name = diffusers_name.replace(".lora.", ".lora_linear_layer.")
te_state_dict[diffusers_name] = value
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict[lora_name_up]
diffusers_name = diffusers_name.replace("transformer.blocks", "transformer_blocks")
diffusers_name = diffusers_name.replace("to.q.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("to.k.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("to.v.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("to.out.0.lora", "to_out_lora")
diffusers_name = diffusers_name.replace("proj.in", "proj_in")
diffusers_name = diffusers_name.replace("proj.out", "proj_out")
diffusers_name = diffusers_name.replace("emb.layers", "time_emb_proj")

logger.info("Kohya-style checkpoint detected.")
if len(unloaded_keys) > 0:
example_unloaded_keys = ", ".join(x for x in unloaded_keys[:TOTAL_EXAMPLE_KEYS])
logger.warning(
f"There are some keys (such as: {example_unloaded_keys}) in the checkpoints we don't provide support for."
# SDXL specificity.
if "emb" in diffusers_name:
pattern = r"\.\d+(?=\D*$)"
diffusers_name = re.sub(pattern, "", diffusers_name, count=1)
if ".in." in diffusers_name:
diffusers_name = diffusers_name.replace("in.layers.2", "conv1")
if ".out." in diffusers_name:
diffusers_name = diffusers_name.replace("out.layers.3", "conv2")
if "downsamplers" in diffusers_name or "upsamplers" in diffusers_name:
diffusers_name = diffusers_name.replace("op", "conv")
if "skip" in diffusers_name:
diffusers_name = diffusers_name.replace("skip.connection", "conv_shortcut")

if "transformer_blocks" in diffusers_name:
if "attn1" in diffusers_name or "attn2" in diffusers_name:
diffusers_name = diffusers_name.replace("attn1", "attn1.processor")
diffusers_name = diffusers_name.replace("attn2", "attn2.processor")
unet_state_dict[diffusers_name] = state_dict.pop(key)
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
elif "ff" in diffusers_name:
unet_state_dict[diffusers_name] = state_dict.pop(key)
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
elif any(key in diffusers_name for key in ("proj_in", "proj_out")):
unet_state_dict[diffusers_name] = state_dict.pop(key)
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
else:
unet_state_dict[diffusers_name] = state_dict.pop(key)
unet_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)

elif lora_name.startswith("lora_te_"):
diffusers_name = key.replace("lora_te_", "").replace("_", ".")
diffusers_name = diffusers_name.replace("text.model", "text_model")
diffusers_name = diffusers_name.replace("self.attn", "self_attn")
diffusers_name = diffusers_name.replace("q.proj.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("k.proj.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("v.proj.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("out.proj.lora", "to_out_lora")
if "self_attn" in diffusers_name:
te_state_dict[diffusers_name] = state_dict.pop(key)
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
elif "mlp" in diffusers_name:
# Be aware that this is the new diffusers convention and the rest of the code might
# not utilize it yet.
diffusers_name = diffusers_name.replace(".lora.", ".lora_linear_layer.")
te_state_dict[diffusers_name] = state_dict.pop(key)
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)

# (sayakpaul): Duplicate code. Needs to be cleaned.
elif lora_name.startswith("lora_te1_"):
diffusers_name = key.replace("lora_te1_", "").replace("_", ".")
diffusers_name = diffusers_name.replace("text.model", "text_model")
diffusers_name = diffusers_name.replace("self.attn", "self_attn")
diffusers_name = diffusers_name.replace("q.proj.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("k.proj.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("v.proj.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("out.proj.lora", "to_out_lora")
if "self_attn" in diffusers_name:
te_state_dict[diffusers_name] = state_dict.pop(key)
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
elif "mlp" in diffusers_name:
# Be aware that this is the new diffusers convention and the rest of the code might
# not utilize it yet.
diffusers_name = diffusers_name.replace(".lora.", ".lora_linear_layer.")
te_state_dict[diffusers_name] = state_dict.pop(key)
te_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)

# (sayakpaul): Duplicate code. Needs to be cleaned.
elif lora_name.startswith("lora_te2_"):
diffusers_name = key.replace("lora_te2_", "").replace("_", ".")
diffusers_name = diffusers_name.replace("text.model", "text_model")
diffusers_name = diffusers_name.replace("self.attn", "self_attn")
diffusers_name = diffusers_name.replace("q.proj.lora", "to_q_lora")
diffusers_name = diffusers_name.replace("k.proj.lora", "to_k_lora")
diffusers_name = diffusers_name.replace("v.proj.lora", "to_v_lora")
diffusers_name = diffusers_name.replace("out.proj.lora", "to_out_lora")
if "self_attn" in diffusers_name:
te2_state_dict[diffusers_name] = state_dict.pop(key)
te2_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)
elif "mlp" in diffusers_name:
# Be aware that this is the new diffusers convention and the rest of the code might
# not utilize it yet.
diffusers_name = diffusers_name.replace(".lora.", ".lora_linear_layer.")
te2_state_dict[diffusers_name] = state_dict.pop(key)
te2_state_dict[diffusers_name.replace(".down.", ".up.")] = state_dict.pop(lora_name_up)

# Rename the alphas so that they can be mapped appropriately.
if lora_name_alpha in state_dict:
alpha = state_dict.pop(lora_name_alpha).item()
if lora_name_alpha.startswith("lora_unet_"):
prefix = "unet."
elif lora_name_alpha.startswith(("lora_te_", "lora_te1_")):
prefix = "text_encoder."
else:
prefix = "text_encoder_2."
new_name = prefix + diffusers_name.split(".lora.")[0] + ".alpha"
network_alphas.update({new_name: alpha})

if len(state_dict) > 0:
raise ValueError(
f"The following keys have not been correctly renamed: \n\n {', '.join(state_dict.keys())}"
)

unet_state_dict = {f"{UNET_NAME}.{module_name}": params for module_name, params in unet_state_dict.items()}
te_state_dict = {f"{TEXT_ENCODER_NAME}.{module_name}": params for module_name, params in te_state_dict.items()}
logger.info("Kohya-style checkpoint detected.")
unet_state_dict = {f"{cls.unet_name}.{module_name}": params for module_name, params in unet_state_dict.items()}
te_state_dict = {
f"{cls.text_encoder_name}.{module_name}": params for module_name, params in te_state_dict.items()
}
te2_state_dict = (
{f"text_encoder_2.{module_name}": params for module_name, params in te2_state_dict.items()}
if len(te2_state_dict) > 0
else None
)
if te2_state_dict is not None:
te_state_dict.update(te2_state_dict)

new_state_dict = {**unet_state_dict, **te_state_dict}
return new_state_dict, network_alpha
return new_state_dict, network_alphas
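Several of the renaming rules in `_convert_kohya_lora_to_diffusers` are easiest to follow on a single key. The sketch below re-expresses four of them on plain strings: the all-keys prefix test in `lora_state_dict` that routes a checkpoint here, the `lora_te_` replacement chain for CLIP text-encoder keys, the alpha-key renaming, and the SDXL regex that drops a trailing index from time-embedding keys. All function names are hypothetical and the example keys are illustrative, not taken from a real checkpoint.

```python
import re
from typing import Iterable, Optional

KOHYA_PREFIXES = ("lora_te_", "lora_unet_", "lora_te1_", "lora_te2_")


def is_kohya_checkpoint(keys: Iterable[str]) -> bool:
    """Same test as in `lora_state_dict`: every key carries a Kohya prefix."""
    return all(k.startswith(KOHYA_PREFIXES) for k in keys)


def convert_text_encoder_key(key: str, prefix: str = "lora_te_") -> Optional[str]:
    """Re-express the `lora_te_` renaming chain for one key."""
    name = key.replace(prefix, "").replace("_", ".")
    for old, new in (
        ("text.model", "text_model"),
        ("self.attn", "self_attn"),
        ("q.proj.lora", "to_q_lora"),
        ("k.proj.lora", "to_k_lora"),
        ("v.proj.lora", "to_v_lora"),
        ("out.proj.lora", "to_out_lora"),
    ):
        name = name.replace(old, new)
    if "self_attn" in name:
        return name
    if "mlp" in name:
        return name.replace(".lora.", ".lora_linear_layer.")
    # Anything else is not renamed; the original leaves it in `state_dict`,
    # which makes the final `len(state_dict) > 0` check raise.
    return None


def alpha_key(lora_name_alpha: str, diffusers_name: str) -> str:
    """Prefix selection and naming used when collecting `network_alphas`."""
    if lora_name_alpha.startswith("lora_unet_"):
        prefix = "unet."
    elif lora_name_alpha.startswith(("lora_te_", "lora_te1_")):
        prefix = "text_encoder."
    else:
        prefix = "text_encoder_2."
    return prefix + diffusers_name.split(".lora.")[0] + ".alpha"


def strip_emb_index(name: str) -> str:
    """SDXL tweak: drop the last numeric path segment from embedding keys."""
    if "emb" in name:
        name = re.sub(r"\.\d+(?=\D*$)", "", name, count=1)
    return name


print(convert_text_encoder_key("lora_te_text_model_encoder_layers_0_self_attn_q_proj.lora_down.weight"))
# text_model.encoder.layers.0.self_attn.to_q_lora.down.weight
print(strip_emb_index("down_blocks.0.resnets.0.time_emb_proj.1.lora.down.weight"))
# down_blocks.0.resnets.0.time_emb_proj.lora.down.weight
```

The regex removes the first `.<digits>` segment that has no further digits after it, which in these keys is the index that `emb.layers` carried before being renamed to `time_emb_proj`.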

def unload_lora_weights(self):
"""

@@ -521,17 +521,32 @@ class LoRAAttnProcessor(nn.Module):
Equivalent to `alpha` but its usage is specific to Kohya (A1111) style LoRAs.
"""

def __init__(self, hidden_size, cross_attention_dim=None, rank=4, network_alpha=None):