Mirror of https://github.com/huggingface/diffusers.git, synced 2025-12-07 04:54:47 +08:00

Compare commits

418 Commits

```text
c1b378db69 b50a9ae383 ea2e177c1d 513f1fbfb0 d7b692083c 9070c394aa 194ed794d8 051b34635f
5f25818a0f c25d8c905c 5782e0393d 92b6dbba1a c72e343085 3228eb1609 c1488ff348 b344c953a8
dd10da76a7 543ee1e092 75b6c16567 c4ae7c2421 a2090375ca c4a3b09a36 616c3a42cb d23cf98769
eeb9264acd b6447fa87e b6cadcef98 3100bc9670 e05f03ae41 6c15636b0b 89f2011ced 0f8547c2af
343180c2cf 27782bc18e cde0ed162a 570d3f1eb9 85244d4a59 1a84bd2a0f 3247eadde4 a487b5095a
04fa7baea8 9a04a8a6a8 a05a5fb9ba 71faf347fd 2f1f7b01d6 5311f564ed 3b7f514a1c 7c0a861894
a73ae3e5b0 06505ba4b4 13457002c0 302b86bd0b d87d5edf66 e795a4c6f8 4293b9f54f 0e5f2daee7
416749ff96 b1b99b59ac 606ac57e50 394243ce98 fe98574622 c5c9399610 836f3f35c2 9c3820d05a
13e37cabe0 760dcb1ffc 889aa6008c 76f9b52289 6b275fca49 1b42732ced 9e9d2dbc59 8b4371f70f
919e27d357 ad9d252596 7e11392dfd 1f49a343b5 936cd08488 3a32b8c916 c3a15437f8 8c31925b3b
33344ed916 7353b74ec2 44bb38fd8b 2ea64a08ed 37fe8e00b2 0ea78f0d3b 0e5a99bb5a e3c982ee29
ab00f5d3e1 3f0b44b322 cb90fd69b4 f794432e81 182b164f32 8b42c7cecc 66d5a1804c d5acb4110a
6cabc599a2 36b459f6e6 1820024005 ffe7b93b60 f82ebb9a03 63c68d979a ba3c9a9a3a b5c684f042
da8e87e201 43bbc78123 1c14ce9509 29628acbec 9d2fc6b535 3f1e95928e 87060e6a9c e5f3415fbd
f5ca5af6ce 2ac19ff190 badc5517ff a8fc1560c6 f448360bd0 97e1e3ba76 dacabaa47f 6d5ef87e6b
e7fe901e5e c3d78cd306 2a69c0b7b8 c8c0c0e846 5e12d5c691 8aed37c1bd 06c79730d0 ea8d58ea91
c352faeae3 107986639d d9316bf8bc 3abf4bc439 94566e6dd8 4e2674934f 53a42d0a0c 321f9791d6
c524244f49 d224c6373f 44705a648b a7b0047e0f dcb9070bc2 11667d08d3 221de0edee 0eac7bd682
1e7e23a9c6 b8415bb480 3a15afacab 571e4062e5 14bd3567b0 c2bc59d2b1 ab946575b1 1468f754e0
fa7443c899 8d7771d8b0 a1b5ef5ddc f26d3011c7 9da575d63c 979c48be04 099d3eab49 61dc657461
23904d54d0 c691bb2f42 4c293e0e1b 516cb9e7f8 60a981343e db5a05742e 0dbc4779c8 5018abff6e
f1aade0596 abedfb08f1 61ea57c5a7 810c0e4fda db7ec72dd8 52e0c5b294 fb188cd3f5 efe1e60e12
fd6f93b2b1 db934c6750 185347e411 c1c4dea98d f4cd5a20d0 3dbd6a8f4d c54f36f087 8b0bc596de
f35387b33f 3e2cff4da2 639b861129 663393e28a c50d997591 f1cb807496 13ac40ed8e ebe683432f
b897008122 8830af1168 81e7144783 c9bd4d4338 7e0fd19ffe 21aac1aca9 b65eb377dd 26ce60c46d
358531be9d 66ee73eebc 32b93da875 597b7ae2fb 519bd41ff3 eb90d3be13 df2e145e5f 046dc43075
c174bcf4bf 466214d2d6 4e125f72ab 0926dc2418 8cba133f36 f47066f707 859ffea2b1 65788e46ed
eceeb97242 333a8da678 814133ec9c f15ab901a0 d1f2e3e47b 1899457b24 ebf3717c37 976173a4bf
bae04ea9d8 0b7daa6de9 99568c5a39 2ac9b02609 17e5b4921a 36e1893c6f 4d1536bb2e e5d9baf0fe
c482d7bd4f e47c97a451 740326d2a2 31d1f3c8c0 635da72374 79db3eb6ca e372767c4d c45fd7498c
9dccc7dc42 52b3ff5eb9 fff981df2f a42b900d27 bdecc3cffd 0efac0aac9 d74b804d05 a859b1992b
22b63d155a 85d991a12a 3a5c87055c a2b72faff7 c9504bba10 26ea58d4e1 d1fb309381 7b9b946cb2
b9de7172ba 4261c3aadf 932ce05d97 4e08e0ca42 af6c143919 07ff0abff4 3286dac6bf 1cf7933ea2
d726857f7e ee010726ab abcb25978a 183056f243 dc7c49e4e4 c991ffd4f0 3986741b8b 0e13d3293c
3f9e3d8ad6 e13ee8b5b3 0027993e91 6846ee2ac4 c7a39d38ad 02a76c2c81 9b9afc9726 b7f0ce5b39
6921393ae2 17bf65e186 014ebc594d 168e5b7ffa 43bf361a7a 8199f09c22 7c120874be 3562a3e661
1a0331a78a fbb103deb6 45a09bebf3 0183bf13c7 f6e8c8c09c 9a4d53a476 ba264419f4 dc6d028654
d5c527a499 135acd83af 433cb3f801 de810814da bc2d586dcb 49a81f9f1a 78e99a997b fc67917a18
7ca832cac9 b296f2d4f3 ac796924df 3618d33039 c3c1bdf8e2 bd9c9fbfbe f941fc9917 e29fc44635
7b4e049eb0 4fbf8c815e 0244e2af4c 6e456b7a7a 3a17775454 40e28e8bf4 fc596c8625 48269070d2
c31736a4a4 7b43035bcb e45dae7dc0 d0032c6095 33abc79515 0d80fe9327 848c86ca0a 320506c75a
30fbd39f0c 62c2c547db 9e31c6a749 e3bf932404 dc966cc447 ac00dad756 072d75196c da4aebeda7
71289ba06e bfb4ddca35 c982fb8262 0417baf23d 9c82c32ba7 1a099e5e0e b09b152f77 a2117cb797
ee902ddf3a e1ef122260 4497e78d00 49718b4704 77aadfee6a 452339e20e 80898b5234 e5675fad5d
27359ae049 95a45f5b3a 646e16fe06 08c852290a 2b8bc91cf8 5b8ce1e7e6 05e265fbc8 694ad9849b
808b49a7dc 1c953bc3ea e007c797b1 44e64f9464 a677565f16 ff885b0e26 b4e6a7403d d182a6ad91
12da0fe10d cf6cd39572 eef2327a47 9c96682a51 1997b90838 b2274ece73 7dc71897b3 800b27703e
d76bc43720 de22d4cd5d 8c1f51978c dcb23b2d72 13a78b3cd3 fe7d136324 e660a05fed 5e6f500038
0ffda1dfcc 20c722c601 7cabc0cddc c2e48b23f8 ace07110c1 988369a01c 5a3467e623 e26782759c
1d2551d716 8007393614 cdf26c55f5 bed32182f6 cf3fdb8479 d2940c23fe 13f003c9bd a1e1806575
cc45831ec6 2d8d82f93e 71ecc7aed8 3f2d46a14e ebbba62c36 7b55d334d5 986cc9b2f4 c3cc8eb23c
926658665f acb2faaefa 4c16b3a5fd c5e54c200a 4bf6bea52a 7d4bafa8a4 57aba1ef50 71c6b36254
1112699149 52a9acfa8e
```

.github/ISSUE_TEMPLATE/bug-report.yml (37, vendored, Normal file)

```diff
@@ -0,0 +1,37 @@
+name: "\U0001F41B Bug Report"
+description: Report a bug on diffusers
+labels: [ "bug" ]
+body:
+  - type: markdown
+    attributes:
+      value: |
+        Thanks for taking the time to fill out this bug report!
+  - type: textarea
+    id: bug-description
+    attributes:
+      label: Describe the bug
+      description: A clear and concise description of what the bug is. If you intend to submit a pull request for this issue, tell us in the description. Thanks!
+      placeholder: Bug description
+    validations:
+      required: true
+  - type: textarea
+    id: reproduction
+    attributes:
+      label: Reproduction
+      description: Please provide a minimal reproducible code which we can copy/paste and reproduce the issue.
+      placeholder: Reproduction
+  - type: textarea
+    id: logs
+    attributes:
+      label: Logs
+      description: "Please include the Python logs if you can."
+      render: shell
+  - type: textarea
+    id: system-info
+    attributes:
+      label: System Info
+      description: Please share your system info with us,
+      render: shell
+      placeholder: diffusers version, Python Version, etc
+    validations:
+      required: true
```

.github/ISSUE_TEMPLATE/config.yml (7, vendored, Normal file)

```diff
@@ -0,0 +1,7 @@
+contact_links:
+  - name: Forum
+    url: https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63
+    about: General usage questions and community discussions
+  - name: Blank issue
+    url: https://github.com/huggingface/diffusers/issues/new
+    about: Please note that the Forum is in most places the right place for discussions
```

.github/ISSUE_TEMPLATE/feature_request.md (20, vendored, Normal file)

```diff
@@ -0,0 +1,20 @@
+---
+name: "\U0001F680 Feature request"
+about: Suggest an idea for this project
+title: ''
+labels: ''
+assignees: ''
+
+---
+
+**Is your feature request related to a problem? Please describe.**
+A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]
+
+**Describe the solution you'd like**
+A clear and concise description of what you want to happen.
+
+**Describe alternatives you've considered**
+A clear and concise description of any alternative solutions or features you've considered.
+
+**Additional context**
+Add any other context or screenshots about the feature request here.
```

.github/workflows/build_documentation.yml (17, vendored, Normal file)

```diff
@@ -0,0 +1,17 @@
+name: Build documentation
+
+on:
+  push:
+    branches:
+      - main
+      - doc-builder*
+      - v*-release
+
+jobs:
+  build:
+    uses: huggingface/doc-builder/.github/workflows/build_main_documentation.yml@main
+    with:
+      commit_sha: ${{ github.sha }}
+      package: diffusers
+    secrets:
+      token: ${{ secrets.HUGGINGFACE_PUSH }}
```

.github/workflows/build_pr_documentation.yml (16, vendored, Normal file)

```diff
@@ -0,0 +1,16 @@
+name: Build PR Documentation
+
+on:
+  pull_request:
+
+concurrency:
+  group: ${{ github.workflow }}-${{ github.head_ref || github.run_id }}
+  cancel-in-progress: true
+
+jobs:
+  build:
+    uses: huggingface/doc-builder/.github/workflows/build_pr_documentation.yml@main
+    with:
+      commit_sha: ${{ github.event.pull_request.head.sha }}
+      pr_number: ${{ github.event.number }}
+      package: diffusers
```

.github/workflows/delete_doc_comment.yml (13, vendored, Normal file)

```diff
@@ -0,0 +1,13 @@
+name: Delete dev documentation
+
+on:
+  pull_request:
+    types: [ closed ]
+
+
+jobs:
+  delete:
+    uses: huggingface/doc-builder/.github/workflows/delete_doc_comment.yml@main
+    with:
+      pr_number: ${{ github.event.number }}
+      package: diffusers
```

MANIFEST.in (1, Normal file)

```diff
@@ -0,0 +1 @@
+include src/diffusers/utils/model_card_template.md
```

Makefile (13)

```diff
@@ -34,30 +34,23 @@ autogenerate_code: deps_table_update
 # Check that the repo is in a good state
 
 repo-consistency:
-	python utils/check_copies.py
-	python utils/check_table.py
 	python utils/check_dummies.py
 	python utils/check_repo.py
 	python utils/check_inits.py
-	python utils/check_config_docstrings.py
-	python utils/tests_fetcher.py --sanity_check
 
 # this target runs checks on all files
 
 quality:
 	black --check --preview $(check_dirs)
 	isort --check-only $(check_dirs)
-	python utils/custom_init_isort.py --check_only
-	python utils/sort_auto_mappings.py --check_only
 	flake8 $(check_dirs)
-	doc-builder style src/transformers docs/source --max_len 119 --check_only --path_to_docs docs/source
+	doc-builder style src/diffusers docs/source --max_len 119 --check_only --path_to_docs docs/source
 
 # Format source code automatically and check is there are any problems left that need manual fixing
 
 extra_style_checks:
 	python utils/custom_init_isort.py
-	python utils/sort_auto_mappings.py
-	doc-builder style src/transformers docs/source --max_len 119 --path_to_docs docs/source
+	doc-builder style src/diffusers docs/source --max_len 119 --path_to_docs docs/source
 
 # this target runs checks on all files and potentially modifies some of them
 
@@ -74,8 +67,6 @@ fixup: modified_only_fixup extra_style_checks autogenerate_code repo-consistency
 # Make marked copies of snippets of codes conform to the original
 
 fix-copies:
-	python utils/check_copies.py --fix_and_overwrite
-	python utils/check_table.py --fix_and_overwrite
 	python utils/check_dummies.py --fix_and_overwrite
 
 # Run tests for the library
```

336

README.md

336

README.md

@@ -22,264 +22,140 @@ More precisely, 🤗 Diffusers offers:

|

||||

|

||||

- State-of-the-art diffusion pipelines that can be run in inference with just a couple of lines of code (see [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines)).

|

||||

- Various noise schedulers that can be used interchangeably for the prefered speed vs. quality trade-off in inference (see [src/diffusers/schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers)).

|

||||

- Multiple types of models, such as UNet, that can be used as building blocks in an end-to-end diffusion system (see [src/diffusers/models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models)).

|

||||

- Multiple types of models, such as UNet, can be used as building blocks in an end-to-end diffusion system (see [src/diffusers/models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models)).

|

||||

- Training examples to show how to train the most popular diffusion models (see [examples](https://github.com/huggingface/diffusers/tree/main/examples)).

|

||||

|

||||

## Quickstart

|

||||

|

||||

In order to get started, we recommend taking a look at two notebooks:

|

||||

|

||||

- The [Getting started with Diffusers](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/diffusers_intro.ipynb) notebook, which showcases an end-to-end example of usage for diffusion models, schedulers and pipelines.

|

||||

Take a look at this notebook to learn how to use the pipeline abstraction, which takes care of everything (model, scheduler, noise handling) for you, and also to understand each independent building block in the library.

|

||||

- The [Training a diffusers model](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/training_example.ipynb) notebook summarizes diffuser model training methods. This notebook takes a step-by-step approach to training your

|

||||

diffuser model on an image dataset, with explanatory graphics.

|

||||

|

||||

## Examples

|

||||

|

||||

If you want to run the code yourself 💻, you can try out:

|

||||

- [Text-to-Image Latent Diffusion](https://huggingface.co/CompVis/ldm-text2im-large-256)

|

||||

```python

|

||||

# !pip install diffusers transformers

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

model_id = "CompVis/ldm-text2im-large-256"

|

||||

|

||||

# load model and scheduler

|

||||

ldm = DiffusionPipeline.from_pretrained(model_id)

|

||||

|

||||

# run pipeline in inference (sample random noise and denoise)

|

||||

prompt = "A painting of a squirrel eating a burger"

|

||||

images = ldm([prompt], num_inference_steps=50, eta=0.3, guidance_scale=6)["sample"]

|

||||

|

||||

# save images

|

||||

for idx, image in enumerate(images):

|

||||

image.save(f"squirrel-{idx}.png")

|

||||

```

|

||||

- [Unconditional Diffusion with discrete scheduler](https://huggingface.co/google/ddpm-celebahq-256)

|

||||

```python

|

||||

# !pip install diffusers

|

||||

from diffusers import DDPMPipeline, DDIMPipeline, PNDMPipeline

|

||||

|

||||

model_id = "google/ddpm-celebahq-256"

|

||||

|

||||

# load model and scheduler

|

||||

ddpm = DDPMPipeline.from_pretrained(model_id) # you can replace DDPMPipeline with DDIMPipeline or PNDMPipeline for faster inference

|

||||

|

||||

# run pipeline in inference (sample random noise and denoise)

|

||||

image = ddpm()["sample"]

|

||||

|

||||

# save image

|

||||

image[0].save("ddpm_generated_image.png")

|

||||

```

|

||||

- [Unconditional Latent Diffusion](https://huggingface.co/CompVis/ldm-celebahq-256)

|

||||

- [Unconditional Diffusion with continous scheduler](https://huggingface.co/google/ncsnpp-ffhq-1024)

|

||||

|

||||

If you just want to play around with some web demos, you can try out the following 🚀 Spaces:

|

||||

| Model | Hugging Face Spaces |

|

||||

|-------------------------------- |------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| Text-to-Image Latent Diffusion | [](https://huggingface.co/spaces/CompVis/text2img-latent-diffusion) |

|

||||

| Faces generator | [](https://huggingface.co/spaces/CompVis/celeba-latent-diffusion) |

|

||||

| DDPM with different schedulers | [](https://huggingface.co/spaces/fusing/celeba-diffusion) |

|

||||

|

||||

## Definitions

|

||||

|

||||

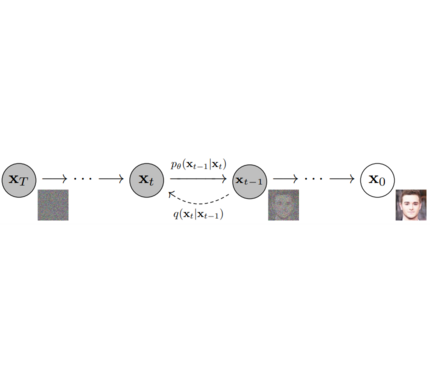

**Models**: Neural network that models **p_θ(x_t-1|x_t)** (see image below) and is trained end-to-end to *denoise* a noisy input to an image.

|

||||

**Models**: Neural network that models $p_\theta(\mathbf{x}_{t-1}|\mathbf{x}_t)$ (see image below) and is trained end-to-end to *denoise* a noisy input to an image.

|

||||

*Examples*: UNet, Conditioned UNet, 3D UNet, Transformer UNet

|

||||

|

||||

|

||||

|

||||

<p align="center">

|

||||

<img src="https://user-images.githubusercontent.com/10695622/174349667-04e9e485-793b-429a-affe-096e8199ad5b.png" width="800"/>

|

||||

<br>

|

||||

<em> Figure from DDPM paper (https://arxiv.org/abs/2006.11239). </em>

|

||||

<p>

|

||||

|

||||

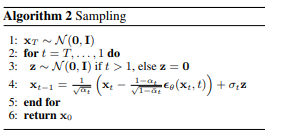

**Schedulers**: Algorithm class for both **inference** and **training**.

|

||||

The class provides functionality to compute previous image according to alpha, beta schedule as well as predict noise for training.

|

||||

*Examples*: [DDPM](https://arxiv.org/abs/2006.11239), [DDIM](https://arxiv.org/abs/2010.02502), [PNDM](https://arxiv.org/abs/2202.09778), [DEIS](https://arxiv.org/abs/2204.13902)

|

||||

|

||||

|

||||

|

||||

<p align="center">

|

||||

<img src="https://user-images.githubusercontent.com/10695622/174349706-53d58acc-a4d1-4cda-b3e8-432d9dc7ad38.png" width="800"/>

|

||||

<br>

|

||||

<em> Sampling and training algorithms. Figure from DDPM paper (https://arxiv.org/abs/2006.11239). </em>

|

||||

<p>

|

||||

|

||||

|

||||

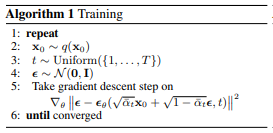

**Diffusion Pipeline**: End-to-end pipeline that includes multiple diffusion models, possible text encoders, ...

|

||||

*Examples*: GLIDE, Latent-Diffusion, Imagen, DALL-E 2

|

||||

|

||||

|

||||

|

||||

*Examples*: Glide, Latent-Diffusion, Imagen, DALL-E 2

|

||||

|

||||

<p align="center">

|

||||

<img src="https://user-images.githubusercontent.com/10695622/174348898-481bd7c2-5457-4830-89bc-f0907756f64c.jpeg" width="550"/>

|

||||

<br>

|

||||

<em> Figure from ImageGen (https://imagen.research.google/). </em>

|

||||

<p>

|

||||
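The Definitions in this README describe a scheduler as the component that computes the previous image from an alpha/beta schedule and supplies the noise target for training, while the model is trained to denoise. The following is a minimal NumPy sketch of those quantities, offered only as an illustration. It assumes the linear β schedule (1e-4 to 0.02 over 1000 steps) and the ε-prediction parameterization from the DDPM paper. Every function name is hypothetical, and none of this is the diffusers scheduler API.

```python
import numpy as np

# Hypothetical helpers (not the diffusers API): DDPM-style scheduler math in NumPy.


def linear_beta_schedule(num_train_timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule; returns betas, alphas and cumulative products alpha_bar."""
    betas = np.linspace(beta_start, beta_end, num_train_timesteps)
    alphas = 1.0 - betas
    alphas_cumprod = np.cumprod(alphas)
    return betas, alphas, alphas_cumprod


def add_noise(x0, noise, t, alphas_cumprod):
    """Forward process q(x_t | x_0) in closed form."""
    abar_t = alphas_cumprod[t]
    return np.sqrt(abar_t) * x0 + np.sqrt(1.0 - abar_t) * noise


def noise_prediction_loss(eps_model, x0, noise, t, alphas_cumprod):
    """Simplified training objective: MSE between the true and the predicted noise."""
    x_t = add_noise(x0, noise, t, alphas_cumprod)
    return float(np.mean((eps_model(x_t, t) - noise) ** 2))


def ddpm_step_mean(x_t, eps_pred, t, betas, alphas, alphas_cumprod):
    """Mean of p_theta(x_{t-1} | x_t) computed from the predicted noise."""
    coef = betas[t] / np.sqrt(1.0 - alphas_cumprod[t])
    return (x_t - coef * eps_pred) / np.sqrt(alphas[t])


def ddpm_variance(t, betas, alphas_cumprod):
    """Posterior variance beta_tilde_t (the 'fixed small' choice); zero at t = 0."""
    if t == 0:
        return 0.0
    return betas[t] * (1.0 - alphas_cumprod[t - 1]) / (1.0 - alphas_cumprod[t])


def ddpm_step(x_t, eps_pred, t, betas, alphas, alphas_cumprod, rng):
    """One reverse step x_t -> x_{t-1}: predicted mean plus scaled Gaussian noise."""
    mean = ddpm_step_mean(x_t, eps_pred, t, betas, alphas, alphas_cumprod)
    std = np.sqrt(ddpm_variance(t, betas, alphas_cumprod))
    return mean + std * rng.standard_normal(x_t.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    betas, alphas, abar = linear_beta_schedule()
    x0 = rng.standard_normal((2, 2))
    noise = rng.standard_normal((2, 2))
    t = 500
    x_t = add_noise(x0, noise, t, abar)
    # Pretend the model predicted the noise perfectly, then take one reverse step.
    x_prev = ddpm_step(x_t, noise, t, betas, alphas, abar, rng)
    print(x_t.shape, x_prev.shape)
```

The split mirrors the README's separation of concerns. The schedule and step arithmetic need no learned weights, and the model only has to map `(x_t, t)` to a noise estimate.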

README.md (continued)

````
## Philosophy

- Readability and clarity is prefered over highly optimized code. A strong importance is put on providing readable, intuitive and elementary code desgin. *E.g.*, the provided [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers) are separated from the provided [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models) and provide well-commented code that can be read alongside the original paper.
- Diffusers is **modality independent** and focusses on providing pretrained models and tools to build systems that generate **continous outputs**, *e.g.* vision and audio.
- Diffusion models and schedulers are provided as consise, elementary building blocks whereas diffusion pipelines are a collection of end-to-end diffusion systems that can be used out-of-the-box, should stay as close as possible to their original implementation and can include components of other library, such as text-encoders. Examples for diffusion pipelines are [Glide](https://github.com/openai/glide-text2im) and [Latent Diffusion](https://github.com/CompVis/latent-diffusion).
- Readability and clarity is prefered over highly optimized code. A strong importance is put on providing readable, intuitive and elementary code design. *E.g.*, the provided [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers) are separated from the provided [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models) and provide well-commented code that can be read alongside the original paper.
- Diffusers is **modality independent** and focuses on providing pretrained models and tools to build systems that generate **continous outputs**, *e.g.* vision and audio.
- Diffusion models and schedulers are provided as concise, elementary building blocks. In contrast, diffusion pipelines are a collection of end-to-end diffusion systems that can be used out-of-the-box, should stay as close as possible to their original implementation and can include components of another library, such as text-encoders. Examples for diffusion pipelines are [Glide](https://github.com/openai/glide-text2im) and [Latent Diffusion](https://github.com/CompVis/latent-diffusion).

## Quickstart
## Installation

### Installation

**Note**: If you want to run PyTorch on GPU on a CUDA-compatible machine, please make sure to install the corresponding `torch` version from the
[official website](https://pytorch.org/).
```
git clone https://github.com/huggingface/diffusers.git
cd diffusers && pip install -e .
**With `pip`**

```bash
pip install --upgrade diffusers # should install diffusers 0.2.1
```

### 1. `diffusers` as a toolbox for schedulers and models.
**With `conda`**

`diffusers` is more modularized than `transformers`. The idea is that researchers and engineers can use only parts of the library easily for the own use cases.
It could become a central place for all kinds of models, schedulers, training utils and processors that one can mix and match for one's own use case.
Both models and schedulers should be load- and saveable from the Hub.

For more examples see [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers) and [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models)

#### **Example for [DDPM](https://arxiv.org/abs/2006.11239):**

```python
import torch
from diffusers import UNetModel, DDPMScheduler
import PIL
import numpy as np
import tqdm

generator = torch.manual_seed(0)
torch_device = "cuda" if torch.cuda.is_available() else "cpu"

# 1. Load models
noise_scheduler = DDPMScheduler.from_config("fusing/ddpm-lsun-church", tensor_format="pt")
unet = UNetModel.from_pretrained("fusing/ddpm-lsun-church").to(torch_device)

# 2. Sample gaussian noise
image = torch.randn(
    (1, unet.in_channels, unet.resolution, unet.resolution),
    generator=generator,
)
image = image.to(torch_device)

# 3. Denoise
num_prediction_steps = len(noise_scheduler)
for t in tqdm.tqdm(reversed(range(num_prediction_steps)), total=num_prediction_steps):
    # predict noise residual
    with torch.no_grad():
        residual = unet(image, t)

    # predict previous mean of image x_t-1
    pred_prev_image = noise_scheduler.step(residual, image, t)

    # optionally sample variance
    variance = 0
    if t > 0:
        noise = torch.randn(image.shape, generator=generator).to(image.device)
        variance = noise_scheduler.get_variance(t).sqrt() * noise

    # set current image to prev_image: x_t -> x_t-1
    image = pred_prev_image + variance

# 5. process image to PIL
image_processed = image.cpu().permute(0, 2, 3, 1)
image_processed = (image_processed + 1.0) * 127.5
image_processed = image_processed.numpy().astype(np.uint8)
image_pil = PIL.Image.fromarray(image_processed[0])

# 6. save image
image_pil.save("test.png")
```sh
conda install -c conda-forge diffusers
```

#### **Example for [DDIM](https://arxiv.org/abs/2010.02502):**
## In the works

```python
import torch
from diffusers import UNetModel, DDIMScheduler
import PIL
import numpy as np
import tqdm
For the first release, 🤗 Diffusers focuses on text-to-image diffusion techniques. However, diffusers can be used for much more than that! Over the upcoming releases, we'll be focusing on:

generator = torch.manual_seed(0)
torch_device = "cuda" if torch.cuda.is_available() else "cpu"
- Diffusers for audio
- Diffusers for reinforcement learning (initial work happening in https://github.com/huggingface/diffusers/pull/105).
- Diffusers for video generation
- Diffusers for molecule generation (initial work happening in https://github.com/huggingface/diffusers/pull/54)

# 1. Load models
noise_scheduler = DDIMScheduler.from_config("fusing/ddpm-celeba-hq", tensor_format="pt")
unet = UNetModel.from_pretrained("fusing/ddpm-celeba-hq").to(torch_device)
A few pipeline components are already being worked on, namely:

# 2. Sample gaussian noise
image = torch.randn(
    (1, unet.in_channels, unet.resolution, unet.resolution),
    generator=generator,
)
image = image.to(torch_device)
- BDDMPipeline for spectrogram-to-sound vocoding
- GLIDEPipeline to support OpenAI's GLIDE model
- Grad-TTS for text to audio generation / conditional audio generation

# 3. Denoise
num_inference_steps = 50
eta = 0.0 # <- deterministic sampling
We want diffusers to be a toolbox useful for diffusers models in general; if you find yourself limited in any way by the current API, or would like to see additional models, schedulers, or techniques, please open a [GitHub issue](https://github.com/huggingface/diffusers/issues) mentioning what you would like to see.

for t in tqdm.tqdm(reversed(range(num_inference_steps)), total=num_inference_steps):
    # 1. predict noise residual
    orig_t = noise_scheduler.get_orig_t(t, num_inference_steps)
    with torch.no_grad():
        residual = unet(image, orig_t)
## Credits

    # 2. predict previous mean of image x_t-1
    pred_prev_image = noise_scheduler.step(residual, image, t, num_inference_steps, eta)
This library concretizes previous work by many different authors and would not have been possible without their great research and implementations. We'd like to thank, in particular, the following implementations which have helped us in our development and without which the API could not have been as polished today:

    # 3. optionally sample variance
    variance = 0
    if eta > 0:
        noise = torch.randn(image.shape, generator=generator).to(image.device)
        variance = noise_scheduler.get_variance(t).sqrt() * eta * noise
- @CompVis' latent diffusion models library, available [here](https://github.com/CompVis/latent-diffusion)
- @hojonathanho original DDPM implementation, available [here](https://github.com/hojonathanho/diffusion) as well as the extremely useful translation into PyTorch by @pesser, available [here](https://github.com/pesser/pytorch_diffusion)
- @ermongroup's DDIM implementation, available [here](https://github.com/ermongroup/ddim).
- @yang-song's Score-VE and Score-VP implementations, available [here](https://github.com/yang-song/score_sde_pytorch)

    # 4. set current image to prev_image: x_t -> x_t-1
    image = pred_prev_image + variance

# 5. process image to PIL
image_processed = image.cpu().permute(0, 2, 3, 1)
image_processed = (image_processed + 1.0) * 127.5
image_processed = image_processed.numpy().astype(np.uint8)
image_pil = PIL.Image.fromarray(image_processed[0])

# 6. save image
image_pil.save("test.png")
```

### 2. `diffusers` as a collection of popula Diffusion systems (GLIDE, Dalle, ...)

For more examples see [pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines).

#### **Example image generation with PNDM**

```python
from diffusers import PNDM, UNetModel, PNDMScheduler
import PIL.Image
import numpy as np
import torch

model_id = "fusing/ddim-celeba-hq"

model = UNetModel.from_pretrained(model_id)
scheduler = PNDMScheduler()

# load model and scheduler
````

|

||||

pndm = PNDM(unet=model, noise_scheduler=scheduler)

|

||||

|

||||

# run pipeline in inference (sample random noise and denoise)

|

||||

with torch.no_grad():

|

||||

image = pndm()

|

||||

|

||||

# process image to PIL

|

||||

image_processed = image.cpu().permute(0, 2, 3, 1)

|

||||

image_processed = (image_processed + 1.0) / 2

|

||||

image_processed = torch.clamp(image_processed, 0.0, 1.0)

|

||||

image_processed = image_processed * 255

|

||||

image_processed = image_processed.numpy().astype(np.uint8)

|

||||

image_pil = PIL.Image.fromarray(image_processed[0])

|

||||

|

||||

# save image

|

||||

image_pil.save("test.png")

|

||||

```

|

||||

|

||||

#### **Text to Image generation with Latent Diffusion**

|

||||

|

||||

_Note: To use latent diffusion install transformers from [this branch](https://github.com/patil-suraj/transformers/tree/ldm-bert)._

|

||||

|

||||

```python

|

||||

from diffusers import DiffusionPipeline

|

||||

|

||||

ldm = DiffusionPipeline.from_pretrained("fusing/latent-diffusion-text2im-large")

|

||||

|

||||

generator = torch.manual_seed(42)

|

||||

|

||||

prompt = "A painting of a squirrel eating a burger"

|

||||

image = ldm([prompt], generator=generator, eta=0.3, guidance_scale=6.0, num_inference_steps=50)

|

||||

|

||||

image_processed = image.cpu().permute(0, 2, 3, 1)

|

||||

image_processed = image_processed * 255.

|

||||

image_processed = image_processed.numpy().astype(np.uint8)

|

||||

image_pil = PIL.Image.fromarray(image_processed[0])

|

||||

|

||||

# save image

|

||||

image_pil.save("test.png")

|

||||

```

|

||||

|

||||

#### **Text to speech with BDDM**

|

||||

|

||||

_Follow the isnstructions [here](https://pytorch.org/hub/nvidia_deeplearningexamples_tacotron2/) to load tacotron2 model._

|

||||

|

||||

```python

|

||||

import torch

|

||||

from diffusers import BDDM, DiffusionPipeline

|

||||

|

||||

torch_device = "cuda"

|

||||

|

||||

# load the BDDM pipeline

|

||||

bddm = DiffusionPipeline.from_pretrained("fusing/diffwave-vocoder-ljspeech")

|

||||

|

||||

# load tacotron2 to get the mel spectograms

|

||||

tacotron2 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tacotron2', model_math='fp16')

|

||||

tacotron2 = tacotron2.to(torch_device).eval()

|

||||

|

||||

text = "Hello world, I missed you so much."

|

||||

|

||||

utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tts_utils')

|

||||

sequences, lengths = utils.prepare_input_sequence([text])

|

||||

|

||||

# generate mel spectograms using text

|

||||

with torch.no_grad():

|

||||

mel_spec, _, _ = tacotron2.infer(sequences, lengths)

|

||||

|

||||

# generate the speech by passing mel spectograms to BDDM pipeline

|

||||

generator = torch.manual_seed(0)

|

||||

audio = bddm(mel_spec, generator, torch_device)

|

||||

|

||||

# save generated audio

|

||||

from scipy.io.wavfile import write as wavwrite

|

||||

sampling_rate = 22050

|

||||

wavwrite("generated_audio.wav", sampling_rate, audio.squeeze().cpu().numpy())

|

||||

```

|

||||

|

||||

## TODO

|

||||

|

||||

- Create common API for models [ ]

|

||||

- Add tests for models [ ]

|

||||

- Adapt schedulers for training [ ]

|

||||

- Write google colab for training [ ]

|

||||

- Write docs / Think about how to structure docs [ ]

|

||||

- Add tests to circle ci [ ]

|

||||

- Add more vision models [ ]

|

||||

- Add more speech models [ ]

|

||||

- Add RL model [ ]

|

||||

We also want to thank @heejkoo for the very helpful overview of papers, code and resources on diffusion models, available [here](https://github.com/heejkoo/Awesome-Diffusion-Models) as well as @crowsonkb and @rromb for useful discussions and insights.

|

||||

docs/source/_toctree.yml

@@ -0,0 +1,40 @@

- sections:
  - local: index
    title: 🧨 Diffusers
  - local: quicktour
    title: Quicktour
  - local: philosophy
    title: Philosophy
  title: Get started
- sections:
  - sections:
    - local: examples/diffusers_for_vision
      title: Diffusers for Vision
    - local: examples/diffusers_for_audio
      title: Diffusers for Audio
    - local: examples/diffusers_for_other
      title: Diffusers for Other Modalities
    title: Examples
  title: Using Diffusers
- sections:
  - sections:
    - local: pipelines
      title: Pipelines
    - local: schedulers
      title: Schedulers
    - local: models
      title: Models
    title: Main Classes
  - sections:
    - local: pipelines/glide
      title: "Glide"
    title: Pipelines
  - sections:
    - local: schedulers/ddpm
      title: "DDPM"
    title: Schedulers
  - sections:
    - local: models/unet
      title: "Unet"
    title: Models
  title: API

docs/source/examples/diffusers_for_audio.mdx

@@ -0,0 +1,13 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Diffusers for audio

docs/source/examples/diffusers_for_other.mdx

@@ -0,0 +1,20 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Diffusers for other modalities

Diffusers offers support for modalities other than vision and audio.
Currently, some examples include:
- [Diffuser](https://diffusion-planning.github.io/) for planning in reinforcement learning (currently inference only): [Open in Colab](https://colab.research.google.com/drive/1TmBmlYeKUZSkUZoJqfBmaicVTKx6nN1R?usp=sharing)

If you are interested in contributing to under-construction examples, you can explore:
- [GeoDiff](https://github.com/MinkaiXu/GeoDiff) for generating 3D molecular conformations: [Open in Colab](https://colab.research.google.com/drive/1pLYYWQhdLuv1q-JtEHGZybxp2RBF8gPs?usp=sharing).

docs/source/examples/diffusers_for_vision.mdx

@@ -0,0 +1,150 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Diffusers for vision

## Direct image generation

#### **Example image generation with PNDM**

```python
from diffusers import PNDM, UNetModel, PNDMScheduler
import PIL.Image
import numpy as np
import torch

model_id = "fusing/ddim-celeba-hq"

# load model and scheduler
model = UNetModel.from_pretrained(model_id)
scheduler = PNDMScheduler()

# build the PNDM pipeline from the model and scheduler
pndm = PNDM(unet=model, noise_scheduler=scheduler)

# run pipeline in inference (sample random noise and denoise)
with torch.no_grad():
    image = pndm()

# process image to PIL
image_processed = image.cpu().permute(0, 2, 3, 1)
image_processed = (image_processed + 1.0) / 2
image_processed = torch.clamp(image_processed, 0.0, 1.0)
image_processed = image_processed * 255
image_processed = image_processed.numpy().astype(np.uint8)
image_pil = PIL.Image.fromarray(image_processed[0])

# save image
image_pil.save("test.png")
```
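
The post-processing steps above map a batch of samples in the range [-1, 1], laid out as (batch, channels, height, width), to 8-bit pixels that `PIL.Image.fromarray` can consume. The same conversion can be sketched with NumPy alone; `to_uint8_images` is a hypothetical helper, not part of 🤗 Diffusers, and it assumes the pipeline output has already been converted to a NumPy array on the CPU.

```python
import numpy as np


def to_uint8_images(samples: np.ndarray) -> np.ndarray:
    """Convert an NCHW batch in [-1, 1] to an NHWC uint8 batch in [0, 255].

    Hypothetical helper mirroring the PNDM post-processing steps.
    """
    # NCHW -> NHWC, the layout expected by PIL.Image.fromarray
    images = np.transpose(samples, (0, 2, 3, 1))
    # map [-1, 1] -> [0, 1]
    images = (images + 1.0) / 2.0
    # clip values that overshoot the expected range before quantizing
    images = np.clip(images, 0.0, 1.0)
    # scale to [0, 255] and truncate to 8-bit integers
    return (images * 255).astype(np.uint8)


# usage: a fake batch holding one 3-channel 2x2 "image"
fake = np.array([[[[-1.0, 0.0], [1.0, 2.0]]] * 3])  # shape (1, 3, 2, 2)
pixels = to_uint8_images(fake)
print(pixels.shape, pixels[0, :, :, 0].tolist())
```

Clipping before the cast matters: values slightly outside [-1, 1] would otherwise overflow when cast to `uint8`.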

#### **Example 1024x1024 image generation with SDE VE**

See the [paper](https://arxiv.org/abs/2011.13456) for more information on SDE VE.

```python
from diffusers import DiffusionPipeline
import torch
import PIL.Image
import numpy as np

torch.manual_seed(32)

score_sde_ve = DiffusionPipeline.from_pretrained("fusing/ffhq_ncsnpp")

# Note: this might take up to 3 minutes on a GPU
image = score_sde_ve(num_inference_steps=2000)

image = image.permute(0, 2, 3, 1).cpu().numpy()
image = np.clip(image * 255, 0, 255).astype(np.uint8)
image_pil = PIL.Image.fromarray(image[0])

# save image
image_pil.save("test.png")
```
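
For intuition about the process that the SDE VE pipeline reverses: in the paper's variance-exploding (VE) formulation, clean data $x_0$ is perturbed as $x_t = x_0 + \sigma(t)\,z$ with $z \sim \mathcal{N}(0, I)$, where $\sigma(t) = \sigma_{\min}(\sigma_{\max}/\sigma_{\min})^t$ grows geometrically for $t \in [0, 1]$. The exact kernel variance is $\sigma(t)^2 - \sigma(0)^2$; dropping the small $\sigma_{\min}^2$ term, as below, is a common simplification. The NumPy sketch samples that forward perturbation; the function names and the defaults `sigma_min=0.01`, `sigma_max=50.0` are illustrative assumptions, not values read from the `fusing/ffhq_ncsnpp` checkpoint.

```python
import numpy as np


def ve_sigma(t, sigma_min=0.01, sigma_max=50.0):
    """Geometric noise level sigma(t) = sigma_min * (sigma_max / sigma_min) ** t, t in [0, 1]."""
    return sigma_min * (sigma_max / sigma_min) ** t


def ve_perturb(x0, t, rng, sigma_min=0.01, sigma_max=50.0):
    """Sample x_t ~ N(x0, sigma(t)^2 I): the mean stays x0 while the noise level 'explodes'."""
    noise = rng.standard_normal(x0.shape)
    return x0 + ve_sigma(t, sigma_min, sigma_max) * noise


rng = np.random.default_rng(0)
x0 = np.zeros((4, 4))
for t in (0.0, 0.5, 1.0):
    x_t = ve_perturb(x0, t, rng)
    print(f"t={t:.1f}  sigma={ve_sigma(t):.3f}  empirical std={x_t.std():.3f}")
```

Sampling runs this process backwards, starting from noise at $\sigma_{\max}$, which is one reason VE models need many steps (2000 in the example above).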

#### **Example 32x32 image generation with SDE VP**

See the [paper](https://arxiv.org/abs/2011.13456) for more information on SDE VP.

```python
from diffusers import DiffusionPipeline
import torch
import PIL.Image
import numpy as np

torch.manual_seed(32)

score_sde_vp = DiffusionPipeline.from_pretrained("fusing/cifar10-ddpmpp-deep-vp")

# Note: this might take up to 3 minutes on a GPU
image = score_sde_vp(num_inference_steps=1000)

image = image.permute(0, 2, 3, 1).cpu().numpy()
image = np.clip(image * 255, 0, 255).astype(np.uint8)
image_pil = PIL.Image.fromarray(image[0])

# save image
image_pil.save("test.png")
```
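
The variance-preserving (VP) counterpart perturbs data as $x_t = \sqrt{\bar\alpha(t)}\,x_0 + \sqrt{1 - \bar\alpha(t)}\,z$ with $\bar\alpha(t) = \exp\big(-\int_0^t \beta(s)\,ds\big)$, so inputs with unit variance keep unit variance at every $t$. For a linear schedule $\beta(s) = \beta_{\min} + s(\beta_{\max} - \beta_{\min})$ the integral has the closed form $\beta_{\min} t + \tfrac{1}{2}(\beta_{\max} - \beta_{\min}) t^2$. A NumPy sketch follows; the function names are hypothetical, and the defaults `beta_min=0.1`, `beta_max=20.0` are assumed (values commonly used for VP models in the paper's setup), not read from the `fusing/cifar10-ddpmpp-deep-vp` checkpoint.

```python
import numpy as np


def vp_log_mean_coeff(t, beta_min=0.1, beta_max=20.0):
    """log sqrt(alpha_bar(t)) = -1/2 * integral_0^t beta(s) ds for a linear beta schedule."""
    return -0.25 * t**2 * (beta_max - beta_min) - 0.5 * t * beta_min


def vp_perturb(x0, t, rng, beta_min=0.1, beta_max=20.0):
    """Sample x_t ~ N(sqrt(alpha_bar) * x0, (1 - alpha_bar) I)."""
    mean_coeff = np.exp(vp_log_mean_coeff(t, beta_min, beta_max))  # sqrt(alpha_bar)
    std = np.sqrt(1.0 - mean_coeff**2)                               # sqrt(1 - alpha_bar)
    noise = rng.standard_normal(x0.shape)
    return mean_coeff * x0 + std * noise


rng = np.random.default_rng(0)
x0 = rng.standard_normal(10_000)  # unit-variance "data"
for t in (0.0, 0.5, 1.0):
    print(f"t={t:.1f}  variance={vp_perturb(x0, t, rng).var():.3f}")
```

Unlike the VE case, the signal is scaled down while noise is added, so the total variance stays close to 1 throughout.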

#### **Text to Image generation with Latent Diffusion**

_Note: To use latent diffusion, install transformers from [this branch](https://github.com/patil-suraj/transformers/tree/ldm-bert)._

```python
import numpy as np
import PIL.Image
import torch
from diffusers import DiffusionPipeline

ldm = DiffusionPipeline.from_pretrained("fusing/latent-diffusion-text2im-large")

generator = torch.manual_seed(42)

prompt = "A painting of a squirrel eating a burger"
image = ldm([prompt], generator=generator, eta=0.3, guidance_scale=6.0, num_inference_steps=50)

image_processed = image.cpu().permute(0, 2, 3, 1)
image_processed = image_processed * 255.0
image_processed = image_processed.numpy().astype(np.uint8)
image_pil = PIL.Image.fromarray(image_processed[0])

# save image
image_pil.save("test.png")
```
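
The `guidance_scale=6.0` argument above controls how strongly the prompt steers sampling. Assuming the pipeline uses classifier-free guidance (the standard technique behind such a parameter; the internals of this pipeline are not shown here), each denoising step combines an unconditional and a text-conditioned noise prediction as $\hat\epsilon = \epsilon_{\text{uncond}} + s\,(\epsilon_{\text{text}} - \epsilon_{\text{uncond}})$. A NumPy sketch of only that combination step, with a hypothetical function name:

```python
import numpy as np


def classifier_free_guidance(noise_uncond, noise_text, guidance_scale):
    """Extrapolate from the unconditional prediction toward (and past) the text-conditioned one."""
    return noise_uncond + guidance_scale * (noise_text - noise_uncond)


eps_uncond = np.array([0.0, 1.0])  # prediction with an empty prompt
eps_text = np.array([1.0, 1.0])    # prediction with the real prompt
print(classifier_free_guidance(eps_uncond, eps_text, 6.0))
```

A scale of 1.0 reproduces the text-conditioned prediction and 0.0 the unconditional one; larger values typically trade sample diversity for closer adherence to the prompt.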

## Text to speech generation

```python
import torch
from diffusers import BDDMPipeline, GradTTSPipeline
from scipy.io.wavfile import write as wavwrite

torch_device = "cuda"

# load Grad-TTS and BDDM pipelines
grad_tts = GradTTSPipeline.from_pretrained("fusing/grad-tts-libri-tts")
bddm = BDDMPipeline.from_pretrained("fusing/diffwave-vocoder-ljspeech")

text = "Hello world, I missed you so much."

# generate mel spectrograms from text
mel_spec = grad_tts(text, torch_device=torch_device)

# generate the speech by passing the mel spectrograms to the BDDM pipeline
generator = torch.manual_seed(42)
audio = bddm(mel_spec, generator, torch_device=torch_device)

# save generated audio
sampling_rate = 22050
wavwrite("generated_audio.wav", sampling_rate, audio.squeeze().cpu().numpy())
```

docs/source/index.mdx

@@ -0,0 +1,110 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

<p align="center">
<br>
<img src="https://raw.githubusercontent.com/huggingface/diffusers/77aadfee6a891ab9fcfb780f87c693f7a5beeb8e/docs/source/imgs/diffusers_library.jpg" width="400"/>
<br>
</p>

# 🧨 Diffusers

🤗 Diffusers provides pretrained diffusion models across multiple modalities, such as vision and audio, and serves
as a modular toolbox for inference and training of diffusion models.

More precisely, 🤗 Diffusers offers:

- State-of-the-art diffusion pipelines that can be run in inference with just a couple of lines of code (see [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines)).
- Various noise schedulers that can be used interchangeably to choose the preferred speed vs. quality trade-off in inference (see [src/diffusers/schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers)).
- Multiple types of models, such as UNet, that can be used as building blocks in an end-to-end diffusion system (see [src/diffusers/models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models)).
- Training examples to show how to train the most popular diffusion models (see [examples](https://github.com/huggingface/diffusers/tree/main/examples)).
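
To make the speed vs. quality trade-off of interchangeable schedulers concrete: a model trained with, say, 1000 diffusion steps can be sampled with fewer inference steps by visiting only an evenly strided subset of the training timesteps, which is the idea behind DDIM-style schedulers. The helper below is a hypothetical illustration of such a schedule, not the 🤗 Diffusers scheduler API.

```python
from typing import List


def strided_timesteps(num_train_timesteps: int, num_inference_steps: int) -> List[int]:
    """Return a descending, evenly strided subset of the training timesteps."""
    if not 0 < num_inference_steps <= num_train_timesteps:
        raise ValueError("num_inference_steps must be in [1, num_train_timesteps]")
    step = num_train_timesteps // num_inference_steps
    # e.g. 1000 training steps, 50 inference steps -> 980, 960, ..., 20, 0
    return list(range(0, num_train_timesteps, step))[:num_inference_steps][::-1]


full = strided_timesteps(1000, 1000)  # one model call per training step
fast = strided_timesteps(1000, 50)    # 20x fewer model calls
print(len(full), len(fast), fast[:3], fast[-1])
```

Each timestep costs one forward pass of the model, so the 50-step schedule is roughly 20 times cheaper; how much quality it gives up depends on the scheduler's update rule.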

# Installation

Install 🤗 Diffusers for use with PyTorch. Support for other libraries will come in the future.

🤗 Diffusers is tested on Python 3.6+ and PyTorch 1.4.0+.

## Install with pip

You should install 🤗 Diffusers in a [virtual environment](https://docs.python.org/3/library/venv.html).
If you're unfamiliar with Python virtual environments, take a look at this [guide](https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/).
A virtual environment makes it easier to manage different projects and avoid compatibility issues between dependencies.

Start by creating a virtual environment in your project directory:

```bash
python -m venv .env
```

Activate the virtual environment:

```bash
source .env/bin/activate
```

Now you're ready to install 🤗 Diffusers with the following command:

```bash
pip install diffusers
```

## Install from source

Install 🤗 Diffusers from source with the following command:

```bash
pip install git+https://github.com/huggingface/diffusers
```

This command installs the bleeding edge `main` version rather than the latest `stable` version.
The `main` version is useful for staying up-to-date with the latest developments, for instance, if a bug has been fixed since the last official release but a new release hasn't been rolled out yet.
However, this means the `main` version may not always be stable.
We strive to keep the `main` version operational, and most issues are usually resolved within a few hours or a day.
If you run into a problem, please open an [Issue](https://github.com/huggingface/diffusers/issues) so we can fix it even sooner!

## Editable install

You will need an editable install if you'd like to:

* Use the `main` version of the source code.
* Contribute to 🤗 Diffusers and need to test changes in the code.

Clone the repository and install 🤗 Diffusers with the following commands:

```bash
git clone https://github.com/huggingface/diffusers.git
cd diffusers
pip install -e .
```

These commands will link the folder you cloned the repository to and your Python library paths.
Python will now look inside the folder you cloned to in addition to the normal library paths.

For example, if your Python packages are typically installed in `~/anaconda3/envs/main/lib/python3.7/site-packages/`, Python will also search the folder you cloned to: `~/diffusers/`.
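
To confirm which copy of the package Python actually resolves (for example, that `diffusers` now points at your clone rather than a `site-packages` install), you can query the import system without importing the package. A minimal sketch using the standard library's `importlib.util.find_spec`; the helper name `package_location` is hypothetical:

```python
import importlib.util
from typing import Optional


def package_location(name: str) -> Optional[str]:
    """Return the file Python would load for top-level package `name`, or None if it is not found."""
    spec = importlib.util.find_spec(name)
    if spec is None:
        return None
    return spec.origin


# With an editable install this should point inside your clone,
# e.g. a path under ~/diffusers/ rather than under site-packages.
print(package_location("diffusers"))
```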

<Tip warning={true}>

You must keep the `diffusers` folder if you want to keep using the library.

</Tip>

Now you can easily update your clone to the latest version of 🤗 Diffusers with the following command:

```bash
cd ~/diffusers/
git pull
```

Your Python environment will find the `main` version of 🤗 Diffusers on the next run.

docs/source/models.mdx

@@ -0,0 +1,28 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Models

Diffusers contains pretrained models for popular algorithms and modules for creating the next set of diffusion models.
The primary function of these models is to denoise an input sample by modeling the distribution $p_\theta(\mathbf{x}_{t-1}|\mathbf{x}_t)$.
The models are built on the base class [`ModelMixin`], which is a `torch.nn.Module` with basic functionality for saving and loading models both locally and from the Hugging Face Hub.

## API

Models should provide the `forward` method and the initialization of the model.
All saving, loading, and utilities should be in the base [`ModelMixin`] class.

## Examples

- The [`UNetModel`] was proposed in [TODO](https://arxiv.org/) and has been used in paper1, paper2, paper3.
- Extensions of the [`UNetModel`] include the [`UNetGlideModel`] that uses attention and timestep embeddings for the [GLIDE](https://arxiv.org/abs/2112.10741) paper, the [`UNetGradTTS`] model from this [paper](https://arxiv.org/abs/2105.06337) for text-to-speech, [`UNetLDMModel`] for latent-diffusion models in this [paper](https://arxiv.org/abs/2112.10752), and the [`TemporalUNet`] used for time-series prediction in this reinforcement learning [paper](https://arxiv.org/abs/2205.09991).
- TODO: mention VAE / SDE score estimation
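
The division of labour described under API (a subclass defines the architecture in its initializer and the computation in `forward`, while a shared base class owns saving and loading) can be sketched without a deep-learning framework. Everything below is hypothetical: `ToyModelMixin`, its `save_pretrained`/`from_pretrained` as written here, and `ToyDenoiser` illustrate the pattern in NumPy and are not the actual [`ModelMixin`] implementation.

```python
import json
import os
import tempfile

import numpy as np


class ToyModelMixin:
    """Hypothetical base class: owns (de)serialization so subclasses only define the model."""

    config_name = "config.json"
    weights_name = "weights.npz"

    def save_pretrained(self, directory):
        os.makedirs(directory, exist_ok=True)
        with open(os.path.join(directory, self.config_name), "w") as f:
            json.dump(self.config, f)
        np.savez(os.path.join(directory, self.weights_name), **self.params)

    @classmethod
    def from_pretrained(cls, directory):
        # rebuild the architecture from the config, then restore the weights
        with open(os.path.join(directory, cls.config_name)) as f:
            model = cls(**json.load(f))
        with np.load(os.path.join(directory, cls.weights_name)) as weights:
            model.params = {name: weights[name] for name in weights.files}
        return model


class ToyDenoiser(ToyModelMixin):
    """Hypothetical model: only the initializer (architecture) and forward (computation)."""

    def __init__(self, channels=3, seed=0):
        self.config = {"channels": channels, "seed": seed}
        rng = np.random.default_rng(seed)
        self.params = {"weight": rng.standard_normal((channels, channels)) * 0.1}

    def forward(self, sample, timestep):
        # predict a residual with the same NCHW shape as `sample` by mixing channels;
        # a real denoiser would also condition on `timestep`, which this toy ignores
        return np.einsum("oc,nchw->nohw", self.params["weight"], sample)


model = ToyDenoiser(channels=3)
x = np.ones((1, 3, 4, 4))
with tempfile.TemporaryDirectory() as tmp:
    model.save_pretrained(tmp)
    reloaded = ToyDenoiser.from_pretrained(tmp)
print(np.allclose(model.forward(x, 10), reloaded.forward(x, 10)))
```

Keeping persistence in the base class means a new architecture only has to describe itself, which is the separation the API section asks for.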

docs/source/models/unet.mdx

@@ -0,0 +1,4 @@

# UNet

The UNet is a model architecture often used in diffusion models.
It was originally published [here](https://www.google.com).

docs/source/philosophy.mdx

@@ -0,0 +1,17 @@

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Philosophy

- Readability and clarity are preferred over highly optimized code. Strong emphasis is placed on providing readable, intuitive, and elementary code design. *E.g.*, the provided [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers) are separated from the provided [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models) and provide well-commented code that can be read alongside the original paper.
- Diffusers is **modality independent** and focuses on providing pretrained models and tools to build systems that generate **continuous outputs**, *e.g.* vision and audio.