mirror of

https://github.com/huggingface/diffusers.git

synced 2026-02-07 03:15:16 +08:00

Compare commits

12 Commits

test-clean

...

fix-test

| Author | SHA1 | Date |
|---|---|---|
| | 7eb2d2208e | |
| | 7aa4514260 | |
| | d97bca56ab | |
| | c2e87869be | |
| | ca61287daa | |
| | f0c81562a4 | |
| | 9d20ed37a2 | |
| | bda1d4faf8 | |
| | 77103d71ca | |
| | 0302446819 | |
| | 4d39b7483d | |
| | fac761694a | |

@@ -133,6 +133,62 @@ image

|

||||

|

||||

|

||||

|

||||

### Customize adapters strength

|

||||

For even more customization, you can control how strongly the adapter affects each part of the pipeline. For this, pass a dictionary with the control strengths (called "scales") to [`~diffusers.loaders.UNet2DConditionLoadersMixin.set_adapters`].

|

||||

|

||||

For example, here's how you can turn on the adapter for the `down` parts, but turn it off for the `mid` and `up` parts:

|

||||

```python

|

||||

pipe.enable_lora() # enable lora again, after we disabled it above

|

||||

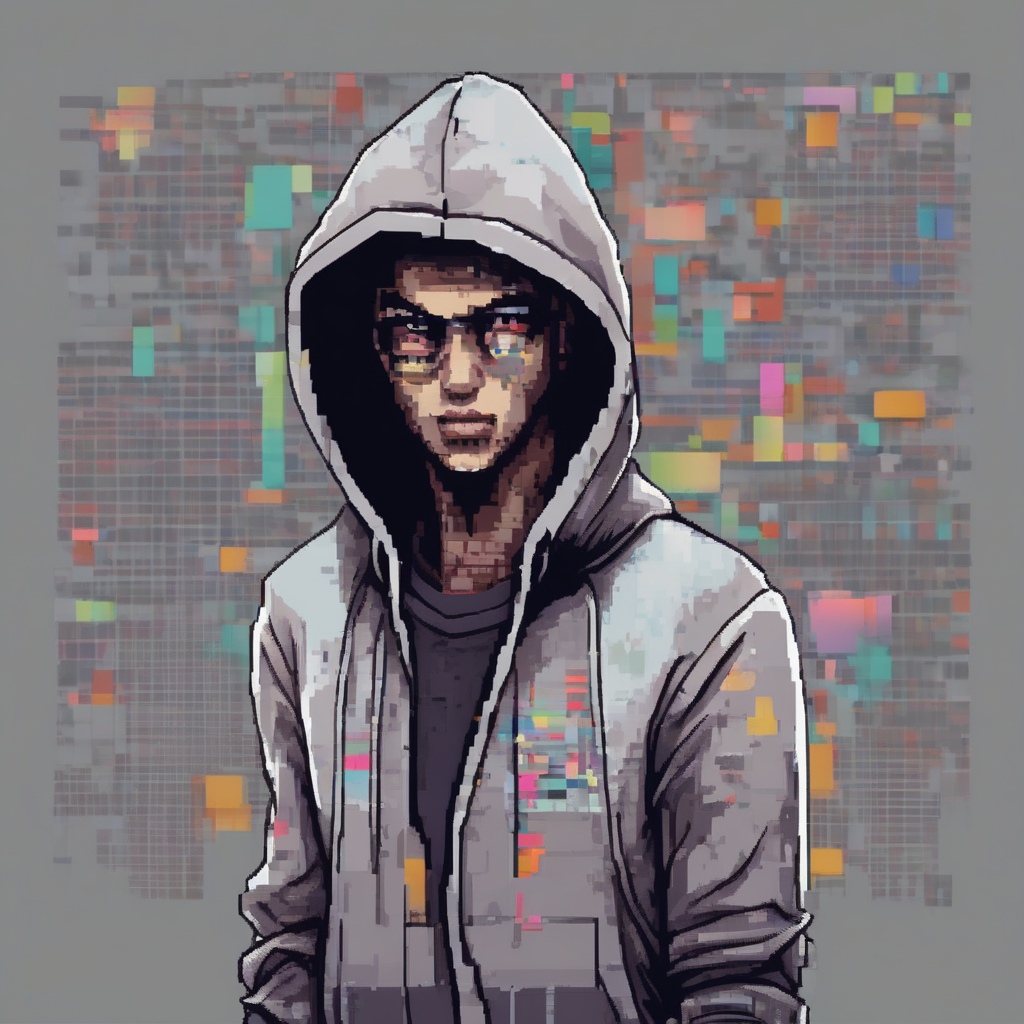

prompt = "toy_face of a hacker with a hoodie, pixel art"

|

||||

adapter_weight_scales = { "unet": { "down": 1, "mid": 0, "up": 0} }

|

||||

pipe.set_adapters("pixel", adapter_weight_scales)

|

||||

image = pipe(prompt, num_inference_steps=30, generator=torch.manual_seed(0)).images[0]

|

||||

image

|

||||

```

|

||||

|

||||

|

||||

|

||||

Let's see how turning off the `down` part and turning on the `mid` and `up` part respectively changes the image.

|

||||

```python

|

||||

adapter_weight_scales = { "unet": { "down": 0, "mid": 1, "up": 0} }

|

||||

pipe.set_adapters("pixel", adapter_weight_scales)

|

||||

image = pipe(prompt, num_inference_steps=30, generator=torch.manual_seed(0)).images[0]

|

||||

image

|

||||

```

|

||||

|

||||

|

||||

|

||||

```python

|

||||

adapter_weight_scales = { "unet": { "down": 0, "mid": 0, "up": 1} }

|

||||

pipe.set_adapters("pixel", adapter_weight_scales)

|

||||

image = pipe(prompt, num_inference_steps=30, generator=torch.manual_seed(0)).images[0]

|

||||

image

|

||||

```

|

||||

|

||||

|

||||

|

||||

Looks cool!

|

||||

|

||||

This is a really powerful feature. You can use it to control the adapter strengths down to the per-transformer level, and you can also use it with multiple adapters.

|

||||

```python

|

||||

adapter_weight_scales_toy = 0.5

|

||||

adapter_weight_scales_pixel = {

|

||||

"unet": {

|

||||

"down": 0.9, # all transformers in the down-part will use scale 0.9

|

||||

# "mid" # because, in this example, "mid" is not given, all transformers in the mid part will use the default scale 1.0

|

||||

"up": {

|

||||

"block_0": 0.6, # all 3 transformers in the 0th block in the up-part will use scale 0.6

|

||||

"block_1": [0.4, 0.8, 1.0], # the 3 transformers in the 1st block in the up-part will use scales 0.4, 0.8 and 1.0 respectively

|

||||

}

|

||||

}

|

||||

}

|

||||

pipe.set_adapters(["toy", "pixel"], [adapter_weight_scales_toy, adapter_weight_scales_pixel])

|

||||

image = pipe(prompt, num_inference_steps=30, generator=torch.manual_seed(0)).images[0]

|

||||

image

|

||||

```
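To make the nested dictionary format more concrete, here is a minimal pure-Python sketch of how such a dictionary could be flattened into one scale per transformer. It is not the library's implementation: the function name `expand_unet_scales` and the `layout` argument (the number of transformers per block) are hypothetical and only serve as an illustration.

```python
from typing import Dict, List


def expand_unet_scales(scales: dict, layout: Dict[str, Dict[str, int]], default: float = 1.0) -> Dict[str, List[float]]:
    """Flatten a nested scale dict into one scale per transformer.

    `layout` is a hypothetical description of the UNet, e.g.
    {"up": {"block_0": 3, "block_1": 3}}, giving the number of
    transformers in each block of each part.
    """
    expanded = {}
    for part, blocks in layout.items():
        part_scale = scales.get(part, default)  # missing part -> default scale
        for block, n in blocks.items():
            # A scalar given for a whole part applies to every block in it.
            block_scale = part_scale.get(block, default) if isinstance(part_scale, dict) else part_scale
            # A scalar given for a block applies to every transformer in it.
            if not isinstance(block_scale, list):
                block_scale = [block_scale] * n
            if len(block_scale) != n:
                raise ValueError(f"Expected {n} scales for {part}.{block}, got {len(block_scale)}.")
            expanded[f"{part}.{block}"] = [float(s) for s in block_scale]
    return expanded


# Hypothetical layout; real models may have different block names and counts.
layout = {"down": {"block_1": 2, "block_2": 2}, "mid": {"block_0": 1}, "up": {"block_0": 3, "block_1": 3}}
scales = {"down": 0.9, "up": {"block_0": 0.6, "block_1": [0.4, 0.8, 1.0]}}
expanded = expand_unet_scales(scales, layout)
```

In this sketch, the omitted `"mid"` entry falls back to the default scale of 1.0, matching the behavior described in the comments of the example above.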

|

||||

|

||||

|

||||

|

||||

## Manage active adapters

|

||||

|

||||

You have attached multiple adapters in this tutorial, and if you're feeling a bit lost on what adapters have been attached to the pipeline's components, use the [`~diffusers.loaders.LoraLoaderMixin.get_active_adapters`] method to check the list of active adapters:

|

||||

|

||||

@@ -153,18 +153,43 @@ image

|

||||

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/load_attn_proc.png" />

|

||||

</div>

|

||||

|

||||

<Tip>

|

||||

|

||||

For both [`~loaders.LoraLoaderMixin.load_lora_weights`] and [`~loaders.UNet2DConditionLoadersMixin.load_attn_procs`], you can pass the `cross_attention_kwargs={"scale": 0.5}` parameter to adjust how much of the LoRA weights to use. A value of `0` is the same as only using the base model weights, and a value of `1` is equivalent to using the fully finetuned LoRA.

|

||||

|

||||

</Tip>

|

||||

|

||||

To unload the LoRA weights, use the [`~loaders.LoraLoaderMixin.unload_lora_weights`] method to discard the LoRA weights and restore the model to its original weights:

|

||||

|

||||

```py

|

||||

pipeline.unload_lora_weights()

|

||||

```

|

||||

|

||||

### Adjust LoRA weight scale

|

||||

|

||||

For both [`~loaders.LoraLoaderMixin.load_lora_weights`] and [`~loaders.UNet2DConditionLoadersMixin.load_attn_procs`], you can pass the `cross_attention_kwargs={"scale": 0.5}` parameter to adjust how much of the LoRA weights to use. A value of `0` is the same as only using the base model weights, and a value of `1` is equivalent to using the fully finetuned LoRA.

|

||||

|

||||

For more granular control over the amount of LoRA weights used per layer, you can use [`~loaders.LoraLoaderMixin.set_adapters`] and pass a dictionary specifying how much to scale the weights in each layer.

|

||||

```python

|

||||

pipe = ... # create pipeline

|

||||

pipe.load_lora_weights(..., adapter_name="my_adapter")

|

||||

scales = {

|

||||

"text_encoder": 0.5,

|

||||

"text_encoder_2": 0.5, # only usable if pipe has a 2nd text encoder

|

||||

"unet": {

|

||||

"down": 0.9, # all transformers in the down-part will use scale 0.9

|

||||

# "mid" # in this example "mid" is not given, therefore all transformers in the mid part will use the default scale 1.0

|

||||

"up": {

|

||||

"block_0": 0.6, # all 3 transformers in the 0th block in the up-part will use scale 0.6

|

||||

"block_1": [0.4, 0.8, 1.0], # the 3 transformers in the 1st block in the up-part will use scales 0.4, 0.8 and 1.0 respectively

|

||||

}

|

||||

}

|

||||

}

|

||||

pipe.set_adapters("my_adapter", scales)

|

||||

```

|

||||

|

||||

This also works with multiple adapters - see [this guide](https://huggingface.co/docs/diffusers/tutorials/using_peft_for_inference#customize-adapters-strength) for how to do it.

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

Currently, [`~loaders.LoraLoaderMixin.set_adapters`] only supports scaling attention weights. If a LoRA has other parts (e.g., resnets or down-/upsamplers), they will keep a scale of 1.0.

|

||||

|

||||

</Tip>
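The top-level keys of the `scales` dictionary select a pipeline component. As a rough sketch of that idea (not the library code), the hypothetical helper `split_by_component` below separates one adapter's weights into per-component entries; a plain number is applied to every component.

```python
import copy

COMPONENTS = ("unet", "text_encoder", "text_encoder_2")


def split_by_component(weights):
    """Return a {component: weight} dict for a single adapter.

    `weights` may be a number (used for every component) or a dict keyed
    by component name; components missing from the dict map to None.
    """
    if not isinstance(weights, dict):
        return {name: weights for name in COMPONENTS}
    remaining = copy.deepcopy(weights)  # do not mutate the caller's dict
    result = {name: remaining.pop(name, None) for name in COMPONENTS}
    if remaining:
        raise ValueError(f"Got invalid keys {sorted(remaining)} in LoRA weight dict.")
    return result


per_component = split_by_component({"text_encoder": 0.5, "unet": {"down": 0.9}})
uniform = split_by_component(0.7)
```

Copying the input first means the caller can reuse the same dictionary for several calls without it being emptied.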

|

||||

|

||||

### Kohya and TheLastBen

|

||||

|

||||

Other popular LoRA trainers from the community include those by [Kohya](https://github.com/kohya-ss/sd-scripts/) and [TheLastBen](https://github.com/TheLastBen/fast-stable-diffusion). These trainers create different LoRA checkpoints than those trained by 🤗 Diffusers, but they can still be loaded in the same way.

|

||||

|

||||

@@ -85,14 +85,25 @@ This depth estimation pipeline processes a single input image through multiple d

|

||||

|

||||

```python

|

||||

import numpy as np

|

||||

import torch

|

||||

from PIL import Image

|

||||

from diffusers import DiffusionPipeline

|

||||

from diffusers.utils import load_image

|

||||

|

||||

# Original DDIM version (higher quality)

|

||||

pipe = DiffusionPipeline.from_pretrained(

|

||||

"Bingxin/Marigold",

|

||||

"prs-eth/marigold-v1-0",

|

||||

custom_pipeline="marigold_depth_estimation"

|

||||

# torch_dtype=torch.float16, # (optional) Run with half-precision (16-bit float).

|

||||

# variant="fp16", # (optional) Use with `torch_dtype=torch.float16`, to directly load fp16 checkpoint

|

||||

)

|

||||

|

||||

# (New) LCM version (faster speed)

|

||||

pipe = DiffusionPipeline.from_pretrained(

|

||||

"prs-eth/marigold-lcm-v1-0",

|

||||

custom_pipeline="marigold_depth_estimation"

|

||||

# torch_dtype=torch.float16, # (optional) Run with half-precision (16-bit float).

|

||||

# variant="fp16", # (optional) Use with `torch_dtype=torch.float16`, to directly load fp16 checkpoint

|

||||

)

|

||||

|

||||

pipe.to("cuda")

|

||||

@@ -101,12 +112,21 @@ img_path_or_url = "https://share.phys.ethz.ch/~pf/bingkedata/marigold/pipeline_e

|

||||

image: Image.Image = load_image(img_path_or_url)

|

||||

|

||||

pipeline_output = pipe(

|

||||

image, # Input image.

|

||||

image, # Input image.

|

||||

# ----- recommended setting for DDIM version -----

|

||||

# denoising_steps=10, # (optional) Number of denoising steps of each inference pass. Default: 10.

|

||||

# ensemble_size=10, # (optional) Number of inference passes in the ensemble. Default: 10.

|

||||

# ------------------------------------------------

|

||||

|

||||

# ----- recommended setting for LCM version ------

|

||||

# denoising_steps=4,

|

||||

# ensemble_size=5,

|

||||

# -------------------------------------------------

|

||||

|

||||

# processing_res=768, # (optional) Maximum resolution of processing. If set to 0: will not resize at all. Defaults to 768.

|

||||

# match_input_res=True, # (optional) Resize depth prediction to match input resolution.

|

||||

# batch_size=0, # (optional) Inference batch size, no bigger than `num_ensemble`. If set to 0, the script will automatically decide the proper batch size. Defaults to 0.

|

||||

# seed=2024, # (optional) Random seed can be set to ensure additional reproducibility. Default: None (unseeded). Note: forcing --batch_size 1 helps to increase reproducibility. To ensure full reproducibility, deterministic mode needs to be used.

|

||||

# color_map="Spectral", # (optional) Colormap used to colorize the depth map. Defaults to "Spectral". Set to `None` to skip colormap generation.

|

||||

# show_progress_bar=True, # (optional) If true, will show progress bars of the inference progress.

|

||||

)

|

||||

@@ -3743,3 +3763,80 @@ onestep_image = pipe(prompt, num_inference_steps=1).images[0]

|

||||

# Multistep sampling

|

||||

multistep_image = pipe(prompt, num_inference_steps=4).images[0]

|

||||

```

|

||||

|

||||

# Perturbed-Attention Guidance

|

||||

|

||||

[Project](https://ku-cvlab.github.io/Perturbed-Attention-Guidance/) / [arXiv](https://arxiv.org/abs/2403.17377) / [GitHub](https://github.com/KU-CVLAB/Perturbed-Attention-Guidance)

|

||||

|

||||

This implementation is based on [Diffusers](https://huggingface.co/docs/diffusers/index). StableDiffusionPAGPipeline is a modification of StableDiffusionPipeline to support Perturbed-Attention Guidance (PAG).

|

||||

|

||||

## Example Usage

|

||||

|

||||

```

|

||||

import os

|

||||

import torch

|

||||

|

||||

from accelerate.utils import set_seed

|

||||

|

||||

from diffusers import StableDiffusionPipeline

|

||||

from diffusers.utils import load_image, make_image_grid

|

||||

from diffusers.utils.torch_utils import randn_tensor

|

||||

|

||||

pipe = StableDiffusionPipeline.from_pretrained(

|

||||

"runwayml/stable-diffusion-v1-5",

|

||||

custom_pipeline="hyoungwoncho/sd_perturbed_attention_guidance",

|

||||

torch_dtype=torch.float16

|

||||

)

|

||||

|

||||

device="cuda"

|

||||

pipe = pipe.to(device)

|

||||

|

||||

pag_scale = 5.0

|

||||

pag_applied_layers_index = ['m0']

|

||||

|

||||

batch_size = 4

|

||||

seed=10

|

||||

|

||||

base_dir = "./results/"

|

||||

grid_dir = base_dir + "/pag" + str(pag_scale) + "/"

|

||||

|

||||

if not os.path.exists(grid_dir):

|

||||

os.makedirs(grid_dir)

|

||||

|

||||

set_seed(seed)

|

||||

|

||||

latent_input = randn_tensor(shape=(batch_size,4,64,64),generator=None, device=device, dtype=torch.float16)

|

||||

|

||||

output_baseline = pipe(

|

||||

"",

|

||||

width=512,

|

||||

height=512,

|

||||

num_inference_steps=50,

|

||||

guidance_scale=0.0,

|

||||

pag_scale=0.0,

|

||||

pag_applied_layers_index=pag_applied_layers_index,

|

||||

num_images_per_prompt=batch_size,

|

||||

latents=latent_input

|

||||

).images

|

||||

|

||||

output_pag = pipe(

|

||||

"",

|

||||

width=512,

|

||||

height=512,

|

||||

num_inference_steps=50,

|

||||

guidance_scale=0.0,

|

||||

pag_scale=5.0,

|

||||

pag_applied_layers_index=pag_applied_layers_index,

|

||||

num_images_per_prompt=batch_size,

|

||||

latents=latent_input

|

||||

).images

|

||||

|

||||

grid_image = make_image_grid(output_baseline + output_pag, rows=2, cols=batch_size)

|

||||

grid_image.save(grid_dir + "sample.png")

|

||||

```

|

||||

|

||||

## PAG Parameters

|

||||

|

||||

pag_scale : guidance scale of PAG (ex: 5.0)

|

||||

|

||||

pag_applied_layers_index : index of the layer to apply perturbation (ex: ['m0'])
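As a rough numeric illustration of what `pag_scale` controls, the sketch below assumes the guidance rule described in the PAG paper, in which the prediction is pushed away from the perturbed-attention prediction. The function name `apply_pag` and the toy values are hypothetical; the actual pipeline works on tensors inside the denoising loop.

```python
def apply_pag(noise_pred, noise_pred_perturbed, pag_scale):
    """Combine the normal and perturbed predictions element-wise.

    Assumed rule: guided = normal + pag_scale * (normal - perturbed).
    With pag_scale = 0 the normal prediction is returned unchanged.
    """
    return [n + pag_scale * (n - p) for n, p in zip(noise_pred, noise_pred_perturbed)]


normal = [1.0, 2.0]
perturbed = [0.5, 2.5]
baseline = apply_pag(normal, perturbed, pag_scale=0.0)
guided = apply_pag(normal, perturbed, pag_scale=5.0)
```

This mirrors the usage example above, where `pag_scale=0.0` produces the baseline images and `pag_scale=5.0` produces the guided ones.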

|

||||

|

||||

@@ -18,6 +18,7 @@

|

||||

# --------------------------------------------------------------------------

|

||||

|

||||

|

||||

import logging

|

||||

import math

|

||||

from typing import Dict, Union

|

||||

|

||||

@@ -25,6 +26,7 @@ import matplotlib

|

||||

import numpy as np

|

||||

import torch

|

||||

from PIL import Image

|

||||

from PIL.Image import Resampling

|

||||

from scipy.optimize import minimize

|

||||

from torch.utils.data import DataLoader, TensorDataset

|

||||

from tqdm.auto import tqdm

|

||||

@@ -34,13 +36,14 @@ from diffusers import (

|

||||

AutoencoderKL,

|

||||

DDIMScheduler,

|

||||

DiffusionPipeline,

|

||||

LCMScheduler,

|

||||

UNet2DConditionModel,

|

||||

)

|

||||

from diffusers.utils import BaseOutput, check_min_version

|

||||

|

||||

|

||||

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.

|

||||

check_min_version("0.28.0.dev0")

|

||||

check_min_version("0.25.0")

|

||||

|

||||

|

||||

class MarigoldDepthOutput(BaseOutput):

|

||||

@@ -61,6 +64,19 @@ class MarigoldDepthOutput(BaseOutput):

|

||||

uncertainty: Union[None, np.ndarray]

|

||||

|

||||

|

||||

def get_pil_resample_method(method_str: str) -> Resampling:

|

||||

resample_method_dic = {

|

||||

"bilinear": Resampling.BILINEAR,

|

||||

"bicubic": Resampling.BICUBIC,

|

||||

"nearest": Resampling.NEAREST,

|

||||

}

|

||||

resample_method = resample_method_dic.get(method_str, None)

|

||||

if resample_method is None:

|

||||

raise ValueError(f"Unknown resampling method: {method_str}")

|

||||

else:

|

||||

return resample_method

|

||||

|

||||

|

||||

class MarigoldPipeline(DiffusionPipeline):

|

||||

"""

|

||||

Pipeline for monocular depth estimation using Marigold: https://marigoldmonodepth.github.io.

|

||||

@@ -113,7 +129,9 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

ensemble_size: int = 10,

|

||||

processing_res: int = 768,

|

||||

match_input_res: bool = True,

|

||||

resample_method: str = "bilinear",

|

||||

batch_size: int = 0,

|

||||

seed: Union[int, None] = None,

|

||||

color_map: str = "Spectral",

|

||||

show_progress_bar: bool = True,

|

||||

ensemble_kwargs: Dict = None,

|

||||

@@ -129,7 +147,9 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

If set to 0: will not resize at all.

|

||||

match_input_res (`bool`, *optional*, defaults to `True`):

|

||||

Resize depth prediction to match input resolution.

|

||||

Only valid if `limit_input_res` is not None.

|

||||

Only valid if `processing_res` > 0.

|

||||

resample_method: (`str`, *optional*, defaults to `bilinear`):

|

||||

Resampling method used to resize images and depth predictions. This can be one of `bilinear`, `bicubic` or `nearest`, defaults to: `bilinear`.

|

||||

denoising_steps (`int`, *optional*, defaults to `10`):

|

||||

Number of diffusion denoising steps (DDIM) during inference.

|

||||

ensemble_size (`int`, *optional*, defaults to `10`):

|

||||

@@ -137,6 +157,8 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

batch_size (`int`, *optional*, defaults to `0`):

|

||||

Inference batch size, no bigger than `num_ensemble`.

|

||||

If set to 0, the script will automatically decide the proper batch size.

|

||||

seed (`int`, *optional*, defaults to `None`):

|

||||

Reproducibility seed.

|

||||

show_progress_bar (`bool`, *optional*, defaults to `True`):

|

||||

Display a progress bar of diffusion denoising.

|

||||

color_map (`str`, *optional*, defaults to `"Spectral"`, pass `None` to skip colorized depth map generation):

|

||||

@@ -146,8 +168,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

Returns:

|

||||

`MarigoldDepthOutput`: Output class for Marigold monocular depth prediction pipeline, including:

|

||||

- **depth_np** (`np.ndarray`) Predicted depth map, with depth values in the range of [0, 1]

|

||||

- **depth_colored** (`None` or `PIL.Image.Image`) Colorized depth map, with the shape of [3, H, W] and

|

||||

values in [0, 1]. None if `color_map` is `None`

|

||||

- **depth_colored** (`PIL.Image.Image`) Colorized depth map, with the shape of [3, H, W] and values in [0, 1], None if `color_map` is `None`

|

||||

- **uncertainty** (`None` or `np.ndarray`) Uncalibrated uncertainty(MAD, median absolute deviation)

|

||||

coming from ensembling. None if `ensemble_size = 1`

|

||||

"""

|

||||

@@ -158,13 +179,21 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

if not match_input_res:

|

||||

assert processing_res is not None, "Value error: `resize_output_back` is only valid with "

|

||||

assert processing_res >= 0

|

||||

assert denoising_steps >= 1

|

||||

assert ensemble_size >= 1

|

||||

|

||||

# Check if denoising step is reasonable

|

||||

self._check_inference_step(denoising_steps)

|

||||

|

||||

resample_method: Resampling = get_pil_resample_method(resample_method)

|

||||

|

||||

# ----------------- Image Preprocess -----------------

|

||||

# Resize image

|

||||

if processing_res > 0:

|

||||

input_image = self.resize_max_res(input_image, max_edge_resolution=processing_res)

|

||||

input_image = self.resize_max_res(

|

||||

input_image,

|

||||

max_edge_resolution=processing_res,

|

||||

resample_method=resample_method,

|

||||

)

|

||||

# Convert the image to RGB, to 1.remove the alpha channel 2.convert B&W to 3-channel

|

||||

input_image = input_image.convert("RGB")

|

||||

image = np.asarray(input_image)

|

||||

@@ -203,9 +232,10 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

rgb_in=batched_img,

|

||||

num_inference_steps=denoising_steps,

|

||||

show_pbar=show_progress_bar,

|

||||

seed=seed,

|

||||

)

|

||||

depth_pred_ls.append(depth_pred_raw.detach().clone())

|

||||

depth_preds = torch.concat(depth_pred_ls, axis=0).squeeze()

|

||||

depth_pred_ls.append(depth_pred_raw.detach())

|

||||

depth_preds = torch.concat(depth_pred_ls, dim=0).squeeze()

|

||||

torch.cuda.empty_cache() # clear vram cache for ensembling

|

||||

|

||||

# ----------------- Test-time ensembling -----------------

|

||||

@@ -227,7 +257,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

# Resize back to original resolution

|

||||

if match_input_res:

|

||||

pred_img = Image.fromarray(depth_pred)

|

||||

pred_img = pred_img.resize(input_size)

|

||||

pred_img = pred_img.resize(input_size, resample=resample_method)

|

||||

depth_pred = np.asarray(pred_img)

|

||||

|

||||

# Clip output range

|

||||

@@ -243,12 +273,32 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

depth_colored_img = Image.fromarray(depth_colored_hwc)

|

||||

else:

|

||||

depth_colored_img = None

|

||||

|

||||

return MarigoldDepthOutput(

|

||||

depth_np=depth_pred,

|

||||

depth_colored=depth_colored_img,

|

||||

uncertainty=pred_uncert,

|

||||

)

|

||||

|

||||

def _check_inference_step(self, n_step: int):

|

||||

"""

|

||||

Check if denoising step is reasonable

|

||||

Args:

|

||||

n_step (`int`): denoising steps

|

||||

"""

|

||||

assert n_step >= 1

|

||||

|

||||

if isinstance(self.scheduler, DDIMScheduler):

|

||||

if n_step < 10:

|

||||

logging.warning(

|

||||

f"Too few denoising steps: {n_step}. Recommended to use the LCM checkpoint for few-step inference."

|

||||

)

|

||||

elif isinstance(self.scheduler, LCMScheduler):

|

||||

if not 1 <= n_step <= 4:

|

||||

logging.warning(f"Non-optimal setting of denoising steps: {n_step}. Recommended setting is 1-4 steps.")

|

||||

else:

|

||||

raise RuntimeError(f"Unsupported scheduler type: {type(self.scheduler)}")

|

||||
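The step check in `_check_inference_step` above can be tried in isolation with the following sketch. It is a simplified stand-in, not the pipeline method: `scheduler_kind` is a hypothetical string tag that replaces the `isinstance` checks on the scheduler object, and the function returns the warning text instead of logging it.

```python
from typing import Optional


def check_inference_step(scheduler_kind: str, n_step: int) -> Optional[str]:
    """Return a warning message for an unusual step count, or None.

    `scheduler_kind` is a hypothetical tag: "ddim" or "lcm".
    """
    if n_step < 1:
        raise ValueError("n_step must be at least 1")
    if scheduler_kind == "ddim":
        if n_step < 10:
            return f"Too few denoising steps: {n_step}. Consider the LCM checkpoint for few-step inference."
    elif scheduler_kind == "lcm":
        if not 1 <= n_step <= 4:
            return f"Non-optimal setting of denoising steps: {n_step}. Recommended setting is 1-4 steps."
    else:
        raise RuntimeError(f"Unsupported scheduler type: {scheduler_kind}")
    return None
```

Returning the message rather than logging it keeps the sketch easy to test; a caller could pass the result to `logging.warning` when it is not `None`.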

|

||||

def _encode_empty_text(self):

|

||||

"""

|

||||

Encode text embedding for empty prompt.

|

||||

@@ -265,7 +315,13 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

self.empty_text_embed = self.text_encoder(text_input_ids)[0].to(self.dtype)

|

||||

|

||||

@torch.no_grad()

|

||||

def single_infer(self, rgb_in: torch.Tensor, num_inference_steps: int, show_pbar: bool) -> torch.Tensor:

|

||||

def single_infer(

|

||||

self,

|

||||

rgb_in: torch.Tensor,

|

||||

num_inference_steps: int,

|

||||

seed: Union[int, None],

|

||||

show_pbar: bool,

|

||||

) -> torch.Tensor:

|

||||

"""

|

||||

Perform an individual depth prediction without ensembling.

|

||||

|

||||

@@ -286,10 +342,20 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

timesteps = self.scheduler.timesteps # [T]

|

||||

|

||||

# Encode image

|

||||

rgb_latent = self._encode_rgb(rgb_in)

|

||||

rgb_latent = self.encode_rgb(rgb_in)

|

||||

|

||||

# Initial depth map (noise)

|

||||

depth_latent = torch.randn(rgb_latent.shape, device=device, dtype=self.dtype) # [B, 4, h, w]

|

||||

if seed is None:

|

||||

rand_num_generator = None

|

||||

else:

|

||||

rand_num_generator = torch.Generator(device=device)

|

||||

rand_num_generator.manual_seed(seed)

|

||||

depth_latent = torch.randn(

|

||||

rgb_latent.shape,

|

||||

device=device,

|

||||

dtype=self.dtype,

|

||||

generator=rand_num_generator,

|

||||

) # [B, 4, h, w]

|

||||

|

||||

# Batched empty text embedding

|

||||

if self.empty_text_embed is None:

|

||||
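The optional-seed pattern used for the initial depth latent can be illustrated without PyTorch. The sketch below deliberately swaps in Python's standard `random.Random` for `torch.Generator`, so it only demonstrates the control flow (no seed means the global generator, a seed means a dedicated reproducible one); the function name `initial_noise` is hypothetical.

```python
import random
from typing import List, Optional


def initial_noise(n: int, seed: Optional[int] = None) -> List[float]:
    """Draw n standard-normal samples, reproducibly when a seed is given."""
    # No seed: fall back to the module-level (global) generator.
    rng = random.Random(seed) if seed is not None else random
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


a = initial_noise(4, seed=2024)
b = initial_noise(4, seed=2024)
```

Two calls with the same seed produce identical samples, while unseeded calls draw from shared global state and generally differ from run to run.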

@@ -314,9 +380,9 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

noise_pred = self.unet(unet_input, t, encoder_hidden_states=batch_empty_text_embed).sample # [B, 4, h, w]

|

||||

|

||||

# compute the previous noisy sample x_t -> x_t-1

|

||||

depth_latent = self.scheduler.step(noise_pred, t, depth_latent).prev_sample

|

||||

torch.cuda.empty_cache()

|

||||

depth = self._decode_depth(depth_latent)

|

||||

depth_latent = self.scheduler.step(noise_pred, t, depth_latent, generator=rand_num_generator).prev_sample

|

||||

|

||||

depth = self.decode_depth(depth_latent)

|

||||

|

||||

# clip prediction

|

||||

depth = torch.clip(depth, -1.0, 1.0)

|

||||

@@ -325,7 +391,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

|

||||

return depth

|

||||

|

||||

def _encode_rgb(self, rgb_in: torch.Tensor) -> torch.Tensor:

|

||||

def encode_rgb(self, rgb_in: torch.Tensor) -> torch.Tensor:

|

||||

"""

|

||||

Encode RGB image into latent.

|

||||

|

||||

@@ -344,7 +410,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

rgb_latent = mean * self.rgb_latent_scale_factor

|

||||

return rgb_latent

|

||||

|

||||

def _decode_depth(self, depth_latent: torch.Tensor) -> torch.Tensor:

|

||||

def decode_depth(self, depth_latent: torch.Tensor) -> torch.Tensor:

|

||||

"""

|

||||

Decode depth latent into depth map.

|

||||

|

||||

@@ -365,7 +431,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

return depth_mean

|

||||

|

||||

@staticmethod

|

||||

def resize_max_res(img: Image.Image, max_edge_resolution: int) -> Image.Image:

|

||||

def resize_max_res(img: Image.Image, max_edge_resolution: int, resample_method=Resampling.BILINEAR) -> Image.Image:

|

||||

"""

|

||||

Resize image to limit maximum edge length while keeping aspect ratio.

|

||||

|

||||

@@ -374,6 +440,8 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

Image to be resized.

|

||||

max_edge_resolution (`int`):

|

||||

Maximum edge length (pixel).

|

||||

resample_method (`PIL.Image.Resampling`):

|

||||

Resampling method used to resize images.

|

||||

|

||||

Returns:

|

||||

`Image.Image`: Resized image.

|

||||

@@ -384,7 +452,7 @@ class MarigoldPipeline(DiffusionPipeline):

|

||||

new_width = int(original_width * downscale_factor)

|

||||

new_height = int(original_height * downscale_factor)

|

||||

|

||||

resized_img = img.resize((new_width, new_height))

|

||||

resized_img = img.resize((new_width, new_height), resample=resample_method)

|

||||

return resized_img

|

||||
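The size computation in `resize_max_res` can be sketched on its own. The hunk above does not show how `downscale_factor` is derived, so the formula below is an assumption (scale by the longer edge to preserve the aspect ratio), and the helper name `max_res_size` is hypothetical.

```python
from typing import Tuple


def max_res_size(width: int, height: int, max_edge_resolution: int) -> Tuple[int, int]:
    """Scale (width, height) so the longer edge becomes max_edge_resolution."""
    # Assumption: the factor is taken from the longer edge, which keeps the aspect ratio.
    downscale_factor = min(max_edge_resolution / width, max_edge_resolution / height)
    return int(width * downscale_factor), int(height * downscale_factor)


size = max_res_size(1024, 512, 768)
```

The resulting tuple is what would be passed to `img.resize(..., resample=resample_method)` in the method above.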

|

||||

@staticmethod

|

||||

|

||||

1477

examples/community/pipeline_stable_diffusion_pag.py

Normal file

File diff suppressed because it is too large

1077

examples/community/pipeline_stable_diffusion_xl_instandid_img2img.py

Normal file

File diff suppressed because it is too large

@@ -46,6 +46,11 @@ except Exception:

|

||||

|

||||

logger = logging.get_logger(__name__) # pylint: disable=invalid-name

|

||||

|

||||

logger.warning(

|

||||

"To use instant id pipelines, please make sure you have the `insightface` library installed: `pip install insightface`."

|

||||

"Please refer to: https://huggingface.co/InstantX/InstantID for further instructions regarding inference"

|

||||

)

|

||||

|

||||

|

||||

def FeedForward(dim, mult=4):

|

||||

inner_dim = int(dim * mult)

|

||||

|

||||

@@ -11,6 +11,7 @@

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

import copy

|

||||

import inspect

|

||||

import os

|

||||

from pathlib import Path

|

||||

@@ -985,7 +986,7 @@ class LoraLoaderMixin:

|

||||

self,

|

||||

adapter_names: Union[List[str], str],

|

||||

text_encoder: Optional["PreTrainedModel"] = None, # noqa: F821

|

||||

text_encoder_weights: List[float] = None,

|

||||

text_encoder_weights: Optional[Union[float, List[float], List[None]]] = None,

|

||||

):

|

||||

"""

|

||||

Sets the adapter layers for the text encoder.

|

||||

@@ -1003,15 +1004,20 @@ class LoraLoaderMixin:

|

||||

raise ValueError("PEFT backend is required for this method.")

|

||||

|

||||

def process_weights(adapter_names, weights):

|

||||

if weights is None:

|

||||

weights = [1.0] * len(adapter_names)

|

||||

elif isinstance(weights, float):

|

||||

weights = [weights]

|

||||

# Expand weights into a list, one entry per adapter

|

||||

# e.g. for 2 adapters: 7 -> [7,7] ; [3, None] -> [3, None]

|

||||

if not isinstance(weights, list):

|

||||

weights = [weights] * len(adapter_names)

|

||||

|

||||

if len(adapter_names) != len(weights):

|

||||

raise ValueError(

|

||||

f"Length of adapter names {len(adapter_names)} is not equal to the length of the weights {len(weights)}"

|

||||

)

|

||||

|

||||

# Set None values to default of 1.0

|

||||

# e.g. [7,7] -> [7,7] ; [3, None] -> [3,1]

|

||||

weights = [w if w is not None else 1.0 for w in weights]

|

||||

|

||||

return weights

|

||||

|

||||

adapter_names = [adapter_names] if isinstance(adapter_names, str) else adapter_names

|

||||
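The weight normalization performed by `process_weights` above can be exercised as a standalone function. The sketch below follows the same steps shown in the hunk (broadcast a single value, check lengths, replace `None` with 1.0); the name `normalize_weights` is hypothetical and chosen for illustration.

```python
from typing import List, Optional, Sequence, Union

Weight = Optional[float]


def normalize_weights(adapter_names: Sequence[str], weights: Union[Weight, List[Weight]]) -> List[float]:
    """Return one weight per adapter, defaulting missing values to 1.0."""
    # Broadcast a single value (or None) to every adapter.
    if not isinstance(weights, list):
        weights = [weights] * len(adapter_names)
    if len(adapter_names) != len(weights):
        raise ValueError(
            f"Length of adapter names {len(adapter_names)} is not equal to the length of the weights {len(weights)}"
        )
    # Replace None entries with the default scale of 1.0.
    return [w if w is not None else 1.0 for w in weights]
```

For two adapters, `normalize_weights(["a", "b"], 7)` gives `[7, 7]` and `normalize_weights(["a", "b"], [3, None])` gives `[3, 1.0]`, matching the examples in the code comments.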

@@ -1059,17 +1065,77 @@ class LoraLoaderMixin:

|

||||

def set_adapters(

|

||||

self,

|

||||

adapter_names: Union[List[str], str],

|

||||

adapter_weights: Optional[List[float]] = None,

|

||||

adapter_weights: Optional[Union[float, Dict, List[float], List[Dict]]] = None,

|

||||

):

|

||||

adapter_names = [adapter_names] if isinstance(adapter_names, str) else adapter_names

|

||||

|

||||

adapter_weights = copy.deepcopy(adapter_weights)

|

||||

|

||||

# Expand weights into a list, one entry per adapter

|

||||

if not isinstance(adapter_weights, list):

|

||||

adapter_weights = [adapter_weights] * len(adapter_names)

|

||||

|

||||

if len(adapter_names) != len(adapter_weights):

|

||||

raise ValueError(

|

||||

f"Length of adapter names {len(adapter_names)} is not equal to the length of the weights {len(adapter_weights)}"

|

||||

)

|

||||

|

||||

# Decompose weights into weights for unet, text_encoder and text_encoder_2

|

||||

unet_lora_weights, text_encoder_lora_weights, text_encoder_2_lora_weights = [], [], []

|

||||

|

||||

list_adapters = self.get_list_adapters() # eg {"unet": ["adapter1", "adapter2"], "text_encoder": ["adapter2"]}

|

||||

all_adapters = {

|

||||

adapter for adapters in list_adapters.values() for adapter in adapters

|

||||

} # eg {"adapter1", "adapter2"}

|

||||

invert_list_adapters = {

|

||||

adapter: [part for part, adapters in list_adapters.items() if adapter in adapters]

|

||||

for adapter in all_adapters

|

||||

} # eg {"adapter1": ["unet"], "adapter2": ["unet", "text_encoder"]}

|

||||

|

||||

for adapter_name, weights in zip(adapter_names, adapter_weights):

|

||||

if isinstance(weights, dict):

|

||||

unet_lora_weight = weights.pop("unet", None)

|

||||

text_encoder_lora_weight = weights.pop("text_encoder", None)

|

||||

text_encoder_2_lora_weight = weights.pop("text_encoder_2", None)

|

||||

|

||||

if len(weights) > 0:

|

||||

raise ValueError(

|

||||

f"Got invalid key '{weights.keys()}' in lora weight dict for adapter {adapter_name}."

|

||||

)

|

||||

|

||||

if text_encoder_2_lora_weight is not None and not hasattr(self, "text_encoder_2"):

|

||||

logger.warning(

|

||||

"Lora weight dict contains text_encoder_2 weights but will be ignored because pipeline does not have text_encoder_2."

|

||||

)

|

||||

|

||||

# warn if adapter doesn't have parts specified by adapter_weights

|

||||

for part_weight, part_name in zip(

|

||||

[unet_lora_weight, text_encoder_lora_weight, text_encoder_2_lora_weight],

|

||||

["unet", "text_encoder", "text_encoder_2"],

|

||||

):

|

||||

if part_weight is not None and part_name not in invert_list_adapters[adapter_name]:

|

||||

logger.warning(

|

||||

f"Lora weight dict for adapter '{adapter_name}' contains {part_name}, but this will be ignored because {adapter_name} does not contain weights for {part_name}. Valid parts for {adapter_name} are: {invert_list_adapters[adapter_name]}."

|

||||

)

|

||||

|

||||

else:

|

||||

unet_lora_weight = weights

|

||||

text_encoder_lora_weight = weights

|

||||

text_encoder_2_lora_weight = weights

|

||||

|

||||

unet_lora_weights.append(unet_lora_weight)

|

||||

text_encoder_lora_weights.append(text_encoder_lora_weight)

|

||||

text_encoder_2_lora_weights.append(text_encoder_2_lora_weight)

|

||||

|

||||

unet = getattr(self, self.unet_name) if not hasattr(self, "unet") else self.unet

|

||||

# Handle the UNET

|

||||

unet.set_adapters(adapter_names, adapter_weights)

|

||||

unet.set_adapters(adapter_names, unet_lora_weights)

|

||||

|

||||

# Handle the Text Encoder

|

||||

if hasattr(self, "text_encoder"):

|

||||

self.set_adapters_for_text_encoder(adapter_names, self.text_encoder, adapter_weights)

|

||||

self.set_adapters_for_text_encoder(adapter_names, self.text_encoder, text_encoder_lora_weights)

|

||||

if hasattr(self, "text_encoder_2"):

|

||||

self.set_adapters_for_text_encoder(adapter_names, self.text_encoder_2, adapter_weights)

|

||||

self.set_adapters_for_text_encoder(adapter_names, self.text_encoder_2, text_encoder_2_lora_weights)

|

||||

|

||||

def disable_lora(self):

|

||||

if not USE_PEFT_BACKEND:

|

||||

|

||||
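The `invert_list_adapters` mapping built in `set_adapters` above turns a component-to-adapters dictionary into an adapter-to-components dictionary. A minimal standalone sketch of that inversion, using a hypothetical helper name `invert_adapters`, could look like this:

```python
from typing import Dict, List


def invert_adapters(list_adapters: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each adapter name to the components that carry its weights."""
    inverted: Dict[str, List[str]] = {}
    for part, adapters in list_adapters.items():
        for adapter in adapters:
            inverted.setdefault(adapter, []).append(part)
    return inverted


list_adapters = {"unet": ["adapter1", "adapter2"], "text_encoder": ["adapter2"]}
inverted = invert_adapters(list_adapters)
```

With this mapping, checking whether an adapter has weights for a given component reduces to a membership test such as `"text_encoder" in inverted["adapter1"]`, which is how the warning in the code above decides whether a supplied weight will be ignored.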

@@ -47,6 +47,7 @@ from .single_file_utils import (

|

||||

infer_stable_cascade_single_file_config,

|

||||

load_single_file_model_checkpoint,

|

||||

)

|

||||

from .unet_loader_utils import _maybe_expand_lora_scales

|

||||

from .utils import AttnProcsLayers

|

||||

|

||||

|

||||

@@ -564,7 +565,7 @@ class UNet2DConditionLoadersMixin:

|

||||

def set_adapters(

|

||||

self,

|

||||

adapter_names: Union[List[str], str],

|

||||

weights: Optional[Union[List[float], float]] = None,

|

||||

weights: Optional[Union[float, Dict, List[float], List[Dict], List[None]]] = None,

|

||||

):

|

||||

"""

|

||||

Set the currently active adapters for use in the UNet.

|

||||

@@ -597,9 +598,9 @@ class UNet2DConditionLoadersMixin:

|

||||

|

||||

adapter_names = [adapter_names] if isinstance(adapter_names, str) else adapter_names

|

||||

|

||||

if weights is None:

|

||||

weights = [1.0] * len(adapter_names)

|

||||

elif isinstance(weights, float):

|

||||

# Expand weights into a list, one entry per adapter

|

||||

# examples for e.g. 2 adapters: 7 -> [7, 7] ; {...} -> [{...}, {...}] ; None -> [None, None]

|

||||

if not isinstance(weights, list):

|

||||

weights = [weights] * len(adapter_names)

|

||||

|

||||

if len(adapter_names) != len(weights):

|

||||

@@ -607,6 +608,13 @@ class UNet2DConditionLoadersMixin:

|

||||

f"Length of adapter names {len(adapter_names)} is not equal to the length of their weights {len(weights)}."

|

||||

)

|

||||

|

||||

# Set None values to default of 1.0

|

||||

# e.g. [{...}, 7] -> [{...}, 7] ; [None, None] -> [1.0, 1.0]

|

||||

weights = [w if w is not None else 1.0 for w in weights]

|

||||

|

||||

# e.g. [{...}, 7] -> [{expanded dict...}, 7]

|

||||

weights = _maybe_expand_lora_scales(self, weights)

|

||||

|

||||

set_weights_and_activate_adapters(self, adapter_names, weights)

|

||||

|

||||

def disable_lora(self):

|

||||

|

||||
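The weight handling at the top of `set_adapters` can be sketched in isolation as follows. This is a minimal illustration, not the library code: `normalize_adapter_weights` is a hypothetical helper name, and the block-scale expansion and adapter activation that follow in the real method are left out.

```python
from typing import Dict, List, Optional, Union

Scale = Union[float, Dict, None]


def normalize_adapter_weights(
    adapter_names: Union[List[str], str],
    weights: Optional[Union[Scale, List[Scale]]] = None,
) -> List[Union[float, Dict]]:
    """Hypothetical helper mirroring the first steps of `set_adapters`."""
    names = [adapter_names] if isinstance(adapter_names, str) else adapter_names

    # A single number, dict or None is repeated once per adapter.
    if not isinstance(weights, list):
        weights = [weights] * len(names)

    if len(names) != len(weights):
        raise ValueError(
            f"Length of adapter names {len(names)} is not equal to the length of their weights {len(weights)}."
        )

    # Entries left as None fall back to the default scale of 1.0.
    return [w if w is not None else 1.0 for w in weights]


print(normalize_adapter_weights(["a", "b"], 0.5))
print(normalize_adapter_weights(["a", "b"], [{"down": 2}, None]))
```

Checking `isinstance(weights, list)` instead of `isinstance(weights, float)` lets integers, dicts and `None` take the same path as floats.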

154

src/diffusers/loaders/unet_loader_utils.py

Normal file


@@ -0,0 +1,154 @@

|

||||

# Copyright 2024 The HuggingFace Team. All rights reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
import copy
from typing import TYPE_CHECKING, Dict, List, Union

from ..utils import logging


if TYPE_CHECKING:
    # import here to avoid circular imports
    from ..models import UNet2DConditionModel

logger = logging.get_logger(__name__)  # pylint: disable=invalid-name

|

||||

|

||||

|

||||

def _translate_into_actual_layer_name(name):
    """Translate user-friendly name (e.g. 'mid') into actual layer name (e.g. 'mid_block.attentions.0')"""
    if name == "mid":
        return "mid_block.attentions.0"

    updown, block, attn = name.split(".")

    updown = updown.replace("down", "down_blocks").replace("up", "up_blocks")
    block = block.replace("block_", "")
    attn = "attentions." + attn

    return ".".join((updown, block, attn))

|

||||

|

||||

|

||||

def _maybe_expand_lora_scales(unet: "UNet2DConditionModel", weight_scales: List[Union[float, Dict]]):
    blocks_with_transformer = {
        "down": [i for i, block in enumerate(unet.down_blocks) if hasattr(block, "attentions")],
        "up": [i for i, block in enumerate(unet.up_blocks) if hasattr(block, "attentions")],
    }
    transformer_per_block = {"down": unet.config.layers_per_block, "up": unet.config.layers_per_block + 1}

    expanded_weight_scales = [
        _maybe_expand_lora_scales_for_one_adapter(
            weight_for_adapter, blocks_with_transformer, transformer_per_block, unet.state_dict()
        )
        for weight_for_adapter in weight_scales
    ]

    return expanded_weight_scales

|

||||

|

||||

|

||||

def _maybe_expand_lora_scales_for_one_adapter(
    scales: Union[float, Dict],
    blocks_with_transformer: Dict[str, List[int]],
    transformer_per_block: Dict[str, int],
    state_dict: None,
):
    """
    Expands the inputs into a more granular dictionary. See the example below for more details.

    Parameters:
        scales (`Union[float, Dict]`):
            Scales dict to expand.
        blocks_with_transformer (`Dict[str, List[int]]`):
            Dict with keys 'up' and 'down', showing which blocks have transformer layers
        transformer_per_block (`Dict[str, int]`):
            Dict with keys 'up' and 'down', showing how many transformer layers each block has
        state_dict:
            State dict of the UNet, used to check that every expanded layer actually exists

    E.g. turns
    ```python
    scales = {
        'down': 2,
        'mid': 3,
        'up': {
            'block_0': 4,
            'block_1': [5, 6, 7]
        }
    }
    blocks_with_transformer = {
        'down': [1,2],
        'up': [0,1]
    }
    transformer_per_block = {
        'down': 2,
        'up': 3
    }
    ```
    into
    ```python
    {
        'down.block_1.0': 2,
        'down.block_1.1': 2,
        'down.block_2.0': 2,
        'down.block_2.1': 2,
        'mid': 3,
        'up.block_0.0': 4,
        'up.block_0.1': 4,
        'up.block_0.2': 4,
        'up.block_1.0': 5,
        'up.block_1.1': 6,
        'up.block_1.2': 7,
    }
    ```
    """

|

||||

    if sorted(blocks_with_transformer.keys()) != ["down", "up"]:
        raise ValueError("blocks_with_transformer needs to be a dict with keys `'down'` and `'up'`")

    if sorted(transformer_per_block.keys()) != ["down", "up"]:
        raise ValueError("transformer_per_block needs to be a dict with keys `'down'` and `'up'`")

    if not isinstance(scales, dict):
        # don't expand if scales is a single number
        return scales

    scales = copy.deepcopy(scales)

    if "mid" not in scales:
        scales["mid"] = 1

    for updown in ["up", "down"]:
        if updown not in scales:
            scales[updown] = 1

        # e.g. {"down": 1} to {"down": {"block_1": 1, "block_2": 1}}
        if not isinstance(scales[updown], dict):
            scales[updown] = {f"block_{i}": scales[updown] for i in blocks_with_transformer[updown]}

        # e.g. {"down": {"block_1": 1}} to {"down": {"block_1": [1, 1]}}
        for i in blocks_with_transformer[updown]:
            block = f"block_{i}"
            if not isinstance(scales[updown][block], list):
                scales[updown][block] = [scales[updown][block] for _ in range(transformer_per_block[updown])]

        # e.g. {"down": {"block_1": [1, 1]}} to {"down.block_1.0": 1, "down.block_1.1": 1}
        for i in blocks_with_transformer[updown]:
            block = f"block_{i}"
            for tf_idx, value in enumerate(scales[updown][block]):
                scales[f"{updown}.{block}.{tf_idx}"] = value

        del scales[updown]

    for layer in scales.keys():
        if not any(_translate_into_actual_layer_name(layer) in module for module in state_dict.keys()):
            raise ValueError(
                f"Can't set lora scale for layer {layer}. It either doesn't exist in this unet or it has no attentions."
            )

    return {_translate_into_actual_layer_name(name): weight for name, weight in scales.items()}

|

||||
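The expansion performed by `unet_loader_utils.py` can be tried without a UNet. The sketch below is a simplified stand-in written for illustration: `expand_scales` and `translate` are hypothetical names, the state-dict validation is omitted, and the final renaming is shown as a separate step. The inputs are the ones from the docstring example.

```python
import copy
from typing import Dict, List, Union


def expand_scales(
    scales: Union[float, Dict],
    blocks_with_transformer: Dict[str, List[int]],
    transformer_per_block: Dict[str, int],
) -> Union[float, Dict]:
    """Simplified stand-in: no state-dict validation, no renaming of keys."""
    if not isinstance(scales, dict):
        return scales  # a single number applies everywhere

    scales = copy.deepcopy(scales)
    scales.setdefault("mid", 1)

    for updown in ["up", "down"]:
        per_block = scales.pop(updown, 1)
        # A number for the whole part is copied to each block with attention.
        if not isinstance(per_block, dict):
            per_block = {f"block_{i}": per_block for i in blocks_with_transformer[updown]}

        for i in blocks_with_transformer[updown]:
            value = per_block[f"block_{i}"]
            # A number for a block is copied to each of its transformer layers.
            if not isinstance(value, list):
                value = [value] * transformer_per_block[updown]
            for tf_idx, v in enumerate(value):
                scales[f"{updown}.block_{i}.{tf_idx}"] = v

    return scales


def translate(name: str) -> str:
    """Same mapping as `_translate_into_actual_layer_name` in the diff."""
    if name == "mid":
        return "mid_block.attentions.0"
    updown, block, attn = name.split(".")
    updown = updown.replace("down", "down_blocks").replace("up", "up_blocks")
    return ".".join((updown, block.replace("block_", ""), "attentions." + attn))


expanded = expand_scales(
    {"down": 2, "mid": 3, "up": {"block_0": 4, "block_1": [5, 6, 7]}},
    blocks_with_transformer={"down": [1, 2], "up": [0, 1]},
    transformer_per_block={"down": 2, "up": 3},
)
for name in sorted(expanded):
    print(f"{name:16} -> {translate(name):28} scale={expanded[name]}")
```

Note that the function in the diff returns the translated keys (for example `down_blocks.1.attentions.0`), while its docstring shows the intermediate user-facing keys.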

@@ -401,6 +401,40 @@ class StableDiffusionSAGPipeline(DiffusionPipeline, StableDiffusionMixin, Textua

|

||||

|

||||

return image_embeds, uncond_image_embeds

|

||||

|

||||

def prepare_ip_adapter_image_embeds(

|

||||

self, ip_adapter_image, ip_adapter_image_embeds, device, num_images_per_prompt, do_classifier_free_guidance

|

||||

):

|

||||

if ip_adapter_image_embeds is None:

|

||||

if not isinstance(ip_adapter_image, list):

|

||||

ip_adapter_image = [ip_adapter_image]

|

||||

|

||||

if len(ip_adapter_image) != len(self.unet.encoder_hid_proj.image_projection_layers):

|

||||

raise ValueError(

|

||||

f"`ip_adapter_image` must have same length as the number of IP Adapters. Got {len(ip_adapter_image)} images and {len(self.unet.encoder_hid_proj.image_projection_layers)} IP Adapters."

|

||||

)

|

||||

|

||||

image_embeds = []

|

||||

for single_ip_adapter_image, image_proj_layer in zip(

|

||||

ip_adapter_image, self.unet.encoder_hid_proj.image_projection_layers

|

||||

):

|

||||

output_hidden_state = not isinstance(image_proj_layer, ImageProjection)

|

||||

single_image_embeds, single_negative_image_embeds = self.encode_image(

|

||||

single_ip_adapter_image, device, 1, output_hidden_state

|

||||

)

|

||||

single_image_embeds = torch.stack([single_image_embeds] * num_images_per_prompt, dim=0)

|

||||

single_negative_image_embeds = torch.stack(

|

||||

[single_negative_image_embeds] * num_images_per_prompt, dim=0

|

||||

)

|

||||

|

||||

if do_classifier_free_guidance:

|

||||

single_image_embeds = torch.cat([single_negative_image_embeds, single_image_embeds])

|

||||

single_image_embeds = single_image_embeds.to(device)

|

||||

|

||||

image_embeds.append(single_image_embeds)

|

||||

else:

|

||||

image_embeds = ip_adapter_image_embeds

|

||||

return image_embeds

|

||||

|

||||

# Copied from diffusers.pipelines.stable_diffusion.pipeline_stable_diffusion.StableDiffusionPipeline.run_safety_checker

|

||||

def run_safety_checker(self, image, device, dtype):

|

||||

if self.safety_checker is None:

|

||||

@@ -535,6 +569,7 @@ class StableDiffusionSAGPipeline(DiffusionPipeline, StableDiffusionMixin, Textua

|

||||

prompt_embeds: Optional[torch.FloatTensor] = None,

|

||||

negative_prompt_embeds: Optional[torch.FloatTensor] = None,

|

||||

ip_adapter_image: Optional[PipelineImageInput] = None,

|

||||

ip_adapter_image_embeds: Optional[List[torch.FloatTensor]] = None,

|

||||

output_type: Optional[str] = "pil",

|

||||

return_dict: bool = True,

|

||||

callback: Optional[Callable[[int, int, torch.FloatTensor], None]] = None,

|

||||

@@ -583,6 +618,9 @@ class StableDiffusionSAGPipeline(DiffusionPipeline, StableDiffusionMixin, Textua

|

||||

not provided, `negative_prompt_embeds` are generated from the `negative_prompt` input argument.

|

||||

ip_adapter_image: (`PipelineImageInput`, *optional*):

|

||||

Optional image input to work with IP Adapters.

|

||||

ip_adapter_image_embeds (`List[torch.FloatTensor]`, *optional*):

|

||||

Pre-generated image embeddings for IP-Adapter. If not

|

||||

provided, embeddings are computed from the `ip_adapter_image` input argument.

|

||||

output_type (`str`, *optional*, defaults to `"pil"`):

|

||||

The output format of the generated image. Choose between `PIL.Image` or `np.array`.

|

||||

return_dict (`bool`, *optional*, defaults to `True`):

|

||||

@@ -636,13 +674,24 @@ class StableDiffusionSAGPipeline(DiffusionPipeline, StableDiffusionMixin, Textua

|

||||

# `sag_scale = 0` means no self-attention guidance

|

||||

do_self_attention_guidance = sag_scale > 0.0

|

||||

|

||||

if ip_adapter_image is not None:

|

||||

output_hidden_state = False if isinstance(self.unet.encoder_hid_proj, ImageProjection) else True

|

||||

image_embeds, negative_image_embeds = self.encode_image(

|

||||

ip_adapter_image, device, num_images_per_prompt, output_hidden_state

|

||||

if ip_adapter_image is not None or ip_adapter_image_embeds is not None:

|

||||

ip_adapter_image_embeds = self.prepare_ip_adapter_image_embeds(

|

||||

ip_adapter_image,

|

||||

ip_adapter_image_embeds,

|

||||

device,

|

||||

batch_size * num_images_per_prompt,

|

||||

do_classifier_free_guidance,

|

||||

)

|

||||

|

||||

if do_classifier_free_guidance:

|

||||

image_embeds = torch.cat([negative_image_embeds, image_embeds])

|

||||

image_embeds = []

|

||||

negative_image_embeds = []

|

||||

for tmp_image_embeds in ip_adapter_image_embeds:

|

||||

single_negative_image_embeds, single_image_embeds = tmp_image_embeds.chunk(2)

|

||||

image_embeds.append(single_image_embeds)

|

||||

negative_image_embeds.append(single_negative_image_embeds)

|

||||

else:

|

||||

image_embeds = ip_adapter_image_embeds

|

||||

|

||||

# 3. Encode input prompt

|

||||

prompt_embeds, negative_prompt_embeds = self.encode_prompt(

|

||||

@@ -687,8 +736,18 @@ class StableDiffusionSAGPipeline(DiffusionPipeline, StableDiffusionMixin, Textua

|

||||

extra_step_kwargs = self.prepare_extra_step_kwargs(generator, eta)

|

||||

|

||||

# 6.1 Add image embeds for IP-Adapter

|

||||

added_cond_kwargs = {"image_embeds": image_embeds} if ip_adapter_image is not None else None

|

||||

added_uncond_kwargs = {"image_embeds": negative_image_embeds} if ip_adapter_image is not None else None

|

||||

added_cond_kwargs = (

|

||||

{"image_embeds": image_embeds}

|

||||

if ip_adapter_image is not None or ip_adapter_image_embeds is not None

|

||||

else None

|

||||

)

|

||||

|

||||

if do_classifier_free_guidance:

|

||||

added_uncond_kwargs = (

|

||||

{"image_embeds": negative_image_embeds}

|

||||

if ip_adapter_image is not None or ip_adapter_image_embeds is not None

|

||||

else None

|

||||

)

|

||||

|

||||

# 7. Denoising loop

|

||||

store_processor = CrossAttnStoreProcessor()

|

||||

|

||||

@@ -127,6 +127,9 @@ class UniPCMultistepScheduler(SchedulerMixin, ConfigMixin):

|

||||

Sample Steps are Flawed](https://huggingface.co/papers/2305.08891) for more information.

|

||||

steps_offset (`int`, defaults to 0):

|

||||

An offset added to the inference steps, as required by some model families.

|

||||

final_sigmas_type (`str`, defaults to `"zero"`):

|

||||

The final `sigma` value for the noise schedule during the sampling process. If `"sigma_min"`, the final sigma

|

||||

is the same as the last sigma in the training schedule. If `"zero"`, the final sigma is set to 0.

|

||||

"""

|

||||

|

||||

_compatibles = [e.name for e in KarrasDiffusionSchedulers]

|

||||

@@ -153,6 +156,7 @@ class UniPCMultistepScheduler(SchedulerMixin, ConfigMixin):

|

||||

use_karras_sigmas: Optional[bool] = False,

|

||||

timestep_spacing: str = "linspace",

|

||||

steps_offset: int = 0,

|

||||

final_sigmas_type: Optional[str] = "zero", # "zero", "sigma_min"

|

||||

):

|

||||

if trained_betas is not None:

|

||||

self.betas = torch.tensor(trained_betas, dtype=torch.float32)

|

||||

@@ -265,10 +269,25 @@ class UniPCMultistepScheduler(SchedulerMixin, ConfigMixin):

|

||||

sigmas = np.flip(sigmas).copy()

|

||||

sigmas = self._convert_to_karras(in_sigmas=sigmas, num_inference_steps=num_inference_steps)

|

||||

timesteps = np.array([self._sigma_to_t(sigma, log_sigmas) for sigma in sigmas]).round()

|

||||

sigmas = np.concatenate([sigmas, sigmas[-1:]]).astype(np.float32)

|

||||

if self.config.final_sigmas_type == "sigma_min":

|

||||

sigma_last = sigmas[-1]

|

||||

elif self.config.final_sigmas_type == "zero":

|

||||

sigma_last = 0

|

||||

else:

|

||||

raise ValueError(

|

||||

f"`final_sigmas_type` must be one of 'zero' or 'sigma_min', but got {self.config.final_sigmas_type}"

|

||||

)

|

||||

sigmas = np.concatenate([sigmas, [sigma_last]]).astype(np.float32)

|

||||

else:

|

||||

sigmas = np.interp(timesteps, np.arange(0, len(sigmas)), sigmas)

|

||||

sigma_last = ((1 - self.alphas_cumprod[0]) / self.alphas_cumprod[0]) ** 0.5

|

||||

if self.config.final_sigmas_type == "sigma_min":

|

||||

sigma_last = ((1 - self.alphas_cumprod[0]) / self.alphas_cumprod[0]) ** 0.5

|

||||

elif self.config.final_sigmas_type == "zero":

|

||||

sigma_last = 0

|

||||

else:

|

||||

raise ValueError(

|

||||

f"`final_sigmas_type` must be one of 'zero' or 'sigma_min', but got {self.config.final_sigmas_type}"

|

||||

)

|

||||

sigmas = np.concatenate([sigmas, [sigma_last]]).astype(np.float32)

|

||||

|

||||

self.sigmas = torch.from_numpy(sigmas)

|

||||

|

||||
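Both branches of the scheduler hunk choose the last sigma in the same way, which can be sketched in plain Python. This is an illustration under assumptions, not the scheduler code: `append_final_sigma` is a hypothetical helper, plain lists stand in for NumPy arrays, and `sigma_min` is passed in by the caller rather than derived from the schedule.

```python
from typing import List


def append_final_sigma(sigmas: List[float], final_sigmas_type: str, sigma_min: float) -> List[float]:
    """Hypothetical helper: return `sigmas` with the final value appended."""
    if final_sigmas_type == "sigma_min":
        sigma_last = sigma_min
    elif final_sigmas_type == "zero":
        sigma_last = 0.0
    else:
        raise ValueError(
            f"`final_sigmas_type` must be one of 'zero' or 'sigma_min', but got {final_sigmas_type}"
        )
    # The schedule gains exactly one entry, whichever option is chosen.
    return sigmas + [sigma_last]


schedule = [14.6, 3.2, 0.9, 0.03]  # made-up values for illustration
print(append_final_sigma(schedule, "zero", sigma_min=0.03))
print(append_final_sigma(schedule, "sigma_min", sigma_min=0.03))
```

In the diff, the Karras branch takes the last sigma of the converted schedule as the `"sigma_min"` value, and the other branch computes it from `alphas_cumprod[0]`.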

@@ -230,16 +230,26 @@ def delete_adapter_layers(model, adapter_name):

|

||||

def set_weights_and_activate_adapters(model, adapter_names, weights):

|

||||

from peft.tuners.tuners_utils import BaseTunerLayer

|

||||

|

||||

def get_module_weight(weight_for_adapter, module_name):

|

||||

if not isinstance(weight_for_adapter, dict):

|

||||

# If weight_for_adapter is a single number, always return it.

|

||||

return weight_for_adapter

|

||||

|

||||

for layer_name, weight_ in weight_for_adapter.items():

|

||||

if layer_name in module_name:

|

||||

return weight_

|

||||

raise RuntimeError(f"No LoRA weight found for module {module_name}.")

|

||||

|

||||

# iterate over each adapter, make it active and set the corresponding scaling weight

|

||||

for adapter_name, weight in zip(adapter_names, weights):

|

||||

for module in model.modules():

|

||||

for module_name, module in model.named_modules():

|

||||

if isinstance(module, BaseTunerLayer):

|

||||

# For backward compatibility with previous PEFT versions

|

||||

if hasattr(module, "set_adapter"):

|

||||

module.set_adapter(adapter_name)

|

||||

else:

|

||||

module.active_adapter = adapter_name

|

||||

module.set_scale(adapter_name, weight)

|

||||

module.set_scale(adapter_name, get_module_weight(weight, module_name))

|

||||

|

||||

# set multiple active adapters

|

||||

for module in model.modules():

|

||||

|

||||
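The per-module lookup added in `set_weights_and_activate_adapters` can be exercised without PEFT. The sketch below copies `get_module_weight` out of its enclosing function; the module path is made up for illustration and is not taken from a real model.

```python
from typing import Dict, Union


def get_module_weight(weight_for_adapter: Union[float, Dict[str, float]], module_name: str) -> float:
    if not isinstance(weight_for_adapter, dict):
        # A single number applies to every module.
        return weight_for_adapter

    # Keys are layer prefixes such as "mid_block.attentions.0"; the first
    # key that occurs as a substring of the module name wins.
    for layer_name, weight in weight_for_adapter.items():
        if layer_name in module_name:
            return weight
    raise RuntimeError(f"No LoRA weight found for module {module_name}.")


scales = {"down_blocks.1.attentions.0": 2.0, "mid_block.attentions.0": 3.0}
module_name = "down_blocks.1.attentions.0.transformer_blocks.0.attn1.to_q"  # hypothetical path

print(get_module_weight(scales, module_name))
print(get_module_weight(0.7, module_name))
```

Because matching is by substring, the lookup relies on the expanded dict covering every layer that carries LoRA weights. A module with no matching key raises `RuntimeError` instead of silently falling back to a default.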

@@ -105,10 +105,21 @@ def numpy_cosine_similarity_distance(a, b):

|

||||

return distance

|

||||

|

||||

|

||||

def print_tensor_test(tensor, filename="test_corrections.txt", expected_tensor_name="expected_slice"):

|

||||

def print_tensor_test(

|

||||

tensor,

|

||||

limit_to_slices=None,

|

||||

max_torch_print=None,

|

||||

filename="test_corrections.txt",

|

||||

expected_tensor_name="expected_slice",

|

||||

):

|

||||

if max_torch_print:

|

||||

torch.set_printoptions(threshold=10_000)

|

||||

|

||||

test_name = os.environ.get("PYTEST_CURRENT_TEST")

|

||||

if not torch.is_tensor(tensor):

|

||||

tensor = torch.from_numpy(tensor)

|

||||

if limit_to_slices:

|

||||

tensor = tensor[0, -3:, -3:, -1]

|

||||

|

||||

tensor_str = str(tensor.detach().cpu().flatten().to(torch.float32)).replace("\n", "")

|

||||

# format is usually:

|

||||

@@ -117,7 +128,7 @@ def print_tensor_test(tensor, filename="test_corrections.txt", expected_tensor_n

|

||||

test_file, test_class, test_fn = test_name.split("::")

|

||||

test_fn = test_fn.split()[0]

|

||||

with open(filename, "a") as f:

|

||||

print(";".join([test_file, test_class, test_fn, output_str]), file=f)

|

||||

print("::".join([test_file, test_class, test_fn, output_str]), file=f)

|

||||

|

||||

|

||||

def get_tests_dir(append_path=None):

|

||||

|

||||

@@ -15,6 +15,7 @@

|

||||

import os

|

||||

import tempfile

|

||||

import unittest

|

||||

from itertools import product

|

||||

|

||||

import numpy as np

|

||||

import torch

|

||||

@@ -762,6 +763,218 @@ class PeftLoraLoaderMixinTests:

|

||||

"output with no lora and output with lora disabled should give same results",

|

||||

)

|

||||

|

||||

def test_simple_inference_with_text_unet_block_scale(self):

|

||||

"""

|

||||

Tests a simple inference with lora attached to text encoder and unet, attaches

|

||||

one adapter and sets different weights for different blocks (i.e. block lora)

|

||||

"""

|

||||

for scheduler_cls in [DDIMScheduler, LCMScheduler]:

|

||||

components, text_lora_config, unet_lora_config = self.get_dummy_components(scheduler_cls)

|

||||

pipe = self.pipeline_class(**components)

|

||||

pipe = pipe.to(torch_device)

|

||||

pipe.set_progress_bar_config(disable=None)

|

||||

_, _, inputs = self.get_dummy_inputs(with_generator=False)

|

||||

|

||||

output_no_lora = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

pipe.text_encoder.add_adapter(text_lora_config, "adapter-1")

|

||||

pipe.unet.add_adapter(unet_lora_config, "adapter-1")

|

||||

|

||||

self.assertTrue(check_if_lora_correctly_set(pipe.text_encoder), "Lora not correctly set in text encoder")

|

||||

self.assertTrue(check_if_lora_correctly_set(pipe.unet), "Lora not correctly set in Unet")

|

||||

|

||||

if self.has_two_text_encoders:

|

||||

pipe.text_encoder_2.add_adapter(text_lora_config, "adapter-1")

|

||||

self.assertTrue(

|

||||

check_if_lora_correctly_set(pipe.text_encoder_2), "Lora not correctly set in text encoder 2"

|

||||

)

|

||||

|

||||

weights_1 = {"text_encoder": 2, "unet": {"down": 5}}

|

||||

pipe.set_adapters("adapter-1", weights_1)

|

||||

output_weights_1 = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

weights_2 = {"unet": {"up": 5}}

|

||||

pipe.set_adapters("adapter-1", weights_2)

|

||||

output_weights_2 = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

self.assertFalse(

|

||||

np.allclose(output_weights_1, output_weights_2, atol=1e-3, rtol=1e-3),

|

||||

"LoRA weights 1 and 2 should give different results",

|

||||

)

|

||||

self.assertFalse(

|

||||

np.allclose(output_no_lora, output_weights_1, atol=1e-3, rtol=1e-3),

|

||||

"No adapter and LoRA weights 1 should give different results",

|

||||

)

|

||||

self.assertFalse(

|

||||

np.allclose(output_no_lora, output_weights_2, atol=1e-3, rtol=1e-3),

|

||||

"No adapter and LoRA weights 2 should give different results",

|

||||

)

|

||||

|

||||

pipe.disable_lora()

|

||||

output_disabled = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

self.assertTrue(

|

||||

np.allclose(output_no_lora, output_disabled, atol=1e-3, rtol=1e-3),

|

||||

"output with no lora and output with lora disabled should give same results",

|

||||

)

|

||||

|

||||

def test_simple_inference_with_text_unet_multi_adapter_block_lora(self):

|

||||

"""

|

||||

Tests a simple inference with lora attached to text encoder and unet, attaches

|

||||

multiple adapters and sets different weights for different blocks (i.e. block lora)

|

||||

"""

|

||||

for scheduler_cls in [DDIMScheduler, LCMScheduler]:

|

||||

components, text_lora_config, unet_lora_config = self.get_dummy_components(scheduler_cls)

|

||||

pipe = self.pipeline_class(**components)

|

||||

pipe = pipe.to(torch_device)

|

||||

pipe.set_progress_bar_config(disable=None)

|

||||

_, _, inputs = self.get_dummy_inputs(with_generator=False)

|

||||

|

||||

output_no_lora = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

pipe.text_encoder.add_adapter(text_lora_config, "adapter-1")

|

||||

pipe.text_encoder.add_adapter(text_lora_config, "adapter-2")

|

||||

|

||||

pipe.unet.add_adapter(unet_lora_config, "adapter-1")

|

||||

pipe.unet.add_adapter(unet_lora_config, "adapter-2")

|

||||

|

||||

self.assertTrue(check_if_lora_correctly_set(pipe.text_encoder), "Lora not correctly set in text encoder")

|

||||

self.assertTrue(check_if_lora_correctly_set(pipe.unet), "Lora not correctly set in Unet")

|

||||

|

||||

if self.has_two_text_encoders:

|

||||

pipe.text_encoder_2.add_adapter(text_lora_config, "adapter-1")

|

||||

pipe.text_encoder_2.add_adapter(text_lora_config, "adapter-2")

|

||||

self.assertTrue(

|

||||

check_if_lora_correctly_set(pipe.text_encoder_2), "Lora not correctly set in text encoder 2"

|

||||

)

|

||||

|

||||

scales_1 = {"text_encoder": 2, "unet": {"down": 5}}

|

||||

scales_2 = {"unet": {"down": 5, "mid": 5}}

|

||||

pipe.set_adapters("adapter-1", scales_1)

|

||||

|

||||

output_adapter_1 = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

pipe.set_adapters("adapter-2", scales_2)

|

||||

output_adapter_2 = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

pipe.set_adapters(["adapter-1", "adapter-2"], [scales_1, scales_2])

|

||||

|

||||

output_adapter_mixed = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

# Each adapter on its own and the mixed setup should all give different results

|

||||

self.assertFalse(

|

||||

np.allclose(output_adapter_1, output_adapter_2, atol=1e-3, rtol=1e-3),

|

||||

"Adapter 1 and 2 should give different results",

|

||||

)

|

||||

|

||||

self.assertFalse(

|

||||

np.allclose(output_adapter_1, output_adapter_mixed, atol=1e-3, rtol=1e-3),

|

||||

"Adapter 1 and mixed adapters should give different results",

|

||||

)

|

||||

|

||||

self.assertFalse(

|

||||

np.allclose(output_adapter_2, output_adapter_mixed, atol=1e-3, rtol=1e-3),

|

||||

"Adapter 2 and mixed adapters should give different results",

|

||||

)

|

||||

|

||||

pipe.disable_lora()

|

||||

|

||||

output_disabled = pipe(**inputs, generator=torch.manual_seed(0)).images

|

||||

|

||||

self.assertTrue(

|

||||

np.allclose(output_no_lora, output_disabled, atol=1e-3, rtol=1e-3),

|

||||

"output with no lora and output with lora disabled should give same results",

|

||||

)

|

||||

|

||||

# a mismatching number of adapter_names and adapter_weights should raise an error

|

||||

with self.assertRaises(ValueError):

|

||||

pipe.set_adapters(["adapter-1", "adapter-2"], [scales_1])

|

||||

|

||||

def test_simple_inference_with_text_unet_block_scale_for_all_dict_options(self):

|

||||

"""Tests that any valid combination of lora block scales can be used in pipe.set_adapters"""

|

||||

|

||||

def updown_options(blocks_with_tf, layers_per_block, value):

|

||||

"""

|

||||

Generate every possible combination for how a lora weight dict for the up/down part can be.

|

||||

E.g. 2, {"block_1": 2}, {"block_1": [2,2,2]}, {"block_1": 2, "block_2": [2,2,2]}, ...

|

||||

"""

|

||||

num_val = value

|

||||

list_val = [value] * layers_per_block

|

||||

|

||||

node_opts = [None, num_val, list_val]

|

||||

node_opts_foreach_block = [node_opts] * len(blocks_with_tf)

|

||||

|

||||

updown_opts = [num_val]

|

||||

for nodes in product(*node_opts_foreach_block):

|

||||

if all(n is None for n in nodes):

|

||||

continue

|

||||

opt = {}

|

||||

for b, n in zip(blocks_with_tf, nodes):

|

||||

if n is not None:

|

||||

opt["block_" + str(b)] = n

|

||||

updown_opts.append(opt)

|

||||

return updown_opts

|

||||

|

||||

def all_possible_dict_opts(unet, value):

|

||||

"""

|

||||

Generate every possible combination for how a lora weight dict can be.

|

||||

E.g. 2, {"unet": {"down": 2}}, {"unet": {"down": [2,2,2]}}, {"unet": {"mid": 2, "up": [2,2,2]}}, ...

|

||||

"""

|

||||

|

||||

down_blocks_with_tf = [i for i, d in enumerate(unet.down_blocks) if hasattr(d, "attentions")]

|

||||

up_blocks_with_tf = [i for i, u in enumerate(unet.up_blocks) if hasattr(u, "attentions")]

|

||||

|

||||

layers_per_block = unet.config.layers_per_block

|

||||

|

||||

text_encoder_opts = [None, value]

|

||||

text_encoder_2_opts = [None, value]