mirror of

https://github.com/huggingface/diffusers.git

synced 2026-02-22 10:50:35 +08:00

Compare commits

7 Commits

correct_gu ... v0.19.1-pa

| Author | SHA1 | Date |
|---|---|---|
| | aa4634a7fa | |
| | 0709650e9d | |
| | a9829164f4 | |
| | 49c95178ad | |
| | c2f755bc62 | |
| | 2fb877b66c | |
| | ef9824f9f7 | |

@@ -39,8 +39,8 @@ Currently AutoPipeline support the Text-to-Image, Image-to-Image, and Inpainting

- [Stable Diffusion Controlnet](./api/pipelines/controlnet)
- [Stable Diffusion XL](./stable_diffusion/stable_diffusion_xl)
- [IF](./if)
- [Kandinsky](./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)
- [Kandinsky 2.2]()(./kandinsky)
- [Kandinsky](./kandinsky)
- [Kandinsky 2.2](./kandinsky)


## AutoPipelineForText2Image

@@ -105,6 +105,30 @@ One cheeseburger monster coming up! Enjoy!

<Tip>

We also provide an end-to-end Kandinsky pipeline [`KandinskyCombinedPipeline`], which combines both the prior pipeline and text-to-image pipeline, and lets you perform inference in a single step. You can create the combined pipeline with the [`~AutoPipelineForText2Image.from_pretrained`] method:

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained(
    "kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16
)
pipe.enable_model_cpu_offload()
```

Under the hood, it will automatically load both [`KandinskyPriorPipeline`] and [`KandinskyPipeline`]. To generate images, you no longer need to call both pipelines and pass the outputs from one to another. You only need to call the combined pipeline once. You can set different `guidance_scale` and `num_inference_steps` for the prior pipeline with the `prior_guidance_scale` and `prior_num_inference_steps` arguments.

```python
prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, guidance_scale=4.0, height=768, width=768).images[0]
```

</Tip>

The Kandinsky model works extremely well with creative prompts. Here is some of the amazing art that can be created using the exact same process but with different prompts.

```python

|

||||

@@ -187,6 +211,34 @@ out.images[0].save("fantasy_land.png")

|

||||

|

||||

|

||||

|

||||

<Tip>

|

||||

|

||||

You can also use the [`KandinskyImg2ImgCombinedPipeline`] for end-to-end image-to-image generation with Kandinsky 2.1

|

||||

|

||||

```python

|

||||

from diffusers import AutoPipelineForImage2Image

|

||||

import torch

|

||||

import requests

|

||||

from io import BytesIO

|

||||

from PIL import Image

|

||||

import os

|

||||

|

||||

pipe = AutoPipelineForImage2Image.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

||||

pipe.enable_model_cpu_offload()

|

||||

|

||||

prompt = "A fantasy landscape, Cinematic lighting"

|

||||

negative_prompt = "low quality, bad quality"

|

||||

|

||||

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

|

||||

|

||||

response = requests.get(url)

|

||||

original_image = Image.open(BytesIO(response.content)).convert("RGB")

|

||||

original_image.thumbnail((768, 768))

|

||||

|

||||

image = pipe(prompt=prompt, image=original_image, strength=0.3).images[0]

|

||||

```

|

||||

</Tip>

|

||||

|

||||

### Text Guided Inpainting Generation

|

||||

|

||||

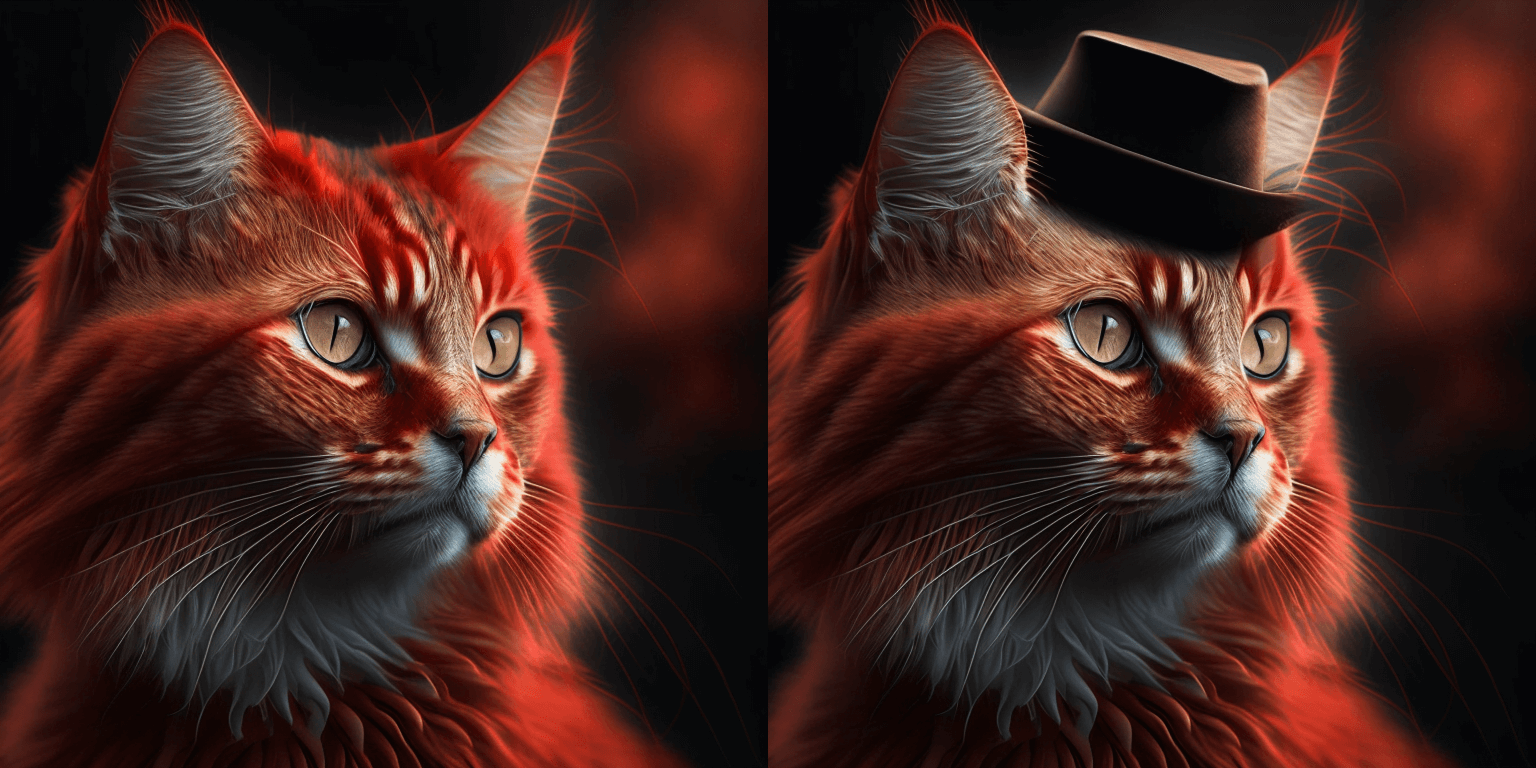

You can use [`KandinskyInpaintPipeline`] to edit images. In this example, we will add a hat to the portrait of a cat.

|

||||

@@ -231,6 +283,33 @@ image.save("cat_with_hat.png")

|

||||

```

<Tip>

To use the [`KandinskyInpaintCombinedPipeline`] to perform end-to-end image inpainting generation, you can run the code below instead:

```python
import torch
from diffusers import AutoPipelineForInpainting

# `prompt`, `original_image`, and `mask` are assumed to be defined as in the inpainting example above
pipe = AutoPipelineForInpainting.from_pretrained("kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()
image = pipe(prompt=prompt, image=original_image, mask_image=mask).images[0]
```

</Tip>

🚨🚨🚨 __Breaking change for Kandinsky Mask Inpainting__ 🚨🚨🚨

We introduced a breaking change for the Kandinsky inpainting pipeline in the following pull request: https://github.com/huggingface/diffusers/pull/4207. Previously we accepted a mask format where black pixels represent the masked-out area. This is inconsistent with all other pipelines in diffusers. We have changed the mask format in Kandinsky and now use white pixels instead.
Please upgrade your inpainting code accordingly. If you are using Kandinsky Inpaint in production, you now need to change the mask to:

```python
# For PIL input
import PIL.ImageOps
mask = PIL.ImageOps.invert(mask)

# For PyTorch and Numpy input
mask = 1 - mask
```
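
To make the new convention concrete, here is a minimal, dependency-free sketch of the inversion on a tiny binary mask stored as nested lists. The helper name `invert_mask` and the list-based representation are assumptions made for illustration; with NumPy arrays or PyTorch tensors, `mask = 1 - mask` does the same thing.

```python
def invert_mask(mask):
    """Flip a binary mask: 0 becomes 1 and 1 becomes 0."""
    return [[1 - pixel for pixel in row] for row in mask]


# Old convention: 0 (black) marks the area to repaint.
old_mask = [
    [1, 1, 1],
    [1, 0, 0],
    [1, 0, 0],
]

# New convention: 1 (white) marks the area to repaint.
new_mask = invert_mask(old_mask)
print(new_mask)  # [[0, 0, 0], [0, 1, 1], [0, 1, 1]]
```

Inverting twice returns the original mask, so code that already produces white-for-masked input should not apply the inversion.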

### Interpolate

The [`KandinskyPriorPipeline`] also comes with a cool utility function that will allow you to interpolate the latent space of different images and texts super easily. Here is an example of how you can create an Impressionist-style portrait for your pet based on "The Starry Night".

@@ -11,7 +11,22 @@ specific language governing permissions and limitations under the License.

The Kandinsky 2.2 release includes robust new text-to-image models that support text-to-image generation, image-to-image generation, image interpolation, and text-guided image inpainting. The general workflow to perform these tasks using Kandinsky 2.2 is the same as in Kandinsky 2.1. First, you will need to use a prior pipeline to generate image embeddings based on your text prompt, and then use one of the image decoding pipelines to generate the output image. The only difference is that in Kandinsky 2.2, all of the decoding pipelines no longer accept the `prompt` input, and the image generation process is conditioned with only `image_embeds` and `negative_image_embeds`.

Let's look at an example of how to perform text-to-image generation using Kandinsky 2.2.
Same as with Kandinsky 2.1, the easiest way to perform text-to-image generation is to use the combined Kandinsky pipeline. This process is exactly the same as Kandinsky 2.1. All you need to do is to replace the Kandinsky 2.1 checkpoint with 2.2.

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()

prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, height=768, width=768).images[0]
```

Now, let's look at an example where we take separate steps to run the prior pipeline and text-to-image pipeline. This way, we can understand what's happening under the hood and how Kandinsky 2.2 differs from Kandinsky 2.1.

First, let's create the prior pipeline and text-to-image pipeline with Kandinsky 2.2 checkpoints.

@@ -56,7 +56,7 @@ if is_wandb_available():

    import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -59,7 +59,7 @@ if is_wandb_available():

    import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -58,7 +58,7 @@ if is_wandb_available():

    import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.18.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -57,7 +57,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -59,7 +59,7 @@ if is_wandb_available():

    import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -36,7 +36,7 @@ from diffusers.utils import check_min_version

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

# Cache compiled models across invocations of this script.
cc.initialize_cache(os.path.expanduser("~/.cache/jax/compilation_cache"))

@@ -69,7 +69,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -58,7 +58,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -52,7 +52,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -55,7 +55,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.18.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -54,7 +54,7 @@ if is_wandb_available():

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -33,7 +33,7 @@ from diffusers.utils import check_min_version

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -48,7 +48,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")

@@ -78,7 +78,7 @@ else:

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__)

@@ -56,7 +56,7 @@ else:

# ------------------------------------------------------------------------------

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = logging.getLogger(__name__)

@@ -30,7 +30,7 @@ from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.
check_min_version("0.19.0.dev0")
check_min_version("0.19.0")

logger = get_logger(__name__, log_level="INFO")
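
The hunks above all raise the minimum version from a `.dev0` pre-release to the final `0.19.0`. As a rough illustration of why that distinction matters, the sketch below orders version strings so that a `.dev0` build sorts before the final release with the same numbers. `parse_version` and `meets_minimum` are hypothetical helpers written for this example. They are not the implementation of `check_min_version`, and they only handle the `x.y.z` and `x.y.z.devN` forms.

```python
def parse_version(version):
    """Turn 'x.y.z' or 'x.y.z.devN' into a sortable tuple (simplified sketch)."""
    parts = version.split(".")
    release = tuple(int(p) for p in parts[:3])
    if len(parts) > 3 and parts[3].startswith("dev"):
        # Dev builds sort before the final release: flag 0, then the dev number.
        return release + (0, int(parts[3][3:]))
    # Final releases get flag 1 so they sort after any dev build.
    return release + (1, 0)


def meets_minimum(installed, minimum):
    """Return True when the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)


print(meets_minimum("0.19.0.dev0", "0.19.0"))  # False
print(meets_minimum("0.19.1", "0.19.0"))       # True
print(meets_minimum("0.19.0", "0.19.0.dev0"))  # True
```

Under this ordering, an environment still on the development build would no longer satisfy the example scripts once the minimum is the final release.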

2

setup.py

@@ -233,7 +233,7 @@ install_requires = [

setup(
    name="diffusers",
    version="0.19.0.dev0",  # expected format is one of x.y.z.dev0, or x.y.z.rc1 or x.y.z (no to dashes, yes to dots)
    version="0.19.1",  # expected format is one of x.y.z.dev0, or x.y.z.rc1 or x.y.z (no to dashes, yes to dots)
    description="Diffusers",
    long_description=open("README.md", "r", encoding="utf-8").read(),
    long_description_content_type="text/markdown",
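
The comment on the `version` line names three accepted shapes: `x.y.z.dev0`, `x.y.z.rc1`, and `x.y.z`, with dots rather than dashes. A minimal sketch of a check for that format follows. The regular expression and the name `is_valid_version` are assumptions made for illustration and are not part of `setup.py`. The pattern also accepts any dev or rc number, which is broader than the two literal examples in the comment.

```python
import re

# x.y.z, optionally followed by ".devN" or ".rcN"; dots only, no dashes.
VERSION_PATTERN = re.compile(r"\d+\.\d+\.\d+(\.(dev|rc)\d+)?")


def is_valid_version(version):
    """Return True when the whole string matches the expected version shape."""
    return VERSION_PATTERN.fullmatch(version) is not None


print(is_valid_version("0.19.0.dev0"))  # True
print(is_valid_version("0.19.1"))       # True
print(is_valid_version("0.19.1-rc1"))   # False
```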

@@ -1,4 +1,4 @@

__version__ = "0.19.0.dev0"
__version__ = "0.19.1"

from .configuration_utils import ConfigMixin
from .utils import (

@@ -14,6 +14,7 @@

from typing import Optional

import torch.nn.functional as F
from torch import nn

@@ -91,7 +92,9 @@ class LoRACompatibleConv(nn.Conv2d):

    def forward(self, x):
        if self.lora_layer is None:
            return super().forward(x)
            # make sure to the functional Conv2D function as otherwise torch.compile's graph will break
            # see: https://github.com/huggingface/diffusers/pull/4315
            return F.conv2d(x, self.weight, self.bias, self.stride, self.padding, self.dilation, self.groups)
        else:
            return super().forward(x) + self.lora_layer(x)
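
The control flow in `forward` is a simple dispatch: with no LoRA layer attached, only the base convolution runs; otherwise the LoRA output is added to the base output. The sketch below mirrors that dispatch with plain Python floats instead of tensors. `ScalarLayer` and its attributes are hypothetical stand-ins for illustration, not diffusers classes, and the example says nothing about `torch.compile` behavior.

```python
class ScalarLayer:
    """Toy stand-in for a LoRA-compatible layer, operating on floats."""

    def __init__(self, weight, bias):
        self.weight = weight
        self.bias = bias
        self.lora_layer = None  # optional callable added on top of the base output

    def base_forward(self, x):
        return self.weight * x + self.bias

    def forward(self, x):
        if self.lora_layer is None:
            # Fast path: only the base computation runs.
            return self.base_forward(x)
        # LoRA path: base output plus the extra correction.
        return self.base_forward(x) + self.lora_layer(x)


layer = ScalarLayer(weight=2.0, bias=1.0)
print(layer.forward(3.0))  # 7.0

layer.lora_layer = lambda x: 0.5 * x
print(layer.forward(3.0))  # 8.5
```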

@@ -1474,11 +1474,25 @@ class DiffusionPipeline(ConfigMixin):

            user_agent=user_agent,
        )

        if pipeline_class._load_connected_pipes:
        # retrieve pipeline class from local file
        cls_name = cls.load_config(os.path.join(cached_folder, "model_index.json")).get("_class_name", None)
        pipeline_class = getattr(diffusers, cls_name, None)

        if pipeline_class is not None and pipeline_class._load_connected_pipes:
            modelcard = ModelCard.load(os.path.join(cached_folder, "README.md"))
            connected_pipes = sum([getattr(modelcard.data, k, []) for k in CONNECTED_PIPES_KEYS], [])
            for connected_pipe_repo_id in connected_pipes:
                DiffusionPipeline.download(connected_pipe_repo_id)
                download_kwargs = {
                    "cache_dir": cache_dir,
                    "resume_download": resume_download,
                    "force_download": force_download,
                    "proxies": proxies,
                    "local_files_only": local_files_only,
                    "use_auth_token": use_auth_token,
                    "variant": variant,
                    "use_safetensors": use_safetensors,
                }
                DiffusionPipeline.download(connected_pipe_repo_id, **download_kwargs)

        return cached_folder
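
Two small idioms carry this hunk: `sum(list_of_lists, [])` flattens the repo IDs gathered from several model-card keys, and a `download_kwargs` dict forwards the caller's options to every connected download. The sketch below reproduces both with hypothetical stand-ins. `fake_download`, the key names, and the repo ID are invented for illustration, and a plain dict replaces the model-card object that the real code reads with `getattr`.

```python
CONNECTED_KEYS = ["prior", "decoder"]  # hypothetical model-card keys

card_data = {"prior": ["org/prior-repo"]}  # "decoder" is absent on purpose

# Missing keys fall back to [], and sum(..., []) concatenates the lists.
connected = sum([card_data.get(key, []) for key in CONNECTED_KEYS], [])

calls = []


def fake_download(repo_id, **kwargs):
    """Stand-in for a download function; records what it was asked to fetch."""
    calls.append((repo_id, kwargs))


# The same options are forwarded to every connected repo.
download_kwargs = {"cache_dir": "/tmp/cache", "local_files_only": True}
for repo_id in connected:
    fake_download(repo_id, **download_kwargs)

print(connected)  # ['org/prior-repo']
print(calls)
```

Forwarding the options this way means a setting such as `local_files_only` applies to the connected pipelines as well as the main one.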

@@ -1186,6 +1186,7 @@ def download_from_original_stable_diffusion_ckpt(

        StableDiffusionInpaintPipeline,
        StableDiffusionPipeline,
        StableDiffusionXLImg2ImgPipeline,
        StableDiffusionXLPipeline,
        StableUnCLIPImg2ImgPipeline,
        StableUnCLIPPipeline,
    )

@@ -1542,7 +1543,7 @@ def download_from_original_stable_diffusion_ckpt(

                checkpoint, config_name, prefix="conditioner.embedders.1.model.", has_projection=True, **config_kwargs
            )

            pipe = pipeline_class(
            pipe = StableDiffusionXLPipeline(
                vae=vae,
                text_encoder=text_encoder,
                tokenizer=tokenizer,

@@ -424,10 +424,13 @@ class StableDiffusionUpscalePipeline(DiffusionPipeline, TextualInversionLoaderMi

        # verify batch size of prompt and image are same if image is a list or tensor or numpy array
        if isinstance(image, list) or isinstance(image, torch.Tensor) or isinstance(image, np.ndarray):
            if isinstance(prompt, str):
            if prompt is not None and isinstance(prompt, str):
                batch_size = 1
            else:
            elif prompt is not None and isinstance(prompt, list):
                batch_size = len(prompt)
            else:
                batch_size = prompt_embeds.shape[0]

            if isinstance(image, list):
                image_batch_size = len(image)
            else:
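
The batch-size change in the upscale pipeline hunk can be read as a three-way dispatch: a single string prompt, a list of prompts, or precomputed embeddings. With the older two-branch version, passing `prompt=None` together with `prompt_embeds` would reach `len(prompt)` and fail. The sketch below isolates that logic. `infer_batch_size` is a hypothetical helper name, and `SimpleNamespace` stands in for a tensor because only the `.shape` attribute is needed.

```python
from types import SimpleNamespace


def infer_batch_size(prompt=None, prompt_embeds=None):
    """Pick the batch size from the prompt if given, else from the embeddings."""
    if prompt is not None and isinstance(prompt, str):
        return 1
    elif prompt is not None and isinstance(prompt, list):
        return len(prompt)
    else:
        return prompt_embeds.shape[0]


# Stand-in for a tensor: only the .shape attribute is used here.
fake_embeds = SimpleNamespace(shape=(3, 77, 32))

print(infer_batch_size(prompt="a cat"))             # 1
print(infer_batch_size(prompt=["a cat", "a dog"]))  # 2
print(infer_batch_size(prompt_embeds=fake_embeds))  # 3
```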

@@ -563,10 +563,10 @@ class SDXLLoraLoaderMixinTests(unittest.TestCase):

            projection_dim=32,
        )
        text_encoder = CLIPTextModel(text_encoder_config)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        text_encoder_2 = CLIPTextModelWithProjection(text_encoder_config)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        unet_lora_attn_procs, unet_lora_layers = create_unet_lora_layers(unet)
        text_encoder_one_lora_layers = create_text_encoder_lora_layers(text_encoder)

@@ -210,6 +210,68 @@ class StableDiffusionUpscalePipelineFastTests(unittest.TestCase):

        image = output.images
        assert image.shape[0] == 2

    def test_stable_diffusion_upscale_prompt_embeds(self):
        device = "cpu"  # ensure determinism for the device-dependent torch.Generator
        unet = self.dummy_cond_unet_upscale
        low_res_scheduler = DDPMScheduler()
        scheduler = DDIMScheduler(prediction_type="v_prediction")
        vae = self.dummy_vae
        text_encoder = self.dummy_text_encoder
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        image = self.dummy_image.cpu().permute(0, 2, 3, 1)[0]
        low_res_image = Image.fromarray(np.uint8(image)).convert("RGB").resize((64, 64))

        # make sure here that pndm scheduler skips prk
        sd_pipe = StableDiffusionUpscalePipeline(
            unet=unet,
            low_res_scheduler=low_res_scheduler,
            scheduler=scheduler,
            vae=vae,
            text_encoder=text_encoder,
            tokenizer=tokenizer,
            max_noise_level=350,
        )
        sd_pipe = sd_pipe.to(device)
        sd_pipe.set_progress_bar_config(disable=None)

        prompt = "A painting of a squirrel eating a burger"
        generator = torch.Generator(device=device).manual_seed(0)
        output = sd_pipe(
            [prompt],
            image=low_res_image,
            generator=generator,
            guidance_scale=6.0,
            noise_level=20,
            num_inference_steps=2,
            output_type="np",
        )

        image = output.images

        generator = torch.Generator(device=device).manual_seed(0)
        prompt_embeds = sd_pipe._encode_prompt(prompt, device, 1, False)
        image_from_prompt_embeds = sd_pipe(
            prompt_embeds=prompt_embeds,
            image=[low_res_image],
            generator=generator,
            guidance_scale=6.0,
            noise_level=20,
            num_inference_steps=2,
            output_type="np",
            return_dict=False,
        )[0]

        image_slice = image[0, -3:, -3:, -1]
        image_from_prompt_embeds_slice = image_from_prompt_embeds[0, -3:, -3:, -1]

        expected_height_width = low_res_image.size[0] * 4
        assert image.shape == (1, expected_height_width, expected_height_width, 3)
        expected_slice = np.array([0.3113, 0.3910, 0.4272, 0.4859, 0.5061, 0.4652, 0.5362, 0.5715, 0.5661])

        assert np.abs(image_slice.flatten() - expected_slice).max() < 1e-2
        assert np.abs(image_from_prompt_embeds_slice.flatten() - expected_slice).max() < 1e-2

    @unittest.skipIf(torch_device != "cuda", "This test requires a GPU")
    def test_stable_diffusion_upscale_fp16(self):
        """Test that stable diffusion upscale works with fp16"""

@@ -100,10 +100,10 @@ class StableDiffusionXLPipelineFastTests(PipelineLatentTesterMixin, PipelineTest

            projection_dim=32,
        )
        text_encoder = CLIPTextModel(text_encoder_config)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        text_encoder_2 = CLIPTextModelWithProjection(text_encoder_config)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        components = {
            "unet": unet,

@@ -100,10 +100,10 @@ class StableDiffusionXLImg2ImgPipelineFastTests(PipelineLatentTesterMixin, Pipel

            projection_dim=32,
        )
        text_encoder = CLIPTextModel(text_encoder_config)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        text_encoder_2 = CLIPTextModelWithProjection(text_encoder_config)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        components = {
            "unet": unet,

@@ -102,10 +102,10 @@ class StableDiffusionXLInpaintPipelineFastTests(PipelineLatentTesterMixin, Pipel

            projection_dim=32,
        )
        text_encoder = CLIPTextModel(text_encoder_config)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        text_encoder_2 = CLIPTextModelWithProjection(text_encoder_config)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        components = {
            "unet": unet,

@@ -105,10 +105,10 @@ class StableDiffusionXLInstructPix2PixPipelineFastTests(

            projection_dim=32,
        )
        text_encoder = CLIPTextModel(text_encoder_config)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        text_encoder_2 = CLIPTextModelWithProjection(text_encoder_config)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip", local_files_only=True)
        tokenizer_2 = CLIPTokenizer.from_pretrained("hf-internal-testing/tiny-random-clip")

        components = {
            "unet": unet,

@@ -374,7 +374,7 @@ class DownloadTests(unittest.TestCase):

        response_mock.json.return_value = {}

        # Download this model to make sure it's in the cache.
        orig_pipe = StableDiffusionPipeline.from_pretrained(
        orig_pipe = DiffusionPipeline.from_pretrained(
            "hf-internal-testing/tiny-stable-diffusion-torch", safety_checker=None
        )
        orig_comps = {k: v for k, v in orig_pipe.components.items() if hasattr(v, "parameters")}

@@ -382,7 +382,7 @@ class DownloadTests(unittest.TestCase):

        # Under the mock environment we get a 500 error when trying to reach the model.
        with mock.patch("requests.request", return_value=response_mock):
            # Download this model to make sure it's in the cache.
            pipe = StableDiffusionPipeline.from_pretrained(
            pipe = DiffusionPipeline.from_pretrained(
                "hf-internal-testing/tiny-stable-diffusion-torch", safety_checker=None
            )
            comps = {k: v for k, v in pipe.components.items() if hasattr(v, "parameters")}

@@ -392,6 +392,42 @@ class DownloadTests(unittest.TestCase):

                if p1.data.ne(p2.data).sum() > 0:
                    assert False, "Parameters not the same!"

    def test_local_files_only_are_used_when_no_internet(self):
        # A mock response for an HTTP head request to emulate server down
        response_mock = mock.Mock()
        response_mock.status_code = 500
        response_mock.headers = {}
        response_mock.raise_for_status.side_effect = HTTPError
        response_mock.json.return_value = {}

        # first check that with local files only the pipeline can only be used if cached
        with self.assertRaises(FileNotFoundError):
            with tempfile.TemporaryDirectory() as tmpdirname:
                orig_pipe = DiffusionPipeline.from_pretrained(
                    "hf-internal-testing/tiny-stable-diffusion-torch", local_files_only=True, cache_dir=tmpdirname
                )

        # now download
        orig_pipe = DiffusionPipeline.download("hf-internal-testing/tiny-stable-diffusion-torch")

        # make sure it can be loaded with local_files_only
        orig_pipe = DiffusionPipeline.from_pretrained(
            "hf-internal-testing/tiny-stable-diffusion-torch", local_files_only=True
        )
        orig_comps = {k: v for k, v in orig_pipe.components.items() if hasattr(v, "parameters")}

        # Under the mock environment we get a 500 error when trying to connect to the internet.
        # Make sure it works local_files_only only works here!
        with mock.patch("requests.request", return_value=response_mock):
            # Download this model to make sure it's in the cache.
            pipe = DiffusionPipeline.from_pretrained("hf-internal-testing/tiny-stable-diffusion-torch")
            comps = {k: v for k, v in pipe.components.items() if hasattr(v, "parameters")}

        for m1, m2 in zip(orig_comps.values(), comps.values()):
            for p1, p2 in zip(m1.parameters(), m2.parameters()):
                if p1.data.ne(p2.data).sum() > 0:
                    assert False, "Parameters not the same!"
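
The test above builds a `mock.Mock()` that looks like a failing HTTP response and patches it in for network calls, so that loading has to fall back to the local cache. The self-contained sketch below shows the same mocking pattern with only the standard library. `FakeHTTPError`, `fetch_or_cache`, and the URL are hypothetical names invented for this example. The real test uses the `HTTPError` imported in its test module and patches `requests.request`.

```python
from unittest import mock


class FakeHTTPError(Exception):
    """Hypothetical stand-in for an HTTP client's error type."""


# A mock response that behaves like a server returning HTTP 500.
response_mock = mock.Mock()
response_mock.status_code = 500
response_mock.headers = {}
response_mock.raise_for_status.side_effect = FakeHTTPError
response_mock.json.return_value = {}


def fetch_or_cache(request_fn, cache):
    """Try the network first; fall back to the cached value on failure."""
    response = request_fn("GET", "https://example.invalid/model")
    try:
        response.raise_for_status()
    except FakeHTTPError:
        return cache["model"]
    return response.json()


# Every "network" call now returns the failing response.
request_fn = mock.Mock(return_value=response_mock)
result = fetch_or_cache(request_fn, cache={"model": "cached weights"})
print(result)  # cached weights
```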


    def test_download_from_variant_folder(self):
        for safe_avail in [False, True]:
            import diffusers

@@ -387,7 +387,7 @@ class PipelineTesterMixin:

                    batched_inputs[name] = [value[: len_prompt // i] for i in range(1, batch_size + 1)]

                    # make last batch super long
                    batched_inputs[name][-1] = 2000 * "very long"
                    batched_inputs[name][-1] = 100 * "very long"
                # or else we have images
                else:
                    batched_inputs[name] = batch_size * [value]

@@ -462,7 +462,7 @@ class PipelineTesterMixin:

                    batched_inputs[name] = [value[: len_prompt // i] for i in range(1, batch_size + 1)]

                    # make last batch super long
                    batched_inputs[name][-1] = 2000 * "very long"
                    batched_inputs[name][-1] = 100 * "very long"
                # or else we have images
                else:
                    batched_inputs[name] = batch_size * [value]
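
To see what the batched-prompt construction in these two hunks produces, the sketch below runs the same list comprehension on a sample prompt and then overwrites the last entry with the shorter "very long" string used after the change. The prompt text and `batch_size` value are assumptions chosen for illustration.

```python
prompt = "A painting of a squirrel eating a burger"
batch_size = 3
len_prompt = len(prompt)

# Prompts of decreasing length: the full text, then the first half, then the first third.
batched = [prompt[: len_prompt // i] for i in range(1, batch_size + 1)]

# Replace the last entry with a deliberately long prompt (100 repeats after the change).
batched[-1] = 100 * "very long"

print([len(p) for p in batched])  # [40, 20, 900]
```

With the earlier multiplier of 2000, the last prompt would have been 18000 characters long instead of 900.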
