Mirror of https://github.com/huggingface/diffusers.git, synced 2026-02-22 02:39:51 +08:00

Compare commits: `run_tests` ... `v0.16.1-pa` (32 commits)

| SHA1 |
|---|
| 9b14ce397e |
| 23159f4adb |
| 4c476e99b5 |
| 9c876a5915 |
| 6ba0efb9a1 |
| 46ceba5b35 |
| 977162c02b |
| 744663f8dc |
| abbf3c1adf |
| da2ce1a6b9 |
| e51f19aee8 |
| 1ffcc924bc |
| 730e01ec93 |
| 0d196f9f45 |
| 131312caba |
| e9edbfc251 |
| 0ddc5bf7b9 |
| c5933c9c89 |
| 91a2a80eb2 |
| 425192fe15 |
| 9965cb50ea |
| 20e426cb5d |
| 90eac14f72 |
| 11f527ac0f |
| 2c04e5855c |
| 391cfcd7d7 |
| bc0392a0cb |
| 05d9baeacd |
| e573ae06e2 |
| 2f6351b001 |
| 9c856118c7 |
| 9bce375f77 |

```diff
@@ -105,6 +105,8 @@
     title: MPS
   - local: optimization/habana
     title: Habana Gaudi
+  - local: optimization/tome
+    title: Token Merging
   title: Optimization/Special Hardware
 - sections:
   - local: conceptual/philosophy
@@ -152,6 +154,8 @@
     title: DDPM
   - local: api/pipelines/dit
     title: DiT
+  - local: api/pipelines/if
+    title: IF
   - local: api/pipelines/latent_diffusion
     title: Latent Diffusion
   - local: api/pipelines/paint_by_example
```

````diff
@@ -25,14 +25,14 @@ This pipeline was contributed by [sanchit-gandhi](https://huggingface.co/sanchit
 
 ## Text-to-Audio
 
-The [`AudioLDMPipeline`] can be used to load pre-trained weights from [cvssp/audioldm](https://huggingface.co/cvssp/audioldm) and generate text-conditional audio outputs:
+The [`AudioLDMPipeline`] can be used to load pre-trained weights from [cvssp/audioldm-s-full-v2](https://huggingface.co/cvssp/audioldm-s-full-v2) and generate text-conditional audio outputs:
 
 ```python
 from diffusers import AudioLDMPipeline
 import torch
 import scipy
 
-repo_id = "cvssp/audioldm"
+repo_id = "cvssp/audioldm-s-full-v2"
 pipe = AudioLDMPipeline.from_pretrained(repo_id, torch_dtype=torch.float16)
 pipe = pipe.to("cuda")
 
@@ -56,7 +56,7 @@ Inference:
 ### How to load and use different schedulers
 
 The AudioLDM pipeline uses [`DDIMScheduler`] scheduler by default. But `diffusers` provides many other schedulers
-that can be used with the AudioLDM pipeline such as [`PNDMScheduler`], [`LMSDiscreteScheduler`], [`EulerDiscreteScheduler`],
+that can be used with the AudioLDM pipeline such as [`PNDMScheduler`], [`LMSDiscreteScheduler`], [`EulerDiscreteScheduler`],
 [`EulerAncestralDiscreteScheduler`] etc. We recommend using the [`DPMSolverMultistepScheduler`] as it's currently the fastest
 scheduler there is.
 
@@ -68,12 +68,14 @@ method, or pass the `scheduler` argument to the `from_pretrained` method of the
 >>> from diffusers import AudioLDMPipeline, DPMSolverMultistepScheduler
 >>> import torch
 
->>> pipeline = AudioLDMPipeline.from_pretrained("cvssp/audioldm", torch_dtype=torch.float16)
+>>> pipeline = AudioLDMPipeline.from_pretrained("cvssp/audioldm-s-full-v2", torch_dtype=torch.float16)
 >>> pipeline.scheduler = DPMSolverMultistepScheduler.from_config(pipeline.scheduler.config)
 
 >>> # or
->>> dpm_scheduler = DPMSolverMultistepScheduler.from_pretrained("cvssp/audioldm", subfolder="scheduler")
->>> pipeline = AudioLDMPipeline.from_pretrained("cvssp/audioldm", scheduler=dpm_scheduler, torch_dtype=torch.float16)
+>>> dpm_scheduler = DPMSolverMultistepScheduler.from_pretrained("cvssp/audioldm-s-full-v2", subfolder="scheduler")
+>>> pipeline = AudioLDMPipeline.from_pretrained(
+...     "cvssp/audioldm-s-full-v2", scheduler=dpm_scheduler, torch_dtype=torch.float16
+... )
 ```
 
 ## AudioLDMPipeline
````

**docs/source/en/api/pipelines/if.mdx** (Normal file, 523 lines added)

@@ -0,0 +1,523 @@

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# IF

## Overview

DeepFloyd IF is a novel, state-of-the-art, open-source text-to-image model with a high degree of photorealism and language understanding.
The model is modular and composed of a frozen text encoder and three cascaded pixel diffusion modules:

- Stage 1: a base model that generates a 64x64 px image from a text prompt,
- Stage 2: a 64x64 px => 256x256 px super-resolution model, and
- Stage 3: a 256x256 px => 1024x1024 px super-resolution model.

Stage 1 and Stage 2 use a frozen text encoder based on the T5 transformer to extract text embeddings,
which are then fed into a UNet architecture enhanced with cross-attention and attention pooling.
Stage 3 is [Stability's x4 Upscaling model](https://huggingface.co/stabilityai/stable-diffusion-x4-upscaler).
The result is a highly efficient model that outperforms current state-of-the-art models, achieving a zero-shot FID score of 6.66 on the COCO dataset.
This work underscores the potential of larger UNet architectures in the first stage of cascaded diffusion models and points to a promising future for text-to-image synthesis.
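To make the cascade concrete, the short sketch below (plain Python, no diffusers calls) records the output resolution of each stage as listed above and computes the upscaling factor between consecutive stages. The dictionary and helper names are illustrative only and are not part of the diffusers API.

```python
# Output resolution (height, width) of each IF stage, as described in the overview.
# These names are illustrative; they are not part of the diffusers API.
STAGE_RESOLUTIONS = {
    "stage_1": (64, 64),      # base text-to-image model
    "stage_2": (256, 256),    # first super-resolution model
    "stage_3": (1024, 1024),  # x4 upscaler
}


def upscale_factors(resolutions):
    """Return the per-side upscaling factor between consecutive stages."""
    sizes = list(resolutions.values())  # dicts keep insertion order (Python 3.7+)
    return [after[0] // before[0] for before, after in zip(sizes, sizes[1:])]


factors = upscale_factors(STAGE_RESOLUTIONS)
total_factor = 1
for factor in factors:
    total_factor *= factor

print(factors)       # [4, 4]
print(total_factor)  # 16
```

Each super-resolution stage therefore enlarges its input 4x per side, and the full cascade enlarges the 64x64 base image 16x per side, which is 256x more pixels.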

## Usage

Before you can use IF, you need to accept its usage conditions. To do so:

1. Make sure you have a [Hugging Face account](https://huggingface.co/join) and are logged in.
2. Accept the license on the model card of [DeepFloyd/IF-I-XL-v1.0](https://huggingface.co/DeepFloyd/IF-I-XL-v1.0). Accepting the license on the stage I model card automatically accepts it for the other IF models.
3. Make sure to log in locally. Install `huggingface_hub`:

```sh
pip install huggingface_hub --upgrade
```

then run the `login` function in a Python shell:

```py
from huggingface_hub import login

login()
```

and enter your [Hugging Face Hub access token](https://huggingface.co/docs/hub/security-tokens#what-are-user-access-tokens).

Next, install `diffusers` and its dependencies:

```sh
pip install diffusers accelerate transformers safetensors
```

The following sections give more detailed examples of how to use IF. Specifically:

- [Text-to-Image Generation](#text-to-image-generation)
- [Image-to-Image Generation](#text-guided-image-to-image-generation)
- [Inpainting](#text-guided-inpainting-generation)
- [Reusing model weights](#converting-between-different-pipelines)
- [Speed optimization](#optimizing-for-speed)
- [Memory optimization](#optimizing-for-memory)

**Available checkpoints**

- *Stage-1*
  - [DeepFloyd/IF-I-XL-v1.0](https://huggingface.co/DeepFloyd/IF-I-XL-v1.0)
  - [DeepFloyd/IF-I-L-v1.0](https://huggingface.co/DeepFloyd/IF-I-L-v1.0)
  - [DeepFloyd/IF-I-M-v1.0](https://huggingface.co/DeepFloyd/IF-I-M-v1.0)

- *Stage-2*
  - [DeepFloyd/IF-II-L-v1.0](https://huggingface.co/DeepFloyd/IF-II-L-v1.0)
  - [DeepFloyd/IF-II-M-v1.0](https://huggingface.co/DeepFloyd/IF-II-M-v1.0)

- *Stage-3*
  - [stabilityai/stable-diffusion-x4-upscaler](https://huggingface.co/stabilityai/stable-diffusion-x4-upscaler)

**Demo**

[DeepFloyd/IF Space](https://huggingface.co/spaces/DeepFloyd/IF)

**Google Colab**

[Open in Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/deepfloyd_if_free_tier_google_colab.ipynb)

### Text-to-Image Generation

The example below uses [model CPU offloading](https://huggingface.co/docs/diffusers/optimization/fp16#model-offloading-for-fast-inference-and-memory-savings)
to run the whole IF pipeline with as little as 14 GB of VRAM.

```python
from diffusers import DiffusionPipeline
from diffusers.utils import pt_to_pil
import torch

# stage 1
stage_1 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
stage_1.enable_model_cpu_offload()

# stage 2 (loaded without a text encoder; it reuses the prompt embeddings computed with stage 1)
stage_2 = DiffusionPipeline.from_pretrained(
    "DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16
)
stage_2.enable_model_cpu_offload()

# stage 3 (reuses the feature extractor, safety checker and watermarker from stage 1)
safety_modules = {
    "feature_extractor": stage_1.feature_extractor,
    "safety_checker": stage_1.safety_checker,
    "watermarker": stage_1.watermarker,
}
stage_3 = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-x4-upscaler", **safety_modules, torch_dtype=torch.float16
)
stage_3.enable_model_cpu_offload()

prompt = 'a photo of a kangaroo wearing an orange hoodie and blue sunglasses standing in front of the eiffel tower holding a sign that says "very deep learning"'
generator = torch.manual_seed(1)

# text embeds
prompt_embeds, negative_embeds = stage_1.encode_prompt(prompt)

# stage 1
image = stage_1(
    prompt_embeds=prompt_embeds, negative_prompt_embeds=negative_embeds, generator=generator, output_type="pt"
).images
pt_to_pil(image)[0].save("./if_stage_I.png")

# stage 2
image = stage_2(
    image=image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    generator=generator,
    output_type="pt",
).images
pt_to_pil(image)[0].save("./if_stage_II.png")

# stage 3
image = stage_3(prompt=prompt, image=image, noise_level=100, generator=generator).images
image[0].save("./if_stage_III.png")
```

### Text Guided Image-to-Image Generation

The same IF model weights can be used for text-guided image-to-image translation or image variation.
In this case, just make sure to load the weights using the [`IFImg2ImgPipeline`] and [`IFImg2ImgSuperResolutionPipeline`] pipelines.

**Note**: You can also directly move the weights of the text-to-image pipelines to the image-to-image pipelines
without loading them twice by making use of the [`~DiffusionPipeline.components`] attribute as explained [here](#converting-between-different-pipelines).

```python
from diffusers import IFImg2ImgPipeline, IFImg2ImgSuperResolutionPipeline, DiffusionPipeline
from diffusers.utils import pt_to_pil

import torch

from PIL import Image
import requests
from io import BytesIO

# download image
url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"
response = requests.get(url)
original_image = Image.open(BytesIO(response.content)).convert("RGB")
original_image = original_image.resize((768, 512))

# stage 1
stage_1 = IFImg2ImgPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
stage_1.enable_model_cpu_offload()

# stage 2
stage_2 = IFImg2ImgSuperResolutionPipeline.from_pretrained(
    "DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16
)
stage_2.enable_model_cpu_offload()

# stage 3
safety_modules = {
    "feature_extractor": stage_1.feature_extractor,
    "safety_checker": stage_1.safety_checker,
    "watermarker": stage_1.watermarker,
}
stage_3 = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-x4-upscaler", **safety_modules, torch_dtype=torch.float16
)
stage_3.enable_model_cpu_offload()

prompt = "A fantasy landscape in style minecraft"
generator = torch.manual_seed(1)

# text embeds
prompt_embeds, negative_embeds = stage_1.encode_prompt(prompt)

# stage 1
image = stage_1(
    image=original_image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    generator=generator,
    output_type="pt",
).images
pt_to_pil(image)[0].save("./if_stage_I.png")

# stage 2
image = stage_2(
    image=image,
    original_image=original_image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    generator=generator,
    output_type="pt",
).images
pt_to_pil(image)[0].save("./if_stage_II.png")

# stage 3
image = stage_3(prompt=prompt, image=image, generator=generator, noise_level=100).images
image[0].save("./if_stage_III.png")
```

### Text Guided Inpainting Generation

The same IF model weights can be used for text-guided inpainting.
In this case, just make sure to load the weights using the [`IFInpaintingPipeline`] and [`IFInpaintingSuperResolutionPipeline`] pipelines.

**Note**: You can also directly move the weights of the text-to-image pipelines to the inpainting pipelines
without loading them twice by making use of the [`~DiffusionPipeline.components`] attribute as explained [here](#converting-between-different-pipelines).

```python
from diffusers import IFInpaintingPipeline, IFInpaintingSuperResolutionPipeline, DiffusionPipeline
from diffusers.utils import pt_to_pil
import torch

from PIL import Image
import requests
from io import BytesIO

# download image
url = "https://huggingface.co/datasets/diffusers/docs-images/resolve/main/if/person.png"
response = requests.get(url)
original_image = Image.open(BytesIO(response.content)).convert("RGB")

# download mask
url = "https://huggingface.co/datasets/diffusers/docs-images/resolve/main/if/glasses_mask.png"
response = requests.get(url)
mask_image = Image.open(BytesIO(response.content))

# stage 1
stage_1 = IFInpaintingPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
stage_1.enable_model_cpu_offload()

# stage 2
stage_2 = IFInpaintingSuperResolutionPipeline.from_pretrained(
    "DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16
)
stage_2.enable_model_cpu_offload()

# stage 3
safety_modules = {
    "feature_extractor": stage_1.feature_extractor,
    "safety_checker": stage_1.safety_checker,
    "watermarker": stage_1.watermarker,
}
stage_3 = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-x4-upscaler", **safety_modules, torch_dtype=torch.float16
)
stage_3.enable_model_cpu_offload()

prompt = "blue sunglasses"
generator = torch.manual_seed(1)

# text embeds
prompt_embeds, negative_embeds = stage_1.encode_prompt(prompt)

# stage 1
image = stage_1(
    image=original_image,
    mask_image=mask_image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    generator=generator,
    output_type="pt",
).images
pt_to_pil(image)[0].save("./if_stage_I.png")

# stage 2
image = stage_2(
    image=image,
    original_image=original_image,
    mask_image=mask_image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    generator=generator,
    output_type="pt",
).images
pt_to_pil(image)[0].save("./if_stage_II.png")

# stage 3
image = stage_3(prompt=prompt, image=image, generator=generator, noise_level=100).images
image[0].save("./if_stage_III.png")
```

### Converting between different pipelines

In addition to being loaded with `from_pretrained`, pipelines can also be loaded directly from each other.

```python
from diffusers import IFPipeline, IFSuperResolutionPipeline

pipe_1 = IFPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0")
pipe_2 = IFSuperResolutionPipeline.from_pretrained("DeepFloyd/IF-II-L-v1.0")


from diffusers import IFImg2ImgPipeline, IFImg2ImgSuperResolutionPipeline

# reuse the already-loaded modules; no weights are loaded again
pipe_1 = IFImg2ImgPipeline(**pipe_1.components)
pipe_2 = IFImg2ImgSuperResolutionPipeline(**pipe_2.components)


from diffusers import IFInpaintingPipeline, IFInpaintingSuperResolutionPipeline

pipe_1 = IFInpaintingPipeline(**pipe_1.components)
pipe_2 = IFInpaintingSuperResolutionPipeline(**pipe_2.components)
```
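Loading one pipeline from another avoids a second download and a second copy in memory because `components` hands the *same* module objects to the new pipeline's constructor. The toy sketch below illustrates that idea with hypothetical stand-in classes (`ToyModel`, `ToyText2ImgPipeline`, `ToyImg2ImgPipeline`). They are not diffusers classes, and the real IF pipelines register more modules than the three shown here.

```python
class ToyModel:
    """Hypothetical stand-in for a heavy model such as a UNet or text encoder."""

    def __init__(self, name):
        self.name = name


class ToyText2ImgPipeline:
    """Hypothetical pipeline whose constructor takes its modules by name."""

    def __init__(self, text_encoder, unet, scheduler):
        self.text_encoder = text_encoder
        self.unet = unet
        self.scheduler = scheduler

    @property
    def components(self):
        # Same idea as `DiffusionPipeline.components`: a dict mapping each
        # constructor argument name to the module object currently in use.
        return {
            "text_encoder": self.text_encoder,
            "unet": self.unet,
            "scheduler": self.scheduler,
        }


class ToyImg2ImgPipeline(ToyText2ImgPipeline):
    """A different task built from exactly the same modules."""


text2img = ToyText2ImgPipeline(ToyModel("t5"), ToyModel("unet"), ToyModel("scheduler"))
img2img = ToyImg2ImgPipeline(**text2img.components)

# No copies are made: both pipelines point at the very same objects.
print(img2img.unet is text2img.unet)  # True
```

Because the objects are shared rather than copied, switching tasks this way costs no extra memory for the weights.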

### Optimizing for speed

The simplest optimization to run IF faster is to move all model components to the GPU.

```py
import torch
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
pipe.to("cuda")
```

You can also run the diffusion process for a smaller number of timesteps.

This can be done either with the `num_inference_steps` argument

```py
pipe("<prompt>", num_inference_steps=30)
```

or with the `timesteps` argument

```py
from diffusers.pipelines.deepfloyd_if import fast27_timesteps

pipe("<prompt>", timesteps=fast27_timesteps)
```

When doing image variation or inpainting, you can also decrease the number of timesteps
with the `strength` argument. The `strength` argument is the amount of noise to add to
the input image, which also determines how many steps to run in the denoising process.
A smaller number will vary the image less but run faster.

```py
import torch
from diffusers import IFImg2ImgPipeline

pipe = IFImg2ImgPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
pipe.to("cuda")

# `image` is the input image to vary (for example a PIL image)
image = pipe(image=image, prompt="<prompt>", strength=0.3).images
```
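The link between `strength` and speed can be sketched in plain Python. This is a minimal sketch assuming the truncation convention used by the diffusers image-to-image pipelines, where only the last `strength` fraction of the schedule is run; `denoising_steps` is a hypothetical helper, not a diffusers function.

```python
def denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Return how many denoising steps run for a given `strength`.

    Assumes the schedule is truncated so that only the last
    `strength` fraction of the `num_inference_steps` steps is executed.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError(f"strength must be in [0, 1], got {strength}")
    # Number of steps covered by the added noise, capped at the full schedule.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # Index of the first timestep that is actually run.
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


for strength in (1.0, 0.5, 0.25):
    print(strength, denoising_steps(100, strength))
# 1.0 100
# 0.5 50
# 0.25 25
```

Under this assumption, `strength=1.0` runs the full schedule, while a low strength skips the early, noisiest steps entirely, which is where the speed-up comes from.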

You can also use [`torch.compile`](../../optimization/torch2.0) (this requires PyTorch 2.0 or later). Note that we have not exhaustively tested `torch.compile`
with IF, and it might not give the expected results.

```py
import torch
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
pipe.to("cuda")

pipe.text_encoder = torch.compile(pipe.text_encoder)
pipe.unet = torch.compile(pipe.unet)
```

### Optimizing for memory

When optimizing for GPU memory, we can use the standard diffusers CPU offloading APIs.

Either model-based CPU offloading,

```py
import torch
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()
```

or the more aggressive layer-based CPU offloading.

```py
import torch
from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)
pipe.enable_sequential_cpu_offload()
```

Additionally, T5 can be loaded in 8-bit precision (this requires the `bitsandbytes` library):

```py
from transformers import T5EncoderModel
from diffusers import DiffusionPipeline

text_encoder = T5EncoderModel.from_pretrained(
    "DeepFloyd/IF-I-XL-v1.0", subfolder="text_encoder", device_map="auto", load_in_8bit=True, variant="8bit"
)

pipe = DiffusionPipeline.from_pretrained(
    "DeepFloyd/IF-I-XL-v1.0",
    text_encoder=text_encoder,  # pass the previously instantiated 8bit text encoder
    unet=None,
    device_map="auto",
)

prompt_embeds, negative_embeds = pipe.encode_prompt("<prompt>")
```

For machines with limited CPU RAM, such as the Google Colab free tier, where not all
model components can be loaded to the CPU at once, we can manually load the pipeline with
only the text encoder or only the UNet, when the respective model components are needed.

```py
from diffusers import DiffusionPipeline, IFPipeline, IFSuperResolutionPipeline
import torch
import gc
from transformers import T5EncoderModel
from diffusers.utils import pt_to_pil

text_encoder = T5EncoderModel.from_pretrained(
    "DeepFloyd/IF-I-XL-v1.0", subfolder="text_encoder", device_map="auto", load_in_8bit=True, variant="8bit"
)

# text to image

pipe = DiffusionPipeline.from_pretrained(
    "DeepFloyd/IF-I-XL-v1.0",
    text_encoder=text_encoder,  # pass the previously instantiated 8bit text encoder
    unet=None,
    device_map="auto",
)

prompt = 'a photo of a kangaroo wearing an orange hoodie and blue sunglasses standing in front of the eiffel tower holding a sign that says "very deep learning"'
prompt_embeds, negative_embeds = pipe.encode_prompt(prompt)

# Remove the pipeline so we can re-load the pipeline with the unet
del text_encoder
del pipe
gc.collect()
torch.cuda.empty_cache()

pipe = IFPipeline.from_pretrained(
    "DeepFloyd/IF-I-XL-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16, device_map="auto"
)

generator = torch.Generator().manual_seed(0)
image = pipe(
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    output_type="pt",
    generator=generator,
).images

pt_to_pil(image)[0].save("./if_stage_I.png")

# Remove the pipeline so we can load the super-resolution pipeline
del pipe
gc.collect()
torch.cuda.empty_cache()

# First super resolution

pipe = IFSuperResolutionPipeline.from_pretrained(
    "DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16, device_map="auto"
)

generator = torch.Generator().manual_seed(0)
image = pipe(
    image=image,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    output_type="pt",
    generator=generator,
).images

pt_to_pil(image)[0].save("./if_stage_II.png")
```
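Deleting the previous pipeline *before* loading the next one is what keeps peak memory down: if the old object is still referenced while the new one is being constructed, both exist at the same time. The toy sketch below makes this visible with a hypothetical `PlaceholderStage` class (a small `bytearray` stands in for model weights) and a `weakref.WeakSet` that counts how many stages are alive. It does not use diffusers or a GPU.

```python
import gc
import weakref


class PlaceholderStage:
    """Hypothetical stand-in for a large pipeline (e.g. a text encoder or UNet)."""

    live = weakref.WeakSet()  # stages that are currently still in memory
    peak = 0                  # largest number of stages alive at the same time

    def __init__(self, name):
        self.name = name
        self.weights = bytearray(1_000_000)  # placeholder for model weights
        PlaceholderStage.live.add(self)
        PlaceholderStage.peak = max(PlaceholderStage.peak, len(PlaceholderStage.live))

    def __call__(self, outputs):
        return outputs + [self.name]


def run_cascade(stage_names, release_before_loading=True):
    """Run the stages one after another and return (outputs, peak stages alive)."""
    PlaceholderStage.peak = 0
    outputs = []
    stage = None
    for name in stage_names:
        if release_before_loading:
            # Mirrors `del pipe; gc.collect()` in the example above.
            stage = None
            gc.collect()
        # Without the release, the previous stage is still referenced here
        # while the new one is being constructed.
        stage = PlaceholderStage(name)
        outputs = stage(outputs)
    stage = None
    gc.collect()
    return outputs, PlaceholderStage.peak


names = ["text_encoder", "stage_1", "stage_2"]
print(run_cascade(names, release_before_loading=True))   # (['text_encoder', 'stage_1', 'stage_2'], 1)
print(run_cascade(names, release_before_loading=False))  # (['text_encoder', 'stage_1', 'stage_2'], 2)
```

In the real example, `torch.cuda.empty_cache()` additionally releases memory held by PyTorch's CUDA caching allocator; the toy has no equivalent step.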

## Available Pipelines:

| Pipeline | Tasks | Colab |
|---|---|:---:|
| [pipeline_if.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if.py) | *Text-to-Image Generation* | - |
| [pipeline_if_superresolution.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if_superresolution.py) | *Text-to-Image Generation* | - |
| [pipeline_if_img2img.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if_img2img.py) | *Image-to-Image Generation* | - |
| [pipeline_if_img2img_superresolution.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if_img2img_superresolution.py) | *Image-to-Image Generation* | - |
| [pipeline_if_inpainting.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if_inpainting.py) | *Image-to-Image Generation* | - |
| [pipeline_if_inpainting_superresolution.py](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/deepfloyd_if/pipeline_if_inpainting_superresolution.py) | *Image-to-Image Generation* | - |
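If you pick a pipeline programmatically, the table above boils down to a lookup from (task, cascade stage) to a class name. The sketch below is a hypothetical helper, not part of diffusers. Stage 3 is omitted because it uses the Stable Diffusion x4 upscaler rather than an IF pipeline class.

```python
# Hypothetical lookup table derived from the "Available Pipelines" table:
# (task, cascade stage) -> diffusers pipeline class name.
IF_PIPELINE_CLASSES = {
    ("text-to-image", 1): "IFPipeline",
    ("text-to-image", 2): "IFSuperResolutionPipeline",
    ("image-to-image", 1): "IFImg2ImgPipeline",
    ("image-to-image", 2): "IFImg2ImgSuperResolutionPipeline",
    ("inpainting", 1): "IFInpaintingPipeline",
    ("inpainting", 2): "IFInpaintingSuperResolutionPipeline",
}


def pipeline_class_name(task: str, stage: int) -> str:
    """Return the IF pipeline class name for a task and cascade stage (1 or 2)."""
    try:
        return IF_PIPELINE_CLASSES[(task, stage)]
    except KeyError:
        raise ValueError(f"no IF pipeline for task={task!r}, stage={stage}") from None


print(pipeline_class_name("inpainting", 2))  # IFInpaintingSuperResolutionPipeline
```

With diffusers installed, the returned name can be resolved with `getattr(diffusers, name)` and then loaded with `from_pretrained` as in the examples above.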

## IFPipeline
[[autodoc]] IFPipeline
    - all
    - __call__

## IFSuperResolutionPipeline
[[autodoc]] IFSuperResolutionPipeline
    - all
    - __call__

## IFImg2ImgPipeline
[[autodoc]] IFImg2ImgPipeline
    - all
    - __call__

## IFImg2ImgSuperResolutionPipeline
[[autodoc]] IFImg2ImgSuperResolutionPipeline
    - all
    - __call__

## IFInpaintingPipeline
[[autodoc]] IFInpaintingPipeline
    - all
    - __call__

## IFInpaintingSuperResolutionPipeline
[[autodoc]] IFInpaintingSuperResolutionPipeline
    - all
    - __call__

```diff
@@ -51,6 +51,9 @@ available a colab notebook to directly try them out.
 | [dance_diffusion](./dance_diffusion) | [**Dance Diffusion**](https://github.com/williamberman/diffusers.git) | Unconditional Audio Generation |
 | [ddpm](./ddpm) | [**Denoising Diffusion Probabilistic Models**](https://arxiv.org/abs/2006.11239) | Unconditional Image Generation |
 | [ddim](./ddim) | [**Denoising Diffusion Implicit Models**](https://arxiv.org/abs/2010.02502) | Unconditional Image Generation |
+| [if](./if) | [**IF**](https://github.com/deep-floyd/IF) | Image Generation | [](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/deepfloyd_if_free_tier_google_colab.ipynb)
+| [if_img2img](./if) | [**IF**](https://github.com/deep-floyd/IF) | Image-to-Image Generation | [](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/deepfloyd_if_free_tier_google_colab.ipynb)
+| [if_inpainting](./if) | [**IF**](https://github.com/deep-floyd/IF) | Image-to-Image Generation | [](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/deepfloyd_if_free_tier_google_colab.ipynb)
 | [latent_diffusion](./latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Text-to-Image Generation |
 | [latent_diffusion](./latent_diffusion) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)| Super Resolution Image-to-Image |
 | [latent_diffusion_uncond](./latent_diffusion_uncond) | [**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752) | Unconditional Image Generation |
```

@@ -277,7 +277,6 @@ Canny Control Example

|

||||

|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare_guess_mode/output_images/diffusers/output_bird_canny_0.png"><img width="128" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare_guess_mode/output_images/diffusers/output_bird_canny_0.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare_guess_mode/output_images/diffusers/output_bird_canny_0_gm.png"><img width="128" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare_guess_mode/output_images/diffusers/output_bird_canny_0_gm.png"/></a>|

|

||||

|

||||

|

||||

|

||||

## Available checkpoints

|

||||

|

||||

ControlNet requires a *control image* in addition to the text-to-image *prompt*.

|

||||

@@ -285,7 +284,9 @@ Each pretrained model is trained using a different conditioning method that requ

|

||||

|

||||

All checkpoints can be found under the authors' namespace [lllyasviel](https://huggingface.co/lllyasviel).

|

||||

|

||||

### ControlNet with Stable Diffusion 1.5

|

||||

**13.04.2024 Update**: The author has released improved controlnet checkpoints v1.1 - see [here](#controlnet-v1.1).

|

||||

|

||||

### ControlNet v1.0

|

||||

|

||||

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |

|

||||

|---|---|---|---|

|

||||

@@ -298,6 +299,24 @@ All checkpoints can be found under the authors' namespace [lllyasviel](https://h

|

||||

|[lllyasviel/sd-controlnet-scribble](https://huggingface.co/lllyasviel/sd-controlnet_scribble)<br/> *Trained with human scribbles* |A hand-drawn monochrome image with white outlines on a black background.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_vermeer_scribble.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_vermeer_scribble.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_scribble_0.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_vermeer_scribble_0.png"/></a> |

|

||||

|[lllyasviel/sd-controlnet-seg](https://huggingface.co/lllyasviel/sd-controlnet_seg)<br/>*Trained with semantic segmentation* |An [ADE20K](https://groups.csail.mit.edu/vision/datasets/ADE20K/)'s segmentation protocol image.|<a href="https://huggingface.co/takuma104/controlnet_dev/blob/main/gen_compare/control_images/converted/control_room_seg.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/control_images/converted/control_room_seg.png"/></a>|<a href="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_seg_1.png"><img width="64" src="https://huggingface.co/takuma104/controlnet_dev/resolve/main/gen_compare/output_images/diffusers/output_room_seg_1.png"/></a> |

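The v1.0 scribble checkpoint is described above as expecting white outlines on a black background. If a drawing has dark strokes on a light page instead, one way to convert it is sketched below with NumPy. The helper `to_scribble_control` and its threshold of 128 are our own illustrative choices, not part of diffusers, so adjust them to your input.

```python
import numpy as np


def to_scribble_control(gray: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Turn a dark-on-light grayscale drawing (uint8, H x W) into a
    white-on-black 3-channel control image (uint8, H x W x 3).

    Hypothetical helper: pixels darker than `threshold` are treated as strokes.
    """
    if gray.ndim != 2:
        raise ValueError("expected a single-channel (H, W) image")
    strokes = gray < threshold  # True where the pen touched the page
    control = np.where(strokes, 255, 0).astype(np.uint8)  # strokes -> white, page -> black
    return np.stack([control] * 3, axis=-1)  # replicate the mask into RGB channels


# A tiny 4x4 "drawing": one dark vertical line on a white page
drawing = np.full((4, 4), 255, dtype=np.uint8)
drawing[:, 1] = 0
control = to_scribble_control(drawing)
print(control[..., 0])
```

The returned array can be wrapped with `PIL.Image.fromarray(control)` before it is passed to the pipeline as the control image.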

### ControlNet v1.1

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |
|---|---|---|---|
|[lllyasviel/control_v11p_sd15_canny](https://huggingface.co/lllyasviel/control_v11p_sd15_canny)<br/> *Trained with canny edge detection* | A monochrome image with white edges on a black background.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_canny/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_canny/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_canny/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_canny/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11e_sd15_ip2p](https://huggingface.co/lllyasviel/control_v11e_sd15_ip2p)<br/> *Trained with pixel to pixel instruction* | No condition.|<a href="https://huggingface.co/lllyasviel/control_v11e_sd15_ip2p/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11e_sd15_ip2p/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11e_sd15_ip2p/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11e_sd15_ip2p/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_inpaint](https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint)<br/> Trained with image inpainting | No condition.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint/resolve/main/images/output.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint/resolve/main/images/output.png"/></a>|
|[lllyasviel/control_v11p_sd15_mlsd](https://huggingface.co/lllyasviel/control_v11p_sd15_mlsd)<br/> Trained with M-LSD line segment detection | An image with annotated line segments.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_mlsd/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_mlsd/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_mlsd/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_mlsd/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11f1p_sd15_depth](https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth)<br/> Trained with depth estimation | An image with depth information, usually represented as a grayscale image.|<a href="https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11f1p_sd15_depth/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_normalbae](https://huggingface.co/lllyasviel/control_v11p_sd15_normalbae)<br/> Trained with surface normal estimation | An image with surface normal information, usually represented as a color-coded image.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_normalbae/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_normalbae/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_normalbae/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_normalbae/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_seg](https://huggingface.co/lllyasviel/control_v11p_sd15_seg)<br/> Trained with image segmentation | An image with segmented regions, usually represented as a color-coded image.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_seg/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_seg/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_seg/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_seg/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_lineart](https://huggingface.co/lllyasviel/control_v11p_sd15_lineart)<br/> Trained with line art generation | An image with line art, usually black lines on a white background.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_lineart/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_lineart/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_lineart/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_lineart/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15s2_lineart_anime](https://huggingface.co/lllyasviel/control_v11p_sd15s2_lineart_anime)<br/> Trained with anime line art generation | An image with anime-style line art.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15s2_lineart_anime/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15s2_lineart_anime/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15s2_lineart_anime/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15s2_lineart_anime/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_openpose](https://huggingface.co/lllyasviel/control_v11p_sd15_openpose)<br/> Trained with human pose estimation | An image with human poses, usually represented as a set of keypoints or skeletons.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_openpose/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_openpose/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_openpose/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_openpose/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_scribble](https://huggingface.co/lllyasviel/control_v11p_sd15_scribble)<br/> Trained with scribble-based image generation | An image with scribbles, usually random or user-drawn strokes.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_scribble/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_scribble/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_scribble/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_scribble/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11p_sd15_softedge](https://huggingface.co/lllyasviel/control_v11p_sd15_softedge)<br/> Trained with soft edge image generation | An image with soft edges, usually to create a more painterly or artistic effect.|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_softedge/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11p_sd15_softedge/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11p_sd15_softedge/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_softedge/resolve/main/images/image_out.png"/></a>|
|[lllyasviel/control_v11e_sd15_shuffle](https://huggingface.co/lllyasviel/control_v11e_sd15_shuffle)<br/> Trained with image shuffling | An image with shuffled patches or regions.|<a href="https://huggingface.co/lllyasviel/control_v11e_sd15_shuffle/resolve/main/images/control.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/lllyasviel/control_v11e_sd15_shuffle/resolve/main/images/control.png"/></a>|<a href="https://huggingface.co/lllyasviel/control_v11e_sd15_shuffle/resolve/main/images/image_out.png"><img width="64" src="https://huggingface.co/lllyasviel/control_v11e_sd15_shuffle/resolve/main/images/image_out.png"/></a>|

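The v1.1 checkpoint names in the table follow a regular pattern (for example `control_v11p_sd15_canny` and `control_v11f1p_sd15_depth`). The sketch below splits such a name into its parts with a regular expression. The reading of the letters is our assumption, based on the upstream ControlNet 1.1 naming notes: `p` marks a production-ready model, `e` an experimental one, `f1` a fixed revision, and `s2` a variant of the SD 1.5 base. Please check the upstream repository before relying on it; `parse_controlnet_name` is a hypothetical helper, not a diffusers API.

```python
import re
from typing import NamedTuple, Optional

# Assumed pattern: control_v<major><minor>[f<fix>]<status>_sd15[s2]_<method>
_NAME_RE = re.compile(
    r"^(?:lllyasviel/)?control_v(?P<major>\d)(?P<minor>\d)"
    r"(?:f(?P<fix>\d+))?(?P<status>[pe])_sd15(?P<variant>s2)?_(?P<method>\w+)$"
)


class ControlNetName(NamedTuple):
    version: str
    fix: Optional[int]
    status: str
    variant: Optional[str]
    method: str


def parse_controlnet_name(repo_id: str) -> ControlNetName:
    """Split a ControlNet v1.1 repo id into version, fix, status, variant and method."""
    match = _NAME_RE.match(repo_id)
    if match is None:
        raise ValueError(f"unrecognized ControlNet v1.1 name: {repo_id!r}")
    fix = match.group("fix")
    return ControlNetName(
        version=f"{match.group('major')}.{match.group('minor')}",
        fix=int(fix) if fix is not None else None,
        status={"p": "production", "e": "experimental"}[match.group("status")],
        variant=match.group("variant"),
        method=match.group("method"),
    )


for repo in (
    "lllyasviel/control_v11p_sd15_canny",
    "lllyasviel/control_v11f1p_sd15_depth",
    "lllyasviel/control_v11p_sd15s2_lineart_anime",
):
    print(parse_controlnet_name(repo))
```

A parser like this could, for instance, group the checkpoints by status before choosing one; v1.0 names such as `sd-controlnet-scribble` are deliberately rejected.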

## StableDiffusionControlNetPipeline

[[autodoc]] StableDiffusionControlNetPipeline
	- all

@@ -58,6 +58,9 @@ The library has three main components:

| [dance_diffusion](./api/pipelines/dance_diffusion) | [Dance Diffusion](https://github.com/williamberman/diffusers.git) | Unconditional Audio Generation |
| [ddpm](./api/pipelines/ddpm) | [Denoising Diffusion Probabilistic Models](https://arxiv.org/abs/2006.11239) | Unconditional Image Generation |
| [ddim](./api/pipelines/ddim) | [Denoising Diffusion Implicit Models](https://arxiv.org/abs/2010.02502) | Unconditional Image Generation |
| [if](./api/pipelines/if) | [**IF**](./api/pipelines/if) | Image Generation |
| [if_img2img](./api/pipelines/if) | [**IF**](./api/pipelines/if) | Image-to-Image Generation |
| [if_inpainting](./api/pipelines/if) | [**IF**](./api/pipelines/if) | Image-to-Image Generation |
| [latent_diffusion](./api/pipelines/latent_diffusion) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752) | Text-to-Image Generation |
| [latent_diffusion](./api/pipelines/latent_diffusion) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752) | Super Resolution Image-to-Image |
| [latent_diffusion_uncond](./api/pipelines/latent_diffusion_uncond) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752) | Unconditional Image Generation |

@@ -16,8 +16,8 @@ specific language governing permissions and limitations under the License.

## Requirements

- Optimum Habana 1.4 or later ([installation instructions](https://huggingface.co/docs/optimum/habana/installation)).
- SynapseAI 1.8.
- Optimum Habana 1.5 or later ([installation instructions](https://huggingface.co/docs/optimum/habana/installation)).
- SynapseAI 1.9.

## Inference Pipeline

@@ -64,7 +64,16 @@ For more information, check out Optimum Habana's [documentation](https://hugging

Here are the latencies for Habana first-generation Gaudi and Gaudi2 with the [Habana/stable-diffusion](https://huggingface.co/Habana/stable-diffusion) Gaudi configuration (mixed precision bf16/fp32):

- [Stable Diffusion v1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) (512x512 resolution):

| | Latency (batch size = 1) | Throughput (batch size = 8) |
| ---------------------- |:------------------------:|:---------------------------:|
| first-generation Gaudi | 4.29s | 0.283 images/s |
| Gaudi2 | 1.54s | 0.904 images/s |
| first-generation Gaudi | 4.22s | 0.29 images/s |
| Gaudi2 | 1.70s | 0.925 images/s |

- [Stable Diffusion v2.1](https://huggingface.co/stabilityai/stable-diffusion-2-1) (768x768 resolution):

| | Latency (batch size = 1) | Throughput |
| ---------------------- |:------------------------:|:-------------------------------:|
| first-generation Gaudi | 23.3s | 0.045 images/s (batch size = 2) |
| Gaudi2 | 7.75s | 0.14 images/s (batch size = 5) |

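The tables mix two metrics: latency for a single image and throughput for a larger batch. The sketch below puts them on a common footing using the Stable Diffusion v1.5 figures (4.22s and 1.70s latency, 0.925 images/s throughput). It assumes throughput is simply the batch size divided by the time for one batch, which may not be exactly how these numbers were measured.

```python
def seconds_per_image(throughput: float) -> float:
    """Average seconds spent per image, given a throughput in images/s."""
    return 1.0 / throughput


def seconds_per_batch(throughput: float, batch_size: int) -> float:
    """Approximate time for one batch, assuming throughput = batch_size / batch_time."""
    return batch_size / throughput


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s


# Stable Diffusion v1.5 values from the table: 4.22s vs 1.70s latency, 0.925 images/s at batch size 8
print(f"Gaudi2 latency speedup over first-generation Gaudi: {speedup(4.22, 1.70):.2f}x")
print(f"Gaudi2 time per image in the batch-8 run: {seconds_per_image(0.925):.2f}s")
print(f"Gaudi2 time per batch of 8: {seconds_per_batch(0.925, 8):.2f}s")
```

With these inputs, Gaudi2 comes out at about 2.48x lower latency than first-generation Gaudi, and its per-image time in the batched run (about 1.08s) is below its single-image latency (1.70s), which is the usual benefit of batching.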

116

docs/source/en/optimization/tome.mdx

Normal file

@@ -0,0 +1,116 @@

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Token Merging

Token Merging (introduced in [Token Merging: Your ViT But Faster](https://arxiv.org/abs/2210.09461)) works by progressively merging redundant tokens / patches in the forward pass of a Transformer-based network. This can reduce the inference latency of the underlying network.

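To make "merging redundant tokens" concrete, here is a heavily simplified NumPy sketch of the bipartite soft matching step described in the ToMe paper: tokens are split into two alternating sets, each token in the first set is matched to its most similar token (by cosine similarity) in the second set, and the `r` best-matched tokens are averaged into their partners. This is our own illustration, not the `tomesd` implementation: it uses an unweighted average, does not track token sizes, and has no unmerge step.

```python
import numpy as np


def toy_bipartite_merge(x: np.ndarray, r: int) -> np.ndarray:
    """Merge `r` tokens of `x` (shape [N, C]) and return an array of shape [N - r, C]."""
    x = x.astype(np.float64)
    r = min(r, x.shape[0] // 2)  # never merge more than half of the tokens
    if r <= 0:
        return x.copy()

    a, b = x[0::2], x[1::2]  # alternate tokens into sets A and B
    a_unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_unit = b / np.linalg.norm(b, axis=1, keepdims=True)
    scores = a_unit @ b_unit.T  # cosine similarity, shape [len(A), len(B)]

    best_b = scores.argmax(axis=1)  # most similar B token for each A token
    merged = np.argsort(-scores.max(axis=1))[:r]  # the r A tokens with the strongest match
    kept = np.setdiff1d(np.arange(a.shape[0]), merged)

    # Average every merged A token into its B partner (unweighted).
    b_sum = b.copy()
    b_count = np.ones(b.shape[0])
    for i in merged:
        b_sum[best_b[i]] += a[i]
        b_count[best_b[i]] += 1
    return np.concatenate([a[kept], b_sum / b_count[:, None]], axis=0)


tokens = np.random.default_rng(0).normal(size=(16, 8))  # 16 tokens with 8 channels
print(toy_bipartite_merge(tokens, r=4).shape)  # (12, 8)
```

With `r` tokens merged out of `N`, the fraction `r / N` plays roughly the role that the `ratio` argument plays in `tomesd`.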

After Token Merging (ToMe) was released, the authors released [Token Merging for Fast Stable Diffusion](https://arxiv.org/abs/2303.17604), which introduced a version of ToMe that is more compatible with Stable Diffusion. We can use ToMe to speed up inference of a [`DiffusionPipeline`] while largely preserving image quality. This doc discusses how to apply ToMe to the [`StableDiffusionPipeline`], the expected speedups, and the qualitative aspects of using ToMe with the [`StableDiffusionPipeline`].

## Using ToMe

The authors of ToMe released a convenient Python library called [`tomesd`](https://github.com/dbolya/tomesd) that lets us apply ToMe to a [`DiffusionPipeline`] like so:

```diff
import torch
from diffusers import StableDiffusionPipeline
import tomesd

pipeline = StableDiffusionPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16
).to("cuda")
+ tomesd.apply_patch(pipeline, ratio=0.5)

image = pipeline("a photo of an astronaut riding a horse on mars").images[0]
```

And that’s it!

`tomesd.apply_patch()` exposes [a number of arguments](https://github.com/dbolya/tomesd#usage) to let us strike a balance between pipeline inference speed and the quality of the generated images. The most important of these is `ratio`, which controls the number of tokens that are merged during the forward pass. For more details on `tomesd`, please refer to the original [repository](https://github.com/dbolya/tomesd) and [the paper](https://arxiv.org/abs/2303.17604).

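To get a feel for what `ratio` means in absolute terms, the sketch below counts the tokens at the latent resolution and how many a given `ratio` would remove. It assumes the VAE downsampling factor of 8 used by Stable Diffusion v1.x (a 512x512 image becomes a 64x64 latent) and treats `ratio` as the fraction of tokens removed, as we read the `tomesd` usage notes. In practice `tomesd` may only merge in a subset of blocks (see its `max_downsample` argument), so this is an illustration rather than an exact count; both helpers are hypothetical.

```python
def latent_tokens(height: int, width: int, vae_scale_factor: int = 8) -> int:
    """Tokens seen by the highest-resolution transformer blocks (one per latent pixel)."""
    return (height // vae_scale_factor) * (width // vae_scale_factor)


def tokens_removed(num_tokens: int, ratio: float) -> int:
    """Tokens removed if a fraction `ratio` of them is merged away."""
    return int(num_tokens * ratio)


for side in (512, 768, 1024):
    n = latent_tokens(side, side)
    print(f"{side}x{side}: {n} tokens, ratio=0.5 removes {tokens_removed(n, 0.5)}")
```

This prints 4096 tokens (2048 removed) at 512x512 and 16384 tokens (8192 removed) at 1024x1024: the token count grows with the square of the image side length.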

## Benchmarking `tomesd` with `StableDiffusionPipeline`

We benchmarked the impact of using `tomesd` on [`StableDiffusionPipeline`] along with [xFormers](https://huggingface.co/docs/diffusers/optimization/xformers) across different image resolutions. We used A100 and V100 GPUs as our test devices with the following development environment:

```bash
- `diffusers` version: 0.15.1
- Python version: 3.8.16
- PyTorch version (GPU?): 1.13.1+cu116 (True)
- Huggingface_hub version: 0.13.2
- Transformers version: 4.27.2
- Accelerate version: 0.18.0
- xFormers version: 0.0.16
- tomesd version: 0.1.2
```

We used this script for benchmarking: [https://gist.github.com/sayakpaul/27aec6bca7eb7b0e0aa4112205850335](https://gist.github.com/sayakpaul/27aec6bca7eb7b0e0aa4112205850335). Following are our findings:

### A100

| Resolution | Batch size | Vanilla (s) | ToMe (s) | ToMe + xFormers (s) | ToMe speedup (%) | ToMe + xFormers speedup (%) |
| --- | --- | --- | --- | --- | --- | --- |
| 512 | 10 | 6.88 | 5.26 | 4.69 | 23.55 | 31.83 |
| | | | | | | |
| 768 | 10 | OOM | 14.71 | 11 | | |
| | 8 | OOM | 11.56 | 8.84 | | |
| | 4 | OOM | 5.98 | 4.66 | | |
| | 2 | 4.99 | 3.24 | 3.1 | 35.07 | 37.88 |
| | 1 | 3.29 | 2.24 | 2.03 | 31.91 | 38.30 |
| | | | | | | |
| 1024 | 10 | OOM | OOM | OOM | | |
| | 8 | OOM | OOM | OOM | | |
| | 4 | OOM | 12.51 | 9.09 | | |
| | 2 | OOM | 6.52 | 4.96 | | |
| | 1 | 6.4 | 3.61 | 2.81 | 43.59 | 56.09 |

***The timings reported here are in seconds. Speedups are calculated over the `Vanilla` timings as the percentage reduction in latency: `(Vanilla - ToMe) / Vanilla * 100`.***

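The speedup columns can be reproduced from the timing columns with the formula in the note above. A minimal sketch using the A100, 1024x1024, batch size 1 row (Vanilla 6.4s, ToMe 3.61s, ToMe + xFormers 2.81s); the helper names are our own.

```python
def speedup_percent(vanilla_s: float, optimized_s: float) -> float:
    """Percentage reduction in latency relative to the vanilla run."""
    return (vanilla_s - optimized_s) / vanilla_s * 100


def speedup_factor(vanilla_s: float, optimized_s: float) -> float:
    """How many times faster the optimized run is."""
    return vanilla_s / optimized_s


# A100, 1024x1024, batch size 1
print(f"ToMe:            {speedup_percent(6.4, 3.61):.2f}%")  # 43.59%
print(f"ToMe + xFormers: {speedup_percent(6.4, 2.81):.2f}%")  # 56.09%
print(f"ToMe + xFormers is {speedup_factor(6.4, 2.81):.2f}x faster than Vanilla")
```

Note that a 56% reduction in latency corresponds to roughly a 2.28x speedup, so the two ways of stating the gain are easy to confuse.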

### V100

| Resolution | Batch size | Vanilla (s) | ToMe (s) | ToMe + xFormers (s) | ToMe speedup (%) | ToMe + xFormers speedup (%) |
| --- | --- | --- | --- | --- | --- | --- |
| 512 | 10 | OOM | 10.03 | 9.29 | | |
| | 8 | OOM | 8.05 | 7.47 | | |
| | 4 | 5.7 | 4.3 | 3.98 | 24.56 | 30.18 |
| | 2 | 3.14 | 2.43 | 2.27 | 22.61 | 27.71 |
| | 1 | 1.88 | 1.57 | 1.57 | 16.49 | 16.49 |
| | | | | | | |
| 768 | 10 | OOM | OOM | 23.67 | | |
| | 8 | OOM | OOM | 18.81 | | |
| | 4 | OOM | 11.81 | 9.7 | | |
| | 2 | OOM | 6.27 | 5.2 | | |
| | 1 | 5.43 | 3.38 | 2.82 | 37.75 | 48.07 |
| | | | | | | |
| 1024 | 10 | OOM | OOM | OOM | | |
| | 8 | OOM | OOM | OOM | | |
| | 4 | OOM | OOM | 19.35 | | |
| | 2 | OOM | 13 | 10.78 | | |
| | 1 | OOM | 6.66 | 5.54 | | |

As seen in the tables above, the speedup with `tomesd` becomes more pronounced for larger image resolutions. It is also interesting to note that with `tomesd`, it becomes possible to run the pipeline at higher resolutions such as 1024x1024, or with larger batch sizes, in settings where the vanilla pipeline runs out of memory (OOM).

It might be possible to speed up inference even further with [`torch.compile()`](https://huggingface.co/docs/diffusers/optimization/torch2.0).

## Quality

As reported in [the paper](https://arxiv.org/abs/2303.17604), ToMe can preserve the quality of the generated images to a great extent while speeding up inference. By increasing the `ratio`, it is possible to further speed up inference, but that might come at the cost of a deterioration in the image quality.


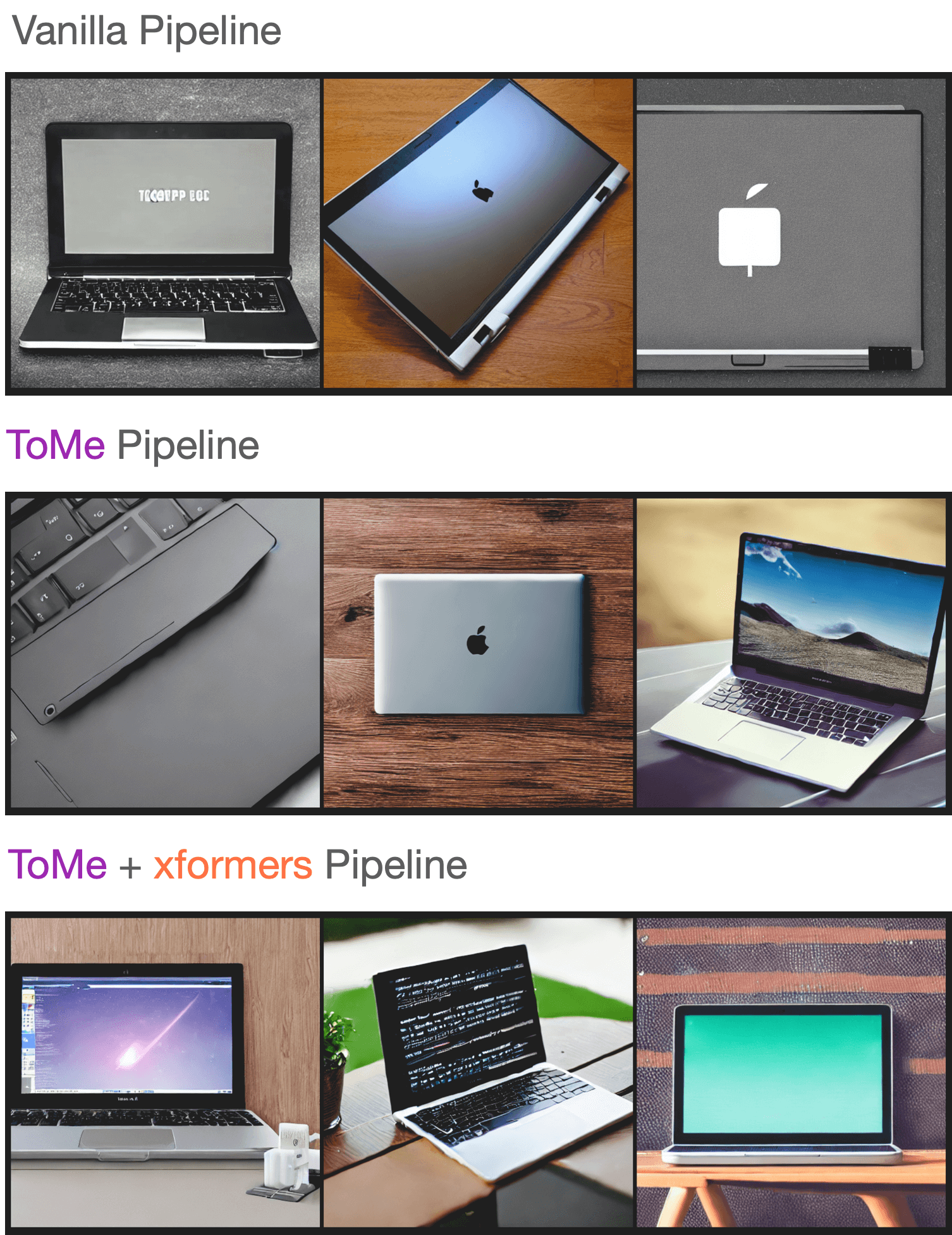

To test the quality of the generated samples using our setup, we sampled a few prompts from the “Parti Prompts” (introduced in [Parti](https://parti.research.google/)) and performed inference with the [`StableDiffusionPipeline`] in the following settings:

- Vanilla [`StableDiffusionPipeline`]
- [`StableDiffusionPipeline`] + ToMe
- [`StableDiffusionPipeline`] + ToMe + xformers

We didn’t notice any significant decrease in the quality of the generated samples. Here are samples:

You can check out the generated samples [here](https://wandb.ai/sayakpaul/tomesd-results/runs/23j4bj3i?workspace=). We used [this script](https://gist.github.com/sayakpaul/8cac98d7f22399085a060992f411ecbd) for conducting this experiment.

@@ -74,6 +74,7 @@ wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/ma

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_2.png
```

Specify the `MODEL_DIR` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument.

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"

@@ -15,6 +15,8 @@ specific language governing permissions and limitations under the License.

[Custom Diffusion](https://arxiv.org/abs/2212.04488) is a method to customize text-to-image models like Stable Diffusion given just a few (4 to 5) images of a subject.
The `train_custom_diffusion.py` script shows how to implement the training procedure and adapt it for Stable Diffusion.

This training example was contributed by [Nupur Kumari](https://nupurkmr9.github.io/) (one of the authors of Custom Diffusion).

## Running locally with PyTorch

### Installing the dependencies

@@ -50,6 +50,20 @@ from accelerate.utils import write_basic_config

write_basic_config()
```

Finally, download a [few images of a dog](https://huggingface.co/datasets/diffusers/dog-example) to train DreamBooth on:

```py
from huggingface_hub import snapshot_download

local_dir = "./dog"
snapshot_download(
    "diffusers/dog-example",
    local_dir=local_dir,
    repo_type="dataset",
    ignore_patterns=".gitattributes",
)
```

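Before launching training, it can be worth checking that the download produced usable images. A minimal sketch using only the standard library; the helper `list_instance_images` and the set of accepted extensions are our own assumptions, not something the training script requires.

```python
from pathlib import Path
from typing import List

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}  # assumed set of accepted formats


def list_instance_images(directory: str) -> List[Path]:
    """Return the image files directly inside `directory`, sorted by name."""
    root = Path(directory)
    if not root.is_dir():
        raise FileNotFoundError(f"{directory!r} is not a directory")
    return sorted(
        p for p in root.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    local_dir = "./dog"  # the directory passed to snapshot_download above
    if Path(local_dir).is_dir():
        print(f"found {len(list_instance_images(local_dir))} images in {local_dir}")
    else:
        print(f"{local_dir} does not exist yet; run the download step first")
```

If the count is zero, the `INSTANCE_DIR` used below would point at an empty folder, so it is cheaper to catch that here than after the training script starts.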

## Finetuning

<Tip warning={true}>

@@ -60,22 +74,13 @@ DreamBooth finetuning is very sensitive to hyperparameters and easy to overfit.

<frameworkcontent>
<pt>
Let's try DreamBooth with a
[few images of a dog](https://huggingface.co/datasets/diffusers/dog-example);
download and save them to a directory and then set the `INSTANCE_DIR` environment variable to that path:
Set the `INSTANCE_DIR` environment variable to the path of the directory containing the dog images.

```python
from huggingface_hub import snapshot_download

local_dir = "./path_to_training_images"
snapshot_download(
    "diffusers/dog-example",
    local_dir=local_dir, repo_type="dataset",
    ignore_patterns=".gitattributes",
)
```

Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument.

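As a rough illustration of the two accepted forms, the sketch below classifies a `MODEL_NAME` value the way we assume it is resolved: an existing local directory is used as-is, and anything else is treated as a Hub repository id of the form `namespace/name`. The helper `describe_model_source` is hypothetical and not part of diffusers, and the `namespace/name` rule is a simplification.

```python
import os


def describe_model_source(model_name_or_path: str) -> str:
    """Hypothetical helper: classify a MODEL_NAME value as a local directory or a Hub repo id."""
    if os.path.isdir(model_name_or_path):
        return "local directory"
    namespace, sep, name = model_name_or_path.partition("/")
    if sep and namespace and name and "/" not in name:
        return "Hub repository id"
    raise ValueError(
        f"{model_name_or_path!r} is neither an existing directory nor a 'namespace/name' repo id"
    )


for value in ("CompVis/stable-diffusion-v1-4", "."):
    print(value, "->", describe_model_source(value))
```

To check the value you exported, call `describe_model_source(os.environ["MODEL_NAME"])` before launching the script.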

```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path_to_training_images"
export INSTANCE_DIR="./dog"
export OUTPUT_DIR="path_to_saved_model"
```

@@ -105,11 +110,13 @@ Before running the script, make sure you have the requirements installed:

pip install -U -r requirements.txt
```

Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument.

Now you can launch the training script with the following command:

```bash
export MODEL_NAME="duongna/stable-diffusion-v1-4-flax"
export INSTANCE_DIR="path-to-instance-images"
export INSTANCE_DIR="./dog"
export OUTPUT_DIR="path-to-save-model"

python train_dreambooth_flax.py \

@@ -135,7 +142,7 @@ The authors recommend generating `num_epochs * num_samples` images for prior pre

<pt>
```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path_to_training_images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path_to_class_images"
export OUTPUT_DIR="path_to_saved_model"

@@ -160,7 +167,7 @@ accelerate launch train_dreambooth.py \

<jax>
```bash
export MODEL_NAME="duongna/stable-diffusion-v1-4-flax"
export INSTANCE_DIR="path-to-instance-images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path-to-class-images"
export OUTPUT_DIR="path-to-save-model"

@@ -197,7 +204,7 @@ Pass the `--train_text_encoder` argument to the training script to enable finetu

<pt>
```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path_to_training_images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path_to_class_images"
export OUTPUT_DIR="path_to_saved_model"

@@ -224,7 +231,7 @@ accelerate launch train_dreambooth.py \

<jax>
```bash
export MODEL_NAME="duongna/stable-diffusion-v1-4-flax"
export INSTANCE_DIR="path-to-instance-images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path-to-class-images"
export OUTPUT_DIR="path-to-save-model"

@@ -360,7 +367,7 @@ Then pass the `--use_8bit_adam` option to the training script:

```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path_to_training_images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path_to_class_images"
export OUTPUT_DIR="path_to_saved_model"

@@ -389,7 +396,7 @@ To run DreamBooth on a 12GB GPU, you'll need to enable gradient checkpointing, t

```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path-to-instance-images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path-to-class-images"
export OUTPUT_DIR="path-to-save-model"

@@ -436,7 +443,7 @@ Launch training with the following command:

```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4"
export INSTANCE_DIR="path_to_training_images"
export INSTANCE_DIR="./dog"
export CLASS_DIR="path_to_class_images"
export OUTPUT_DIR="path_to_saved_model"

@@ -74,8 +74,7 @@ write_basic_config()

As mentioned before, we'll use a [small toy dataset](https://huggingface.co/datasets/fusing/instructpix2pix-1000-samples) for training. The dataset
is a smaller version of the [original dataset](https://huggingface.co/datasets/timbrooks/instructpix2pix-clip-filtered) used in the InstructPix2Pix paper.

Configure environment variables such as the dataset identifier and the Stable Diffusion
checkpoint:
Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument. You'll also need to specify the dataset name in `DATASET_ID`:

```bash
export MODEL_NAME="runwayml/stable-diffusion-v1-5"

@@ -52,7 +52,9 @@ Finetuning a model like Stable Diffusion, which has billions of parameters, can

Let's finetune [`stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) on the [Pokémon BLIP captions](https://huggingface.co/datasets/lambdalabs/pokemon-blip-captions) dataset to generate your own Pokémon.

To start, make sure you have the `MODEL_NAME` and `DATASET_NAME` environment variables set. The `OUTPUT_DIR` and `HUB_MODEL_ID` variables are optional and specify where to save the model locally and on the Hub, respectively:
Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument. You'll also need to set the `DATASET_NAME` environment variable to the name of the dataset you want to train on.

The `OUTPUT_DIR` and `HUB_MODEL_ID` variables are optional and specify where to save the model locally and on the Hub, respectively:

```bash
export MODEL_NAME="runwayml/stable-diffusion-v1-5"

@@ -140,7 +142,9 @@ Load the LoRA weights from your finetuned model *on top of the base model weight

Let's finetune [`stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) with DreamBooth and LoRA with some 🐶 [dog images](https://drive.google.com/drive/folders/1BO_dyz-p65qhBRRMRA4TbZ8qW4rB99JZ). Download and save these images to a directory.

To start, make sure you have the `MODEL_NAME` and `INSTANCE_DIR` (path to directory containing images) environment variables set. The `OUTPUT_DIR` variable is optional and specifies where to save the model on the Hub:
To start, specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument. You'll also need to set `INSTANCE_DIR` to the path of the directory containing the images.

The `OUTPUT_DIR` variable is optional and specifies where to save the model on the Hub:

```bash
export MODEL_NAME="runwayml/stable-diffusion-v1-5"

@@ -72,7 +72,9 @@ To load a checkpoint to resume training, pass the argument `--resume_from_checkp

<frameworkcontent>
<pt>
Launch the [PyTorch training script](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image.py) for a fine-tuning run on the [Pokémon BLIP captions](https://huggingface.co/datasets/lambdalabs/pokemon-blip-captions) dataset like this:
Launch the [PyTorch training script](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image.py) for a fine-tuning run on the [Pokémon BLIP captions](https://huggingface.co/datasets/lambdalabs/pokemon-blip-captions) dataset like this.

Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument.

<literalinclude>
{"path": "../../../../examples/text_to_image/README.md",

@@ -141,6 +143,8 @@ Before running the script, make sure you have the requirements installed:

pip install -U -r requirements_flax.txt
```

Specify the `MODEL_NAME` environment variable (either a Hub model repository id or a path to the directory containing the model weights) and pass it to the [`~diffusers.DiffusionPipeline.from_pretrained.pretrained_model_name_or_path`] argument.

Now you can launch the [Flax training script](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image_flax.py) like this:

```bash

@@ -1,4 +1,4 @@
