mirror of

https://github.com/huggingface/diffusers.git

synced 2025-12-11 06:54:32 +08:00

Compare commits

83 Commits

hooks/qol-

...

quantizer-

| Author | SHA1 | Date | |
|---|---|---|---|
| | b29164a892 | | |
| | b88fef4785 | | |
| | e7e6d85282 | | |
| | 8eefed65bd | | |
| | 26149c0ecd | | |
| | 0703ce8800 | | |
| | f5edaa7894 | | |
| | 9a1810f0de | | |
| | 1fddee211e | | |
| | b38450d5d2 | | |
| | 1357931d74 | | |
| | a2d3d6af44 | | |
| | 363d1ab7e2 | | |
| | 6a0137eb3b | | |
| | 2e5203be04 | | |
| | d55f41102a | | |
| | 748cb0fab6 | | |
| | 790a909b54 | | |
| | 54ab475391 | | |
| | f103993094 | | |
| | 1be0202502 | | |
| | ea81a4228d | | |
| | b15027636a | | |
| | 6e2a93de70 | | |
| | 37b8edfb86 | | |
| | fbf6b856cc | | |
| | e031caf4ea | | |
| | 08f74a8b92 | | |
| | 24c062aaa1 | | |
| | a74f02fb40 | | |
| | 66bf7ea5be | | |
| | b8215b1c06 | | |
| | 3ee899fa0c | | |
| | dcd77ce222 | | |
| | 11d8e3ce2c | | |
| | 97fda1b75c | | |
| | cc22058324 | | |
| | 7855ac597e | | |
| | 30cef6bff3 | | |
| | 8f15be169f | | |
| | f92e599c70 | | |
| | 982f9b38d6 | | |
| | c9a219b323 | | |
| | 9e910c4633 | | |
| | 5e3b7d2d8a | | |
| | 7513162b8b | | |
| | 4aaa0d21ba | | |
| | 54043c3e2e | | |
| | fc4229a0c3 | | |
| | 694f9658c1 | | |
| | 2d8a41cae8 | | |
| | 7007febae5 | | |
| | d230ecc570 | | |
| | 37a5f1b3b6 | | |
| | 501d9de701 | | |
| | e5c43b8af7 | | |
| | 9a8e8db79f | | |
| | 764d7ed49a | | |
| | 3fab6624fd | | |
| | f0ac7aaafc | | |
| | 613e77f8be | | |
| | 1450c2ac4f | | |
| | cc7b5b873a | | |
| | 0404703237 | | |
| | 13f20c7fe8 | | |
| | 87599691b9 | | |
| | 36517f6124 | | |
| | 64af74fc58 | | |
| | 170833c22a | | |
| | db21c97043 | | |
| | 3fdf173084 | | |
| | aba4a5799a | | |
| | b0550a66cc | | |
| | 6f74ef550d | | |
| | 9c7e205176 | | |
| | 64dec70e56 | | |
| | ffb6777ace | | |
| | 85fcbaf314 | | |
| | d75ea3c772 | | |
| | b27d4edbe1 | | |
| | 2b2d04299c | | |
| | 6cef7d2366 | | |
| | 9055ccb382 | | |

38

.github/ISSUE_TEMPLATE/remote-vae-pilot-feedback.yml

vendored

Normal file


@@ -0,0 +1,38 @@

name: "\U0001F31F Remote VAE"
description: Feedback for remote VAE pilot
labels: [ "Remote VAE" ]

body:
  - type: textarea
    id: positive
    validations:
      required: true
    attributes:
      label: Did you like the remote VAE solution?
      description: |
        If you liked it, we would appreciate it if you could elaborate on what you liked.

  - type: textarea
    id: feedback
    validations:
      required: true
    attributes:
      label: What can be improved about the current solution?
      description: |
        Let us know the things you would like to see improved. Note that we will work on optimizing the solution once the pilot is over and we have usage.

  - type: textarea
    id: others
    validations:
      required: true
    attributes:
      label: What other VAEs would you like to see if the pilot goes well?
      description: |
        Provide a list of the VAEs you would like to see in the future if the pilot goes well.

  - type: textarea
    id: additional-info
    attributes:
      label: Notify the members of the team
      description: |
        Tag the following folks when submitting this feedback: @hlky @sayakpaul

2

.github/workflows/nightly_tests.yml

vendored


@@ -418,6 +418,8 @@ jobs:

          test_location: "gguf"
        - backend: "torchao"
          test_location: "torchao"
        - backend: "optimum_quanto"
          test_location: "quanto"
    runs-on:
      group: aws-g6e-xlarge-plus
    container:

142

.github/workflows/pr_style_bot.yml

vendored


@@ -9,119 +9,43 @@ permissions:

|

||||

pull-requests: write

|

||||

|

||||

jobs:

|

||||

run-style-bot:

|

||||

if: >

|

||||

contains(github.event.comment.body, '@bot /style') &&

|

||||

github.event.issue.pull_request != null

|

||||

runs-on: ubuntu-latest

|

||||

style:

|

||||

uses: huggingface/huggingface_hub/.github/workflows/style-bot-action.yml@main

|

||||

with:

|

||||

python_quality_dependencies: "[quality]"

|

||||

pre_commit_script_name: "Download and Compare files from the main branch"

|

||||

pre_commit_script: |

|

||||

echo "Downloading the files from the main branch"

|

||||

|

||||

steps:

|

||||

- name: Extract PR details

|

||||

id: pr_info

|

||||

uses: actions/github-script@v6

|

||||

with:

|

||||

script: |

|

||||

const prNumber = context.payload.issue.number;

|

||||

const { data: pr } = await github.rest.pulls.get({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

pull_number: prNumber

|

||||

});

|

||||

|

||||

// We capture both the branch ref and the "full_name" of the head repo

|

||||

// so that we can check out the correct repository & branch (including forks).

|

||||

core.setOutput("prNumber", prNumber);

|

||||

core.setOutput("headRef", pr.head.ref);

|

||||

core.setOutput("headRepoFullName", pr.head.repo.full_name);

|

||||

curl -o main_Makefile https://raw.githubusercontent.com/huggingface/diffusers/main/Makefile

|

||||

curl -o main_setup.py https://raw.githubusercontent.com/huggingface/diffusers/refs/heads/main/setup.py

|

||||

curl -o main_check_doc_toc.py https://raw.githubusercontent.com/huggingface/diffusers/refs/heads/main/utils/check_doc_toc.py

|

||||

|

||||

- name: Check out PR branch

|

||||

uses: actions/checkout@v3

|

||||

env:

|

||||

HEADREPOFULLNAME: ${{ steps.pr_info.outputs.headRepoFullName }}

|

||||

HEADREF: ${{ steps.pr_info.outputs.headRef }}

|

||||

with:

|

||||

# Instead of checking out the base repo, use the contributor's repo name

|

||||

repository: ${{ env.HEADREPOFULLNAME }}

|

||||

ref: ${{ env.HEADREF }}

|

||||

# You may need fetch-depth: 0 for being able to push

|

||||

fetch-depth: 0

|

||||

token: ${{ secrets.GITHUB_TOKEN }}

|

||||

|

||||

- name: Debug

|

||||

env:

|

||||

HEADREPOFULLNAME: ${{ steps.pr_info.outputs.headRepoFullName }}

|

||||

HEADREF: ${{ steps.pr_info.outputs.headRef }}

|

||||

PRNUMBER: ${{ steps.pr_info.outputs.prNumber }}

|

||||

run: |

|

||||

echo "PR number: ${{ env.PRNUMBER }}"

|

||||

echo "Head Ref: ${{ env.HEADREF }}"

|

||||

echo "Head Repo Full Name: ${{ env.HEADREPOFULLNAME }}"

|

||||

echo "Compare the files and raise error if needed"

|

||||

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v4

|

||||

diff_failed=0

|

||||

if ! diff -q main_Makefile Makefile; then

|

||||

echo "Error: The Makefile has changed. Please ensure it matches the main branch."

|

||||

diff_failed=1

|

||||

fi

|

||||

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

pip install .[quality]

|

||||

if ! diff -q main_setup.py setup.py; then

|

||||

echo "Error: The setup.py has changed. Please ensure it matches the main branch."

|

||||

diff_failed=1

|

||||

fi

|

||||

|

||||

- name: Download Makefile from main branch

|

||||

run: |

|

||||

curl -o main_Makefile https://raw.githubusercontent.com/huggingface/diffusers/main/Makefile

|

||||

|

||||

- name: Compare Makefiles

|

||||

run: |

|

||||

if ! diff -q main_Makefile Makefile; then

|

||||

echo "Error: The Makefile has changed. Please ensure it matches the main branch."

|

||||

exit 1

|

||||

fi

|

||||

echo "No changes in Makefile. Proceeding..."

|

||||

rm -rf main_Makefile

|

||||

if ! diff -q main_check_doc_toc.py utils/check_doc_toc.py; then

|

||||

echo "Error: The utils/check_doc_toc.py has changed. Please ensure it matches the main branch."

|

||||

diff_failed=1

|

||||

fi

|

||||

|

||||

- name: Run make style and make quality

|

||||

run: |

|

||||

make style && make quality

|

||||

if [ $diff_failed -eq 1 ]; then

|

||||

echo "❌ Error happened as we detected changes in the files that should not be changed ❌"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

- name: Commit and push changes

|

||||

id: commit_and_push

|

||||

env:

|

||||

HEADREPOFULLNAME: ${{ steps.pr_info.outputs.headRepoFullName }}

|

||||

HEADREF: ${{ steps.pr_info.outputs.headRef }}

|

||||

PRNUMBER: ${{ steps.pr_info.outputs.prNumber }}

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

run: |

|

||||

echo "HEADREPOFULLNAME: ${{ env.HEADREPOFULLNAME }}, HEADREF: ${{ env.HEADREF }}"

|

||||

# Configure git with the Actions bot user

|

||||

git config user.name "github-actions[bot]"

|

||||

git config user.email "github-actions[bot]@users.noreply.github.com"

|

||||

|

||||

# Make sure your 'origin' remote is set to the contributor's fork

|

||||

git remote set-url origin "https://x-access-token:${GITHUB_TOKEN}@github.com/${{ env.HEADREPOFULLNAME }}.git"

|

||||

|

||||

# If there are changes after running style/quality, commit them

|

||||

if [ -n "$(git status --porcelain)" ]; then

|

||||

git add .

|

||||

git commit -m "Apply style fixes"

|

||||

# Push to the original contributor's forked branch

|

||||

git push origin HEAD:${{ env.HEADREF }}

|

||||

echo "changes_pushed=true" >> $GITHUB_OUTPUT

|

||||

else

|

||||

echo "No changes to commit."

|

||||

echo "changes_pushed=false" >> $GITHUB_OUTPUT

|

||||

fi

|

||||

|

||||

- name: Comment on PR with workflow run link

|

||||

if: steps.commit_and_push.outputs.changes_pushed == 'true'

|

||||

uses: actions/github-script@v6

|

||||

with:

|

||||

script: |

|

||||

const prNumber = parseInt(process.env.prNumber, 10);

|

||||

const runUrl = `${process.env.GITHUB_SERVER_URL}/${process.env.GITHUB_REPOSITORY}/actions/runs/${process.env.GITHUB_RUN_ID}`

|

||||

|

||||

await github.rest.issues.createComment({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

issue_number: prNumber,

|

||||

body: `Style fixes have been applied. [View the workflow run here](${runUrl}).`

|

||||

});

|

||||

env:

|

||||

prNumber: ${{ steps.pr_info.outputs.prNumber }}

|

||||

echo "No changes in the files. Proceeding..."

|

||||

rm -rf main_Makefile main_setup.py main_check_doc_toc.py

|

||||

style_command: "make style && make quality"

|

||||

secrets:

|

||||

bot_token: ${{ secrets.GITHUB_TOKEN }}

|

||||
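The new pre-commit script in this workflow downloads `Makefile`, `setup.py`, and `utils/check_doc_toc.py` from `main`, compares each against the PR checkout, and fails only after all three have been checked. The following is a minimal Python sketch of that check-everything-then-fail logic, not the workflow's actual implementation. The helper name `find_changed_files`, the `PROTECTED_FILES` constant, and the two-directory layout are assumptions made for illustration.

```python
import filecmp
from pathlib import Path

# Hypothetical constant: the three files the workflow compares against main.
PROTECTED_FILES = ["Makefile", "setup.py", "utils/check_doc_toc.py"]


def find_changed_files(main_dir, pr_dir, protected=PROTECTED_FILES):
    """Return the protected files that differ between main and the PR checkout.

    Assumes every protected file exists under main_dir (hypothetical layout).
    """
    changed = []
    for rel_path in protected:
        main_file = Path(main_dir) / rel_path
        pr_file = Path(pr_dir) / rel_path
        # shallow=False compares file contents, similar in spirit to `diff -q`.
        if not pr_file.exists() or not filecmp.cmp(main_file, pr_file, shallow=False):
            changed.append(rel_path)
    return changed
```

Collecting every mismatch before failing mirrors the `diff_failed` flag in the shell script: a contributor sees all offending files in one run instead of fixing them one at a time.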

1

.github/workflows/pr_tests.yml

vendored


@@ -3,7 +3,6 @@ name: Fast tests for PRs

on:
  pull_request:
    branches: [main]
    types: [synchronize]
    paths:
      - "src/diffusers/**.py"
      - "benchmarks/**.py"

250

.github/workflows/pr_tests_gpu.yml

vendored

Normal file


@@ -0,0 +1,250 @@

|

||||

name: Fast GPU Tests on PR

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

branches: main

|

||||

paths:

|

||||

- "src/diffusers/models/modeling_utils.py"

|

||||

- "src/diffusers/models/model_loading_utils.py"

|

||||

- "src/diffusers/pipelines/pipeline_utils.py"

|

||||

- "src/diffusers/pipeline_loading_utils.py"

|

||||

- "src/diffusers/loaders/lora_base.py"

|

||||

- "src/diffusers/loaders/lora_pipeline.py"

|

||||

- "src/diffusers/loaders/peft.py"

|

||||

- "tests/pipelines/test_pipelines_common.py"

|

||||

- "tests/models/test_modeling_common.py"

|

||||

workflow_dispatch:

|

||||

|

||||

concurrency:

|

||||

group: ${{ github.workflow }}-${{ github.head_ref || github.run_id }}

|

||||

cancel-in-progress: true

|

||||

|

||||

env:

|

||||

DIFFUSERS_IS_CI: yes

|

||||

OMP_NUM_THREADS: 8

|

||||

MKL_NUM_THREADS: 8

|

||||

HF_HUB_ENABLE_HF_TRANSFER: 1

|

||||

PYTEST_TIMEOUT: 600

|

||||

PIPELINE_USAGE_CUTOFF: 1000000000 # set high cutoff so that only always-test pipelines run

|

||||

|

||||

jobs:

|

||||

setup_torch_cuda_pipeline_matrix:

|

||||

name: Setup Torch Pipelines CUDA Slow Tests Matrix

|

||||

runs-on:

|

||||

group: aws-general-8-plus

|

||||

container:

|

||||

image: diffusers/diffusers-pytorch-cpu

|

||||

outputs:

|

||||

pipeline_test_matrix: ${{ steps.fetch_pipeline_matrix.outputs.pipeline_test_matrix }}

|

||||

steps:

|

||||

- name: Checkout diffusers

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: 2

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python -m uv pip install -e [quality,test]

|

||||

- name: Environment

|

||||

run: |

|

||||

python utils/print_env.py

|

||||

- name: Fetch Pipeline Matrix

|

||||

id: fetch_pipeline_matrix

|

||||

run: |

|

||||

matrix=$(python utils/fetch_torch_cuda_pipeline_test_matrix.py)

|

||||

echo $matrix

|

||||

echo "pipeline_test_matrix=$matrix" >> $GITHUB_OUTPUT

|

||||

- name: Pipeline Tests Artifacts

|

||||

if: ${{ always() }}

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: test-pipelines.json

|

||||

path: reports

|

||||

|

||||

torch_pipelines_cuda_tests:

|

||||

name: Torch Pipelines CUDA Tests

|

||||

needs: setup_torch_cuda_pipeline_matrix

|

||||

strategy:

|

||||

fail-fast: false

|

||||

max-parallel: 8

|

||||

matrix:

|

||||

module: ${{ fromJson(needs.setup_torch_cuda_pipeline_matrix.outputs.pipeline_test_matrix) }}

|

||||

runs-on:

|

||||

group: aws-g4dn-2xlarge

|

||||

container:

|

||||

image: diffusers/diffusers-pytorch-cuda

|

||||

options: --shm-size "16gb" --ipc host --gpus 0

|

||||

steps:

|

||||

- name: Checkout diffusers

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: 2

|

||||

|

||||

- name: NVIDIA-SMI

|

||||

run: |

|

||||

nvidia-smi

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python -m uv pip install -e [quality,test]

|

||||

pip uninstall accelerate -y && python -m uv pip install -U accelerate@git+https://github.com/huggingface/accelerate.git

|

||||

pip uninstall transformers -y && python -m uv pip install -U transformers@git+https://github.com/huggingface/transformers.git --no-deps

|

||||

|

||||

- name: Environment

|

||||

run: |

|

||||

python utils/print_env.py

|

||||

- name: Extract tests

|

||||

id: extract_tests

|

||||

run: |

|

||||

pattern=$(python utils/extract_tests_from_mixin.py --type pipeline)

|

||||

echo "$pattern" > /tmp/test_pattern.txt

|

||||

echo "pattern_file=/tmp/test_pattern.txt" >> $GITHUB_OUTPUT

|

||||

|

||||

- name: PyTorch CUDA checkpoint tests on Ubuntu

|

||||

env:

|

||||

HF_TOKEN: ${{ secrets.DIFFUSERS_HF_HUB_READ_TOKEN }}

|

||||

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

||||

CUBLAS_WORKSPACE_CONFIG: :16:8

|

||||

run: |

|

||||

if [ "${{ matrix.module }}" = "ip_adapters" ]; then

|

||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||

-s -v -k "not Flax and not Onnx" \

|

||||

--make-reports=tests_pipeline_${{ matrix.module }}_cuda \

|

||||

tests/pipelines/${{ matrix.module }}

|

||||

else

|

||||

pattern=$(cat ${{ steps.extract_tests.outputs.pattern_file }})

|

||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||

-s -v -k "not Flax and not Onnx and $pattern" \

|

||||

--make-reports=tests_pipeline_${{ matrix.module }}_cuda \

|

||||

tests/pipelines/${{ matrix.module }}

|

||||

fi

|

||||

|

||||

- name: Failure short reports

|

||||

if: ${{ failure() }}

|

||||

run: |

|

||||

cat reports/tests_pipeline_${{ matrix.module }}_cuda_stats.txt

|

||||

cat reports/tests_pipeline_${{ matrix.module }}_cuda_failures_short.txt

|

||||

- name: Test suite reports artifacts

|

||||

if: ${{ always() }}

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: pipeline_${{ matrix.module }}_test_reports

|

||||

path: reports

|

||||

|

||||

torch_cuda_tests:

|

||||

name: Torch CUDA Tests

|

||||

runs-on:

|

||||

group: aws-g4dn-2xlarge

|

||||

container:

|

||||

image: diffusers/diffusers-pytorch-cuda

|

||||

options: --shm-size "16gb" --ipc host --gpus 0

|

||||

defaults:

|

||||

run:

|

||||

shell: bash

|

||||

strategy:

|

||||

fail-fast: false

|

||||

max-parallel: 2

|

||||

matrix:

|

||||

module: [models, schedulers, lora, others]

|

||||

steps:

|

||||

- name: Checkout diffusers

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: 2

|

||||

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python -m uv pip install -e [quality,test]

|

||||

python -m uv pip install peft@git+https://github.com/huggingface/peft.git

|

||||

pip uninstall accelerate -y && python -m uv pip install -U accelerate@git+https://github.com/huggingface/accelerate.git

|

||||

pip uninstall transformers -y && python -m uv pip install -U transformers@git+https://github.com/huggingface/transformers.git --no-deps

|

||||

|

||||

- name: Environment

|

||||

run: |

|

||||

python utils/print_env.py

|

||||

|

||||

- name: Extract tests

|

||||

id: extract_tests

|

||||

run: |

|

||||

pattern=$(python utils/extract_tests_from_mixin.py --type ${{ matrix.module }})

|

||||

echo "$pattern" > /tmp/test_pattern.txt

|

||||

echo "pattern_file=/tmp/test_pattern.txt" >> $GITHUB_OUTPUT

|

||||

|

||||

- name: Run PyTorch CUDA tests

|

||||

env:

|

||||

HF_TOKEN: ${{ secrets.DIFFUSERS_HF_HUB_READ_TOKEN }}

|

||||

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

||||

CUBLAS_WORKSPACE_CONFIG: :16:8

|

||||

run: |

|

||||

pattern=$(cat ${{ steps.extract_tests.outputs.pattern_file }})

|

||||

if [ -z "$pattern" ]; then

|

||||

python -m pytest -n 1 -sv --max-worker-restart=0 --dist=loadfile -k "not Flax and not Onnx" tests/${{ matrix.module }} \

|

||||

--make-reports=tests_torch_cuda_${{ matrix.module }}

|

||||

else

|

||||

python -m pytest -n 1 -sv --max-worker-restart=0 --dist=loadfile -k "not Flax and not Onnx and $pattern" tests/${{ matrix.module }} \

|

||||

--make-reports=tests_torch_cuda_${{ matrix.module }}

|

||||

fi

|

||||

|

||||

- name: Failure short reports

|

||||

if: ${{ failure() }}

|

||||

run: |

|

||||

cat reports/tests_torch_cuda_${{ matrix.module }}_stats.txt

|

||||

cat reports/tests_torch_cuda_${{ matrix.module }}_failures_short.txt

|

||||

|

||||

- name: Test suite reports artifacts

|

||||

if: ${{ always() }}

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: torch_cuda_test_reports_${{ matrix.module }}

|

||||

path: reports

|

||||

|

||||

run_examples_tests:

|

||||

name: Examples PyTorch CUDA tests on Ubuntu

|

||||

pip uninstall transformers -y && python -m uv pip install -U transformers@git+https://github.com/huggingface/transformers.git --no-deps

|

||||

runs-on:

|

||||

group: aws-g4dn-2xlarge

|

||||

|

||||

container:

|

||||

image: diffusers/diffusers-pytorch-cuda

|

||||

options: --gpus 0 --shm-size "16gb" --ipc host

|

||||

steps:

|

||||

- name: Checkout diffusers

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

fetch-depth: 2

|

||||

|

||||

- name: NVIDIA-SMI

|

||||

run: |

|

||||

nvidia-smi

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python -m uv pip install -e [quality,test,training]

|

||||

|

||||

- name: Environment

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python utils/print_env.py

|

||||

|

||||

- name: Run example tests on GPU

|

||||

env:

|

||||

HF_TOKEN: ${{ secrets.DIFFUSERS_HF_HUB_READ_TOKEN }}

|

||||

run: |

|

||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||

python -m uv pip install timm

|

||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile -s -v --make-reports=examples_torch_cuda examples/

|

||||

|

||||

- name: Failure short reports

|

||||

if: ${{ failure() }}

|

||||

run: |

|

||||

cat reports/examples_torch_cuda_stats.txt

|

||||

cat reports/examples_torch_cuda_failures_short.txt

|

||||

|

||||

- name: Test suite reports artifacts

|

||||

if: ${{ always() }}

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: examples_test_reports

|

||||

path: reports

|

||||

|

||||
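The test jobs in this workflow build a `pytest -k` expression from two fixed exclusions (`not Flax and not Onnx`) plus an optional pattern read from the `extract_tests` step. The sketch below shows one way to assemble such an expression in Python. The function name `build_k_expression` is hypothetical. Wrapping the pattern in parentheses is a choice made here, not something the workflow does, so that a pattern containing `or` still combines with the exclusions as a single unit.

```python
def build_k_expression(pattern: str, excluded=("Flax", "Onnx")) -> str:
    """Combine fixed exclusions with an optional test-name pattern for `pytest -k`."""
    parts = [f"not {name}" for name in excluded]
    pattern = pattern.strip()
    if pattern:
        # Parenthesize so "a or b" does not escape the "not Flax and not Onnx" guard.
        parts.append(f"({pattern})")
    return " and ".join(parts)
```

An empty pattern yields only the exclusions, which corresponds to the workflow's `if [ -z "$pattern" ]` branch.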

11

.github/workflows/push_tests.yml

vendored


@@ -1,13 +1,6 @@

|

||||

name: Fast GPU Tests on main

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

branches: main

|

||||

paths:

|

||||

- "src/diffusers/models/modeling_utils.py"

|

||||

- "src/diffusers/models/model_loading_utils.py"

|

||||

- "src/diffusers/pipelines/pipeline_utils.py"

|

||||

- "src/diffusers/pipeline_loading_utils.py"

|

||||

workflow_dispatch:

|

||||

push:

|

||||

branches:

|

||||

@@ -167,7 +160,6 @@ jobs:

|

||||

path: reports

|

||||

|

||||

flax_tpu_tests:

|

||||

if: ${{ github.event_name != 'pull_request' }}

|

||||

name: Flax TPU Tests

|

||||

runs-on:

|

||||

group: gcp-ct5lp-hightpu-8t

|

||||

@@ -216,7 +208,6 @@ jobs:

|

||||

path: reports

|

||||

|

||||

onnx_cuda_tests:

|

||||

if: ${{ github.event_name != 'pull_request' }}

|

||||

name: ONNX CUDA Tests

|

||||

runs-on:

|

||||

group: aws-g4dn-2xlarge

|

||||

@@ -265,7 +256,6 @@ jobs:

|

||||

path: reports

|

||||

|

||||

run_torch_compile_tests:

|

||||

if: ${{ github.event_name != 'pull_request' }}

|

||||

name: PyTorch Compile CUDA tests

|

||||

|

||||

runs-on:

|

||||

@@ -309,7 +299,6 @@ jobs:

|

||||

path: reports

|

||||

|

||||

run_xformers_tests:

|

||||

if: ${{ github.event_name != 'pull_request' }}

|

||||

name: PyTorch xformers CUDA tests

|

||||

|

||||

runs-on:

|

||||

|

||||

@@ -76,6 +76,14 @@

|

||||

- local: advanced_inference/outpaint

|

||||

title: Outpainting

|

||||

title: Advanced inference

|

||||

- sections:

|

||||

- local: hybrid_inference/overview

|

||||

title: Overview

|

||||

- local: hybrid_inference/vae_decode

|

||||

title: VAE Decode

|

||||

- local: hybrid_inference/api_reference

|

||||

title: API Reference

|

||||

title: Hybrid Inference

|

||||

- sections:

|

||||

- local: using-diffusers/cogvideox

|

||||

title: CogVideoX

|

||||

@@ -165,6 +173,8 @@

|

||||

title: gguf

|

||||

- local: quantization/torchao

|

||||

title: torchao

|

||||

- local: quantization/quanto

|

||||

title: quanto

|

||||

title: Quantization Methods

|

||||

- sections:

|

||||

- local: optimization/fp16

|

||||

@@ -282,6 +292,8 @@

|

||||

title: CogView4Transformer2DModel

|

||||

- local: api/models/dit_transformer2d

|

||||

title: DiTTransformer2DModel

|

||||

- local: api/models/easyanimate_transformer3d

|

||||

title: EasyAnimateTransformer3DModel

|

||||

- local: api/models/flux_transformer

|

||||

title: FluxTransformer2DModel

|

||||

- local: api/models/hunyuan_transformer2d

|

||||

@@ -314,6 +326,8 @@

|

||||

title: Transformer2DModel

|

||||

- local: api/models/transformer_temporal

|

||||

title: TransformerTemporalModel

|

||||

- local: api/models/wan_transformer_3d

|

||||

title: WanTransformer3DModel

|

||||

title: Transformers

|

||||

- sections:

|

||||

- local: api/models/stable_cascade_unet

|

||||

@@ -342,8 +356,12 @@

|

||||

title: AutoencoderKLHunyuanVideo

|

||||

- local: api/models/autoencoderkl_ltx_video

|

||||

title: AutoencoderKLLTXVideo

|

||||

- local: api/models/autoencoderkl_magvit

|

||||

title: AutoencoderKLMagvit

|

||||

- local: api/models/autoencoderkl_mochi

|

||||

title: AutoencoderKLMochi

|

||||

- local: api/models/autoencoder_kl_wan

|

||||

title: AutoencoderKLWan

|

||||

- local: api/models/asymmetricautoencoderkl

|

||||

title: AsymmetricAutoencoderKL

|

||||

- local: api/models/autoencoder_dc

|

||||

@@ -418,6 +436,8 @@

|

||||

title: DiffEdit

|

||||

- local: api/pipelines/dit

|

||||

title: DiT

|

||||

- local: api/pipelines/easyanimate

|

||||

title: EasyAnimate

|

||||

- local: api/pipelines/flux

|

||||

title: Flux

|

||||

- local: api/pipelines/control_flux_inpaint

|

||||

@@ -534,6 +554,8 @@

|

||||

title: UniDiffuser

|

||||

- local: api/pipelines/value_guided_sampling

|

||||

title: Value-guided sampling

|

||||

- local: api/pipelines/wan

|

||||

title: Wan

|

||||

- local: api/pipelines/wuerstchen

|

||||

title: Wuerstchen

|

||||

title: Pipelines

|

||||

@@ -543,6 +565,10 @@

|

||||

title: Overview

|

||||

- local: api/schedulers/cm_stochastic_iterative

|

||||

title: CMStochasticIterativeScheduler

|

||||

- local: api/schedulers/ddim_cogvideox

|

||||

title: CogVideoXDDIMScheduler

|

||||

- local: api/schedulers/multistep_dpm_solver_cogvideox

|

||||

title: CogVideoXDPMScheduler

|

||||

- local: api/schedulers/consistency_decoder

|

||||

title: ConsistencyDecoderScheduler

|

||||

- local: api/schedulers/cosine_dpm

|

||||

|

||||

32

docs/source/en/api/models/autoencoder_kl_wan.md

Normal file


@@ -0,0 +1,32 @@

<!-- Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License. -->

# AutoencoderKLWan

The 3D variational autoencoder (VAE) model with KL loss used in [Wan 2.1](https://github.com/Wan-Video/Wan2.1) by the Alibaba Wan Team.

The model can be loaded with the following code snippet.

```python
import torch
from diffusers import AutoencoderKLWan

vae = AutoencoderKLWan.from_pretrained("Wan-AI/Wan2.1-T2V-1.3B-Diffusers", subfolder="vae", torch_dtype=torch.float32)
```

## AutoencoderKLWan

[[autodoc]] AutoencoderKLWan
  - decode
  - all

## DecoderOutput

[[autodoc]] models.autoencoders.vae.DecoderOutput

37

docs/source/en/api/models/autoencoderkl_magvit.md

Normal file


@@ -0,0 +1,37 @@

<!--Copyright 2025 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License. -->

# AutoencoderKLMagvit

The 3D variational autoencoder (VAE) model with KL loss used in [EasyAnimate](https://github.com/aigc-apps/EasyAnimate), introduced by Alibaba PAI.

The model can be loaded with the following code snippet.

```python
import torch
from diffusers import AutoencoderKLMagvit

vae = AutoencoderKLMagvit.from_pretrained("alibaba-pai/EasyAnimateV5.1-12b-zh", subfolder="vae", torch_dtype=torch.float16).to("cuda")
```

## AutoencoderKLMagvit

[[autodoc]] AutoencoderKLMagvit
  - decode
  - encode
  - all

## AutoencoderKLOutput

[[autodoc]] models.autoencoders.autoencoder_kl.AutoencoderKLOutput

## DecoderOutput

[[autodoc]] models.autoencoders.vae.DecoderOutput

30

docs/source/en/api/models/easyanimate_transformer3d.md

Normal file


@@ -0,0 +1,30 @@

<!--Copyright 2025 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License. -->

# EasyAnimateTransformer3DModel

A Diffusion Transformer model for 3D data from [EasyAnimate](https://github.com/aigc-apps/EasyAnimate), introduced by Alibaba PAI.

The model can be loaded with the following code snippet.

```python
import torch
from diffusers import EasyAnimateTransformer3DModel

transformer = EasyAnimateTransformer3DModel.from_pretrained("alibaba-pai/EasyAnimateV5.1-12b-zh", subfolder="transformer", torch_dtype=torch.float16).to("cuda")
```

## EasyAnimateTransformer3DModel

[[autodoc]] EasyAnimateTransformer3DModel

## Transformer2DModelOutput

[[autodoc]] models.modeling_outputs.Transformer2DModelOutput

30

docs/source/en/api/models/wan_transformer_3d.md

Normal file


@@ -0,0 +1,30 @@

<!-- Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License. -->

# WanTransformer3DModel

A Diffusion Transformer model for 3D video-like data, introduced in [Wan 2.1](https://github.com/Wan-Video/Wan2.1) by the Alibaba Wan Team.

The model can be loaded with the following code snippet.

```python
import torch
from diffusers import WanTransformer3DModel

transformer = WanTransformer3DModel.from_pretrained("Wan-AI/Wan2.1-T2V-1.3B-Diffusers", subfolder="transformer", torch_dtype=torch.bfloat16)
```

## WanTransformer3DModel

[[autodoc]] WanTransformer3DModel

## Transformer2DModelOutput

[[autodoc]] models.modeling_outputs.Transformer2DModelOutput

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# Text-to-Video Generation with AnimateDiff

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

## Overview

|

||||

|

||||

[AnimateDiff: Animate Your Personalized Text-to-Image Diffusion Models without Specific Tuning](https://arxiv.org/abs/2307.04725) by Yuwei Guo, Ceyuan Yang, Anyi Rao, Yaohui Wang, Yu Qiao, Dahua Lin, Bo Dai.

|

||||

|

||||

@@ -15,6 +15,10 @@

|

||||

|

||||

# CogVideoX

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

[CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer](https://arxiv.org/abs/2408.06072) from Tsinghua University & ZhipuAI, by Zhuoyi Yang, Jiayan Teng, Wendi Zheng, Ming Ding, Shiyu Huang, Jiazheng Xu, Yuanming Yang, Wenyi Hong, Xiaohan Zhang, Guanyu Feng, Da Yin, Xiaotao Gu, Yuxuan Zhang, Weihan Wang, Yean Cheng, Ting Liu, Bin Xu, Yuxiao Dong, Jie Tang.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

@@ -15,6 +15,10 @@

|

||||

|

||||

# ConsisID

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

[Identity-Preserving Text-to-Video Generation by Frequency Decomposition](https://arxiv.org/abs/2411.17440) from Peking University, University of Rochester, and others, by Shenghai Yuan, Jinfa Huang, Xianyi He, Yunyang Ge, Yujun Shi, Liuhan Chen, Jiebo Luo, Li Yuan.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# FluxControlInpaint

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

FluxControlInpaintPipeline is an implementation of Inpainting for Flux.1 Depth/Canny models. It is a pipeline that allows you to inpaint images using the Flux.1 Depth/Canny models. The pipeline takes an image and a mask as input and returns the inpainted image.

|

||||

|

||||

FLUX.1 Depth and Canny [dev] is a 12 billion parameter rectified flow transformer capable of generating an image based on a text description while following the structure of a given input image. **This is not a ControlNet model**.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

|

||||

|

||||

With a ControlNet model, you can provide an additional control image to condition and control Stable Diffusion generation. For example, if you provide a depth map, the ControlNet model generates an image that'll preserve the spatial information from the depth map. It is a more flexible and accurate way to control the image generation process.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet with Flux.1

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

FluxControlNetPipeline is an implementation of ControlNet for Flux.1.

|

||||

|

||||

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet with Stable Diffusion 3

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

StableDiffusion3ControlNetPipeline is an implementation of ControlNet for Stable Diffusion 3.

|

||||

|

||||

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet with Stable Diffusion XL

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

|

||||

|

||||

With a ControlNet model, you can provide an additional control image to condition and control Stable Diffusion generation. For example, if you provide a depth map, the ControlNet model generates an image that'll preserve the spatial information from the depth map. It is a more flexible and accurate way to control the image generation process.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNetUnion

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

ControlNetUnionModel is an implementation of ControlNet for Stable Diffusion XL.

|

||||

|

||||

The ControlNet model was introduced in [ControlNetPlus](https://github.com/xinsir6/ControlNetPlus) by xinsir6. It supports multiple conditioning inputs without increasing computation.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# ControlNet-XS

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

ControlNet-XS was introduced in [ControlNet-XS](https://vislearn.github.io/ControlNet-XS/) by Denis Zavadski and Carsten Rother. It is based on the observation that the control model in the [original ControlNet](https://huggingface.co/papers/2302.05543) can be made much smaller and still produce good results.

|

||||

|

||||

Like the original ControlNet model, you can provide an additional control image to condition and control Stable Diffusion generation. For example, if you provide a depth map, the ControlNet model generates an image that'll preserve the spatial information from the depth map. It is a more flexible and accurate way to control the image generation process.

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# DeepFloyd IF

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

## Overview

|

||||

|

||||

DeepFloyd IF is a novel state-of-the-art open-source text-to-image model with a high degree of photorealism and language understanding.

|

||||

|

||||

88

docs/source/en/api/pipelines/easyanimate.md

Normal file

@@ -0,0 +1,88 @@

|

||||

<!--Copyright 2025 The HuggingFace Team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

-->

|

||||

|

||||

# EasyAnimate

|

||||

[EasyAnimate](https://github.com/aigc-apps/EasyAnimate) by Alibaba PAI.

|

||||

|

||||

The description from its GitHub page:

|

||||

*EasyAnimate is a pipeline based on the transformer architecture, designed for generating AI images and videos, and for training baseline models and Lora models for Diffusion Transformer. We support direct prediction from pre-trained EasyAnimate models, allowing for the generation of videos with various resolutions, approximately 6 seconds in length, at 8fps (EasyAnimateV5.1, 1 to 49 frames). Additionally, users can train their own baseline and Lora models for specific style transformations.*

|

||||

|

||||

This pipeline was contributed by [bubbliiiing](https://github.com/bubbliiiing). The original codebase can be found [here](https://huggingface.co/alibaba-pai). The original weights can be found under [hf.co/alibaba-pai](https://huggingface.co/alibaba-pai).

|

||||

|

||||

There are two official EasyAnimate checkpoints for text-to-video and video-to-video.

|

||||

|

||||

| checkpoints | recommended inference dtype |
|:---:|:---:|
| [`alibaba-pai/EasyAnimateV5.1-12b-zh`](https://huggingface.co/alibaba-pai/EasyAnimateV5.1-12b-zh) | torch.float16 |
| [`alibaba-pai/EasyAnimateV5.1-12b-zh-InP`](https://huggingface.co/alibaba-pai/EasyAnimateV5.1-12b-zh-InP) | torch.float16 |

|

||||

|

||||

There is one official EasyAnimate checkpoint available for image-to-video and video-to-video.

|

||||

|

||||

| checkpoints | recommended inference dtype |
|:---:|:---:|
| [`alibaba-pai/EasyAnimateV5.1-12b-zh-InP`](https://huggingface.co/alibaba-pai/EasyAnimateV5.1-12b-zh-InP) | torch.float16 |

|

||||

|

||||

There are two official EasyAnimate checkpoints available for control-to-video.

|

||||

|

||||

| checkpoints | recommended inference dtype |
|:---:|:---:|
| [`alibaba-pai/EasyAnimateV5.1-12b-zh-Control`](https://huggingface.co/alibaba-pai/EasyAnimateV5.1-12b-zh-Control) | torch.float16 |
| [`alibaba-pai/EasyAnimateV5.1-12b-zh-Control-Camera`](https://huggingface.co/alibaba-pai/EasyAnimateV5.1-12b-zh-Control-Camera) | torch.float16 |

|

||||

|

||||

For the EasyAnimateV5.1 series:

|

||||

- Text-to-video (T2V) and image-to-video (I2V) work at multiple resolutions. The width and height can each vary from 256 to 1024.
- Both T2V and I2V models support generation with 1 to 49 frames and work best within this range. Exporting videos at 8 FPS is recommended.
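The ranges above can be turned into a small pre-flight check. The sketch below is illustrative only: `check_easyanimate_settings` is a hypothetical helper, not part of diffusers, and it assumes the documented limits (256 to 1024 pixels per side, 1 to 49 frames, 8 FPS export) are the only constraints.

```python
def check_easyanimate_settings(width: int, height: int, num_frames: int, fps: int = 8) -> float:
    """Validate settings against the ranges documented for EasyAnimateV5.1.

    Hypothetical helper for illustration; returns the clip duration in seconds.
    """
    if not 256 <= width <= 1024:
        raise ValueError(f"width must be in [256, 1024], got {width}")
    if not 256 <= height <= 1024:
        raise ValueError(f"height must be in [256, 1024], got {height}")
    if not 1 <= num_frames <= 49:
        raise ValueError(f"num_frames must be in [1, 49], got {num_frames}")
    if fps <= 0:
        raise ValueError(f"fps must be positive, got {fps}")
    # Duration is simply the frame count divided by the export frame rate.
    return num_frames / fps


duration = check_easyanimate_settings(width=672, height=384, num_frames=49)
print(f"{duration:.3f} s")  # 49 frames / 8 FPS = 6.125 s
```

This matches the "approximately 6 seconds" figure quoted in the project description: 49 frames exported at 8 FPS last 6.125 seconds.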

|

||||

|

||||

## Quantization

|

||||

|

||||

Quantization helps reduce the memory requirements of very large models by storing model weights in a lower precision data type. However, quantization may have varying impact on video quality depending on the video model.
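As a rough back-of-the-envelope illustration of the memory saving, the sketch below estimates the storage needed for the weights alone at different precisions. It assumes roughly 12 billion parameters (inferred from the "12b" in the checkpoint name, not a measured figure) and ignores activations, quantization metadata, and the other pipeline components, so real usage will differ.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 12e9  # assumption: "12b" in the checkpoint name means ~12 billion parameters

for label, bits in [("float16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Under these assumptions, halving the bits per weight halves the weight footprint, which is the effect 8-bit loading aims for.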

|

||||

|

||||

Refer to the [Quantization](../../quantization/overview) overview to learn more about supported quantization backends and selecting a quantization backend that supports your use case. The example below demonstrates how to load a quantized [`EasyAnimatePipeline`] for inference with bitsandbytes.

|

||||

|

||||

```py
import torch
from diffusers import BitsAndBytesConfig as DiffusersBitsAndBytesConfig, EasyAnimateTransformer3DModel, EasyAnimatePipeline
from diffusers.utils import export_to_video

quant_config = DiffusersBitsAndBytesConfig(load_in_8bit=True)
transformer_8bit = EasyAnimateTransformer3DModel.from_pretrained(
    "alibaba-pai/EasyAnimateV5.1-12b-zh",
    subfolder="transformer",
    quantization_config=quant_config,
    torch_dtype=torch.float16,
)

pipeline = EasyAnimatePipeline.from_pretrained(
    "alibaba-pai/EasyAnimateV5.1-12b-zh",
    transformer=transformer_8bit,
    torch_dtype=torch.float16,
    device_map="balanced",
)

prompt = "A cat walks on the grass, realistic style."
negative_prompt = "bad detailed"
video = pipeline(prompt=prompt, negative_prompt=negative_prompt, num_frames=49, num_inference_steps=30).frames[0]
export_to_video(video, "cat.mp4", fps=8)
```

|

||||

|

||||

## EasyAnimatePipeline

|

||||

|

||||

[[autodoc]] EasyAnimatePipeline
  - all
  - __call__

|

||||

|

||||

## EasyAnimatePipelineOutput

|

||||

|

||||

[[autodoc]] pipelines.easyanimate.pipeline_output.EasyAnimatePipelineOutput

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# Flux

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

Flux is a series of text-to-image generation models based on diffusion transformers. To know more about Flux, check out the original [blog post](https://blackforestlabs.ai/announcing-black-forest-labs/) by the creators of Flux, Black Forest Labs.

|

||||

|

||||

Original model checkpoints for Flux can be found [here](https://huggingface.co/black-forest-labs). Original inference code can be found [here](https://github.com/black-forest-labs/flux).

|

||||

@@ -355,8 +359,74 @@ image.save('flux_ip_adapter_output.jpg')

|

||||

<figcaption class="mt-2 text-sm text-center text-gray-500">IP-Adapter examples with prompt "wearing sunglasses"</figcaption>

|

||||

</div>

|

||||

|

||||

## Optimize

|

||||

|

||||

## Running FP16 inference

|

||||

Flux is a very large model and requires ~50GB of RAM/VRAM to load all the modeling components. Enable some of the optimizations below to lower the memory requirements.

|

||||

|

||||

### Group offloading

|

||||

|

||||

[Group offloading](../../optimization/memory#group-offloading) lowers VRAM usage by offloading groups of internal layers rather than the whole model or weights. You need to use [`~hooks.apply_group_offloading`] on all the model components of a pipeline. The `offload_type` parameter allows you to toggle between block and leaf-level offloading. Setting it to `leaf_level` offloads the lowest leaf-level parameters to the CPU instead of offloading at the module-level.

|

||||

|

||||

On CUDA devices that support asynchronous data streaming, set `use_stream=True` to overlap data transfer and computation to accelerate inference.

|

||||

|

||||

> [!TIP]
> It is possible to mix block and leaf-level offloading for different components in a pipeline.

|

||||

|

||||

```py
import torch
from diffusers import FluxPipeline
from diffusers.hooks import apply_group_offloading

model_id = "black-forest-labs/FLUX.1-dev"
dtype = torch.bfloat16
pipe = FluxPipeline.from_pretrained(
    model_id,
    torch_dtype=dtype,
)

apply_group_offloading(
    pipe.transformer,
    offload_type="leaf_level",
    offload_device=torch.device("cpu"),
    onload_device=torch.device("cuda"),
    use_stream=True,
)
apply_group_offloading(
    pipe.text_encoder,
    offload_device=torch.device("cpu"),
    onload_device=torch.device("cuda"),
    offload_type="leaf_level",
    use_stream=True,
)
apply_group_offloading(
    pipe.text_encoder_2,
    offload_device=torch.device("cpu"),
    onload_device=torch.device("cuda"),
    offload_type="leaf_level",
    use_stream=True,
)
apply_group_offloading(
    pipe.vae,
    offload_device=torch.device("cpu"),
    onload_device=torch.device("cuda"),
    offload_type="leaf_level",
    use_stream=True,
)

prompt = "A cat wearing sunglasses and working as a lifeguard at pool."

generator = torch.Generator().manual_seed(181201)
image = pipe(
    prompt,
    width=576,
    height=1024,
    num_inference_steps=30,
    generator=generator
).images[0]
image
```
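The four near-identical calls above can be collapsed into a loop, which also makes it easier to mix offloading types per component. The following is a minimal sketch, not part of diffusers: `offload_components` is a hypothetical helper that takes the offloading function as an argument, so the demo runs with a stand-in pipeline and a recording function instead of real models. The `num_blocks_per_group` argument shown for `block_level` is, to my understanding, expected by `apply_group_offloading` for that mode; check the API reference for your installed version.

```python
from types import SimpleNamespace
from typing import Any, Callable, Dict


def offload_components(
    pipe: Any,
    per_component: Dict[str, Dict[str, Any]],
    apply_fn: Callable[..., Any],
    **shared_kwargs: Any,
) -> None:
    """Call apply_fn once per named pipeline component.

    per_component maps an attribute name on the pipeline (for example
    "transformer") to keyword overrides for that component; shared_kwargs
    are passed to every call and can be overridden per component.
    """
    for name, overrides in per_component.items():
        module = getattr(pipe, name)
        apply_fn(module, **{**shared_kwargs, **overrides})


# Demo with stand-ins so the sketch runs without model weights or a GPU.
calls = []


def record(module: Any, **kwargs: Any) -> None:
    calls.append((module, kwargs))


fake_pipe = SimpleNamespace(transformer="T", vae="V")
offload_components(
    fake_pipe,
    {
        "transformer": {"offload_type": "leaf_level"},
        "vae": {"offload_type": "block_level", "num_blocks_per_group": 1},
    },
    record,
    use_stream=True,
)
print(calls)
```

With a real pipeline, `apply_group_offloading` would be passed as `apply_fn`, with `onload_device` and `offload_device` supplied as shared keyword arguments.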

|

||||

|

||||

### Running FP16 inference

|

||||

|

||||

Flux can generate high-quality images with FP16 (e.g. to accelerate inference on Turing/Volta GPUs) but produces different outputs compared to FP32/BF16. The issue is that some activations in the text encoders have to be clipped when running in FP16, which affects the overall image. Forcing the text encoders to run with FP32 inference thus removes this output difference. See [here](https://github.com/huggingface/diffusers/pull/9097#issuecomment-2272292516) for details.
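To make the clipping concrete, the sketch below uses only the Python standard library to show the finite range of IEEE 754 half precision. It is a general illustration of FP16 overflow, not a reproduction of what the Flux text encoders do internally; `fits_in_fp16` and `clip_to_fp16` are hypothetical helper names.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def fits_in_fp16(value: float) -> bool:
    """Return True if value can be packed as a half-precision float."""
    try:
        struct.pack("<e", value)  # "e" is the half-precision format code
        return True
    except OverflowError:
        return False


def clip_to_fp16(value: float) -> float:
    """Clamp value into the finite FP16 range, as a clipping step would."""
    return max(-FP16_MAX, min(FP16_MAX, value))


for activation in (1234.5, 65504.0, 70000.0):
    print(activation, fits_in_fp16(activation), clip_to_fp16(activation))
```

Any activation larger in magnitude than 65504 has to be clamped (or becomes infinite) in FP16, so the clipped value differs from the FP32/BF16 one, which is consistent with the output difference described above.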

|

||||

|

||||

@@ -385,7 +455,7 @@ out = pipe(

|

||||

out.save("image.png")

|

||||

```

|

||||

|

||||

## Quantization

|

||||

### Quantization

|

||||

|

||||

Quantization helps reduce the memory requirements of very large models by storing model weights in a lower precision data type. However, quantization may have varying impact on video quality depending on the video model.

|

||||

|

||||

|

||||

@@ -14,6 +14,10 @@

|

||||

|

||||

# HunyuanVideo

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

[HunyuanVideo](https://www.arxiv.org/abs/2412.03603) by Tencent.

|

||||

|

||||

*Recent advancements in video generation have significantly impacted daily life for both individuals and industries. However, the leading video generation models remain closed-source, resulting in a notable performance gap between industry capabilities and those available to the public. In this report, we introduce HunyuanVideo, an innovative open-source video foundation model that demonstrates performance in video generation comparable to, or even surpassing, that of leading closed-source models. HunyuanVideo encompasses a comprehensive framework that integrates several key elements, including data curation, advanced architectural design, progressive model scaling and training, and an efficient infrastructure tailored for large-scale model training and inference. As a result, we successfully trained a video generative model with over 13 billion parameters, making it the largest among all open-source models. We conducted extensive experiments and implemented a series of targeted designs to ensure high visual quality, motion dynamics, text-video alignment, and advanced filming techniques. According to evaluations by professionals, HunyuanVideo outperforms previous state-of-the-art models, including Runway Gen-3, Luma 1.6, and three top-performing Chinese video generative models. By releasing the code for the foundation model and its applications, we aim to bridge the gap between closed-source and open-source communities. This initiative will empower individuals within the community to experiment with their ideas, fostering a more dynamic and vibrant video generation ecosystem. The code is publicly available at [this https URL](https://github.com/tencent/HunyuanVideo).*

|

||||

@@ -45,7 +49,8 @@ The following models are available for the image-to-video pipeline:

|

||||

|

||||

| Model name | Description |

|

||||

|:---|:---|

|

||||

| [`https://huggingface.co/Skywork/SkyReels-V1-Hunyuan-I2V`](https://huggingface.co/Skywork/SkyReels-V1-Hunyuan-I2V) | Skywork's custom finetune of HunyuanVideo (de-distilled). Performs best with `97x544x960` resolution. Performs best at `97x544x960` resolution, `guidance_scale=1.0`, `true_cfg_scale=6.0` and a negative prompt. |

|

||||

| [`Skywork/SkyReels-V1-Hunyuan-I2V`](https://huggingface.co/Skywork/SkyReels-V1-Hunyuan-I2V) | Skywork's custom finetune of HunyuanVideo (de-distilled). Performs best at `97x544x960` resolution, `guidance_scale=1.0`, `true_cfg_scale=6.0` and a negative prompt. |

|

||||

| [`hunyuanvideo-community/HunyuanVideo-I2V`](https://huggingface.co/hunyuanvideo-community/HunyuanVideo-I2V) | Tencent's official HunyuanVideo I2V model. Performs best at resolutions of 480, 720, 960, 1280. A higher `shift` value when initializing the scheduler is recommended (good values are between 7 and 20). |
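To give a feel for what a higher `shift` does, the sketch below assumes the scheduler applies a static shift of the form `sigma' = shift * sigma / (1 + (shift - 1) * sigma)`, which is a common choice for flow-matching schedulers. This is an assumption for illustration, not a copy of the library's code, and `shift_sigma` is a hypothetical name.

```python
def shift_sigma(sigma: float, shift: float) -> float:
    """Apply an assumed static timestep shift to a noise level in [0, 1]."""
    return shift * sigma / (1.0 + (shift - 1.0) * sigma)


for shift in (1.0, 7.0, 20.0):
    shifted = [round(shift_sigma(s, shift), 3) for s in (0.25, 0.5, 0.75)]
    print(f"shift={shift}: {shifted}")
```

Under this formula, `shift=1.0` leaves the schedule unchanged, while larger values push intermediate noise levels toward 1, so more of the sampling steps are spent at high noise.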

|

||||

|

||||

## Quantization

|

||||

|

||||

|

||||

@@ -9,6 +9,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# Kandinsky 3

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

Kandinsky 3 is created by [Vladimir Arkhipkin](https://github.com/oriBetelgeuse), [Anastasia Maltseva](https://github.com/NastyaMittseva), [Igor Pavlov](https://github.com/boomb0om), [Andrei Filatov](https://github.com/anvilarth), [Arseniy Shakhmatov](https://github.com/cene555), [Andrey Kuznetsov](https://github.com/kuznetsoffandrey), [Denis Dimitrov](https://github.com/denndimitrov), [Zein Shaheen](https://github.com/zeinsh).

|

||||

|

||||

The description from its GitHub page:

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# Kolors: Effective Training of Diffusion Model for Photorealistic Text-to-Image Synthesis

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

|

||||

|

||||

Kolors is a large-scale text-to-image generation model based on latent diffusion, developed by [the Kuaishou Kolors team](https://github.com/Kwai-Kolors/Kolors). Trained on billions of text-image pairs, Kolors exhibits significant advantages over both open-source and closed-source models in visual quality, complex semantic accuracy, and text rendering for both Chinese and English characters. Furthermore, Kolors supports both Chinese and English inputs, demonstrating strong performance in understanding and generating Chinese-specific content. For more details, please refer to this [technical report](https://github.com/Kwai-Kolors/Kolors/blob/master/imgs/Kolors_paper.pdf).

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# Latent Consistency Models

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

Latent Consistency Models (LCMs) were proposed in [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://huggingface.co/papers/2310.04378) by Simian Luo, Yiqin Tan, Longbo Huang, Jian Li, and Hang Zhao.

|

||||

|

||||

The abstract of the paper is as follows:

|

||||

|

||||

@@ -12,6 +12,10 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

# LEDITS++

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

LEDITS++ was proposed in [LEDITS++: Limitless Image Editing using Text-to-Image Models](https://huggingface.co/papers/2311.16711) by Manuel Brack, Felix Friedrich, Katharina Kornmeier, Linoy Tsaban, Patrick Schramowski, Kristian Kersting, Apolinário Passos.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

@@ -14,6 +14,10 @@

|

||||

|

||||

# LTX Video

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

[LTX Video](https://huggingface.co/Lightricks/LTX-Video) is the first DiT-based video generation model capable of generating high-quality videos in real-time. It produces 24 FPS videos at a 768x512 resolution faster than they can be watched. Trained on a large-scale dataset of diverse videos, the model generates high-resolution videos with realistic and varied content. We provide a model for both text-to-video and image + text-to-video use cases.

|

||||

|

||||

<Tip>

|

||||

|

||||

@@ -14,6 +14,10 @@

|

||||

|

||||

# Lumina2

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="LoRA" src="https://img.shields.io/badge/LoRA-d8b4fe?style=flat"/>

|

||||

</div>

|

||||

|

||||

[Lumina Image 2.0: A Unified and Efficient Image Generative Model](https://huggingface.co/Alpha-VLLM/Lumina-Image-2.0) is a 2 billion parameter flow-based diffusion transformer capable of generating diverse images from text descriptions.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

@@ -1,4 +1,6 @@

|

||||

<!--Copyright 2024 Marigold authors and The HuggingFace Team. All rights reserved.

|

||||

<!--

|

||||

Copyright 2023-2025 Marigold Team, ETH Zürich. All rights reserved.

|

||||

Copyright 2024-2025 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

@@ -10,67 +12,120 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# Marigold Pipelines for Computer Vision Tasks

|

||||

# Marigold Computer Vision

|

||||

|

||||

|

||||

|

||||

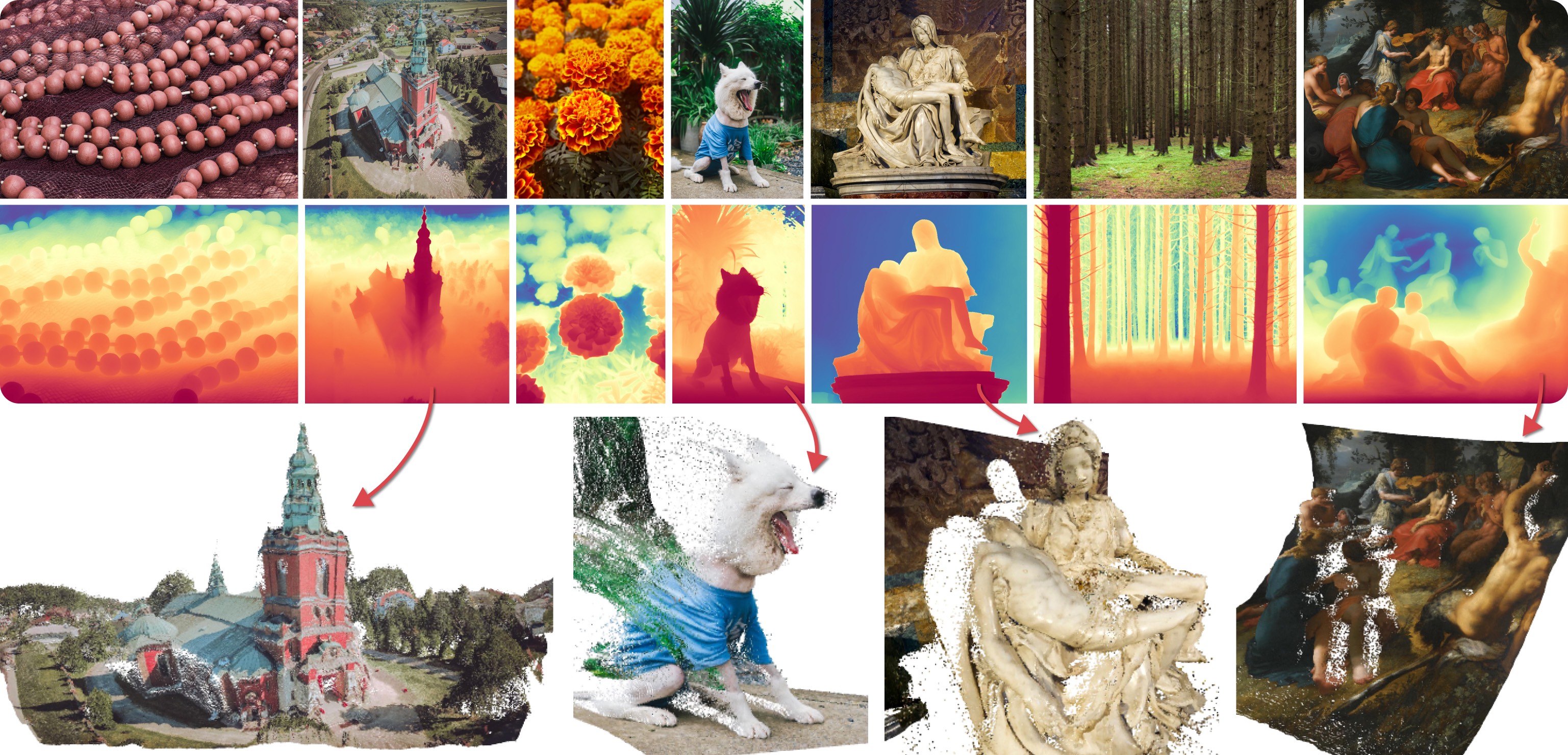

Marigold was proposed in [Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation](https://huggingface.co/papers/2312.02145), a CVPR 2024 Oral paper by [Bingxin Ke](http://www.kebingxin.com/), [Anton Obukhov](https://www.obukhov.ai/), [Shengyu Huang](https://shengyuh.github.io/), [Nando Metzger](https://nandometzger.github.io/), [Rodrigo Caye Daudt](https://rcdaudt.github.io/), and [Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

|

||||

The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks.

|

||||

Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above.

|

||||

Later,

|

||||