mirror of

https://github.com/huggingface/diffusers.git

synced 2025-12-07 04:54:47 +08:00

Compare commits

144 Commits

v0.19.0

...

test-clean

| Author | SHA1 | Date |
|---|---|---|
| | 59d2bd3799 | |
| | 24c5e7708b | |
| | cd21b965d1 | |
| | d185b5ed5f | |
| | 709a642827 | |
| | 0a0fe69aa6 | |
| | 124e76ddc6 | |
| | 05b0ec63bc | |
| | 4909b1e3ac | |
| | 052bf3280b | |
| | 80871ac597 | |
| | 6abc66ef28 | |
| | 38efac9f61 | |
| | 4f6399bedd | |
| | 6e1af3a777 | |
| | f22aad6e3a | |
| | ecded50ad5 | |
| | e34d9aa681 | |
| | 8d30d25794 | |
| | 1e0395e791 | |
| | 9141c1f9d5 | |
| | f75b8aa9dd | |
| | 7a24977ce3 | |
| | 74d902eb59 | |
| | d7c4ae619d | |
| | 67ea2b7afa | |
| | a10107f92b | |
| | d0c30cfd37 | |
| | 7c3e7fedcd | |
| | 029fb41695 | |
| | 852dc76d6d | |
| | 064f150813 | |
| | 5333f4c0ec | |
| | 3d08d8dc4e | |
| | bdc4c3265f | |
| | 4ff7264d9b | |
| | 5049599143 | |
| | 7b93c2a882 | |
| | a7de96505b | |
| | 351aab60e9 | |
| | da5ab51d54 | |
| | 5175d3d7a5 | |
| | a7508a76f0 | |
| | aaef41b5fe | |
| | 078df46bc9 | |
| | 15782fd506 | |
| | d93ca26893 | |
| | 32963c24c5 | |
| | 1b739e7344 | |
| | d67eba0f31 | |
| | 714bfed859 | |
| | d5983a6779 | |
| | 796c01534d | |
| | c8d86e9f0a | |
| | b28cd3fba0 | |
| | cd7071e750 | |
| | e31f38b5d6 | |
| | 3bd5e073cb | |
| | 3df52ba8dc | |
| | c697c5ab57 | |
| | 3fd45eb10f | |
| | 5cbcbe3c63 | |
| | 7b07f9812a | |
| | 16ad13b61d | |
| | da0e2fce38 | |
| | a67ff32301 | |
| | 3c1b4933bd | |
| | e731ae0ec8 | |
| | 6c5b5b260e | |
| | e7e3749498 | |
| | c7c0b57541 | |
| | c91272d631 | |
| | f0725c5845 | |
| | aef11cbf66 | |
| | 71c8224159 | |
| | 4367b8a300 | |
| | f4f854138d | |
| | e1b5b8ba13 | |
| | dff5ff35a9 | |
| | b2456717e6 | |
| | 2e69cf16fe | |
| | 9c29bc2df8 | |
| | 70d098540d | |
| | ea1fcc28a4 | |
| | 66de221409 | |
| | 06f73bd6d1 | |
| | c14c141b86 | |
| | 79ef9e528c | |
| | 801a5e2199 | |
| | 1edd0debaa | |
| | 29ece0db79 | |
| | 1a8843f93e | |
| | e391b789ac | |
| | d0b8de1262 | |
| | b9058754c5 | |
| | 777becda6b | |
| | 380bfd82c1 | |
| | 5989a85edb | |
| | 4188f3063a | |
| | 0b4430e840 | |
| | a74c995e7d | |
| | 85aa673bec | |
| | 1d2587bb34 | |
| | 372b58108e | |
| | 45171174b8 | |
| | 4c4fe042a7 | |
| | 47bf8e566c | |
| | 18fc40c169 | |
| | 615c04db15 | |
| | ae82a3eb34 | |
| | 816ca0048f | |
| | fef8d2f726 | |
| | 579b4b2020 | |
| | 6c5bd2a38d | |
| | 160474ac61 | |
| | c10861ee1b | |
| | 94b332c476 | |
| | 6f4355f89f | |
| | 05a1cb902c | |
| | c69526a3d5 | |
| | 6c49d542a3 | |
| | ba43ce3476 | |
| | ea5b0575f8 | |
| | 4f986fb28a | |
| | aae27262f4 | |
| | 34b5b63bb8 | |
| | 2b1786735e | |
| | 4a4cdd6b07 | |
| | b7b6d6138d | |
| | faa6cbc959 | |
| | 306a7bd047 | |
| | c7250f2b8a | |
| | 18b018c864 | |
| | 54fab2cd5f | |
| | 961173064d | |
| | 7d0d073261 | |
| | 01b6ec21fa | |
| | 92e5ddd295 | |
| | 1926331eaf | |
| | 5fd3dca5f3 | |
| | a2091b7071 | |
| | d8bc1a4e51 | |
| | 80c10d8245 | |
| | 20e92586c1 | |

57

.github/workflows/pr_tests.yml

vendored


@@ -113,3 +113,60 @@ jobs:
       with:
         name: pr_${{ matrix.config.report }}_test_reports
         path: reports
+
+  run_staging_tests:
+    strategy:
+      fail-fast: false
+      matrix:
+        config:
+          - name: Hub tests for models, schedulers, and pipelines
+            framework: hub_tests_pytorch
+            runner: docker-cpu
+            image: diffusers/diffusers-pytorch-cpu
+            report: torch_hub
+
+    name: ${{ matrix.config.name }}
+
+    runs-on: ${{ matrix.config.runner }}
+
+    container:
+      image: ${{ matrix.config.image }}
+      options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/
+
+    defaults:
+      run:
+        shell: bash
+
+    steps:
+    - name: Checkout diffusers
+      uses: actions/checkout@v3
+      with:
+        fetch-depth: 2
+
+    - name: Install dependencies
+      run: |
+        apt-get update && apt-get install libsndfile1-dev libgl1 -y
+        python -m pip install -e .[quality,test]
+
+    - name: Environment
+      run: |
+        python utils/print_env.py
+
+    - name: Run Hub tests for models, schedulers, and pipelines on a staging env
+      if: ${{ matrix.config.framework == 'hub_tests_pytorch' }}
+      run: |
+        HUGGINGFACE_CO_STAGING=true python -m pytest \
+          -m "is_staging_test" \
+          --make-reports=tests_${{ matrix.config.report }} \
+          tests
+
+    - name: Failure short reports
+      if: ${{ failure() }}
+      run: cat reports/tests_${{ matrix.config.report }}_failures_short.txt
+
+    - name: Test suite reports artifacts
+      if: ${{ always() }}
+      uses: actions/upload-artifact@v2
+      with:
+        name: pr_${{ matrix.config.report }}_test_reports
+        path: reports

2

Makefile


@@ -78,7 +78,7 @@ test:
 # Run tests for examples
 
 test-examples:
-	python -m pytest -n auto --dist=loadfile -s -v ./examples/pytorch/
+	python -m pytest -n auto --dist=loadfile -s -v ./examples/
 
 
 # Release stuff

@@ -90,7 +90,7 @@ The following design principles are followed:
 - To integrate new model checkpoints whose general architecture can be classified as an architecture that already exists in Diffusers, the existing model architecture shall be adapted to make it work with the new checkpoint. One should only create a new file if the model architecture is fundamentally different.
 - Models should be designed to be easily extendable to future changes. This can be achieved by limiting public function arguments, configuration arguments, and "foreseeing" future changes, *e.g.* it is usually better to add `string` "...type" arguments that can easily be extended to new future types instead of boolean `is_..._type` arguments. Only the minimum amount of changes shall be made to existing architectures to make a new model checkpoint work.
 - The model design is a difficult trade-off between keeping code readable and concise and supporting many model checkpoints. For most parts of the modeling code, classes shall be adapted for new model checkpoints, while there are some exceptions where it is preferred to add new classes to make sure the code is kept concise and
-readable longterm, such as [UNet blocks](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/unet_2d_blocks.py) and [Attention processors](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/cross_attention.py).
+readable longterm, such as [UNet blocks](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/unet_2d_blocks.py) and [Attention processors](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py).
 
 ### Schedulers
 
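
The model design principle quoted in this hunk (prefer a string `...type` argument over a boolean `is_..._type` flag) can be illustrated with a short sketch. The names `BlockConfig`, `norm_type` and `SUPPORTED_NORM_TYPES` are hypothetical and are not taken from Diffusers; the point is only that a string argument accepts a new variant by adding one allowed value, whereas a boolean would need a second flag.

```python
from dataclasses import dataclass

# Hypothetical set of variants, used only to illustrate the principle.
SUPPORTED_NORM_TYPES = ("group_norm", "layer_norm")


@dataclass
class BlockConfig:
    # A string "...type" argument: supporting a new variant later only
    # requires adding one more allowed value, not another boolean flag.
    norm_type: str = "group_norm"

    def __post_init__(self):
        if self.norm_type not in SUPPORTED_NORM_TYPES:
            raise ValueError(
                f"Unknown norm_type {self.norm_type!r}; "
                f"expected one of {SUPPORTED_NORM_TYPES}"
            )


def describe(config: BlockConfig) -> str:
    """Return a short human-readable description of the configured block."""
    return f"block with {config.norm_type}"
```

With a boolean such as `is_group_norm`, a third normalization variant could not be expressed without changing the public signature, which is the situation the principle tries to avoid.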

@@ -1,6 +1,6 @@
 <p align="center">
     <br>
-    <img src="https://github.com/huggingface/diffusers/blob/main/docs/source/en/imgs/diffusers_library.jpg" width="400"/>
+    <img src="https://raw.githubusercontent.com/huggingface/diffusers/main/docs/source/en/imgs/diffusers_library.jpg" width="400"/>
     <br>
 <p>
 <p align="center">

@@ -13,6 +13,8 @@
     title: Overview
   - local: using-diffusers/write_own_pipeline
     title: Understanding models and schedulers
+  - local: tutorials/autopipeline
+    title: AutoPipeline
   - local: tutorials/basic_training
     title: Train a diffusion model
   title: Tutorials
@@ -30,6 +32,8 @@
       title: Load safetensors
     - local: using-diffusers/other-formats
       title: Load different Stable Diffusion formats
+    - local: using-diffusers/push_to_hub
+      title: Push files to the Hub
     title: Loading & Hub
   - sections:
     - local: using-diffusers/pipeline_overview
@@ -48,6 +52,8 @@
       title: Textual inversion
     - local: training/distributed_inference
       title: Distributed inference with multiple GPUs
+    - local: using-diffusers/distilled_sd
+      title: Distilled Stable Diffusion inference
     - local: using-diffusers/reusing_seeds
       title: Improve image quality with deterministic generation
     - local: using-diffusers/control_brightness
@@ -61,7 +67,7 @@
     - local: using-diffusers/stable_diffusion_jax_how_to
       title: Stable Diffusion in JAX/Flax
     - local: using-diffusers/weighted_prompts
-      title: Weighting Prompts
+      title: Prompt weighting
     title: Pipelines for Inference
   - sections:
     - local: training/overview
@@ -162,6 +168,8 @@
       title: AutoencoderKL
     - local: api/models/asymmetricautoencoderkl
       title: AsymmetricAutoencoderKL
+    - local: api/models/autoencoder_tiny
+      title: Tiny AutoEncoder
     - local: api/models/transformer2d
       title: Transformer2D
     - local: api/models/transformer_temporal
@@ -182,12 +190,16 @@
       title: Audio Diffusion
     - local: api/pipelines/audioldm
       title: AudioLDM
+    - local: api/pipelines/audioldm2
+      title: AudioLDM 2
     - local: api/pipelines/auto_pipeline
       title: AutoPipeline
     - local: api/pipelines/consistency_models
       title: Consistency Models
     - local: api/pipelines/controlnet
       title: ControlNet
+    - local: api/pipelines/controlnet_sdxl
+      title: ControlNet with Stable Diffusion XL
     - local: api/pipelines/cycle_diffusion
       title: Cycle Diffusion
     - local: api/pipelines/dance_diffusion
@@ -259,6 +271,8 @@
         title: LDM3D Text-to-(RGB, Depth)
       - local: api/pipelines/stable_diffusion/adapter
         title: Stable Diffusion T2I-adapter
+      - local: api/pipelines/stable_diffusion/gligen
+        title: GLIGEN (Grounded Language-to-Image Generation)
       title: Stable Diffusion
     - local: api/pipelines/stable_unclip
       title: Stable unCLIP
@@ -287,49 +301,49 @@
     - local: api/schedulers/overview
       title: Overview
     - local: api/schedulers/cm_stochastic_iterative
-      title: Consistency Model Multistep Scheduler
-    - local: api/schedulers/ddim
-      title: DDIM
+      title: CMStochasticIterativeScheduler
     - local: api/schedulers/ddim_inverse
-      title: DDIMInverse
+      title: DDIMInverseScheduler
+    - local: api/schedulers/ddim
+      title: DDIMScheduler
     - local: api/schedulers/ddpm
-      title: DDPM
+      title: DDPMScheduler
     - local: api/schedulers/deis
-      title: DEIS
-    - local: api/schedulers/dpm_discrete
-      title: DPM Discrete Scheduler
-    - local: api/schedulers/dpm_discrete_ancestral
-      title: DPM Discrete Scheduler with ancestral sampling
+      title: DEISMultistepScheduler
+    - local: api/schedulers/multistep_dpm_solver_inverse
+      title: DPMSolverMultistepInverse
+    - local: api/schedulers/multistep_dpm_solver
+      title: DPMSolverMultistepScheduler
     - local: api/schedulers/dpm_sde
       title: DPMSolverSDEScheduler
-    - local: api/schedulers/euler_ancestral
-      title: Euler Ancestral Scheduler
-    - local: api/schedulers/euler
-      title: Euler scheduler
-    - local: api/schedulers/heun
-      title: Heun Scheduler
-    - local: api/schedulers/multistep_dpm_solver_inverse
-      title: Inverse Multistep DPM-Solver
-    - local: api/schedulers/ipndm
-      title: IPNDM
-    - local: api/schedulers/lms_discrete
-      title: Linear Multistep
-    - local: api/schedulers/multistep_dpm_solver
-      title: Multistep DPM-Solver
-    - local: api/schedulers/pndm
-      title: PNDM
-    - local: api/schedulers/repaint
-      title: RePaint Scheduler
     - local: api/schedulers/singlestep_dpm_solver
-      title: Singlestep DPM-Solver
+      title: DPMSolverSinglestepScheduler
+    - local: api/schedulers/euler_ancestral
+      title: EulerAncestralDiscreteScheduler
+    - local: api/schedulers/euler
+      title: EulerDiscreteScheduler
+    - local: api/schedulers/heun
+      title: HeunDiscreteScheduler
+    - local: api/schedulers/ipndm
+      title: IPNDMScheduler
     - local: api/schedulers/stochastic_karras_ve
-      title: Stochastic Kerras VE
+      title: KarrasVeScheduler
+    - local: api/schedulers/dpm_discrete_ancestral
+      title: KDPM2AncestralDiscreteScheduler
+    - local: api/schedulers/dpm_discrete
+      title: KDPM2DiscreteScheduler
+    - local: api/schedulers/lms_discrete
+      title: LMSDiscreteScheduler
+    - local: api/schedulers/pndm
+      title: PNDMScheduler
+    - local: api/schedulers/repaint
+      title: RePaintScheduler
+    - local: api/schedulers/score_sde_ve
+      title: ScoreSdeVeScheduler
+    - local: api/schedulers/score_sde_vp
+      title: ScoreSdeVpScheduler
     - local: api/schedulers/unipc
       title: UniPCMultistepScheduler
-    - local: api/schedulers/score_sde_ve
-      title: VE-SDE
-    - local: api/schedulers/score_sde_vp
-      title: VP-SDE
     - local: api/schedulers/vq_diffusion
       title: VQDiffusionScheduler
     title: Schedulers

45

docs/source/en/api/models/autoencoder_tiny.md

Normal file


@@ -0,0 +1,45 @@
# Tiny AutoEncoder

Tiny AutoEncoder for Stable Diffusion (TAESD) was introduced in [madebyollin/taesd](https://github.com/madebyollin/taesd) by Ollin Boer Bohan. It is a tiny distilled version of Stable Diffusion's VAE that can quickly decode the latents in a [`StableDiffusionPipeline`] or [`StableDiffusionXLPipeline`] almost instantly.

To use with Stable Diffusion v-2.1:

```python
import torch
from diffusers import DiffusionPipeline, AutoencoderTiny

pipe = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-2-1-base", torch_dtype=torch.float16
)
pipe.vae = AutoencoderTiny.from_pretrained("madebyollin/taesd", torch_dtype=torch.float16)
pipe = pipe.to("cuda")

prompt = "slice of delicious New York-style berry cheesecake"
image = pipe(prompt, num_inference_steps=25).images[0]
image.save("cheesecake.png")
```

To use with Stable Diffusion XL 1.0

```python
import torch
from diffusers import DiffusionPipeline, AutoencoderTiny

pipe = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16
)
pipe.vae = AutoencoderTiny.from_pretrained("madebyollin/taesdxl", torch_dtype=torch.float16)
pipe = pipe.to("cuda")

prompt = "slice of delicious New York-style berry cheesecake"
image = pipe(prompt, num_inference_steps=25).images[0]
image.save("cheesecake_sdxl.png")
```

## AutoencoderTiny

[[autodoc]] AutoencoderTiny

## AutoencoderTinyOutput

[[autodoc]] models.autoencoder_tiny.AutoencoderTinyOutput

@@ -9,4 +9,8 @@ All models are built from the base [`ModelMixin`] class which is a [`torch.nn.mo
 
 ## FlaxModelMixin
 
-[[autodoc]] FlaxModelMixin
+[[autodoc]] FlaxModelMixin
+
+## PushToHubMixin
+
+[[autodoc]] utils.PushToHubMixin

@@ -46,6 +46,5 @@ Make sure to check out the Schedulers [guide](/using-diffusers/schedulers) to le
 	- all
 	- __call__
 
-## StableDiffusionPipelineOutput
-
-[[autodoc]] pipelines.stable_diffusion.StableDiffusionPipelineOutput
+## AudioPipelineOutput
+[[autodoc]] pipelines.AudioPipelineOutput

93

docs/source/en/api/pipelines/audioldm2.md

Normal file


@@ -0,0 +1,93 @@
<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# AudioLDM 2

AudioLDM 2 was proposed in [AudioLDM 2: Learning Holistic Audio Generation with Self-supervised Pretraining](https://arxiv.org/abs/2308.05734)
by Haohe Liu et al. AudioLDM 2 takes a text prompt as input and predicts the corresponding audio. It can generate
text-conditional sound effects, human speech and music.

Inspired by [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion/overview), AudioLDM 2
is a text-to-audio _latent diffusion model (LDM)_ that learns continuous audio representations from text embeddings. Two
text encoder models are used to compute the text embeddings from a prompt input: the text-branch of [CLAP](https://huggingface.co/docs/transformers/main/en/model_doc/clap)
and the encoder of [Flan-T5](https://huggingface.co/docs/transformers/main/en/model_doc/flan-t5). These text embeddings
are then projected to a shared embedding space by an [AudioLDM2ProjectionModel](https://huggingface.co/docs/diffusers/main/api/pipelines/audioldm2#diffusers.AudioLDM2ProjectionModel).
A [GPT2](https://huggingface.co/docs/transformers/main/en/model_doc/gpt2) _language model (LM)_ is used to auto-regressively
predict eight new embedding vectors, conditional on the projected CLAP and Flan-T5 embeddings. The generated embedding
vectors and Flan-T5 text embeddings are used as cross-attention conditioning in the LDM. The [UNet](https://huggingface.co/docs/diffusers/main/en/api/pipelines/audioldm2#diffusers.AudioLDM2UNet2DConditionModel)
of AudioLDM 2 is unique in the sense that it takes **two** cross-attention embeddings, as opposed to one cross-attention
conditioning, as in most other LDMs.

The abstract of the paper is the following:

*Although audio generation shares commonalities across different types of audio, such as speech, music, and sound effects, designing models for each type requires careful consideration of specific objectives and biases that can significantly differ from those of other types. To bring us closer to a unified perspective of audio generation, this paper proposes a framework that utilizes the same learning method for speech, music, and sound effect generation. Our framework introduces a general representation of audio, called language of audio (LOA). Any audio can be translated into LOA based on AudioMAE, a self-supervised pre-trained representation learning model. In the generation process, we translate any modalities into LOA by using a GPT-2 model, and we perform self-supervised audio generation learning with a latent diffusion model conditioned on LOA. The proposed framework naturally brings advantages such as in-context learning abilities and reusable self-supervised pretrained AudioMAE and latent diffusion models. Experiments on the major benchmarks of text-to-audio, text-to-music, and text-to-speech demonstrate new state-of-the-art or competitive performance to previous approaches.*

This pipeline was contributed by [sanchit-gandhi](https://huggingface.co/sanchit-gandhi). The original codebase can be
found at [haoheliu/audioldm2](https://github.com/haoheliu/audioldm2).

## Tips

### Choosing a checkpoint

AudioLDM2 comes in three variants. Two of these checkpoints are applicable to the general task of text-to-audio
generation. The third checkpoint is trained exclusively on text-to-music generation.

All checkpoints share the same model size for the text encoders and VAE. They differ in the size and depth of the UNet.
See table below for details on the three checkpoints:

| Checkpoint | Task | UNet Model Size | Total Model Size | Training Data / h |
|-----------------------------------------------------------------|---------------|-----------------|------------------|-------------------|
| [audioldm2](https://huggingface.co/cvssp/audioldm2) | Text-to-audio | 350M | 1.1B | 1150k |
| [audioldm2-large](https://huggingface.co/cvssp/audioldm2-large) | Text-to-audio | 750M | 1.5B | 1150k |
| [audioldm2-music](https://huggingface.co/cvssp/audioldm2-music) | Text-to-music | 350M | 1.1B | 665k |
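
As a small illustration of choosing among these checkpoints, the table can be encoded as a lookup keyed by repository id. This is only a sketch: the repository ids and sizes are copied from the table, while the `CHECKPOINTS` dictionary and the `checkpoints_for` helper are hypothetical names and not part of Diffusers.

```python
from __future__ import annotations

# Values copied from the checkpoint table; sizes are kept as the strings listed there.
CHECKPOINTS = {
    "cvssp/audioldm2": {"task": "text-to-audio", "unet": "350M", "total": "1.1B"},
    "cvssp/audioldm2-large": {"task": "text-to-audio", "unet": "750M", "total": "1.5B"},
    "cvssp/audioldm2-music": {"task": "text-to-music", "unet": "350M", "total": "1.1B"},
}


def checkpoints_for(task: str) -> list[str]:
    """Return the repository ids listed for the given task, sorted by name."""
    return sorted(name for name, info in CHECKPOINTS.items() if info["task"] == task)
```

For example, `checkpoints_for("text-to-music")` yields the single music checkpoint, while `"text-to-audio"` yields the base and large variants.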

### Constructing a prompt

* Descriptive prompt inputs work best: use adjectives to describe the sound (e.g. "high quality" or "clear") and make the prompt context specific (e.g. "water stream in a forest" instead of "stream").
* It's best to use general terms like "cat" or "dog" instead of specific names or abstract objects the model may not be familiar with.
* Using a **negative prompt** can significantly improve the quality of the generated waveform, by guiding the generation away from terms that correspond to poor quality audio. Try using a negative prompt of "Low quality."

### Controlling inference

* The _quality_ of the predicted audio sample can be controlled by the `num_inference_steps` argument; higher steps give higher quality audio at the expense of slower inference.
* The _length_ of the predicted audio sample can be controlled by varying the `audio_length_in_s` argument.

### Evaluating generated waveforms:

* The quality of the generated waveforms can vary significantly based on the seed. Try generating with different seeds until you find a satisfactory generation
* Multiple waveforms can be generated in one go: set `num_waveforms_per_prompt` to a value greater than 1. Automatic scoring will be performed between the generated waveforms and prompt text, and the audios ranked from best to worst accordingly.
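
The ranking step in the last tip can be pictured with a toy sketch. The document does not say how the score is computed, so this example assumes cosine similarity between a text embedding and one embedding per generated waveform; the function names and the 2-D vectors are made up for illustration and do not reflect the pipeline's internals.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_similarity(text_embedding, audio_embeddings):
    """Return candidate indices ordered from best to worst match with the text."""
    scores = [cosine_similarity(text_embedding, e) for e in audio_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Toy embeddings: candidate 1 points almost in the same direction as the text.
text = [1.0, 0.0]
candidates = [[0.0, 1.0], [1.0, 0.1], [0.5, 0.5]]
order = rank_by_similarity(text, candidates)
```

Here `order` lists the candidate closest to the text first, which is the "best to worst" ordering the tip describes.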

The following example demonstrates how to construct good music generation using the aforementioned tips: [example](https://huggingface.co/docs/diffusers/main/en/api/pipelines/audioldm2#diffusers.AudioLDM2Pipeline.__call__.example).

<Tip>

Make sure to check out the Schedulers [guide](/using-diffusers/schedulers) to learn how to explore the tradeoff between
scheduler speed and quality, and see the [reuse components across pipelines](/using-diffusers/loading#reuse-components-across-pipelines)
section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

## AudioLDM2Pipeline
[[autodoc]] AudioLDM2Pipeline
	- all
	- __call__

## AudioLDM2ProjectionModel
[[autodoc]] AudioLDM2ProjectionModel
	- forward

## AudioLDM2UNet2DConditionModel
[[autodoc]] AudioLDM2UNet2DConditionModel
	- forward

## AudioPipelineOutput
[[autodoc]] pipelines.AudioPipelineOutput

@@ -12,35 +12,41 @@ specific language governing permissions and limitations under the License.
 
 # AutoPipeline
 
-In many cases, one checkpoint can be used for multiple tasks. For example, you may be able to use the same checkpoint for Text-to-Image, Image-to-Image, and Inpainting. However, you'll need to know the pipeline class names linked to your checkpoint.
+`AutoPipeline` is designed to:
 
-AutoPipeline is designed to make it easy for you to use multiple pipelines in your workflow. We currently provide 3 AutoPipeline classes to perform three different tasks, i.e. [`AutoPipelineForText2Image`], [`AutoPipelineForImage2Image`], and [`AutoPipelineForInpainting`]. You'll need to choose the AutoPipeline class based on the task you want to perform and use it to automatically retrieve the relevant pipeline given the name/path to the pre-trained weights.
+1. make it easy for you to load a checkpoint for a task without knowing the specific pipeline class to use
+2. use multiple pipelines in your workflow
 
-For example, to perform Image-to-Image with the SD1.5 checkpoint, you can do
+Based on the task, the `AutoPipeline` class automatically retrieves the relevant pipeline given the name or path to the pretrained weights with the `from_pretrained()` method.
 
-```python
-from diffusers import PipelineForImageToImage
+To seamlessly switch between tasks with the same checkpoint without reallocating additional memory, use the `from_pipe()` method to transfer the components from the original pipeline to the new one.
 
-pipe_i2i = PipelineForImageoImage.from_pretrained("runwayml/stable-diffusion-v1-5")
+```py
+from diffusers import AutoPipelineForText2Image
+import torch
+
+pipeline = AutoPipelineForText2Image.from_pretrained(
+    "runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True
+).to("cuda")
+prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"
+
+image = pipeline(prompt, num_inference_steps=25).images[0]
 ```
 
-It will also help you switch between tasks seamlessly using the same checkpoint without reallocating additional memory. For example, to re-use the Image-to-Image pipeline we just created for inpainting, you can do
+<Tip>
 
-```python
-from diffusers import PipelineForInpainting
+Check out the [AutoPipeline](/tutorials/autopipeline) tutorial to learn how to use this API!
 
-pipe_inpaint = AutoPipelineForInpainting.from_pipe(pipe_i2i)
-```
-All the components will be transferred to the inpainting pipeline with zero cost.
+</Tip>
 
+`AutoPipeline` supports text-to-image, image-to-image, and inpainting for the following diffusion models:
 
-Currently AutoPipeline support the Text-to-Image, Image-to-Image, and Inpainting tasks for below diffusion models:
-- [stable Diffusion](./stable_diffusion)
-- [Stable Diffusion Controlnet](./api/pipelines/controlnet)
-- [Stable Diffusion XL](./stable_diffusion/stable_diffusion_xl)
-- [IF](./if)
-- [Kandinsky](./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)(./kandinsky)
-- [Kandinsky 2.2]()(./kandinsky)
+- [Stable Diffusion](./stable_diffusion)
+- [ControlNet](./api/pipelines/controlnet)
+- [Stable Diffusion XL (SDXL)](./stable_diffusion/stable_diffusion_xl)
+- [DeepFloyd IF](./if)
+- [Kandinsky](./kandinsky)
+- [Kandinsky 2.2](./kandinsky#kandinsky-22)
 
 
 ## AutoPipelineForText2Image
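
The `from_pipe()` behaviour described in this hunk (switching tasks without reallocating memory) amounts to building a second pipeline object around the same component objects. The sketch below uses hypothetical toy classes rather than the Diffusers API, and assumes only that the components are shared by reference instead of being copied or reloaded.

```python
class ToyPipeline:
    """Hypothetical stand-in for a pipeline; not the Diffusers implementation."""

    def __init__(self, components: dict):
        self.components = components

    @classmethod
    def from_pipe(cls, other: "ToyPipeline") -> "ToyPipeline":
        # Build a new pipeline around the *same* component objects: the dict is
        # new, but the values are shared references, so nothing is reloaded.
        return cls(dict(other.components))


class ToyText2Image(ToyPipeline):
    pass


class ToyInpainting(ToyPipeline):
    pass


text2img = ToyText2Image({"unet": object(), "vae": object()})
inpaint = ToyInpainting.from_pipe(text2img)
```

Because both pipelines hold references to the same `unet` and `vae` objects, creating the second pipeline costs only a small dictionary, which is the intuition behind the "without reallocating additional memory" wording.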

162

docs/source/en/api/pipelines/controlnet_sdxl.md

Normal file


@@ -0,0 +1,162 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# ControlNet with Stable Diffusion XL

|

||||

|

||||

[Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang and Maneesh Agrawala.

|

||||

|

||||

Using a pretrained model, we can provide control images (for example, a depth map) to control Stable Diffusion text-to-image generation so that it follows the structure of the depth image and fills in the details.

|

||||

|

||||

The abstract from the paper is:

|

||||

|

||||

*We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions. The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal devices. Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data. We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc. This may enrich the methods to control large diffusion models and further facilitate related applications.*

|

||||

|

||||

We provide support using ControlNets with [Stable Diffusion XL](./stable_diffusion/stable_diffusion_xl.md) (SDXL).

|

||||

|

||||

You can find numerous SDXL ControlNet checkpoints from [this link](https://huggingface.co/models?other=stable-diffusion-xl&other=controlnet). There are some smaller ControlNet checkpoints too:

|

||||

|

||||

* [controlnet-canny-sdxl-1.0-small](https://huggingface.co/diffusers/controlnet-canny-sdxl-1.0-small)

|

||||

* [controlnet-canny-sdxl-1.0-mid](https://huggingface.co/diffusers/controlnet-canny-sdxl-1.0-mid)

|

||||

* [controlnet-depth-sdxl-1.0-small](https://huggingface.co/diffusers/controlnet-depth-sdxl-1.0-small)

|

||||

* [controlnet-depth-sdxl-1.0-mid](https://huggingface.co/diffusers/controlnet-depth-sdxl-1.0-mid)

|

||||

|

||||

We also encourage you to train custom ControlNets; we provide a [training script](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/README_sdxl.md) for this.

|

||||

|

||||

You can find some results below:

|

||||

|

||||

<img src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/sd_xl/sdxl_controlnet_canny_grid.png" width=600/>

|

||||

|

||||

🚨 At the time of this writing, many of these SDXL ControlNet checkpoints are experimental and there is a lot of room for improvement. We encourage our users to provide feedback. 🚨

|

||||

|

||||

## MultiControlNet

|

||||

|

||||

You can compose multiple ControlNet conditionings from different image inputs to create a *MultiControlNet*. To get better results, it is often helpful to:

|

||||

|

||||

1. mask conditionings such that they don't overlap (for example, mask the area of a canny image where the pose conditioning is located)

|

||||

2. experiment with the [`controlnet_conditioning_scale`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/controlnet#diffusers.StableDiffusionControlNetPipeline.__call__.controlnet_conditioning_scale) parameter to determine how much weight to assign to each conditioning input
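To make the role of `controlnet_conditioning_scale` more concrete, here is a minimal pure-Python sketch of the underlying idea, assuming each ControlNet contributes a residual that is multiplied by its scale before the contributions are summed. The helper `combine_residuals` and the toy values are hypothetical and are not part of the `diffusers` API.

```python
from typing import List, Sequence


def combine_residuals(
    residuals: Sequence[Sequence[float]], scales: Sequence[float]
) -> List[float]:
    """Sum per-ControlNet residuals, each weighted by its conditioning scale.

    Hypothetical helper for illustration only; real residuals are tensors.
    """
    if len(residuals) != len(scales):
        raise ValueError("need exactly one scale per conditioning input")
    combined = [0.0] * len(residuals[0])
    for residual, scale in zip(residuals, scales):
        for i, value in enumerate(residual):
            combined[i] += scale * value
    return combined


# Toy residuals standing in for the pose and canny ControlNet outputs.
pose_residual = [1.0, 2.0, 3.0]
canny_residual = [10.0, 20.0, 30.0]

# The pose conditioning keeps full weight, the canny conditioning is halved.
combined = combine_residuals([pose_residual, canny_residual], scales=[1.0, 0.5])
print(combined)
```

Lowering one scale reduces how strongly that conditioning steers the result, which is why it is worth trying a few values per input.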

|

||||

|

||||

In this example, you'll combine a canny image and a human pose estimation image to generate a new image.

|

||||

|

||||

Prepare the canny image conditioning:

|

||||

|

||||

```py
from diffusers.utils import load_image
from PIL import Image
import numpy as np
import cv2

canny_image = load_image(
    "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/landscape.png"
)
canny_image = np.array(canny_image)

low_threshold = 100
high_threshold = 200

canny_image = cv2.Canny(canny_image, low_threshold, high_threshold)

# zero out middle columns of image where pose will be overlaid
zero_start = canny_image.shape[1] // 4
zero_end = zero_start + canny_image.shape[1] // 2
canny_image[:, zero_start:zero_end] = 0

# replicate the single edge channel into three channels
canny_image = canny_image[:, :, None]
canny_image = np.concatenate([canny_image, canny_image, canny_image], axis=2)
canny_image = Image.fromarray(canny_image).resize((1024, 1024))
```
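The column arithmetic used for the mask can be checked on a tiny example. The sketch below uses plain Python lists instead of NumPy arrays; the helpers `middle_band` and `mask_middle_columns` are hypothetical names introduced only for illustration.

```python
from typing import List, Tuple


def middle_band(width: int) -> Tuple[int, int]:
    """Return the [start, end) column range covering the middle half of the width."""
    zero_start = width // 4
    zero_end = zero_start + width // 2
    return zero_start, zero_end


def mask_middle_columns(rows: List[List[int]]) -> List[List[int]]:
    """Zero out the middle band of every row, leaving the outer quarters untouched."""
    start, end = middle_band(len(rows[0]))
    return [[0 if start <= x < end else v for x, v in enumerate(row)] for row in rows]


# A 2x8 "edge map" where every pixel is an edge (255).
edges = [[255] * 8 for _ in range(2)]
masked = mask_middle_columns(edges)
print(middle_band(8))
print(masked[0])
```

The same rule removes the middle half of the columns at any image width, leaving room for the pose conditioning in the center.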

|

||||

|

||||

<div class="flex gap-4">

|

||||

<div>

|

||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/landscape.png"/>

|

||||

<figcaption class="mt-2 text-center text-sm text-gray-500">original image</figcaption>

|

||||

</div>

|

||||

<div>

|

||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/controlnet/landscape_canny_masked.png"/>

|

||||

<figcaption class="mt-2 text-center text-sm text-gray-500">canny image</figcaption>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

Prepare the human pose estimation conditioning:

|

||||

|

||||

```py
from controlnet_aux import OpenposeDetector
from diffusers.utils import load_image

openpose = OpenposeDetector.from_pretrained("lllyasviel/ControlNet")

openpose_image = load_image(
    "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/person.png"
)
openpose_image = openpose(openpose_image).resize((1024, 1024))
```

|

||||

|

||||

<div class="flex gap-4">

|

||||

<div>

|

||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/person.png"/>

|

||||

<figcaption class="mt-2 text-center text-sm text-gray-500">original image</figcaption>

|

||||

</div>

|

||||

<div>

|

||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/controlnet/person_pose.png"/>

|

||||

<figcaption class="mt-2 text-center text-sm text-gray-500">human pose image</figcaption>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

Load a list of ControlNet models that correspond to each conditioning, and pass them to the [`StableDiffusionXLControlNetPipeline`]. Use the faster [`UniPCMultistepScheduler`] and enable model offloading to reduce memory usage.

|

||||

|

||||

```py
from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel, AutoencoderKL, UniPCMultistepScheduler
import torch

controlnets = [
    ControlNetModel.from_pretrained(
        "thibaud/controlnet-openpose-sdxl-1.0", torch_dtype=torch.float16, use_safetensors=True
    ),
    ControlNetModel.from_pretrained("diffusers/controlnet-canny-sdxl-1.0", torch_dtype=torch.float16, use_safetensors=True),
]

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16, use_safetensors=True)
pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", controlnet=controlnets, vae=vae, torch_dtype=torch.float16, use_safetensors=True
)
pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
pipe.enable_model_cpu_offload()
```

|

||||

|

||||

Now you can pass your prompt (and an optional negative prompt if you're using one), the canny image, and the pose image to the pipeline:

|

||||

|

||||

```py
prompt = "a giant standing in a fantasy landscape, best quality"
negative_prompt = "monochrome, lowres, bad anatomy, worst quality, low quality"

generator = torch.manual_seed(1)

# order must match the order of the ControlNets: pose first, then canny
images = [openpose_image, canny_image]

# three images are generated; keep the first one
image = pipe(
    prompt,
    image=images,
    num_inference_steps=25,
    generator=generator,
    negative_prompt=negative_prompt,
    num_images_per_prompt=3,
    controlnet_conditioning_scale=[1.0, 0.8],
).images[0]
```

|

||||

|

||||

<div class="flex justify-center">

|

||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/multicontrolnet.png"/>

|

||||

</div>

|

||||

|

||||

## StableDiffusionXLControlNetPipeline

|

||||

[[autodoc]] StableDiffusionXLControlNetPipeline

|

||||

- all

|

||||

- __call__

|

||||

@@ -105,6 +105,30 @@ One cheeseburger monster coming up! Enjoy!

|

||||

|

||||

|

||||

|

||||

<Tip>

|

||||

|

||||

We also provide an end-to-end Kandinsky pipeline [`KandinskyCombinedPipeline`], which combines both the prior pipeline and text-to-image pipeline, and lets you perform inference in a single step. You can create the combined pipeline with the [`~AutoPipelineForText2Image.from_pretrained`] method:

|

||||

|

||||

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained(
    "kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16
)
pipe.enable_model_cpu_offload()
```

|

||||

|

||||

Under the hood, it will automatically load both [`KandinskyPriorPipeline`] and [`KandinskyPipeline`]. To generate images, you no longer need to call both pipelines and pass the outputs from one to another. You only need to call the combined pipeline once. You can set different `guidance_scale` and `num_inference_steps` for the prior pipeline with the `prior_guidance_scale` and `prior_num_inference_steps` arguments.

|

||||

|

||||

```python
prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, guidance_scale=4.0, height=768, width=768).images[0]
```

|

||||

</Tip>

|

||||

|

||||

The Kandinsky model works extremely well with creative prompts. Here is some of the amazing art that can be created using the exact same process but with different prompts.

|

||||

|

||||

```python

|

||||

@@ -187,6 +211,34 @@ out.images[0].save("fantasy_land.png")

|

||||

|

||||

|

||||

|

||||

<Tip>

|

||||

|

||||

You can also use the [`KandinskyImg2ImgCombinedPipeline`] for end-to-end image-to-image generation with Kandinsky 2.1:

|

||||

|

||||

```python
from diffusers import AutoPipelineForImage2Image
import torch
import requests
from io import BytesIO
from PIL import Image
import os

pipe = AutoPipelineForImage2Image.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()

prompt = "A fantasy landscape, Cinematic lighting"
negative_prompt = "low quality, bad quality"

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

response = requests.get(url)
original_image = Image.open(BytesIO(response.content)).convert("RGB")
original_image.thumbnail((768, 768))

image = pipe(prompt=prompt, image=original_image, strength=0.3).images[0]
```

|

||||

</Tip>

|

||||

|

||||

### Text Guided Inpainting Generation

|

||||

|

||||

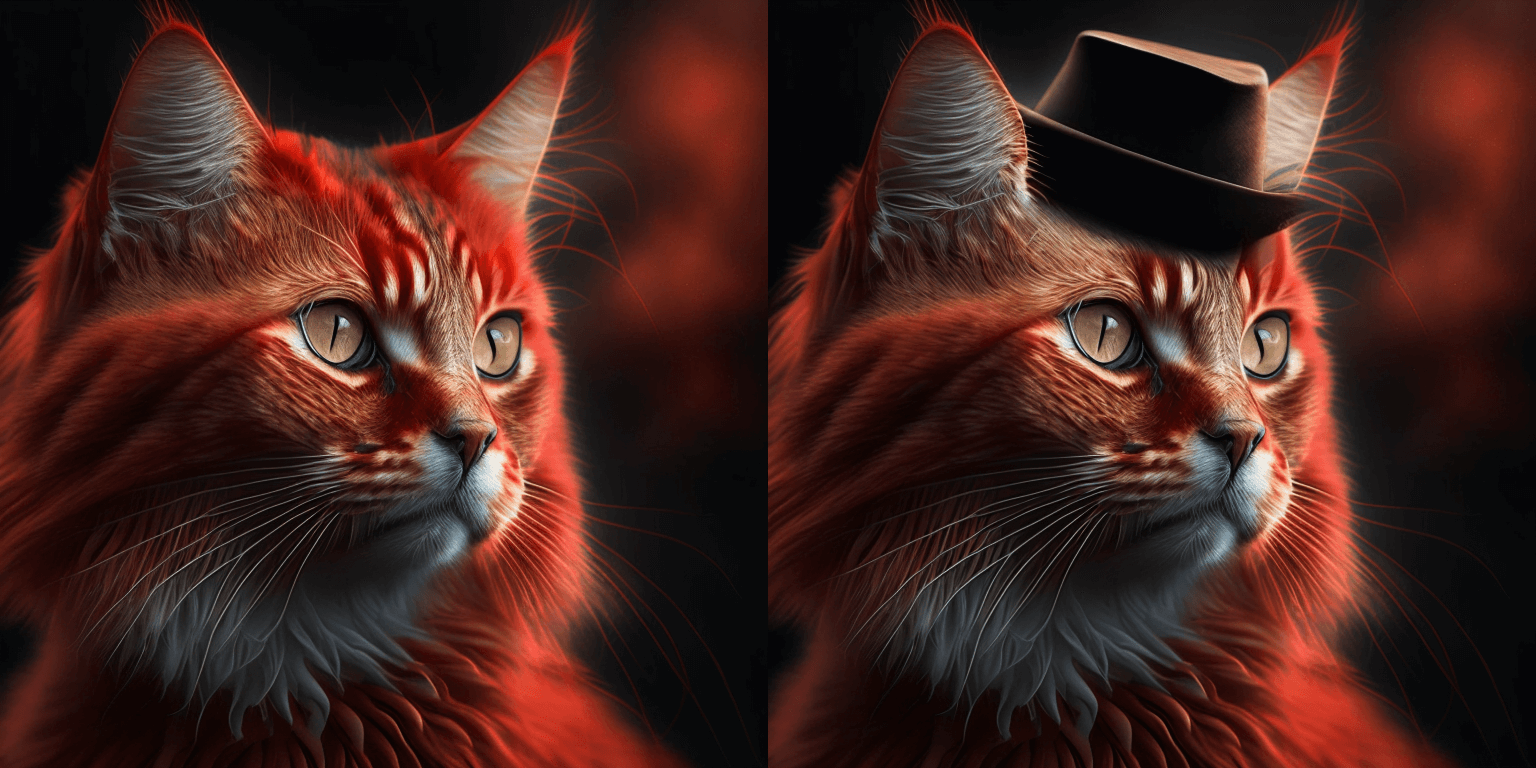

You can use [`KandinskyInpaintPipeline`] to edit images. In this example, we will add a hat to the portrait of a cat.

|

||||

@@ -231,6 +283,33 @@ image.save("cat_with_hat.png")

|

||||

```

|

||||

|

||||

|

||||

<Tip>

|

||||

|

||||

To use the [`KandinskyInpaintCombinedPipeline`] to perform end-to-end image inpainting generation, you can run the code below instead:

|

||||

|

||||

```python
from diffusers import AutoPipelineForInpainting
import torch

pipe = AutoPipelineForInpainting.from_pretrained("kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()
# reuses `prompt`, `original_image`, and `mask` from the previous example
image = pipe(prompt=prompt, image=original_image, mask_image=mask).images[0]
```

|

||||

</Tip>

|

||||

|

||||

🚨🚨🚨 __Breaking change for Kandinsky Mask Inpainting__ 🚨🚨🚨

|

||||

|

||||

We introduced a breaking change to the Kandinsky inpainting pipeline in the following pull request: https://github.com/huggingface/diffusers/pull/4207. Previously we accepted a mask format where black pixels represent the masked-out area. This is inconsistent with all other pipelines in diffusers. We have changed the mask format in Kandinsky and now use white pixels instead.

|

||||

Please upgrade your inpainting code to follow the above. If you are using Kandinsky Inpaint in production, you now need to change the mask to:

|

||||

|

||||

```python
# For PIL input
import PIL.ImageOps
mask = PIL.ImageOps.invert(mask)

# For PyTorch and NumPy input
mask = 1 - mask
```
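The effect of the inversion on individual pixel values can be sketched with plain lists. This assumes 8-bit grayscale values in the range 0 to 255 for image masks and float values in the range 0 to 1 for array masks; the helper names are hypothetical and only illustrate the arithmetic.

```python
from typing import List


def invert_uint8_mask(mask: List[List[int]]) -> List[List[int]]:
    """Invert an 8-bit mask: black (0) becomes white (255) and vice versa."""
    return [[255 - v for v in row] for row in mask]


def invert_float_mask(mask: List[List[float]]) -> List[List[float]]:
    """Invert a float mask in [0, 1], mirroring `mask = 1 - mask`."""
    return [[1.0 - v for v in row] for row in mask]


# Old convention: black (0) marks the area to repaint.
old_uint8 = [[0, 255], [255, 0]]
old_float = [[0.0, 1.0], [1.0, 0.0]]

# New convention: white marks the area to repaint.
new_uint8 = invert_uint8_mask(old_uint8)
new_float = invert_float_mask(old_float)
print(new_uint8)
print(new_float)
```

Applying the inversion twice returns the original mask, so it is worth checking that a mask is not converted more than once.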

|

||||

|

||||

### Interpolate

|

||||

|

||||

The [`KandinskyPriorPipeline`] also comes with a cool utility function that will allow you to interpolate the latent space of different images and texts super easily. Here is an example of how you can create an Impressionist-style portrait for your pet based on "The Starry Night".

|

||||

|

||||

@@ -11,7 +11,22 @@ specific language governing permissions and limitations under the License.

|

||||

|

||||

The Kandinsky 2.2 release includes robust new text-to-image models that support text-to-image generation, image-to-image generation, image interpolation, and text-guided image inpainting. The general workflow to perform these tasks using Kandinsky 2.2 is the same as in Kandinsky 2.1. First, you will need to use a prior pipeline to generate image embeddings based on your text prompt, and then use one of the image decoding pipelines to generate the output image. The only difference is that in Kandinsky 2.2, all of the decoding pipelines no longer accept the `prompt` input, and the image generation process is conditioned with only `image_embeds` and `negative_image_embeds`.

|

||||

|

||||

Let's look at an example of how to perform text-to-image generation using Kandinsky 2.2.

|

||||

As with Kandinsky 2.1, the easiest way to perform text-to-image generation is to use the combined Kandinsky pipeline. The process is identical; you only need to replace the Kandinsky 2.1 checkpoint with the 2.2 one.

|

||||

|

||||

```python
from diffusers import AutoPipelineForText2Image
import torch

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-2-decoder", torch_dtype=torch.float16)
pipe.enable_model_cpu_offload()

prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"
negative_prompt = "low quality, bad quality"

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, height=768, width=768).images[0]
```

|

||||

|

||||

Now, let's look at an example where we take separate steps to run the prior pipeline and text-to-image pipeline. This way, we can understand what's happening under the hood and how Kandinsky 2.2 differs from Kandinsky 2.1.

|

||||

|

||||

First, let's create the prior pipeline and text-to-image pipeline with Kandinsky 2.2 checkpoints.

|

||||

|

||||

|

||||

@@ -34,3 +34,7 @@ Pipelines do not offer any training functionality. You'll notice PyTorch's autog

|

||||

## FlaxDiffusionPipeline

|

||||

|

||||

[[autodoc]] pipelines.pipeline_flax_utils.FlaxDiffusionPipeline

|

||||

|

||||

## PushToHubMixin

|

||||

|

||||

[[autodoc]] utils.PushToHubMixin

|

||||

|

||||

@@ -69,7 +69,7 @@ Next, create the adapter pipeline

|

||||

import torch

|

||||

from diffusers import StableDiffusionAdapterPipeline, T2IAdapter

|

||||

|

||||

adapter = T2IAdapter.from_pretrained("TencentARC/t2iadapter_color_sd14v1")

|

||||

adapter = T2IAdapter.from_pretrained("TencentARC/t2iadapter_color_sd14v1", torch_dtype=torch.float16)

|

||||

pipe = StableDiffusionAdapterPipeline.from_pretrained(

|

||||

"CompVis/stable-diffusion-v1-4",

|

||||

adapter=adapter,

|

||||

|

||||

46

docs/source/en/api/pipelines/stable_diffusion/gligen.md

Normal file

@@ -0,0 +1,46 @@

|

||||

<!--Copyright 2023 The GLIGEN Authors and The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# GLIGEN (Grounded Language-to-Image Generation)

|

||||

|

||||

The GLIGEN model was created by researchers and engineers from [University of Wisconsin-Madison, Columbia University, and Microsoft](https://github.com/gligen/GLIGEN). The [`StableDiffusionGLIGENPipeline`] can generate photorealistic images conditioned on grounding inputs. Along with text and bounding boxes, if input images are given, this pipeline can insert objects described by text at the region defined by bounding boxes. Otherwise, it'll generate an image described by the caption/prompt and insert objects described by text at the region defined by bounding boxes. It's trained on COCO2014D and COCO2014CD datasets, and the model uses a frozen CLIP ViT-L/14 text encoder to condition itself on grounding inputs.

|

||||

|

||||

The abstract from the [paper](https://huggingface.co/papers/2301.07093) is:

|

||||

|

||||

*Large-scale text-to-image diffusion models have made amazing advances. However, the status quo is to use text input alone, which can impede controllability. In this work, we propose GLIGEN, Grounded-Language-to-Image Generation, a novel approach that builds upon and extends the functionality of existing pre-trained text-to-image diffusion models by enabling them to also be conditioned on grounding inputs. To preserve the vast concept knowledge of the pre-trained model, we freeze all of its weights and inject the grounding information into new trainable layers via a gated mechanism. Our model achieves open-world grounded text2img generation with caption and bounding box condition inputs, and the grounding ability generalizes well to novel spatial configurations and concepts. GLIGEN’s zeroshot performance on COCO and LVIS outperforms existing supervised layout-to-image baselines by a large margin.*

|

||||

|

||||

<Tip>

|

||||

|

||||

Make sure to check out the Stable Diffusion [Tips](https://huggingface.co/docs/diffusers/en/api/pipelines/stable_diffusion/overview#tips) section to learn how to explore the tradeoff between scheduler speed and quality and how to reuse pipeline components efficiently!

|

||||

|

||||

If you want to use one of the official checkpoints for a task, explore the [gligen](https://huggingface.co/gligen) Hub organization!

|

||||

|

||||

</Tip>

|

||||

|

||||

This pipeline was contributed by [Nikhil Gajendrakumar](https://github.com/nikhil-masterful).

|

||||

|

||||

## StableDiffusionGLIGENPipeline

|

||||

|

||||

[[autodoc]] StableDiffusionGLIGENPipeline

|

||||

- all

|

||||

- __call__

|

||||

- enable_vae_slicing

|

||||

- disable_vae_slicing

|

||||

- enable_vae_tiling

|

||||

- disable_vae_tiling

|

||||

- enable_model_cpu_offload

|

||||

- prepare_latents

|

||||

- enable_fuser

|

||||

|

||||

## StableDiffusionPipelineOutput

|

||||

|

||||

[[autodoc]] pipelines.stable_diffusion.StableDiffusionPipelineOutput

|

||||

@@ -30,8 +30,8 @@ Make sure to check out the Stable Diffusion [Tips](overview#tips) section to lea

|

||||

- all

|

||||

- __call__

|

||||

|

||||

## StableDiffusionPipelineOutput

|

||||

## LDM3DPipelineOutput

|

||||

|

||||

[[autodoc]] pipelines.stable_diffusion.StableDiffusionPipelineOutput

|

||||

[[autodoc]] pipelines.stable_diffusion.pipeline_stable_diffusion_ldm3d.LDM3DPipelineOutput

|

||||

- all

|

||||

- __call__

|

||||

|

||||

@@ -38,9 +38,25 @@ You can install the libraries as follows:

|

||||

pip install transformers

|

||||

pip install accelerate

|

||||

pip install safetensors

|

||||

```

|

||||

|

||||

### Watermarker

|

||||

|

||||

We recommend adding an invisible watermark to images generated by Stable Diffusion XL; this can help downstream applications identify whether an image is machine-synthesised. To do so, please install

|

||||

the [invisible-watermark library](https://pypi.org/project/invisible-watermark/) via:

|

||||

|

||||

```
pip install "invisible-watermark>=0.2.0"
```

|

||||

|

||||

If the `invisible-watermark` library is installed, the watermarker will be used **by default**.

|

||||

|

||||

If you have other provisions for generating or deploying images safely, you can disable the watermarker as follows:

|

||||

|

||||

```py
pipe = StableDiffusionXLPipeline.from_pretrained(..., add_watermarker=False)
```
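The "used by default when installed" behaviour can be pictured as a small fallback rule: an explicit `add_watermarker` value wins, and otherwise the decision depends on whether the library can be imported. The sketch below is an illustration of that rule, not the library's code. The function names are hypothetical, and it assumes the `invisible-watermark` package is importable under the module name `imwatermark`.

```python
import importlib.util
from typing import Optional


def invisible_watermark_installed() -> bool:
    """Check for the package without importing it.

    Assumption: invisible-watermark is imported as `imwatermark`.
    """
    return importlib.util.find_spec("imwatermark") is not None


def resolve_add_watermarker(add_watermarker: Optional[bool] = None) -> bool:
    """Explicit True/False is respected; None falls back to availability."""
    if add_watermarker is not None:
        return add_watermarker
    return invisible_watermark_installed()


print(resolve_add_watermarker(False))  # explicit opt-out always disables it
print(resolve_add_watermarker())       # depends on the local environment
```

With this rule, passing `add_watermarker=False` is the only way to turn the watermarker off in an environment where the library is installed.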

|

||||

|

||||

### Text-to-Image

|

||||

|

||||

You can use SDXL as follows for *text-to-image*:

|

||||

|

||||

@@ -20,6 +20,12 @@ The abstract from the [paper](https://arxiv.org/abs/2303.06555) is:

|

||||

|

||||

You can find the original codebase at [thu-ml/unidiffuser](https://github.com/thu-ml/unidiffuser) and additional checkpoints at [thu-ml](https://huggingface.co/thu-ml).

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

There is currently an issue on PyTorch 1.X where the output images are all black or the pixel values become `NaNs`. This issue can be mitigated by switching to PyTorch 2.X.

|

||||

|

||||

</Tip>

|

||||

|

||||

This pipeline was contributed by [dg845](https://github.com/dg845). ❤️

|

||||

|

||||

## Usage Examples

|

||||

|

||||

@@ -1,11 +1,15 @@

|

||||

# Consistency Model Multistep Scheduler

|

||||

# CMStochasticIterativeScheduler

|

||||

|

||||

## Overview

|

||||

[Consistency Models](https://huggingface.co/papers/2303.01469) by Yang Song, Prafulla Dhariwal, Mark Chen, and Ilya Sutskever introduced a multistep and onestep scheduler (Algorithm 1) that is capable of generating good samples in one or a small number of steps.

|

||||

|

||||

Multistep and onestep scheduler (Algorithm 1) introduced alongside consistency models in the paper [Consistency Models](https://arxiv.org/abs/2303.01469) by Yang Song, Prafulla Dhariwal, Mark Chen, and Ilya Sutskever.

|

||||

Based on the [original consistency models implementation](https://github.com/openai/consistency_models).

|

||||

Should generate good samples from [`ConsistencyModelPipeline`] in one or a small number of steps.

|

||||

The abstract from the paper is:

|

||||

|

||||

*Diffusion models have made significant breakthroughs in image, audio, and video generation, but they depend on an iterative generation process that causes slow sampling speed and caps their potential for real-time applications. To overcome this limitation, we propose consistency models, a new family of generative models that achieve high sample quality without adversarial training. They support fast one-step generation by design, while still allowing for few-step sampling to trade compute for sample quality. They also support zero-shot data editing, like image inpainting, colorization, and super-resolution, without requiring explicit training on these tasks. Consistency models can be trained either as a way to distill pre-trained diffusion models, or as standalone generative models. Through extensive experiments, we demonstrate that they outperform existing distillation techniques for diffusion models in one- and few-step generation. For example, we achieve the new state-of-the-art FID of 3.55 on CIFAR-10 and 6.20 on ImageNet 64x64 for one-step generation. When trained as standalone generative models, consistency models also outperform single-step, non-adversarial generative models on standard benchmarks like CIFAR-10, ImageNet 64x64 and LSUN 256x256.*

|

||||

|

||||

The original codebase can be found at [openai/consistency_models](https://github.com/openai/consistency_models).

|

||||

|

||||

## CMStochasticIterativeScheduler

|

||||

[[autodoc]] CMStochasticIterativeScheduler

|

||||

|

||||

## CMStochasticIterativeSchedulerOutput

|

||||

[[autodoc]] schedulers.scheduling_consistency_models.CMStochasticIterativeSchedulerOutput

|

||||

@@ -10,13 +10,11 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# Denoising Diffusion Implicit Models (DDIM)

|

||||

# DDIMScheduler

|

||||

|

||||

## Overview

|

||||

[Denoising Diffusion Implicit Models](https://huggingface.co/papers/2010.02502) (DDIM) by Jiaming Song, Chenlin Meng and Stefano Ermon.

|

||||

|

||||

[Denoising Diffusion Implicit Models](https://arxiv.org/abs/2010.02502) (DDIM) by Jiaming Song, Chenlin Meng and Stefano Ermon.

|

||||

|

||||

The abstract of the paper is the following:

|

||||

The abstract from the paper is:

|

||||

|

||||

*Denoising diffusion probabilistic models (DDPMs) have achieved high quality image generation without adversarial training,

|

||||

yet they require simulating a Markov chain for many steps to produce a sample.

|

||||

@@ -26,50 +24,43 @@ We construct a class of non-Markovian diffusion processes that lead to the same

|

||||

We empirically demonstrate that DDIMs can produce high quality samples 10× to 50× faster in terms of wall-clock time compared to DDPMs, allow us to trade off

|

||||

computation for sample quality, and can perform semantically meaningful image interpolation directly in the latent space.*

|

||||

|

||||

The original codebase of this paper can be found here: [ermongroup/ddim](https://github.com/ermongroup/ddim).

|

||||

For questions, feel free to contact the author on [tsong.me](https://tsong.me/).

|

||||

The original codebase of this paper can be found at [ermongroup/ddim](https://github.com/ermongroup/ddim), and you can contact the author on [tsong.me](https://tsong.me/).

|

||||

|

||||

### Experimental: "Common Diffusion Noise Schedules and Sample Steps are Flawed":

|

||||

## Tips

|

||||

|

||||

The paper **[Common Diffusion Noise Schedules and Sample Steps are Flawed](https://arxiv.org/abs/2305.08891)**

|

||||

claims that a mismatch between the training and inference settings leads to suboptimal inference generation results for Stable Diffusion.

|

||||

The paper [Common Diffusion Noise Schedules and Sample Steps are Flawed](https://huggingface.co/papers/2305.08891) claims that a mismatch between the training and inference settings leads to suboptimal inference generation results for Stable Diffusion. To fix this, the authors propose:

|

||||

|

||||

The abstract reads as follows:

|

||||

<Tip warning={true}>

|

||||

|

||||

*We discover that common diffusion noise schedules do not enforce the last timestep to have zero signal-to-noise ratio (SNR),

|

||||

and some implementations of diffusion samplers do not start from the last timestep.

|

||||

Such designs are flawed and do not reflect the fact that the model is given pure Gaussian noise at inference, creating a discrepancy between training and inference.

|

||||

We show that the flawed design causes real problems in existing implementations.

|

||||

In Stable Diffusion, it severely limits the model to only generate images with medium brightness and

|

||||

prevents it from generating very bright and dark samples. We propose a few simple fixes:

|

||||

- (1) rescale the noise schedule to enforce zero terminal SNR;

|

||||

- (2) train the model with v prediction;

|

||||

- (3) change the sampler to always start from the last timestep;

|

||||

- (4) rescale classifier-free guidance to prevent over-exposure.

|

||||

These simple changes ensure the diffusion process is congruent between training and inference and

|

||||

allow the model to generate samples more faithful to the original data distribution.*

|

||||

🧪 This is an experimental feature!

|

||||

|

||||

</Tip>

|

||||

|

||||

1. rescale the noise schedule to enforce zero terminal signal-to-noise ratio (SNR)

|

||||

|

||||

You can apply all of these changes in `diffusers` when using [`DDIMScheduler`]:

|

||||

- (1) rescale the noise schedule to enforce zero terminal SNR;

|

||||

```py

|

||||

pipe.scheduler = DDIMScheduler.from_config(pipe.scheduler.config, rescale_betas_zero_snr=True)

|

||||

```

|

||||

- (2) train the model with v prediction;

|

||||

Continue fine-tuning a checkpoint with [`train_text_to_image.py`](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image.py) or [`train_text_to_image_lora.py`](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image_lora.py)

|

||||

and `--prediction_type="v_prediction"`.

|

||||

- (3) change the sampler to always start from the last timestep;

|

||||

|

||||

2. train a model with `v_prediction` (add the following argument to the [train_text_to_image.py](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image.py) or [train_text_to_image_lora.py](https://github.com/huggingface/diffusers/blob/main/examples/text_to_image/train_text_to_image_lora.py) scripts)

|

||||

|

||||

```bash

|

||||

--prediction_type="v_prediction"

|

||||

```

|

||||

|

||||

3. change the sampler to always start from the last timestep

|

||||

|

||||

```py

|

||||

pipe.scheduler = DDIMScheduler.from_config(pipe.scheduler.config, timestep_spacing="trailing")

|

||||

```

|

||||

- (4) rescale classifier-free guidance to prevent over-exposure.

|

||||

|

||||

4. rescale classifier-free guidance to prevent over-exposure

|

||||

|

||||

```py

|

||||

pipe(..., guidance_rescale=0.7)

|

||||

image = pipeline(prompt, guidance_rescale=0.7).images[0]

|

||||

```
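The first fix in the list above, rescaling the noise schedule so that the terminal signal-to-noise ratio is zero, can be illustrated without `diffusers`. The following is a minimal pure-Python sketch of the rescaling idea described in the paper, not the library's implementation: it shifts the square roots of the cumulative alphas so the last one becomes zero, rescales them so the first one is unchanged, and converts back to betas. The toy schedule is an assumption chosen for illustration.

```python
import math
from typing import List


def rescale_betas_zero_snr(betas: List[float]) -> List[float]:
    """Rescale a beta schedule so the final cumulative alpha (and SNR) is zero."""
    if len(betas) < 2:
        raise ValueError("need at least two timesteps to rescale")

    # sqrt of the cumulative product of alphas, where alpha_t = 1 - beta_t
    alphas_bar_sqrt = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alphas_bar_sqrt.append(math.sqrt(running))

    first, last = alphas_bar_sqrt[0], alphas_bar_sqrt[-1]

    # shift so the last value is zero, then scale so the first value is preserved
    shifted = [(v - last) * first / (first - last) for v in alphas_bar_sqrt]

    # convert back: cumulative alphas -> per-step alphas -> betas
    alphas_bar = [v * v for v in shifted]
    alphas = [alphas_bar[0]] + [
        alphas_bar[i] / alphas_bar[i - 1] for i in range(1, len(alphas_bar))
    ]
    return [1.0 - a for a in alphas]


# Toy linear schedule with 10 steps (illustrative values only).
betas = [0.0001 + (0.02 - 0.0001) * i / 9 for i in range(10)]
rescaled = rescale_betas_zero_snr(betas)
print(rescaled[-1])  # the final beta is 1.0, so no signal remains at the last step
```

A final beta of 1 means the last timestep is pure noise, which matches what the model is actually given at the start of inference.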

|

||||

|

||||

An example is to use [this checkpoint](https://huggingface.co/ptx0/pseudo-journey-v2)

|

||||

which has been fine-tuned using the `"v_prediction"`.

|

||||

|

||||

The checkpoint can then be run in inference as follows:

|

||||

For example:

|

||||

|

||||

```py

|

||||

from diffusers import DiffusionPipeline, DDIMScheduler

|

||||

@@ -86,3 +77,6 @@ image = pipeline(prompt, guidance_rescale=0.7).images[0]

|

||||

|

||||

## DDIMScheduler

|

||||

[[autodoc]] DDIMScheduler

|

||||

|

||||

## DDIMSchedulerOutput

|

||||

[[autodoc]] schedulers.scheduling_ddim.DDIMSchedulerOutput

|

||||

|

||||

@@ -10,12 +10,10 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

|

||||