Mirror of https://github.com/huggingface/diffusers.git (synced 2025-12-07 13:04:15 +08:00)

Compare commits: migrate-lo...test-clean (181 commits)


.github/workflows/nightly_tests.yml (vendored, 2 lines changed)

@@ -248,7 +248,7 @@ jobs:
   BIG_GPU_MEMORY: 40
 run: |
   python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \
-    -m "big_gpu_with_torch_cuda" \
+    -m "big_accelerator" \
     --make-reports=tests_big_gpu_torch_cuda \
     --report-log=tests_big_gpu_torch_cuda.log \
     tests/

@@ -93,6 +93,16 @@
   - local: hybrid_inference/api_reference
     title: API Reference
   title: Hybrid Inference
+- sections:
+  - local: modular_diffusers/getting_started
+    title: Getting Started
+  - local: modular_diffusers/components_manager
+    title: Components Manager
+  - local: modular_diffusers/write_own_pipeline_block
+    title: Write your own pipeline block
+  - local: modular_diffusers/end_to_end_guide
+    title: End-to-End Developer Guide
+  title: Modular Diffusers
 - sections:
   - local: using-diffusers/consisid
     title: ConsisID

@@ -28,3 +28,9 @@ Cache methods speedup diffusion transformers by storing and reusing intermediate
 [[autodoc]] FasterCacheConfig

 [[autodoc]] apply_faster_cache
+
+### FirstBlockCacheConfig
+
+[[autodoc]] FirstBlockCacheConfig
+
+[[autodoc]] apply_first_block_cache

docs/source/en/modular_diffusers/components_manager.md (normal file, 510 lines added)

@@ -0,0 +1,510 @@

<!--Copyright 2025 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# Components Manager

<Tip warning={true}>

🧪 **Experimental Feature**: This is an experimental feature we are actively developing. The API may be subject to breaking changes.

</Tip>

The Components Manager is a central model registry and management system in Diffusers. It lets you add models and then reuse them across multiple pipelines and workflows. It tracks all models in one place, along with useful metadata such as model size, device placement, and loaded adapters (LoRA, IP-Adapter). It also has mechanisms to detect duplicate model instances, which enables memory-efficient sharing. Most significantly, it offers offloading that works across pipelines. Regular `DiffusionPipeline` offloading is limited to a single pipeline with a predefined sequence, whereas the Components Manager automatically manages your device memory across all your models and workflows.

## Basic Operations

Let's start with the fundamental operations. First, create a Components Manager:

```py
from diffusers import ComponentsManager

comp = ComponentsManager()
```

Use the `add(name, component)` method to register a component. It returns a unique ID that combines the component name with the object's unique identifier (using Python's `id()` function):

```py
from diffusers import AutoModel

text_encoder = AutoModel.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder")
# Returns component_id like 'text_encoder_139917733042864'
component_id = comp.add("text_encoder", text_encoder)
```
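
The ID format can be mimicked in plain Python. This minimal sketch assumes, based only on the example output above, that the ID is the registration name and the `id()` value joined by an underscore. `make_component_id` and `DummyModel` are hypothetical names used for illustration, not diffusers APIs.

```py
class DummyModel:
    """Hypothetical stand-in for a loaded model."""


def make_component_id(name: str, component: object) -> str:
    # Hypothetical helper: join the registration name with the object's id()
    return f"{name}_{id(component)}"


model = DummyModel()
component_id = make_component_id("text_encoder", model)
print(component_id)  # e.g. 'text_encoder_139917733042864'; the number differs per run
```

Python only guarantees that `id()` is unique among objects that are alive at the same time, so the numeric suffix changes between sessions. Look IDs up rather than hard-coding them.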

You can view all registered components and their metadata:

```py
>>> comp
Components:
===============================================================================================================================================
Models:
-----------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
-----------------------------------------------------------------------------------------------------------------------------------------------
text_encoder_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A
-----------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```

You can also remove a component by passing its unique ID to `remove()`:

```py
comp.remove("text_encoder_139917733042864")
```

## Duplicate Detection

The Components Manager automatically detects duplicate model instances and warns you about them, which helps save memory and avoid confusion. Let's walk through how this works in practice.

When you try to add the same object twice, the manager warns you and returns the existing ID:

```py
>>> comp.add("text_encoder", text_encoder)
'text_encoder_139917733042864'
>>> comp.add("text_encoder", text_encoder)
ComponentsManager: component 'text_encoder' already exists as 'text_encoder_139917733042864'
'text_encoder_139917733042864'
```

Even if you add the same object under a different name, it is still detected as a duplicate:

```py
>>> comp.add("clip", text_encoder)
ComponentsManager: adding component 'clip' as 'clip_139917733042864', but it is duplicate of 'text_encoder_139917733042864'
To remove a duplicate, call `components_manager.remove('<component_id>')`.
'clip_139917733042864'
```
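
The two identity checks above can be modeled with a small registry. This is a simplified, hypothetical sketch of the behavior described in this section, not the diffusers implementation. `ToyComponentsManager` is a made-up class, and it only compares objects by identity (`is`).

```py
import warnings


class ToyComponentsManager:
    """Hypothetical registry illustrating identity-based duplicate checks."""

    def __init__(self):
        self.components = {}  # component_id -> object

    def add(self, name, component):
        component_id = f"{name}_{id(component)}"
        # Same name and same object: keep the existing entry and return its ID
        if component_id in self.components:
            warnings.warn(f"component '{name}' already exists as '{component_id}'")
            return component_id
        # Same object under a different name: register it, but warn about the duplicate
        duplicates = [cid for cid, obj in self.components.items() if obj is component]
        if duplicates:
            warnings.warn(f"adding component '{name}' as '{component_id}', but it is duplicate of '{duplicates[0]}'")
        self.components[component_id] = component
        return component_id

    def remove(self, component_id):
        del self.components[component_id]


toy = ToyComponentsManager()
encoder = object()
first_id = toy.add("text_encoder", encoder)
same_id = toy.add("text_encoder", encoder)  # warns and returns the existing ID
clip_id = toy.add("clip", encoder)  # warns, but is still registered
```

Identity checks like these cannot tell that two separately loaded objects hold the same weights. That gap is what the next part of this section addresses.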

However, there's a more subtle case where duplicate detection becomes tricky. When you load the same model into different objects, the manager can't detect duplicates unless you use `ComponentSpec`. For example:

```py
>>> text_encoder_2 = AutoModel.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder")
>>> comp.add("text_encoder", text_encoder_2)
'text_encoder_139917732983664'
```

This creates a problem. You now have two copies of the same model, consuming twice the memory:

```py
>>> comp
Components:
===============================================================================================================================================
Models:
-----------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
-----------------------------------------------------------------------------------------------------------------------------------------------
text_encoder_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A
clip_139917733042864 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A
text_encoder_139917732983664 | CLIPTextModel | cpu | torch.float32 | 0.46 | N/A | N/A
-----------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```

We recommend using `ComponentSpec` to load your models. Models loaded with `ComponentSpec` are tagged with a unique ID that encodes their loading parameters. This lets the Components Manager detect when different objects represent the same underlying checkpoint:

```py
from diffusers import AutoModel, ComponentSpec, ComponentsManager
from transformers import CLIPTextModel

comp = ComponentsManager()

# Create a ComponentSpec for the first text encoder
spec = ComponentSpec(name="text_encoder", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder", type_hint=AutoModel)
# Create a ComponentSpec for a duplicate text encoder (same checkpoint: same repo and subfolder)
spec_duplicated = ComponentSpec(name="text_encoder_duplicated", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="text_encoder", type_hint=CLIPTextModel)

# Load and add both components; the manager detects that they are the same model
comp.add("text_encoder", spec.load())
comp.add("text_encoder_duplicated", spec_duplicated.load())
```

Now the manager detects the duplicate and warns you:

```out
ComponentsManager: adding component 'text_encoder_duplicated_139917580682672', but it has duplicate load_id 'stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null' with existing components: text_encoder_139918506246832. To remove a duplicate, call `components_manager.remove('<component_id>')`.
'text_encoder_duplicated_139917580682672'
```

Both models now show the same `load_id`, making it clear they're the same model:

```py
>>> comp
Components:
======================================================================================================================================================================================================
Models:
------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
text_encoder_139918506246832 | CLIPTextModel | cpu | torch.float32 | 0.46 | stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null | N/A
text_encoder_duplicated_139917580682672 | CLIPTextModel | cpu | torch.float32 | 0.46 | stabilityai/stable-diffusion-xl-base-1.0|text_encoder|null|null | N/A
------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```
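
Here is a plain-Python sketch of how `load_id`-based duplicate detection could work. It assumes, based only on the IDs printed in this guide, that a `load_id` joins four loading arguments with `|` and writes missing values as `null`. Treating the four fields as repo, subfolder, variant and revision is our assumption. `make_load_id` and `find_duplicates` are hypothetical helpers, not diffusers APIs.

```py
from collections import defaultdict


def make_load_id(repo, subfolder=None, variant=None, revision=None):
    # Hypothetical: join the loading arguments with "|" and spell missing values as "null"
    parts = [repo, subfolder, variant, revision]
    return "|".join("null" if part is None else part for part in parts)


def find_duplicates(load_ids):
    # Group component IDs by load_id; any group with more than one entry is a duplicate
    groups = defaultdict(list)
    for component_id, load_id in load_ids.items():
        groups[load_id].append(component_id)
    return {load_id: ids for load_id, ids in groups.items() if len(ids) > 1}


registered = {
    "text_encoder_1": make_load_id("stabilityai/stable-diffusion-xl-base-1.0", "text_encoder"),
    "text_encoder_duplicated_2": make_load_id("stabilityai/stable-diffusion-xl-base-1.0", "text_encoder"),
    "unet_3": make_load_id("RunDiffusion/Juggernaut-XL-v9", "unet", variant="fp16"),
}
print(find_duplicates(registered))
```

Because the key depends only on the loading arguments, two separately loaded objects end up in the same group even though their `id()` values differ.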

## Collections

Collections are labels you can assign to components for better organization and management. To add a component to a collection, pass the `collection` parameter when you register it: `add(name, component, collection=...)`. Within each collection, only one component per name is allowed. If you add a second component with the same name, the first one is automatically removed.

Here's how collections work in practice:

```py
from diffusers import AutoModel, ComponentSpec, ComponentsManager

comp = ComponentsManager()
# Create a ComponentSpec for the first UNet (SDXL base)
spec = ComponentSpec(name="unet", repo="stabilityai/stable-diffusion-xl-base-1.0", subfolder="unet", type_hint=AutoModel)
# Create a ComponentSpec for a different UNet (Juggernaut-XL)
spec2 = ComponentSpec(name="unet", repo="RunDiffusion/Juggernaut-XL-v9", subfolder="unet", type_hint=AutoModel, variant="fp16")

# Add both UNets to the same collection; the second one replaces the first
comp.add("unet", spec.load(), collection="sdxl")
comp.add("unet", spec2.load(), collection="sdxl")
```

The manager automatically removes the old UNet and adds the new one:

```out
ComponentsManager: removing existing unet from collection 'sdxl': unet_139917723891888
'unet_139917723893136'
```

Only one UNet remains in the collection:

```py
>>> comp
Components:
====================================================================================================================================================================
Models:
--------------------------------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
--------------------------------------------------------------------------------------------------------------------------------------------------------------------
unet_139917723893136 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | RunDiffusion/Juggernaut-XL-v9|unet|fp16|null | sdxl
--------------------------------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```

Collections are useful in node-based systems, for example. You can tag all models loaded by one node with the same collection label, automatically replace a model when the user loads a new checkpoint under the same name, and batch delete all models in a collection when the node is removed.
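
The one-component-per-name rule can be modeled with a small registry keyed by `(collection, name)`. This is a simplified, hypothetical sketch of the behavior described above, not the diffusers implementation. `ToyCollectionRegistry` and its `remove_collection` method are made-up names, and the real manager's batch-delete API may differ. A component is only dropped once no collection refers to it any more, which matches the shared-component behavior shown later in this guide.

```py
class ToyCollectionRegistry:
    """Hypothetical registry: at most one component per (collection, name) pair."""

    def __init__(self):
        self.components = {}  # component_id -> object
        self.collections = {}  # (collection, name) -> component_id

    def _drop_if_unused(self, component_id):
        # Only forget a component once no collection refers to it any more
        if component_id not in self.collections.values():
            self.components.pop(component_id, None)

    def add(self, name, component, collection=None):
        component_id = f"{name}_{id(component)}"
        self.components[component_id] = component
        if collection is not None:
            key = (collection, name)
            old_id = self.collections.get(key)
            self.collections[key] = component_id
            if old_id is not None and old_id != component_id:
                print(f"removing existing {name} from collection '{collection}': {old_id}")
                self._drop_if_unused(old_id)
        return component_id

    def remove_collection(self, collection):
        # Batch-delete every entry registered under one collection label
        for key in [k for k in self.collections if k[0] == collection]:
            self._drop_if_unused(self.collections.pop(key))


registry = ToyCollectionRegistry()
base_unet, juggernaut_unet = object(), object()
registry.add("unet", base_unet, collection="sdxl")
new_id = registry.add("unet", juggernaut_unet, collection="sdxl")  # replaces the base UNet
```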

## Retrieving Components

The Components Manager provides several methods to retrieve registered components.

The `get_one()` method returns a single component and supports pattern matching for the `name` parameter. You can use:

- exact matches like `comp.get_one(name="unet")`
- wildcards like `comp.get_one(name="unet*")` for components starting with "unet"
- exclusion patterns like `comp.get_one(name="!unet")` to exclude components named "unet"
- OR patterns like `comp.get_one(name="unet|vae")` to match either "unet" or "vae"

You can also filter by collection with `comp.get_one(name="unet", collection="sdxl")` or by `load_id`. If multiple components match, `get_one()` raises an error.
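
Here is one way such name patterns could be interpreted, shown as a hypothetical sketch built on Python's `fnmatch` module. The precedence (split on `|` first, then apply a leading `!`) and the choice to raise when there are zero matches are our assumptions. The guide only states that multiple matches raise an error. `name_matches` and `get_one` here are illustrative functions, not the diffusers methods.

```py
from fnmatch import fnmatchcase


def name_matches(name, pattern):
    # "a|b": match if any alternative matches
    if "|" in pattern:
        return any(name_matches(name, part) for part in pattern.split("|"))
    # "!a": match every name that does not match "a"
    if pattern.startswith("!"):
        return not name_matches(name, pattern[1:])
    # Otherwise use shell-style wildcards, so "unet*" matches "unet" and "unet_2"
    return fnmatchcase(name, pattern)


def get_one(registered, name):
    # Exactly one match is required; zero or several matches raise
    matches = [key for key in registered if name_matches(key, name)]
    if len(matches) != 1:
        raise ValueError(f"expected exactly one component matching {name!r}, found {matches}")
    return registered[matches[0]]


registered = {"unet": "UNet", "vae": "VAE", "text_encoder": "CLIP"}
print(get_one(registered, "unet*"))  # UNet
print(get_one(registered, "text_*"))  # CLIP
```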

Another useful method is `get_components_by_names()`, which takes a list of names and returns a dictionary mapping names to components. This is particularly helpful with modular pipelines since they provide lists of required component names, and the returned dictionary can be directly passed to `pipeline.update_components()`.

```py
# Get components by name list
component_dict = comp.get_components_by_names(names=["text_encoder", "unet", "vae"])
# Returns: {"text_encoder": component1, "unet": component2, "vae": component3}
```
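
The returned dictionary can be unpacked with `**` because its keys match the keyword arguments that `update_components()` accepts. The sketch below shows that hand-off with hypothetical stand-ins. `get_components_by_names` here is a plain function and `ToyPipeline` is a made-up class. Raising `KeyError` for a missing name is our assumption, not documented diffusers behavior.

```py
def get_components_by_names(registered, names):
    # Hypothetical: map each requested name to its registered object (KeyError if missing)
    return {name: registered[name] for name in names}


class ToyPipeline:
    """Hypothetical pipeline whose update_components takes keyword arguments."""

    def __init__(self):
        self.text_encoder = None
        self.unet = None
        self.vae = None

    def update_components(self, **kwargs):
        # Each keyword argument fills the attribute of the same name
        for name, component in kwargs.items():
            setattr(self, name, component)


registered = {"text_encoder": "CLIP", "unet": "UNet", "vae": "VAE"}
pipe = ToyPipeline()
component_dict = get_components_by_names(registered, ["text_encoder", "unet", "vae"])
pipe.update_components(**component_dict)  # the dict keys become keyword arguments
```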

## Using Components Manager with Modular Pipelines

The Components Manager integrates seamlessly with Modular Pipelines. All you need to do is pass a Components Manager instance to `from_pretrained()` or `init_pipeline()`, with an optional `collection` parameter:

```py
from diffusers import ModularPipeline, ComponentsManager

comp = ComponentsManager()
pipe = ModularPipeline.from_pretrained("YiYiXu/modular-demo-auto", components_manager=comp, collection="test1")
```

By default, modular pipelines don't load components immediately, so both the pipeline and the Components Manager start empty:

```py
>>> comp
Components:
==================================================
No components registered.
==================================================
```

When you load components on the pipeline, they are automatically registered in the Components Manager:

```py
>>> pipe.load_components(names="unet")
>>> comp
Components:
==============================================================================================================================================================
Models:
--------------------------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
--------------------------------------------------------------------------------------------------------------------------------------------------------------
unet_139917726686304 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | SG161222/RealVisXL_V4.0|unet|null|null | test1
--------------------------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```

Now let's load all default components and then create a second pipeline that reuses all components from the first one. We pass the same Components Manager to the second pipeline, but with a different collection:

```py
# Load all default components
>>> pipe.load_default_components()

# Create a second pipeline using the same Components Manager but with a different collection
>>> pipe2 = ModularPipeline.from_pretrained("YiYiXu/modular-demo-auto", components_manager=comp, collection="test2")
```

`ModularPipeline` has a `null_component_names` property that returns the list of component names it still needs to load. You can pass this list directly to the `get_components_by_names()` method of the Components Manager:

```py
# Get the list of components that pipe2 needs to load
>>> pipe2.null_component_names
['text_encoder', 'text_encoder_2', 'tokenizer', 'tokenizer_2', 'image_encoder', 'unet', 'vae', 'scheduler', 'controlnet']

# Retrieve all required components from the Components Manager
>>> comp_dict = comp.get_components_by_names(names=pipe2.null_component_names)

# Update the pipeline with the retrieved components
>>> pipe2.update_components(**comp_dict)
```

The warnings that follow are expected. They show that the Components Manager correctly recognizes these components as already registered and reuses them instead of creating duplicates:

```out
ComponentsManager: component 'text_encoder' already exists as 'text_encoder_139917586016400'
ComponentsManager: component 'text_encoder_2' already exists as 'text_encoder_2_139917699973424'
ComponentsManager: component 'tokenizer' already exists as 'tokenizer_139917580599504'
ComponentsManager: component 'tokenizer_2' already exists as 'tokenizer_2_139915763443904'
ComponentsManager: component 'image_encoder' already exists as 'image_encoder_139917722468304'
ComponentsManager: component 'unet' already exists as 'unet_139917580609632'
ComponentsManager: component 'vae' already exists as 'vae_139917722459040'
ComponentsManager: component 'scheduler' already exists as 'scheduler_139916266559408'
ComponentsManager: component 'controlnet' already exists as 'controlnet_139917722454432'
```

The pipeline is now fully loaded:

```py
# null_component_names returns an empty list, meaning every component is loaded
>>> pipe2.null_component_names
[]
```
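
The `null_component_names` workflow can be modeled with a toy class. This hypothetical sketch assumes the property simply lists expected component slots that are still `None`. `ToyModularPipeline` and its `expected_components` attribute are made up for illustration and do not reflect how `ModularPipeline` stores its components.

```py
class ToyModularPipeline:
    """Hypothetical stand-in that tracks which expected components are still unset."""

    expected_components = ["text_encoder", "unet", "vae", "scheduler"]

    def __init__(self):
        for name in self.expected_components:
            setattr(self, name, None)

    @property
    def null_component_names(self):
        # Names whose slot is still empty, in declaration order
        return [name for name in self.expected_components if getattr(self, name) is None]

    def update_components(self, **kwargs):
        for name, component in kwargs.items():
            setattr(self, name, component)


shared = {"text_encoder": object(), "unet": object(), "vae": object(), "scheduler": object()}
pipe1, pipe2 = ToyModularPipeline(), ToyModularPipeline()
pipe1.update_components(**shared)
# Fill only the slots pipe2 is missing, reusing the very same objects as pipe1
pipe2.update_components(**{name: shared[name] for name in pipe2.null_component_names})
print(pipe2.null_component_names)  # []
```

Both toy pipelines end up pointing at the same objects, so no extra memory is used for the second pipeline.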

No new components were added to the Components Manager; everything is reused. All models are now associated with both the `test1` and `test2` collections, showing that these components are shared across multiple pipelines:

```py
>>> comp
Components:
========================================================================================================================================================================================
Models:
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
Name_ID | Class | Device: act(exec) | Dtype | Size (GB) | Load ID | Collection
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
text_encoder_139917586016400 | CLIPTextModel | cpu | torch.float32 | 0.46 | SG161222/RealVisXL_V4.0|text_encoder|null|null | test1
| | | | | | test2
text_encoder_2_139917699973424 | CLIPTextModelWithProjection | cpu | torch.float32 | 2.59 | SG161222/RealVisXL_V4.0|text_encoder_2|null|null | test1
| | | | | | test2
unet_139917580609632 | UNet2DConditionModel | cpu | torch.float32 | 9.56 | SG161222/RealVisXL_V4.0|unet|null|null | test1
| | | | | | test2
controlnet_139917722454432 | ControlNetModel | cpu | torch.float32 | 4.66 | diffusers/controlnet-canny-sdxl-1.0|null|null|null | test1
| | | | | | test2
vae_139917722459040 | AutoencoderKL | cpu | torch.float32 | 0.31 | SG161222/RealVisXL_V4.0|vae|null|null | test1
| | | | | | test2
image_encoder_139917722468304 | CLIPVisionModelWithProjection | cpu | torch.float32 | 6.87 | h94/IP-Adapter|sdxl_models/image_encoder|null|null | test1
| | | | | | test2
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Other Components:
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
ID | Class | Collection
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
tokenizer_139917580599504 | CLIPTokenizer | test1
| | test2
scheduler_139916266559408 | EulerDiscreteScheduler | test1
| | test2
tokenizer_2_139915763443904 | CLIPTokenizer | test1
| | test2
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Additional Component Info:
==================================================
```

## Automatic Memory Management

The Components Manager provides a global offloading strategy across all models, regardless of which pipeline is using them:

```py
comp.enable_auto_cpu_offload(device="cuda")
```

When enabled, all models start on the CPU. The manager moves a model to the device right before it is used, and moves other models back to the CPU when GPU memory runs low. You can set your own rules for which models to offload first. Offloading keeps working as you add or remove components, so once it's on, you don't need to worry about device placement and can focus on your workflow.
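
To build intuition for "move on use, evict when memory runs low", here is a toy least-recently-used policy with a fixed memory budget. It is a deliberately simplified, hypothetical illustration. It is not the strategy diffusers implements, and it does not touch real devices. `ToyOffloader` is a made-up class, and the sizes are taken from the tables above.

```py
from collections import OrderedDict


class ToyOffloader:
    """Hypothetical LRU offload policy with a fixed device memory budget (in GB)."""

    def __init__(self, sizes_gb, budget_gb):
        self.sizes_gb = sizes_gb
        self.budget_gb = budget_gb
        self.on_device = OrderedDict()  # model name -> size, least recently used first

    def used_gb(self):
        return sum(self.on_device.values())

    def prepare(self, name):
        # Called right before a model runs: put it on the device, evicting LRU models if needed
        if name in self.on_device:
            self.on_device.move_to_end(name)
            return []
        size = self.sizes_gb[name]
        if size > self.budget_gb:
            raise MemoryError(f"{name} ({size} GB) exceeds the {self.budget_gb} GB budget")
        evicted = []
        while self.used_gb() + size > self.budget_gb:
            evicted_name, _ = self.on_device.popitem(last=False)
            evicted.append(evicted_name)  # this model would go back to the CPU
        self.on_device[name] = size
        return evicted


sizes = {"text_encoder": 0.46, "text_encoder_2": 2.59, "unet": 9.56, "vae": 0.31}
offloader = ToyOffloader(sizes, budget_gb=12.0)
for model in ["text_encoder", "text_encoder_2", "unet", "vae"]:
    print(model, "evicts", offloader.prepare(model))
```

With a 12 GB budget, preparing the UNet pushes both text encoders back to the CPU, and the VAE then fits alongside the UNet.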

## Practical Example: Building Modular Workflows with Component Reuse

Now that we've covered the basics of the Components Manager, let's walk through a practical example. It shows how to build workflows in a modular setting and use the Components Manager to reuse components across multiple pipelines. This example demonstrates the true power of Modular Diffusers by working with multiple pipelines that share components.

In this example, we'll generate latents with a text-to-image pipeline, then refine them with an image-to-image pipeline. We'll also use a LoRA and an IP-Adapter.

Let's create a modular text-to-image workflow by splitting it into three parts: `text_blocks` for encoding prompts, `t2i_blocks` for generating latents, and `decoder_blocks` for creating final images.

```py
import torch
from diffusers.modular_pipelines import SequentialPipelineBlocks
from diffusers.modular_pipelines.stable_diffusion_xl import ALL_BLOCKS

# Create modular blocks and separate text encoding and decoding steps
t2i_blocks = SequentialPipelineBlocks.from_blocks_dict(ALL_BLOCKS["text2img"])
text_blocks = t2i_blocks.sub_blocks.pop("text_encoder")
decoder_blocks = t2i_blocks.sub_blocks.pop("decode")
```
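
The `pop()` calls above work like removing entries from an ordered mapping. Each removed step becomes a standalone piece, and the remaining steps keep their order. This sketch assumes `sub_blocks` behaves like an `OrderedDict` of step name to block. Only `"text_encoder"` and `"decode"` come from the example. The `"input"` and `"denoise"` step names and the string values are hypothetical placeholders.

```py
from collections import OrderedDict

# Hypothetical step names and placeholder values; only "text_encoder" and "decode" appear above
sub_blocks = OrderedDict(
    [
        ("text_encoder", "encode prompts"),
        ("input", "prepare inputs"),
        ("denoise", "denoise latents"),
        ("decode", "decode latents"),
    ]
)
text_step = sub_blocks.pop("text_encoder")  # becomes its own piece
decode_step = sub_blocks.pop("decode")  # becomes its own piece
print(list(sub_blocks))  # ['input', 'denoise']
```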

Next, convert them into runnable pipelines, set up the Components Manager with auto offloading, and organize the components under a "t2i" collection:

```py
from diffusers import ComponentsManager, ModularPipeline

# Set up Components Manager with auto offloading
components = ComponentsManager()
components.enable_auto_cpu_offload(device="cuda")

# Create pipelines and load components
t2i_repo = "YiYiXu/modular-demo-auto"
t2i_loader_pipe = ModularPipeline.from_pretrained(t2i_repo, components_manager=components, collection="t2i")

text_node = text_blocks.init_pipeline(t2i_repo, components_manager=components)
decoder_node = decoder_blocks.init_pipeline(t2i_repo, components_manager=components)
t2i_pipe = t2i_blocks.init_pipeline(t2i_repo, components_manager=components)
```

Load all components into the Components Manager under the "t2i" collection:

```py
# Load all components (including IP-Adapter and ControlNet for later use)
t2i_loader_pipe.load_components(names=t2i_loader_pipe.pretrained_component_names, torch_dtype=torch.float16)
```

Now distribute the loaded components to each pipeline:

```py
# Get VAE for decoder (using get_one since there's only one)
vae = components.get_one(load_id="SG161222/RealVisXL_V4.0|vae|null|null")
decoder_node.update_components(vae=vae)

# Get text components for text node (using get_components_by_names for multiple components)
text_components = components.get_components_by_names(text_node.null_component_names)
text_node.update_components(**text_components)

# Get remaining components for t2i pipeline
t2i_components = components.get_components_by_names(t2i_pipe.null_component_names)
t2i_pipe.update_components(**t2i_components)
```

Now we can generate images using our modular workflow:

```py
# Generate text embeddings
prompt = "an astronaut"
text_embeddings = text_node(prompt=prompt, output=["prompt_embeds", "negative_prompt_embeds", "pooled_prompt_embeds", "negative_pooled_prompt_embeds"])

# Generate latents and decode to image
generator = torch.Generator(device="cuda").manual_seed(0)
latents_t2i = t2i_pipe(**text_embeddings, num_inference_steps=25, generator=generator, output="latents")
image = decoder_node(latents=latents_t2i, output="images")[0]
image.save("modular_part2_t2i.png")
```

Let's add a LoRA:

```py
>>> # Load LoRA weights (only the UNet gets the adapter)
>>> t2i_loader_pipe.load_lora_weights("CiroN2022/toy-face", weight_name="toy_face_sdxl.safetensors", adapter_name="toy_face")
>>> components
Components:
============================================================================================================================================================
...
Additional Component Info:
==================================================

unet:
  Adapters: ['toy_face']
```

The Components Manager tracks adapter metadata for every model it manages. In our case, only the UNet has a LoRA loaded; the text encoders are unchanged, so we can reuse the existing text embeddings.

```py
# Generate with LoRA (reusing existing text embeddings)
generator = torch.Generator(device="cuda").manual_seed(0)
latents_lora = t2i_pipe(**text_embeddings, num_inference_steps=25, generator=generator, output="latents")
image = decoder_node(latents=latents_lora, output="images")[0]
image.save("modular_part2_lora.png")
```

Now let's create a refiner pipeline that reuses components from our text-to-image workflow:

```py
# Create refiner blocks (removing image_encoder and decode since we work with latents)
refiner_blocks = SequentialPipelineBlocks.from_blocks_dict(ALL_BLOCKS["img2img"])
refiner_blocks.sub_blocks.pop("image_encoder")
refiner_blocks.sub_blocks.pop("decode")

# Create refiner pipeline with different repo and collection
refiner_repo = "YiYiXu/modular_refiner"
refiner_pipe = refiner_blocks.init_pipeline(refiner_repo, components_manager=components, collection="refiner")
```

We pass the **same Components Manager** (`components`) to the refiner pipeline, but with a **different collection** (`"refiner"`). This allows the refiner to access and reuse components from the "t2i" collection while organizing its own components (like the refiner UNet) under the "refiner" collection.

```py
# Load only the refiner UNet (different from t2i UNet)
refiner_pipe.load_components(names="unet", torch_dtype=torch.float16)

# Reuse components from t2i pipeline using pattern matching
reuse_components = components.search_components("text_encoder_2|scheduler|vae|tokenizer_2")
refiner_pipe.update_components(**reuse_components)
```

When we reuse components from the "t2i" collection, they are automatically added to the "refiner" collection as well. You can verify this by inspecting the Components Manager: components such as `vae` and `scheduler` are listed under both collections, indicating that they are shared between the two workflows.
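To make the collection bookkeeping concrete, here is a toy model in plain Python. It is not the `ComponentsManager` implementation, and the class and method names are hypothetical: each component object gets a single entry, and reusing it in another collection only adds a collection tag instead of loading a second copy.

```python
class ToyComponentRegistry:
    """Hypothetical registry: one entry per component object, tagged with collections."""

    def __init__(self):
        self._entries = {}  # id(obj) -> {"name": str, "obj": object, "collections": set}

    def add(self, name, obj, collection):
        # Reuse the existing entry if this exact object is already registered
        entry = self._entries.setdefault(id(obj), {"name": name, "obj": obj, "collections": set()})
        entry["collections"].add(collection)

    def collections_of(self, name):
        return sorted(c for e in self._entries.values() if e["name"] == name for c in e["collections"])

    def num_objects(self):
        return len(self._entries)


registry = ToyComponentRegistry()
vae, t2i_unet, refiner_unet = object(), object(), object()
registry.add("vae", vae, "t2i")
registry.add("unet", t2i_unet, "t2i")
registry.add("unet", refiner_unet, "refiner")  # loaded separately for the refiner
registry.add("vae", vae, "refiner")            # reused: same object, extra tag

print(registry.collections_of("vae"))  # ['refiner', 't2i']
print(registry.num_objects())          # 3
```

Only three objects exist even though both collections list a `vae`; the shared entry is what makes reuse cheap in memory.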

Now we can refine any of our generated latents:

```py
# Refine both sets of latents: the base text-to-image result and the LoRA result
refined_latents = refiner_pipe(image_latents=latents_t2i, prompt=prompt, num_inference_steps=10, output="latents")
refined_image = decoder_node(latents=refined_latents, output="images")[0]
refined_image.save("modular_part2_t2i_refine_out.png")

refined_latents = refiner_pipe(image_latents=latents_lora, prompt=prompt, num_inference_steps=10, output="latents")
refined_image = decoder_node(latents=refined_latents, output="images")[0]
refined_image.save("modular_part2_lora_refine_out.png")
```

Here are the results from our modular pipeline examples.

#### Base Text-to-Image Generation

| Base Text-to-Image | Base Text-to-Image (Refined) |
|-------------------|------------------------------|
|  |  |

#### LoRA

| LoRA | LoRA (Refined) |
|-------------------|------------------------------|
|  |  |

docs/source/en/modular_diffusers/end_to_end_guide.md

<!--Copyright 2025 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on
an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the
specific language governing permissions and limitations under the License.
-->

# End-to-End Developer Guide: Building with Modular Diffusers

<Tip warning={true}>

🧪 **Experimental Feature**: Modular Diffusers is an experimental feature we are actively developing. The API may be subject to breaking changes.

</Tip>

In this tutorial, we will walk through the process of adding a new pipeline to the modular framework, using differential diffusion as our example. We'll cover the complete workflow from implementation to deployment: implementing the new pipeline, ensuring compatibility with existing tools, sharing the code on the Hugging Face Hub, and deploying it as a UI node.

We'll also demonstrate the 4-step process we use to implement new basic pipelines in the modular system:

1. **Start with an existing pipeline as a base**
   - Identify which existing pipeline is most similar to the one you want to implement
   - Determine which parts of the pipeline need modification

2. **Build a working pipeline structure first**
   - Assemble the complete pipeline structure
   - Use existing blocks wherever possible
   - For new blocks, create placeholders (e.g. copy a similar block and change its name) without implementing custom logic just yet

3. **Set up an example**
   - Create a simple inference script with expected inputs/outputs

4. **Implement your custom logic and test incrementally**
   - Add the custom logic to the blocks you want to change
   - Test incrementally, inspecting pipeline states and debugging as needed

Let's see how this works with the Differential Diffusion example.

## Differential Diffusion Pipeline

### Start with an existing pipeline

Differential diffusion (https://differential-diffusion.github.io/) is an image-to-image workflow, so it makes sense to start with the preset of pipeline blocks used to build the img2img pipeline (`IMAGE2IMAGE_BLOCKS`) and see how we can build the new pipeline from them.

```py
>>> from diffusers.modular_pipelines.stable_diffusion_xl import IMAGE2IMAGE_BLOCKS
>>> # IMAGE2IMAGE_BLOCKS is an InsertableDict mapping block names to block classes.
>>> # For reference, it is defined in the library as:
>>> # IMAGE2IMAGE_BLOCKS = InsertableDict([
>>> #     ("text_encoder", StableDiffusionXLTextEncoderStep),
>>> #     ("image_encoder", StableDiffusionXLVaeEncoderStep),
>>> #     ("input", StableDiffusionXLInputStep),
>>> #     ("set_timesteps", StableDiffusionXLImg2ImgSetTimestepsStep),
>>> #     ("prepare_latents", StableDiffusionXLImg2ImgPrepareLatentsStep),
>>> #     ("prepare_add_cond", StableDiffusionXLImg2ImgPrepareAdditionalConditioningStep),
>>> #     ("denoise", StableDiffusionXLDenoiseStep),
>>> #     ("decode", StableDiffusionXLDecodeStep)
>>> # ])
```

Note that `denoise` (`StableDiffusionXLDenoiseStep`) is a `LoopSequentialPipelineBlocks` that contains three loop blocks (learn more about `LoopSequentialPipelineBlocks` [here](https://huggingface.co/docs/diffusers/modular_diffusers/write_own_pipeline_block#loopsequentialpipelineblocks)).

```py
>>> denoise_blocks = IMAGE2IMAGE_BLOCKS["denoise"]()
>>> print(denoise_blocks)
```

```out
StableDiffusionXLDenoiseStep(
  Class: StableDiffusionXLDenoiseLoopWrapper

  Description: Denoise step that iteratively denoise the latents.
      Its loop logic is defined in `StableDiffusionXLDenoiseLoopWrapper.__call__` method
      At each iteration, it runs blocks defined in `sub_blocks` sequencially:
       - `StableDiffusionXLLoopBeforeDenoiser`
       - `StableDiffusionXLLoopDenoiser`
       - `StableDiffusionXLLoopAfterDenoiser`
      This block supports both text2img and img2img tasks.


  Components:
      scheduler (`EulerDiscreteScheduler`)
      guider (`ClassifierFreeGuidance`)
      unet (`UNet2DConditionModel`)

  Sub-Blocks:
    [0] before_denoiser (StableDiffusionXLLoopBeforeDenoiser)
       Description: step within the denoising loop that prepare the latent input for the denoiser. This block should be used to compose the `sub_blocks` attribute of a `LoopSequentialPipelineBlocks` object (e.g. `StableDiffusionXLDenoiseLoopWrapper`)

    [1] denoiser (StableDiffusionXLLoopDenoiser)
       Description: Step within the denoising loop that denoise the latents with guidance. This block should be used to compose the `sub_blocks` attribute of a `LoopSequentialPipelineBlocks` object (e.g. `StableDiffusionXLDenoiseLoopWrapper`)

    [2] after_denoiser (StableDiffusionXLLoopAfterDenoiser)
       Description: step within the denoising loop that update the latents. This block should be used to compose the `sub_blocks` attribute of a `LoopSequentialPipelineBlocks` object (e.g. `StableDiffusionXLDenoiseLoopWrapper`)

)
```
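The output above describes the loop pattern: on every iteration, the wrapper runs each sub-block in order, passing the loop index `i` and timestep `t` (matching the `__call__(self, components, block_state, i, t)` signature used later in this guide). Below is a framework-free toy version of that control flow. The class and the three step functions are hypothetical and only record which step ran at which timestep.

```python
class ToyLoopWrapper:
    """Hypothetical loop container: runs every sub-block once per timestep, in order."""

    def __init__(self, sub_blocks):
        self.sub_blocks = sub_blocks  # list of (name, callable) pairs

    def __call__(self, block_state, timesteps):
        for i, t in enumerate(timesteps):
            for _, block in self.sub_blocks:
                block_state = block(block_state, i, t)
        return block_state


def before_denoiser(state, i, t):
    state["log"].append(f"before@{t}")
    return state


def denoiser(state, i, t):
    state["log"].append(f"denoise@{t}")
    return state


def after_denoiser(state, i, t):
    state["log"].append(f"after@{t}")
    return state


loop = ToyLoopWrapper([
    ("before_denoiser", before_denoiser),
    ("denoiser", denoiser),
    ("after_denoiser", after_denoiser),
])
state = loop({"log": []}, timesteps=[999, 500])
print(state["log"])
# ['before@999', 'denoise@999', 'after@999', 'before@500', 'denoise@500', 'after@500']
```

Because only the container owns the loop, swapping out a single sub-block (as differential diffusion does with `before_denoiser`) leaves the other two untouched.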

Let's compare standard image-to-image and differential diffusion. The key algorithmic difference is that standard image-to-image applies uniform noise across all pixels based on a single `strength` parameter, whereas differential diffusion uses a change map in which each pixel's value determines when that region starts denoising. At each step, regions whose map value exceeds the current threshold are "frozen" by replacing them with the noised original latents, so regions with higher values stay frozen for more steps and preserve more of the original image.
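The thresholding rule used later in this guide (`diffdiff_map > thresholds + denoising_start`, with `thresholds = arange(N) / N`) can be checked by hand. This pure-Python sketch applies it to a 1-D map of three hypothetical pixel values and counts, for each pixel, how many of the N steps it spends replaced by the noised original latents. It deliberately ignores tensors, devices, and image resizing.

```python
def diffdiff_masks(change_map, num_inference_steps, denoising_start=None):
    """Return masks[i][p] == True where pixel p is replaced by the noised original at step i."""
    offset = denoising_start or 0
    thresholds = [i / num_inference_steps for i in range(num_inference_steps)]
    return [[value > (thr + offset) for value in change_map] for thr in thresholds]


change_map = [0.0, 0.5, 1.0]  # hypothetical map values in [0, 1]
masks = diffdiff_masks(change_map, num_inference_steps=4)

# Count how many steps each pixel is held to the noised original latents
locked_steps = [sum(step[p] for step in masks) for p in range(len(change_map))]
print(masks)
print(locked_steps)  # [0, 2, 4]
```

A pixel with value v is held for roughly v * N steps: a value of 0 is never held (apart from the special-cased first step in `before_denoiser`), and a value of 1 is held at every step.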

Therefore, the key differences in the pipeline implementation are:

1. The `prepare_latents` step (which prepares the change map and pre-computes noised latents for all timesteps)
2. The `denoise` step (which selectively applies denoising based on the change map)
3. Since differential diffusion doesn't use the `strength` parameter, we'll use the text-to-image `set_timesteps` step instead of the image-to-image version

|

||||

|

||||

To implement differntial diffusion, we can reuse most blocks from image-to-image and text-to-image workflows, only modifying the `prepare_latents` step and the first part of the `denoise` step (i.e. `before_denoiser (StableDiffusionXLLoopBeforeDenoiser)`).

|

||||

|

||||

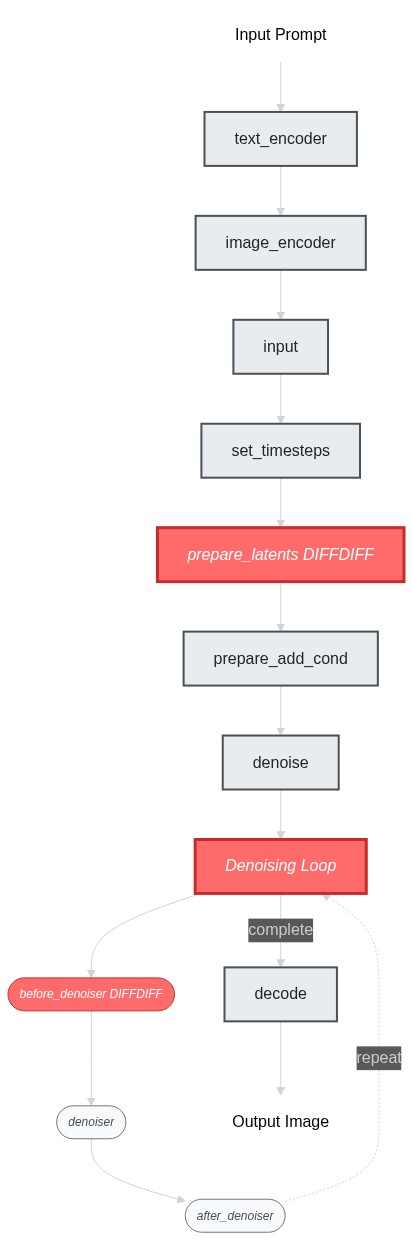

Here's a flowchart showing the pipeline structure and the changes we need to make:

### Build a Working Pipeline Structure

Now that we've identified the blocks to modify, let's build the pipeline skeleton first. At this stage, the goal is to get the pipeline structure working end-to-end, even though it still only reproduces the img2img behavior. We'll simply create placeholder blocks by copying existing ones:

```py
>>> # Copy existing blocks as placeholders
>>> class SDXLDiffDiffPrepareLatentsStep(PipelineBlock):
...     """Copied from StableDiffusionXLImg2ImgPrepareLatentsStep (will modify later)"""
...     # ... same implementation as StableDiffusionXLImg2ImgPrepareLatentsStep
...
>>> class SDXLDiffDiffLoopBeforeDenoiser(PipelineBlock):
...     """Copied from StableDiffusionXLLoopBeforeDenoiser (will modify later)"""
...     # ... same implementation as StableDiffusionXLLoopBeforeDenoiser
```

`SDXLDiffDiffLoopBeforeDenoiser` is the part of the denoise loop we need to change. Let's use it to assemble an `SDXLDiffDiffDenoiseStep`:

```py
>>> class SDXLDiffDiffDenoiseStep(StableDiffusionXLDenoiseLoopWrapper):
...     block_classes = [SDXLDiffDiffLoopBeforeDenoiser, StableDiffusionXLLoopDenoiser, StableDiffusionXLLoopAfterDenoiser]
...     block_names = ["before_denoiser", "denoiser", "after_denoiser"]
```

Now we can put together our differential diffusion pipeline.

```py
>>> from diffusers.modular_pipelines import SequentialPipelineBlocks
>>> from diffusers.modular_pipelines.stable_diffusion_xl import TEXT2IMAGE_BLOCKS
>>>
>>> DIFFDIFF_BLOCKS = IMAGE2IMAGE_BLOCKS.copy()
>>> DIFFDIFF_BLOCKS["set_timesteps"] = TEXT2IMAGE_BLOCKS["set_timesteps"]
>>> DIFFDIFF_BLOCKS["prepare_latents"] = SDXLDiffDiffPrepareLatentsStep
>>> DIFFDIFF_BLOCKS["denoise"] = SDXLDiffDiffDenoiseStep
>>>
>>> dd_blocks = SequentialPipelineBlocks.from_blocks_dict(DIFFDIFF_BLOCKS)
>>> print(dd_blocks)
>>> # At this point, the pipeline works exactly like img2img since our blocks are just copies
```
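`DIFFDIFF_BLOCKS` starts as a copy so that overriding entries leaves the `IMAGE2IMAGE_BLOCKS` preset untouched, and assigning to an existing key keeps that step in its original position. Here's a quick illustration with a plain `collections.OrderedDict`, assuming `InsertableDict.copy()` behaves like an ordinary ordered-dict copy; the string values are placeholders for block classes.

```python
from collections import OrderedDict

img2img = OrderedDict([
    ("text_encoder", "TextEncoderStep"),
    ("set_timesteps", "Img2ImgSetTimestepsStep"),
    ("prepare_latents", "Img2ImgPrepareLatentsStep"),
    ("denoise", "DenoiseStep"),
    ("decode", "DecodeStep"),
])

diffdiff = img2img.copy()  # shallow copy: a new mapping holding the same values
diffdiff["set_timesteps"] = "SetTimestepsStep"
diffdiff["prepare_latents"] = "DiffDiffPrepareLatentsStep"
diffdiff["denoise"] = "DiffDiffDenoiseStep"

print(list(diffdiff) == list(img2img))  # True: same step order
print(img2img["denoise"])               # DenoiseStep: the preset is unchanged
```

Assigning without the copy would silently modify the shared preset for every later user of `IMAGE2IMAGE_BLOCKS` in the same session.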

### Set up an example

Our blocks should now compile without errors, so we can move on to the next step. Let's set up a simple example so we can run the pipeline as we build it. Differential diffusion uses the same model checkpoints as SDXL, so we can fetch the models from a regular SDXL repo.

```py
>>> import torch
>>> dd_pipeline = dd_blocks.init_pipeline("YiYiXu/modular-demo-auto", collection="diffdiff")
>>> dd_pipeline.load_default_components(torch_dtype=torch.float16)
>>> dd_pipeline.to("cuda")
```

We will use this example script:

```py
>>> from diffusers.utils import load_image
>>>
>>> image = load_image("https://huggingface.co/datasets/OzzyGT/testing-resources/resolve/main/differential/20240329211129_4024911930.png?download=true")
>>> mask = load_image("https://huggingface.co/datasets/OzzyGT/testing-resources/resolve/main/differential/gradient_mask.png?download=true")
>>>
>>> prompt = "a green pear"
>>> negative_prompt = "blurry"
>>>
>>> image = dd_pipeline(
...     prompt=prompt,
...     negative_prompt=negative_prompt,
...     num_inference_steps=25,
...     diffdiff_map=mask,
...     image=image,
...     output="images"
... )[0]
>>>
>>> image.save("diffdiff_out.png")
```

If you run the script now, the pipeline will complain about the unexpected input `diffdiff_map`, and you'll get the same result as the original img2img pipeline.

### Implement your custom logic and test incrementally

Let's modify the pipeline so that we get the expected result with this example script.

We'll start with the `prepare_latents` step. The main changes are:

- Requires a new user input `diffdiff_map`
- Requires a new component `mask_processor` to process the `diffdiff_map`
- Requires new intermediate inputs:
  - Needs `timesteps` instead of `latent_timestep` to precompute all the latents
  - Needs `num_inference_steps` to create the `diffdiff_masks`
- Creates new outputs `diffdiff_masks` and `original_latents`
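Precomputing `original_latents` means noising the clean image latents once per timestep instead of only once at `latent_timestep`. As a scalar sketch, assume an Euler-style forward process in which a sample at noise level sigma is `x0 + sigma * noise` (our understanding of how `EulerDiscreteScheduler.add_noise` noises samples). The sigma values below are made up for illustration, and reusing a single noise draw across all levels is an assumption of this sketch.

```python
import random


def precompute_noised_latents(x0, sigmas, seed=0):
    """Return one noised copy of x0 per noise level: x0 + sigma * noise (Euler-style)."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]  # single noise draw shared by all levels
    return [[x + sigma * n for x, n in zip(x0, noise)] for sigma in sigmas]


x0 = [0.2, -0.1, 0.4]            # toy "clean latents"
sigmas = [14.6, 7.0, 2.5, 0.0]   # hypothetical decreasing noise levels, one per step
original_latents = precompute_noised_latents(x0, sigmas)
print(len(original_latents))       # 4: one entry per inference step
print(original_latents[-1] == x0)  # True: sigma 0 leaves the latents unchanged
```

In the tensor version, the same idea produces a stack with a leading dimension of `num_inference_steps`, which is why the denoise loop can index it as `original_latents[i]`.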

<Tip>

💡 Use `print(dd_pipeline.doc)` to check the compiled inputs and outputs of the built pipeline.

For example, after adding `diffdiff_map` as an input in this step, run `print(dd_pipeline.doc)` to verify that it shows up in the docstring as a user input.

</Tip>

Once we've made sure all the variables we need are available in the block state, we can implement the diff-diff logic inside `__call__`. We create two new variables: the per-step change masks `diffdiff_masks` and the pre-computed noised latents for all timesteps, `original_latents`.

<Tip>

💡 Implement incrementally! Run the example script as you go, and insert `print(state)` and `print(block_state)` throughout the `__call__` method to inspect the intermediate results. This helps you understand what's going on and what each line you just added does.

</Tip>

Here are the key changes we made to implement differential diffusion:

**1. Modified `prepare_latents` step:**

```diff
 class SDXLDiffDiffPrepareLatentsStep(PipelineBlock):
     @property
     def expected_components(self) -> List[ComponentSpec]:
         return [
             ComponentSpec("vae", AutoencoderKL),
             ComponentSpec("scheduler", EulerDiscreteScheduler),
+            ComponentSpec("mask_processor", VaeImageProcessor, config=FrozenDict({"do_normalize": False, "do_convert_grayscale": True}))
         ]

     @property
     def inputs(self) -> List[InputParam]:
         return [
             # ... existing inputs ...
+            InputParam("diffdiff_map", required=True),
         ]

     @property
     def intermediate_inputs(self) -> List[InputParam]:
         return [
             InputParam("generator"),
-            InputParam("latent_timestep", required=True, type_hint=torch.Tensor),
+            InputParam("timesteps", type_hint=torch.Tensor),
+            InputParam("num_inference_steps", type_hint=int),
         ]

     @property
     def intermediate_outputs(self) -> List[OutputParam]:
         return [
+            OutputParam("original_latents", type_hint=torch.Tensor),
+            OutputParam("diffdiff_masks", type_hint=torch.Tensor),
         ]

     def __call__(self, components, state: PipelineState):
         # ... existing logic ...
+        # Process change map and create masks
+        device = components._execution_device
+        diffdiff_map = components.mask_processor.preprocess(block_state.diffdiff_map, height=latent_height, width=latent_width)
+        diffdiff_map = diffdiff_map.squeeze(0).to(device)  # (1, H, W)
+        thresholds = torch.arange(block_state.num_inference_steps, dtype=diffdiff_map.dtype) / block_state.num_inference_steps
+        thresholds = thresholds.unsqueeze(1).unsqueeze(1).to(device)  # (num_inference_steps, 1, 1)
+        # Boolean masks with shape (num_inference_steps, H, W)
+        block_state.diffdiff_masks = diffdiff_map > (thresholds + (block_state.denoising_start or 0))
+        block_state.original_latents = block_state.latents
         self.set_block_state(state, block_state)
         return components, state
```

**2. Modified `before_denoiser` step:**

```diff
 class SDXLDiffDiffLoopBeforeDenoiser(PipelineBlock):
     @property
     def description(self) -> str:
         return (
             "Step within the denoising loop for differential diffusion that prepares the latent input for the denoiser"
         )

     @property
     def inputs(self) -> List[InputParam]:
         return [
             InputParam("denoising_start"),
         ]

     @property
     def intermediate_inputs(self) -> List[InputParam]:
         return [
             InputParam("latents", required=True, type_hint=torch.Tensor),
             InputParam("original_latents", type_hint=torch.Tensor),
             InputParam("diffdiff_masks", type_hint=torch.Tensor),
         ]

     def __call__(self, components, block_state, i, t):
         # Apply differential diffusion logic
         if i == 0 and block_state.denoising_start is None:
             block_state.latents = block_state.original_latents[:1]
         else:
             # Cast the boolean mask to the latents' dtype: `1 - mask` is not supported for bool tensors
             block_state.mask = block_state.diffdiff_masks[i].unsqueeze(0).unsqueeze(1).to(block_state.latents.dtype)
             block_state.latents = block_state.original_latents[i] * block_state.mask + block_state.latents * (1 - block_state.mask)

         # ... rest of existing logic ...
```
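Putting the two modified blocks together, this scalar sketch follows the `before_denoiser` logic above in pure Python, with a hypothetical `fake_denoise_step` standing in for the UNet and scheduler update. At step 0 the latents start from the most-noised original; afterwards, each pixel is overwritten with `original_latents[i]` wherever its mask is set. Converting the boolean mask to a number before the arithmetic matters in the tensor version too, since PyTorch does not support `1 - mask` on bool tensors.

```python
def fake_denoise_step(latents):
    # Hypothetical stand-in for denoiser + scheduler: move each value halfway toward 1.0
    return [x + 0.5 * (1.0 - x) for x in latents]


def run_diffdiff_loop(original_latents, masks, denoising_start=None):
    latents = None
    for i in range(len(masks)):
        if i == 0 and denoising_start is None:
            # First step: start from the most-noised original latents
            latents = list(original_latents[0])
        else:
            mask = [float(m) for m in masks[i]]  # bool -> float before blending
            latents = [o * m + x * (1 - m) for o, m, x in zip(original_latents[i], mask, latents)]
        latents = fake_denoise_step(latents)
    return latents


original_latents = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # toy: "original" is all zeros at every level
masks = [[False, True], [False, True], [False, True]]    # pixel 1 is always held to the original
print(run_diffdiff_loop(original_latents, masks))         # [0.875, 0.5]
```

Pixel 0 is denoised freely for all three steps, while pixel 1 is reset to the original before every step after the first, so it only ever receives a single step's worth of change.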

That's all there is to it! We've just created a simple sequential pipeline by mixing and matching existing and new pipeline blocks.

Now let's rebuild the pipeline following the process from step 2 and inspect it.

```py
>>> dd_pipeline
SequentialPipelineBlocks(
  Class: ModularPipelineBlocks

  Description:


  Components:
      text_encoder (`CLIPTextModel`)
      text_encoder_2 (`CLIPTextModelWithProjection`)
      tokenizer (`CLIPTokenizer`)
      tokenizer_2 (`CLIPTokenizer`)
      guider (`ClassifierFreeGuidance`)
      vae (`AutoencoderKL`)
      image_processor (`VaeImageProcessor`)
      scheduler (`EulerDiscreteScheduler`)
      mask_processor (`VaeImageProcessor`)
      unet (`UNet2DConditionModel`)

  Configs:
      force_zeros_for_empty_prompt (default: True)
      requires_aesthetics_score (default: False)

  Blocks:
    [0] text_encoder (StableDiffusionXLTextEncoderStep)
       Description: Text Encoder step that generate text_embeddings to guide the image generation

    [1] image_encoder (StableDiffusionXLVaeEncoderStep)
       Description: Vae Encoder step that encode the input image into a latent representation

    [2] input (StableDiffusionXLInputStep)
       Description: Input processing step that:
                      1. Determines `batch_size` and `dtype` based on `prompt_embeds`
                      2. Adjusts input tensor shapes based on `batch_size` (number of prompts) and `num_images_per_prompt`

                    All input tensors are expected to have either batch_size=1 or match the batch_size
                    of prompt_embeds. The tensors will be duplicated across the batch dimension to
                    have a final batch_size of batch_size * num_images_per_prompt.

    [3] set_timesteps (StableDiffusionXLSetTimestepsStep)
       Description: Step that sets the scheduler's timesteps for inference

    [4] prepare_latents (SDXLDiffDiffPrepareLatentsStep)
       Description: Step that prepares the latents for the differential diffusion generation process

    [5] prepare_add_cond (StableDiffusionXLImg2ImgPrepareAdditionalConditioningStep)
       Description: Step that prepares the additional conditioning for the image-to-image/inpainting generation process

    [6] denoise (SDXLDiffDiffDenoiseStep)
       Description: Pipeline block that iteratively denoise the latents over `timesteps`. The specific steps with each iteration can be customized with `sub_blocks` attributes

    [7] decode (StableDiffusionXLDecodeStep)
       Description: Step that decodes the denoised latents into images

)
```

Run the example now; you should see an apple with its right half transformed into a green pear.

## Adding IP-adapter

We provide an auto IP-adapter block that you can plug and play into your modular workflow. It's an `AutoPipelineBlocks`, so it only runs when the user passes an IP-adapter image. In this tutorial, we'll focus on how to package it into your differential diffusion workflow. To learn more about `AutoPipelineBlocks`, see [here](https://huggingface.co/docs/diffusers/modular_diffusers/write_own_pipeline_block#autopipelineblocks).
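The run-or-skip behavior of an auto block can be pictured as a lookup over trigger inputs: the first sub-block whose trigger input was provided (not `None`) runs, and if none match, the auto block is skipped. The sketch below is a toy model of that selection rule with a hypothetical `select_block` helper, not the `AutoPipelineBlocks` implementation.

```python
def select_block(blocks, trigger_inputs, **user_inputs):
    """Return the name of the first block whose trigger input was provided, else None."""
    for name, trigger in zip(blocks, trigger_inputs):
        if user_inputs.get(trigger) is not None:
            return name
    return None  # no trigger present: the auto block is skipped


blocks = ["ip_adapter"]
triggers = ["ip_adapter_image"]
print(select_block(blocks, triggers, prompt="a green pear"))                          # None
print(select_block(blocks, triggers, prompt="a green pear", ip_adapter_image="img"))  # ip_adapter
```

This is why adding the IP-adapter block does not change the default behavior: without `ip_adapter_image`, the pipeline runs exactly as before.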

We covered how to add an IP-adapter to your workflow in the [getting-started guide](https://huggingface.co/docs/diffusers/modular_diffusers/quicktour#ip-adapter). Let's go ahead and create the IP-adapter block.

```py
>>> from diffusers.modular_pipelines.stable_diffusion_xl.encoders import StableDiffusionXLAutoIPAdapterStep
>>> ip_adapter_block = StableDiffusionXLAutoIPAdapterStep()
```

We can add the IP-adapter block instance directly to the `dd_blocks` we created earlier. The `sub_blocks` attribute is an `InsertableDict`, so we can insert the block at a specific position (index `0` here).

```py
>>> dd_blocks.sub_blocks.insert("ip_adapter", ip_adapter_block, 0)
```
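`InsertableDict.insert(key, value, index)` places a new entry at a given position. To reason about the resulting order, here is a minimal sketch of the same operation on a plain `OrderedDict`. `insert_at` is a hypothetical helper, not a diffusers function, and unlike the in-place `insert` call above, it returns a new dict for simplicity.

```python
from collections import OrderedDict


def insert_at(od, key, value, index):
    """Return a new OrderedDict with (key, value) placed at position `index`."""
    items = list(od.items())
    items.insert(index, (key, value))
    return OrderedDict(items)


blocks = OrderedDict([
    ("text_encoder", "TextEncoderStep"),
    ("image_encoder", "VaeEncoderStep"),
    ("input", "InputStep"),
])
blocks = insert_at(blocks, "ip_adapter", "AutoIPAdapterStep", 0)
print(list(blocks))  # ['ip_adapter', 'text_encoder', 'image_encoder', 'input']
```

Placing the IP-adapter step at index `0` ensures its image embeddings are produced before any later block that consumes them.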

Take a look at the new diff-diff pipeline with IP-adapter!

```py
>>> print(dd_blocks)
```

The pipeline now lists the IP-adapter as its first block and tells you that it runs only if `ip_adapter_image` is provided. It also includes the two new components from the IP-adapter: `image_encoder` and `feature_extractor`.

```out
SequentialPipelineBlocks(
  Class: ModularPipelineBlocks

  ====================================================================================================
  This pipeline contains blocks that are selected at runtime based on inputs.
  Trigger Inputs: {'ip_adapter_image'}
  Use `get_execution_blocks()` with input names to see selected blocks (e.g. `get_execution_blocks('ip_adapter_image')`).
  ====================================================================================================


  Description:


  Components:
      image_encoder (`CLIPVisionModelWithProjection`)
      feature_extractor (`CLIPImageProcessor`)
      unet (`UNet2DConditionModel`)
      guider (`ClassifierFreeGuidance`)
      text_encoder (`CLIPTextModel`)
      text_encoder_2 (`CLIPTextModelWithProjection`)
      tokenizer (`CLIPTokenizer`)
      tokenizer_2 (`CLIPTokenizer`)
      vae (`AutoencoderKL`)
      image_processor (`VaeImageProcessor`)
      scheduler (`EulerDiscreteScheduler`)
      mask_processor (`VaeImageProcessor`)

  Configs:
      force_zeros_for_empty_prompt (default: True)
      requires_aesthetics_score (default: False)

  Blocks:
    [0] ip_adapter (StableDiffusionXLAutoIPAdapterStep)
       Description: Run IP Adapter step if `ip_adapter_image` is provided.

    [1] text_encoder (StableDiffusionXLTextEncoderStep)
       Description: Text Encoder step that generate text_embeddings to guide the image generation

    [2] image_encoder (StableDiffusionXLVaeEncoderStep)
       Description: Vae Encoder step that encode the input image into a latent representation

    [3] input (StableDiffusionXLInputStep)
       Description: Input processing step that:
                      1. Determines `batch_size` and `dtype` based on `prompt_embeds`
                      2. Adjusts input tensor shapes based on `batch_size` (number of prompts) and `num_images_per_prompt`

                    All input tensors are expected to have either batch_size=1 or match the batch_size
                    of prompt_embeds. The tensors will be duplicated across the batch dimension to
                    have a final batch_size of batch_size * num_images_per_prompt.

    [4] set_timesteps (StableDiffusionXLSetTimestepsStep)
       Description: Step that sets the scheduler's timesteps for inference

    [5] prepare_latents (SDXLDiffDiffPrepareLatentsStep)
       Description: Step that prepares the latents for the differential diffusion generation process

    [6] prepare_add_cond (StableDiffusionXLImg2ImgPrepareAdditionalConditioningStep)