mirror of

https://github.com/huggingface/diffusers.git

synced 2026-02-10 12:55:19 +08:00

Compare commits

23 Commits

fix-model-

...

max-parall

| Author | SHA1 | Date |
|---|---|---|
| | ca6d41de0d | |
| | 61e962d7d0 | |
| | 7492690505 | |
| | decd6758f3 | |
| | 0d23645bd1 | |
| | 7fa3e5b0f6 | |
| | 49b959b540 | |
| | 58237364b1 | |
| | 3e35628873 | |
| | 6a479588db | |
| | fa489eaed6 | |
| | 0d7c479023 | |
| | ce97d7e19b | |
| | 44ba90caff | |
| | 3c85a57297 | |
| | 03ca11318e | |
| | 3ffa7b46e5 | |
| | c1b2a89e34 | |
| | 435d37ce5a | |
| | 5915c2985d | |
| | 21a7ff12a7 | |
| | 8909ab4b19 | |
| | c1edb03c37 | |

50

.github/workflows/nightly_tests.yml

vendored

@@ -19,7 +19,7 @@ env:

|

|||||||

jobs:

|

jobs:

|

||||||

setup_torch_cuda_pipeline_matrix:

|

setup_torch_cuda_pipeline_matrix:

|

||||||

name: Setup Torch Pipelines Matrix

|

name: Setup Torch Pipelines Matrix

|

||||||

runs-on: ubuntu-latest

|

runs-on: diffusers/diffusers-pytorch-cpu

|

||||||

outputs:

|

outputs:

|

||||||

pipeline_test_matrix: ${{ steps.fetch_pipeline_matrix.outputs.pipeline_test_matrix }}

|

pipeline_test_matrix: ${{ steps.fetch_pipeline_matrix.outputs.pipeline_test_matrix }}

|

||||||

steps:

|

steps:

|

||||||

@@ -67,19 +67,19 @@ jobs:

|

|||||||

fetch-depth: 2

|

fetch-depth: 2

|

||||||

- name: NVIDIA-SMI

|

- name: NVIDIA-SMI

|

||||||

run: nvidia-smi

|

run: nvidia-smi

|

||||||

|

|

||||||

- name: Install dependencies

|

- name: Install dependencies

|

||||||

run: |

|

run: |

|

||||||

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||||

python -m uv pip install -e [quality,test]

|

python -m uv pip install -e [quality,test]

|

||||||

python -m uv pip install accelerate@git+https://github.com/huggingface/accelerate.git

|

python -m uv pip install accelerate@git+https://github.com/huggingface/accelerate.git

|

||||||

python -m uv pip install pytest-reportlog

|

python -m uv pip install pytest-reportlog

|

||||||

|

|

||||||

- name: Environment

|

- name: Environment

|

||||||

run: |

|

run: |

|

||||||

python utils/print_env.py

|

python utils/print_env.py

|

||||||

|

|

||||||

- name: Nightly PyTorch CUDA checkpoint (pipelines) tests

|

- name: Nightly PyTorch CUDA checkpoint (pipelines) tests

|

||||||

env:

|

env:

|

||||||

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

||||||

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

||||||

@@ -88,9 +88,9 @@ jobs:

|

|||||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||||

-s -v -k "not Flax and not Onnx" \

|

-s -v -k "not Flax and not Onnx" \

|

||||||

--make-reports=tests_pipeline_${{ matrix.module }}_cuda \

|

--make-reports=tests_pipeline_${{ matrix.module }}_cuda \

|

||||||

--report-log=tests_pipeline_${{ matrix.module }}_cuda.log \

|

--report-log=tests_pipeline_${{ matrix.module }}_cuda.log \

|

||||||

tests/pipelines/${{ matrix.module }}

|

tests/pipelines/${{ matrix.module }}

|

||||||

|

|

||||||

- name: Failure short reports

|

- name: Failure short reports

|

||||||

if: ${{ failure() }}

|

if: ${{ failure() }}

|

||||||

run: |

|

run: |

|

||||||

@@ -103,7 +103,7 @@ jobs:

|

|||||||

with:

|

with:

|

||||||

name: pipeline_${{ matrix.module }}_test_reports

|

name: pipeline_${{ matrix.module }}_test_reports

|

||||||

path: reports

|

path: reports

|

||||||

|

|

||||||

- name: Generate Report and Notify Channel

|

- name: Generate Report and Notify Channel

|

||||||

if: always()

|

if: always()

|

||||||

run: |

|

run: |

|

||||||

@@ -112,7 +112,7 @@ jobs:

|

|||||||

|

|

||||||

run_nightly_tests_for_other_torch_modules:

|

run_nightly_tests_for_other_torch_modules:

|

||||||

name: Torch Non-Pipelines CUDA Nightly Tests

|

name: Torch Non-Pipelines CUDA Nightly Tests

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

||||||

@@ -139,7 +139,7 @@ jobs:

|

|||||||

run: python utils/print_env.py

|

run: python utils/print_env.py

|

||||||

|

|

||||||

- name: Run nightly PyTorch CUDA tests for non-pipeline modules

|

- name: Run nightly PyTorch CUDA tests for non-pipeline modules

|

||||||

if: ${{ matrix.module != 'examples'}}

|

if: ${{ matrix.module != 'examples'}}

|

||||||

env:

|

env:

|

||||||

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

||||||

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

# https://pytorch.org/docs/stable/notes/randomness.html#avoiding-nondeterministic-algorithms

|

||||||

@@ -148,7 +148,7 @@ jobs:

|

|||||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||||

-s -v -k "not Flax and not Onnx" \

|

-s -v -k "not Flax and not Onnx" \

|

||||||

--make-reports=tests_torch_${{ matrix.module }}_cuda \

|

--make-reports=tests_torch_${{ matrix.module }}_cuda \

|

||||||

--report-log=tests_torch_${{ matrix.module }}_cuda.log \

|

--report-log=tests_torch_${{ matrix.module }}_cuda.log \

|

||||||

tests/${{ matrix.module }}

|

tests/${{ matrix.module }}

|

||||||

|

|

||||||

- name: Run nightly example tests with Torch

|

- name: Run nightly example tests with Torch

|

||||||

@@ -161,13 +161,13 @@ jobs:

|

|||||||

python -m uv pip install peft@git+https://github.com/huggingface/peft.git

|

python -m uv pip install peft@git+https://github.com/huggingface/peft.git

|

||||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||||

-s -v --make-reports=examples_torch_cuda \

|

-s -v --make-reports=examples_torch_cuda \

|

||||||

--report-log=examples_torch_cuda.log \

|

--report-log=examples_torch_cuda.log \

|

||||||

examples/

|

examples/

|

||||||

|

|

||||||

- name: Failure short reports

|

- name: Failure short reports

|

||||||

if: ${{ failure() }}

|

if: ${{ failure() }}

|

||||||

run: |

|

run: |

|

||||||

cat reports/tests_torch_${{ matrix.module }}_cuda_stats.txt

|

cat reports/tests_torch_${{ matrix.module }}_cuda_stats.txt

|

||||||

cat reports/tests_torch_${{ matrix.module }}_cuda_failures_short.txt

|

cat reports/tests_torch_${{ matrix.module }}_cuda_failures_short.txt

|

||||||

|

|

||||||

- name: Test suite reports artifacts

|

- name: Test suite reports artifacts

|

||||||

@@ -185,7 +185,7 @@ jobs:

|

|||||||

|

|

||||||

run_lora_nightly_tests:

|

run_lora_nightly_tests:

|

||||||

name: Nightly LoRA Tests with PEFT and TORCH

|

name: Nightly LoRA Tests with PEFT and TORCH

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

||||||

@@ -218,13 +218,13 @@ jobs:

|

|||||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||||

-s -v -k "not Flax and not Onnx" \

|

-s -v -k "not Flax and not Onnx" \

|

||||||

--make-reports=tests_torch_lora_cuda \

|

--make-reports=tests_torch_lora_cuda \

|

||||||

--report-log=tests_torch_lora_cuda.log \

|

--report-log=tests_torch_lora_cuda.log \

|

||||||

tests/lora

|

tests/lora

|

||||||

|

|

||||||

- name: Failure short reports

|

- name: Failure short reports

|

||||||

if: ${{ failure() }}

|

if: ${{ failure() }}

|

||||||

run: |

|

run: |

|

||||||

cat reports/tests_torch_lora_cuda_stats.txt

|

cat reports/tests_torch_lora_cuda_stats.txt

|

||||||

cat reports/tests_torch_lora_cuda_failures_short.txt

|

cat reports/tests_torch_lora_cuda_failures_short.txt

|

||||||

|

|

||||||

- name: Test suite reports artifacts

|

- name: Test suite reports artifacts

|

||||||

@@ -239,12 +239,12 @@ jobs:

|

|||||||

run: |

|

run: |

|

||||||

pip install slack_sdk tabulate

|

pip install slack_sdk tabulate

|

||||||

python scripts/log_reports.py >> $GITHUB_STEP_SUMMARY

|

python scripts/log_reports.py >> $GITHUB_STEP_SUMMARY

|

||||||

|

|

||||||

run_flax_tpu_tests:

|

run_flax_tpu_tests:

|

||||||

name: Nightly Flax TPU Tests

|

name: Nightly Flax TPU Tests

|

||||||

runs-on: docker-tpu

|

runs-on: docker-tpu

|

||||||

if: github.event_name == 'schedule'

|

if: github.event_name == 'schedule'

|

||||||

|

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-flax-tpu

|

image: diffusers/diffusers-flax-tpu

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --privileged

|

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --privileged

|

||||||

@@ -274,7 +274,7 @@ jobs:

|

|||||||

python -m pytest -n 0 \

|

python -m pytest -n 0 \

|

||||||

-s -v -k "Flax" \

|

-s -v -k "Flax" \

|

||||||

--make-reports=tests_flax_tpu \

|

--make-reports=tests_flax_tpu \

|

||||||

--report-log=tests_flax_tpu.log \

|

--report-log=tests_flax_tpu.log \

|

||||||

tests/

|

tests/

|

||||||

|

|

||||||

- name: Failure short reports

|

- name: Failure short reports

|

||||||

@@ -298,11 +298,11 @@ jobs:

|

|||||||

|

|

||||||

run_nightly_onnx_tests:

|

run_nightly_onnx_tests:

|

||||||

name: Nightly ONNXRuntime CUDA tests on Ubuntu

|

name: Nightly ONNXRuntime CUDA tests on Ubuntu

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-onnxruntime-cuda

|

image: diffusers/diffusers-onnxruntime-cuda

|

||||||

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

||||||

|

|

||||||

steps:

|

steps:

|

||||||

- name: Checkout diffusers

|

- name: Checkout diffusers

|

||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

@@ -321,7 +321,7 @@ jobs:

|

|||||||

|

|

||||||

- name: Environment

|

- name: Environment

|

||||||

run: python utils/print_env.py

|

run: python utils/print_env.py

|

||||||

|

|

||||||

- name: Run nightly ONNXRuntime CUDA tests

|

- name: Run nightly ONNXRuntime CUDA tests

|

||||||

env:

|

env:

|

||||||

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

HUGGING_FACE_HUB_TOKEN: ${{ secrets.HUGGING_FACE_HUB_TOKEN }}

|

||||||

@@ -329,7 +329,7 @@ jobs:

|

|||||||

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

python -m pytest -n 1 --max-worker-restart=0 --dist=loadfile \

|

||||||

-s -v -k "Onnx" \

|

-s -v -k "Onnx" \

|

||||||

--make-reports=tests_onnx_cuda \

|

--make-reports=tests_onnx_cuda \

|

||||||

--report-log=tests_onnx_cuda.log \

|

--report-log=tests_onnx_cuda.log \

|

||||||

tests/

|

tests/

|

||||||

|

|

||||||

- name: Failure short reports

|

- name: Failure short reports

|

||||||

@@ -344,7 +344,7 @@ jobs:

|

|||||||

with:

|

with:

|

||||||

name: ${{ matrix.config.report }}_test_reports

|

name: ${{ matrix.config.report }}_test_reports

|

||||||

path: reports

|

path: reports

|

||||||

|

|

||||||

- name: Generate Report and Notify Channel

|

- name: Generate Report and Notify Channel

|

||||||

if: always()

|

if: always()

|

||||||

run: |

|

run: |

|

||||||

|

|||||||

6

.github/workflows/pr_test_fetcher.yml

vendored

@@ -15,7 +15,7 @@ concurrency:

|

|||||||

jobs:

|

jobs:

|

||||||

setup_pr_tests:

|

setup_pr_tests:

|

||||||

name: Setup PR Tests

|

name: Setup PR Tests

|

||||||

runs-on: docker-cpu

|

runs-on: [ self-hosted, intel-cpu, 8-cpu, ci ]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cpu

|

image: diffusers/diffusers-pytorch-cpu

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

||||||

@@ -73,7 +73,7 @@ jobs:

|

|||||||

max-parallel: 2

|

max-parallel: 2

|

||||||

matrix:

|

matrix:

|

||||||

modules: ${{ fromJson(needs.setup_pr_tests.outputs.matrix) }}

|

modules: ${{ fromJson(needs.setup_pr_tests.outputs.matrix) }}

|

||||||

runs-on: docker-cpu

|

runs-on: [ self-hosted, intel-cpu, 8-cpu, ci ]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cpu

|

image: diffusers/diffusers-pytorch-cpu

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

||||||

@@ -123,7 +123,7 @@ jobs:

|

|||||||

config:

|

config:

|

||||||

- name: Hub tests for models, schedulers, and pipelines

|

- name: Hub tests for models, schedulers, and pipelines

|

||||||

framework: hub_tests_pytorch

|

framework: hub_tests_pytorch

|

||||||

runner: docker-cpu

|

runner: [ self-hosted, intel-cpu, 8-cpu, ci ]

|

||||||

image: diffusers/diffusers-pytorch-cpu

|

image: diffusers/diffusers-pytorch-cpu

|

||||||

report: torch_hub

|

report: torch_hub

|

||||||

|

|

||||||

|

|||||||

44

.github/workflows/push_tests.yml

vendored

@@ -21,7 +21,9 @@ env:

|

|||||||

jobs:

|

jobs:

|

||||||

setup_torch_cuda_pipeline_matrix:

|

setup_torch_cuda_pipeline_matrix:

|

||||||

name: Setup Torch Pipelines CUDA Slow Tests Matrix

|

name: Setup Torch Pipelines CUDA Slow Tests Matrix

|

||||||

runs-on: ubuntu-latest

|

runs-on: [ self-hosted, intel-cpu, 8-cpu, ci ]

|

||||||

|

container:

|

||||||

|

image: diffusers/diffusers-pytorch-cpu

|

||||||

outputs:

|

outputs:

|

||||||

pipeline_test_matrix: ${{ steps.fetch_pipeline_matrix.outputs.pipeline_test_matrix }}

|

pipeline_test_matrix: ${{ steps.fetch_pipeline_matrix.outputs.pipeline_test_matrix }}

|

||||||

steps:

|

steps:

|

||||||

@@ -29,14 +31,13 @@ jobs:

|

|||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

with:

|

with:

|

||||||

fetch-depth: 2

|

fetch-depth: 2

|

||||||

- name: Set up Python

|

|

||||||

uses: actions/setup-python@v4

|

|

||||||

with:

|

|

||||||

python-version: "3.8"

|

|

||||||

- name: Install dependencies

|

- name: Install dependencies

|

||||||

run: |

|

run: |

|

||||||

pip install -e .

|

python -m venv /opt/venv && export PATH="/opt/venv/bin:$PATH"

|

||||||

pip install huggingface_hub

|

python -m uv pip install -e [quality,test]

|

||||||

|

- name: Environment

|

||||||

|

run: |

|

||||||

|

python utils/print_env.py

|

||||||

- name: Fetch Pipeline Matrix

|

- name: Fetch Pipeline Matrix

|

||||||

id: fetch_pipeline_matrix

|

id: fetch_pipeline_matrix

|

||||||

run: |

|

run: |

|

||||||

@@ -55,12 +56,13 @@ jobs:

|

|||||||

needs: setup_torch_cuda_pipeline_matrix

|

needs: setup_torch_cuda_pipeline_matrix

|

||||||

strategy:

|

strategy:

|

||||||

fail-fast: false

|

fail-fast: false

|

||||||

|

max-parallel: 8

|

||||||

matrix:

|

matrix:

|

||||||

module: ${{ fromJson(needs.setup_torch_cuda_pipeline_matrix.outputs.pipeline_test_matrix) }}

|

module: ${{ fromJson(needs.setup_torch_cuda_pipeline_matrix.outputs.pipeline_test_matrix) }}

|

||||||

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0 --privileged

|

options: --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface/diffusers:/mnt/cache/ --gpus 0 --privileged

|

||||||

steps:

|

steps:

|

||||||

- name: Checkout diffusers

|

- name: Checkout diffusers

|

||||||

uses: actions/checkout@v3

|

uses: actions/checkout@v3

|

||||||

@@ -114,10 +116,10 @@ jobs:

|

|||||||

|

|

||||||

torch_cuda_tests:

|

torch_cuda_tests:

|

||||||

name: Torch CUDA Tests

|

name: Torch CUDA Tests

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

options: --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface/diffusers:/mnt/cache/ --gpus 0

|

||||||

defaults:

|

defaults:

|

||||||

run:

|

run:

|

||||||

shell: bash

|

shell: bash

|

||||||

@@ -166,10 +168,10 @@ jobs:

|

|||||||

|

|

||||||

peft_cuda_tests:

|

peft_cuda_tests:

|

||||||

name: PEFT CUDA Tests

|

name: PEFT CUDA Tests

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

options: --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface/diffusers:/mnt/cache/ --gpus 0

|

||||||

defaults:

|

defaults:

|

||||||

run:

|

run:

|

||||||

shell: bash

|

shell: bash

|

||||||

@@ -219,7 +221,7 @@ jobs:

|

|||||||

runs-on: docker-tpu

|

runs-on: docker-tpu

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-flax-tpu

|

image: diffusers/diffusers-flax-tpu

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --privileged

|

options: --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/ --privileged

|

||||||

defaults:

|

defaults:

|

||||||

run:

|

run:

|

||||||

shell: bash

|

shell: bash

|

||||||

@@ -263,10 +265,10 @@ jobs:

|

|||||||

|

|

||||||

onnx_cuda_tests:

|

onnx_cuda_tests:

|

||||||

name: ONNX CUDA Tests

|

name: ONNX CUDA Tests

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-onnxruntime-cuda

|

image: diffusers/diffusers-onnxruntime-cuda

|

||||||

options: --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/ --gpus 0

|

options: --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/ --gpus 0

|

||||||

defaults:

|

defaults:

|

||||||

run:

|

run:

|

||||||

shell: bash

|

shell: bash

|

||||||

@@ -311,11 +313,11 @@ jobs:

|

|||||||

run_torch_compile_tests:

|

run_torch_compile_tests:

|

||||||

name: PyTorch Compile CUDA tests

|

name: PyTorch Compile CUDA tests

|

||||||

|

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

|

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-compile-cuda

|

image: diffusers/diffusers-pytorch-compile-cuda

|

||||||

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/

|

||||||

|

|

||||||

steps:

|

steps:

|

||||||

- name: Checkout diffusers

|

- name: Checkout diffusers

|

||||||

@@ -352,11 +354,11 @@ jobs:

|

|||||||

run_xformers_tests:

|

run_xformers_tests:

|

||||||

name: PyTorch xformers CUDA tests

|

name: PyTorch xformers CUDA tests

|

||||||

|

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

|

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-xformers-cuda

|

image: diffusers/diffusers-pytorch-xformers-cuda

|

||||||

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/

|

||||||

|

|

||||||

steps:

|

steps:

|

||||||

- name: Checkout diffusers

|

- name: Checkout diffusers

|

||||||

@@ -393,11 +395,11 @@ jobs:

|

|||||||

run_examples_tests:

|

run_examples_tests:

|

||||||

name: Examples PyTorch CUDA tests on Ubuntu

|

name: Examples PyTorch CUDA tests on Ubuntu

|

||||||

|

|

||||||

runs-on: docker-gpu

|

runs-on: [single-gpu, nvidia-gpu, t4, ci]

|

||||||

|

|

||||||

container:

|

container:

|

||||||

image: diffusers/diffusers-pytorch-cuda

|

image: diffusers/diffusers-pytorch-cuda

|

||||||

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/hf_cache:/mnt/cache/

|

options: --gpus 0 --shm-size "16gb" --ipc host -v /mnt/cache/.cache/huggingface:/mnt/cache/

|

||||||

|

|

||||||

steps:

|

steps:

|

||||||

- name: Checkout diffusers

|

- name: Checkout diffusers

|

||||||

|

|||||||

@@ -81,16 +81,14 @@

|

|||||||

title: ControlNet

|

title: ControlNet

|

||||||

- local: using-diffusers/t2i_adapter

|

- local: using-diffusers/t2i_adapter

|

||||||

title: T2I-Adapter

|

title: T2I-Adapter

|

||||||

|

- local: using-diffusers/inference_with_lcm

|

||||||

|

title: Latent Consistency Model

|

||||||

- local: using-diffusers/textual_inversion_inference

|

- local: using-diffusers/textual_inversion_inference

|

||||||

title: Textual inversion

|

title: Textual inversion

|

||||||

- local: using-diffusers/shap-e

|

- local: using-diffusers/shap-e

|

||||||

title: Shap-E

|

title: Shap-E

|

||||||

- local: using-diffusers/diffedit

|

- local: using-diffusers/diffedit

|

||||||

title: DiffEdit

|

title: DiffEdit

|

||||||

- local: using-diffusers/inference_with_lcm_lora

|

|

||||||

title: Latent Consistency Model-LoRA

|

|

||||||

- local: using-diffusers/inference_with_lcm

|

|

||||||

title: Latent Consistency Model

|

|

||||||

- local: using-diffusers/inference_with_tcd_lora

|

- local: using-diffusers/inference_with_tcd_lora

|

||||||

title: Trajectory Consistency Distillation-LoRA

|

title: Trajectory Consistency Distillation-LoRA

|

||||||

- local: using-diffusers/svd

|

- local: using-diffusers/svd

|

||||||

@@ -141,8 +139,6 @@

|

|||||||

- sections:

|

- sections:

|

||||||

- local: optimization/fp16

|

- local: optimization/fp16

|

||||||

title: Speed up inference

|

title: Speed up inference

|

||||||

- local: using-diffusers/distilled_sd

|

|

||||||

title: Distilled Stable Diffusion inference

|

|

||||||

- local: optimization/memory

|

- local: optimization/memory

|

||||||

title: Reduce memory usage

|

title: Reduce memory usage

|

||||||

- local: optimization/torch2.0

|

- local: optimization/torch2.0

|

||||||

|

|||||||

@@ -55,3 +55,6 @@ An attention processor is a class for applying different types of attention mech

|

|||||||

|

|

||||||

## XFormersAttnProcessor

|

## XFormersAttnProcessor

|

||||||

[[autodoc]] models.attention_processor.XFormersAttnProcessor

|

[[autodoc]] models.attention_processor.XFormersAttnProcessor

|

||||||

|

|

||||||

|

## AttnProcessorNPU

|

||||||

|

[[autodoc]] models.attention_processor.AttnProcessorNPU

|

||||||

|

|||||||

@@ -12,27 +12,23 @@ specific language governing permissions and limitations under the License.

|

|||||||

|

|

||||||

# Speed up inference

|

# Speed up inference

|

||||||

|

|

||||||

There are several ways to optimize 🤗 Diffusers for inference speed. As a general rule of thumb, we recommend using either [xFormers](xformers) or `torch.nn.functional.scaled_dot_product_attention` in PyTorch 2.0 for their memory-efficient attention.

|

There are several ways to optimize Diffusers for inference speed, such as reducing the computational burden by lowering the data precision or using a lightweight distilled model. There are also memory-efficient attention implementations, [xFormers](xformers) and [scaled dot product attention](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html) in PyTorch 2.0, that reduce memory usage, which also indirectly speeds up inference. Different speed optimizations can be stacked together to get the fastest inference times.

|

||||||

|

|

||||||

<Tip>

|

> [!TIP]

|

||||||

|

> Optimizing for inference speed or reduced memory usage can lead to improved performance in the other category, so you should try to optimize for both whenever you can. This guide focuses on inference speed, but you can learn more about lowering memory usage in the [Reduce memory usage](memory) guide.

|

||||||

|

|

||||||

In many cases, optimizing for speed or memory leads to improved performance in the other, so you should try to optimize for both whenever you can. This guide focuses on inference speed, but you can learn more about preserving memory in the [Reduce memory usage](memory) guide.

|

The inference times below are obtained from generating a single 512x512 image from the prompt "a photo of an astronaut riding a horse on mars" with 50 DDIM steps on an NVIDIA A100.

|

||||||

|

|

||||||

</Tip>

|

| setup | latency | speed-up |

|

||||||

|

|----------|---------|----------|

|

||||||

|

| baseline | 5.27s | x1 |

|

||||||

|

| tf32 | 4.14s | x1.27 |

|

||||||

|

| fp16 | 3.51s | x1.50 |

|

||||||

|

| combined | 3.41s | x1.54 |

|

||||||

|

|

||||||

The results below are obtained from generating a single 512x512 image from the prompt `a photo of an astronaut riding a horse on mars` with 50 DDIM steps on a Nvidia Titan RTX, demonstrating the speed-up you can expect.

|

## TensorFloat-32

|

||||||

|

|

||||||

| | latency | speed-up |

|

On Ampere and later CUDA devices, matrix multiplications and convolutions can use the [TensorFloat-32 (tf32)](https://blogs.nvidia.com/blog/2020/05/14/tensorfloat-32-precision-format/) mode for faster, but slightly less accurate computations. By default, PyTorch enables tf32 mode for convolutions but not matrix multiplications. Unless your network requires full float32 precision, we recommend enabling tf32 for matrix multiplications. It can significantly speed up computations with typically negligible loss in numerical accuracy.

|

||||||

| ---------------- | ------- | ------- |

|

|

||||||

| original | 9.50s | x1 |

|

|

||||||

| fp16 | 3.61s | x2.63 |

|

|

||||||

| channels last | 3.30s | x2.88 |

|

|

||||||

| traced UNet | 3.21s | x2.96 |

|

|

||||||

| memory efficient attention | 2.63s | x3.61 |

|

|

||||||

|

|

||||||

## Use TensorFloat-32

|

|

||||||

|

|

||||||

On Ampere and later CUDA devices, matrix multiplications and convolutions can use the [TensorFloat-32 (TF32)](https://blogs.nvidia.com/blog/2020/05/14/tensorfloat-32-precision-format/) mode for faster, but slightly less accurate computations. By default, PyTorch enables TF32 mode for convolutions but not matrix multiplications. Unless your network requires full float32 precision, we recommend enabling TF32 for matrix multiplications. It can significantly speeds up computations with typically negligible loss in numerical accuracy.

|

|

||||||

|

|

||||||

```python

|

```python

|

||||||

import torch

|

import torch

|

||||||

@@ -40,11 +36,11 @@ import torch

|

|||||||

torch.backends.cuda.matmul.allow_tf32 = True

|

torch.backends.cuda.matmul.allow_tf32 = True

|

||||||

```

|

```

|

||||||

|

|

||||||

You can learn more about TF32 in the [Mixed precision training](https://huggingface.co/docs/transformers/en/perf_train_gpu_one#tf32) guide.

|

Learn more about tf32 in the [Mixed precision training](https://huggingface.co/docs/transformers/en/perf_train_gpu_one#tf32) guide.

|

||||||

|

|

||||||

## Half-precision weights

|

## Half-precision weights

|

||||||

|

|

||||||

To save GPU memory and get more speed, try loading and running the model weights directly in half-precision or float16:

|

To save GPU memory and get more speed, set `torch_dtype=torch.float16` to load and run the model weights directly with half-precision weights.

|

||||||

|

|

||||||

```Python

|

```Python

|

||||||

import torch

|

import torch

|

||||||

@@ -56,19 +52,76 @@ pipe = DiffusionPipeline.from_pretrained(

|

|||||||

use_safetensors=True,

|

use_safetensors=True,

|

||||||

)

|

)

|

||||||

pipe = pipe.to("cuda")

|

pipe = pipe.to("cuda")

|

||||||

|

|

||||||

prompt = "a photo of an astronaut riding a horse on mars"

|

|

||||||

image = pipe(prompt).images[0]

|

|

||||||

```

|

```

|

||||||

|

|

||||||

<Tip warning={true}>

|

> [!WARNING]

|

||||||

|

> Don't use [torch.autocast](https://pytorch.org/docs/stable/amp.html#torch.autocast) in any of the pipelines as it can lead to black images and is always slower than pure float16 precision.

|

||||||

Don't use [`torch.autocast`](https://pytorch.org/docs/stable/amp.html#torch.autocast) in any of the pipelines as it can lead to black images and is always slower than pure float16 precision.

|

|

||||||

|

|

||||||

</Tip>

|

|

||||||

|

|

||||||

## Distilled model

|

## Distilled model

|

||||||

|

|

||||||

You could also use a distilled Stable Diffusion model and autoencoder to speed up inference. During distillation, many of the UNet's residual and attention blocks are shed to reduce the model size. The distilled model is faster and uses less memory while generating images of comparable quality to the full Stable Diffusion model.

|

You could also use a distilled Stable Diffusion model and autoencoder to speed up inference. During distillation, many of the UNet's residual and attention blocks are shed to reduce the model size by 51% and improve latency on CPU/GPU by 43%. The distilled model is faster and uses less memory while generating images of comparable quality to the full Stable Diffusion model.

|

||||||

|

|

||||||

Learn more about in the [Distilled Stable Diffusion inference](../using-diffusers/distilled_sd) guide!

|

> [!TIP]

|

||||||

|

> Read the [Open-sourcing Knowledge Distillation Code and Weights of SD-Small and SD-Tiny](https://huggingface.co/blog/sd_distillation) blog post to learn more about how knowledge distillation training works to produce a faster, smaller, and cheaper generative model.

|

||||||

|

|

||||||

|

The inference times below are obtained from generating 4 images from the prompt "a photo of an astronaut riding a horse on mars" with 25 PNDM steps on an NVIDIA A100. Each generation is repeated 3 times with the distilled Stable Diffusion v1.4 model by [Nota AI](https://hf.co/nota-ai).

|

||||||

|

|

||||||

|

| setup | latency | speed-up |

|

||||||

|

|------------------------------|---------|----------|

|

||||||

|

| baseline | 6.37s | x1 |

|

||||||

|

| distilled | 4.18s | x1.52 |

|

||||||

|

| distilled + tiny autoencoder | 3.83s | x1.66 |
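
The speed-up column in the table above appears to be the baseline latency divided by each setup's latency. The following is a minimal sketch of that arithmetic, assuming the values are rounded to two decimals; the `latencies` dictionary and the `speed_up` helper are illustrative names and not part of Diffusers.

```python
# Latencies in seconds, copied from the distilled-model table above.
latencies = {
    "baseline": 6.37,
    "distilled": 4.18,
    "distilled + tiny autoencoder": 3.83,
}


def speed_up(baseline_s: float, setup_s: float) -> float:
    """Return how many times faster a setup is than the baseline (hypothetical helper)."""
    return baseline_s / setup_s


# Assumption: the table reports the ratio rounded to two decimals.
speed_ups = {
    name: round(speed_up(latencies["baseline"], seconds), 2)
    for name, seconds in latencies.items()
}

for name, factor in speed_ups.items():
    print(f"{name}: x{factor:.2f}")
# baseline: x1.00
# distilled: x1.52
# distilled + tiny autoencoder: x1.66
```

The same ratio can be applied to your own measurements, as long as the baseline and the optimized setup are timed on the same hardware, prompt, and number of steps.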

|

||||||

|

|

||||||

|

Let's load the distilled Stable Diffusion model and compare it against the original Stable Diffusion model.

|

||||||

|

|

||||||

|

```py

|

||||||

|

from diffusers import StableDiffusionPipeline

|

||||||

|

import torch

|

||||||

|

|

||||||

|

distilled = StableDiffusionPipeline.from_pretrained(

|

||||||

|

"nota-ai/bk-sdm-small", torch_dtype=torch.float16, use_safetensors=True,

|

||||||

|

).to("cuda")

|

||||||

|

prompt = "a golden vase with different flowers"

|

||||||

|

generator = torch.manual_seed(2023)

|

||||||

|

image = distilled(prompt, num_inference_steps=25, generator=generator).images[0]

|

||||||

|

image

|

||||||

|

```

|

||||||

|

|

||||||

|

<div class="flex gap-4">

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/original_sd.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">original Stable Diffusion</figcaption>

|

||||||

|

</div>

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/distilled_sd.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">distilled Stable Diffusion</figcaption>

|

||||||

|

</div>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

### Tiny AutoEncoder

|

||||||

|

|

||||||

|

To speed inference up even more, replace the autoencoder with a [distilled version](https://huggingface.co/sayakpaul/taesd-diffusers) of it.

|

||||||

|

|

||||||

|

```py

|

||||||

|

import torch

|

||||||

|

from diffusers import AutoencoderTiny, StableDiffusionPipeline

|

||||||

|

|

||||||

|

distilled = StableDiffusionPipeline.from_pretrained(

|

||||||

|

"nota-ai/bk-sdm-small", torch_dtype=torch.float16, use_safetensors=True,

|

||||||

|

).to("cuda")

|

||||||

|

distilled.vae = AutoencoderTiny.from_pretrained(

|

||||||

|

"sayakpaul/taesd-diffusers", torch_dtype=torch.float16, use_safetensors=True,

|

||||||

|

).to("cuda")

|

||||||

|

|

||||||

|

prompt = "a golden vase with different flowers"

|

||||||

|

generator = torch.manual_seed(2023)

|

||||||

|

image = distilled(prompt, num_inference_steps=25, generator=generator).images[0]

|

||||||

|

image

|

||||||

|

```

|

||||||

|

|

||||||

|

<div class="flex justify-center">

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/distilled_sd_vae.png" />

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">distilled Stable Diffusion + Tiny AutoEncoder</figcaption>

|

||||||

|

</div>

|

||||||

|

</div>

|

||||||

|

|||||||

@@ -1,133 +0,0 @@

|

|||||||

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

|

|

||||||

|

|

||||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

|

||||||

the License. You may obtain a copy of the License at

|

|

||||||

|

|

||||||

http://www.apache.org/licenses/LICENSE-2.0

|

|

||||||

|

|

||||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

|

||||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

|

||||||

specific language governing permissions and limitations under the License.

|

|

||||||

-->

|

|

||||||

|

|

||||||

# Distilled Stable Diffusion inference

|

|

||||||

|

|

||||||

[[open-in-colab]]

|

|

||||||

|

|

||||||

Stable Diffusion inference can be a computationally intensive process because it must iteratively denoise the latents to generate an image. To reduce the computational burden, you can use a *distilled* version of the Stable Diffusion model from [Nota AI](https://huggingface.co/nota-ai). The distilled version of their Stable Diffusion model eliminates some of the residual and attention blocks from the UNet, reducing the model size by 51% and improving latency on CPU/GPU by 43%.

|

|

||||||

|

|

||||||

<Tip>

|

|

||||||

|

|

||||||

Read this [blog post](https://huggingface.co/blog/sd_distillation) to learn more about how knowledge distillation training works to produce a faster, smaller, and cheaper generative model.

|

|

||||||

|

|

||||||

</Tip>

|

|

||||||

|

|

||||||

Let's load the distilled Stable Diffusion model and compare it against the original Stable Diffusion model:

|

|

||||||

|

|

||||||

```py

|

|

||||||

from diffusers import StableDiffusionPipeline

|

|

||||||

import torch

|

|

||||||

|

|

||||||

distilled = StableDiffusionPipeline.from_pretrained(

|

|

||||||

"nota-ai/bk-sdm-small", torch_dtype=torch.float16, use_safetensors=True,

|

|

||||||

).to("cuda")

|

|

||||||

|

|

||||||

original = StableDiffusionPipeline.from_pretrained(

|

|

||||||

"CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16, use_safetensors=True,

|

|

||||||

).to("cuda")

|

|

||||||

```

|

|

||||||

|

|

||||||

Given a prompt, get the inference time for the original model:

|

|

||||||

|

|

||||||

```py

|

|

||||||

import time

|

|

||||||

|

|

||||||

seed = 2023

|

|

||||||

generator = torch.manual_seed(seed)

|

|

||||||

|

|

||||||

NUM_ITERS_TO_RUN = 3

|

|

||||||

NUM_INFERENCE_STEPS = 25

|

|

||||||

NUM_IMAGES_PER_PROMPT = 4

|

|

||||||

|

|

||||||

prompt = "a golden vase with different flowers"

|

|

||||||

|

|

||||||

start = time.time_ns()

|

|

||||||

for _ in range(NUM_ITERS_TO_RUN):

|

|

||||||

images = original(

|

|

||||||

prompt,

|

|

||||||

num_inference_steps=NUM_INFERENCE_STEPS,

|

|

||||||

generator=generator,

|

|

||||||

num_images_per_prompt=NUM_IMAGES_PER_PROMPT

|

|

||||||

).images

|

|

||||||

end = time.time_ns()

|

|

||||||

original_sd = f"{(end - start) / 1e6:.1f}"

|

|

||||||

|

|

||||||

print(f"Execution time -- {original_sd} ms\n")

|

|

||||||

"Execution time -- 45781.5 ms"

|

|

||||||

```

|

|

||||||

|

|

||||||

Time the distilled model inference:

|

|

||||||

|

|

||||||

```py

|

|

||||||

start = time.time_ns()

|

|

||||||

for _ in range(NUM_ITERS_TO_RUN):

|

|

||||||

images = distilled(

|

|

||||||

prompt,

|

|

||||||

num_inference_steps=NUM_INFERENCE_STEPS,

|

|

||||||

generator=generator,

|

|

||||||

num_images_per_prompt=NUM_IMAGES_PER_PROMPT

|

|

||||||

).images

|

|

||||||

end = time.time_ns()

|

|

||||||

|

|

||||||

distilled_sd = f"{(end - start) / 1e6:.1f}"

|

|

||||||

print(f"Execution time -- {distilled_sd} ms\n")

|

|

||||||

"Execution time -- 29884.2 ms"

|

|

||||||

```

|

|

||||||

|

|

||||||

<div class="flex gap-4">

|

|

||||||

<div>

|

|

||||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/original_sd.png"/>

|

|

||||||

<figcaption class="mt-2 text-center text-sm text-gray-500">original Stable Diffusion (45781.5 ms)</figcaption>

|

|

||||||

</div>

|

|

||||||

<div>

|

|

||||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/distilled_sd.png"/>

|

|

||||||

<figcaption class="mt-2 text-center text-sm text-gray-500">distilled Stable Diffusion (29884.2 ms)</figcaption>

|

|

||||||

</div>

|

|

||||||

</div>

|

|

||||||

|

|

||||||

## Tiny AutoEncoder

|

|

||||||

|

|

||||||

To speed inference up even more, use a tiny distilled version of the [Stable Diffusion VAE](https://huggingface.co/sayakpaul/taesdxl-diffusers) to decode the latents into images. Replace the VAE in the distilled Stable Diffusion model with the tiny VAE:

|

|

||||||

|

|

||||||

```py

|

|

||||||

from diffusers import AutoencoderTiny

|

|

||||||

|

|

||||||

distilled.vae = AutoencoderTiny.from_pretrained(

|

|

||||||

"sayakpaul/taesd-diffusers", torch_dtype=torch.float16, use_safetensors=True,

|

|

||||||

).to("cuda")

|

|

||||||

```

|

|

||||||

|

|

||||||

Time the distilled model and distilled VAE inference:

|

|

||||||

|

|

||||||

```py

|

|

||||||

start = time.time_ns()

|

|

||||||

for _ in range(NUM_ITERS_TO_RUN):

|

|

||||||

images = distilled(

|

|

||||||

prompt,

|

|

||||||

num_inference_steps=NUM_INFERENCE_STEPS,

|

|

||||||

generator=generator,

|

|

||||||

num_images_per_prompt=NUM_IMAGES_PER_PROMPT

|

|

||||||

).images

|

|

||||||

end = time.time_ns()

|

|

||||||

|

|

||||||

distilled_tiny_sd = f"{(end - start) / 1e6:.1f}"

|

|

||||||

print(f"Execution time -- {distilled_tiny_sd} ms\n")

|

|

||||||

"Execution time -- 27165.7 ms"

|

|

||||||

```
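The timing blocks in this guide repeat the same start/loop/end pattern. A minimal sketch of a reusable helper follows; `time_ms` is a hypothetical name, and the workload is a stand-in so the snippet runs without a GPU. In practice you would pass a callable that invokes the pipeline.

```py
import time


def time_ms(fn, num_iters=3):
    """Run fn num_iters times and return the total wall-clock time in milliseconds."""
    start = time.perf_counter()
    for _ in range(num_iters):
        fn()
    return (time.perf_counter() - start) * 1e3


# Stand-in workload. To time a pipeline instead, pass something like:
# lambda: distilled(prompt, num_inference_steps=25, num_images_per_prompt=4)
calls = []
elapsed = time_ms(lambda: calls.append(sum(range(10_000))), num_iters=3)
print(f"Execution time -- {elapsed:.1f} ms")
```

`time.perf_counter` is used here instead of `time.time_ns` because it is a monotonic clock intended for measuring intervals; either works for a rough comparison.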

|

|

||||||

|

|

||||||

<div class="flex justify-center">

|

|

||||||

<div>

|

|

||||||

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/distilled_sd_vae.png" />

|

|

||||||

<figcaption class="mt-2 text-center text-sm text-gray-500">distilled Stable Diffusion + Tiny AutoEncoder (27165.7 ms)</figcaption>

|

|

||||||

</div>

|

|

||||||

</div>

|

|

||||||

@@ -10,29 +10,30 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

|

|||||||

specific language governing permissions and limitations under the License.

|

specific language governing permissions and limitations under the License.

|

||||||

-->

|

-->

|

||||||

|

|

||||||

[[open-in-colab]]

|

|

||||||

|

|

||||||

# Latent Consistency Model

|

# Latent Consistency Model

|

||||||

|

|

||||||

Latent Consistency Models (LCMs) enable high-quality image generation in typically 2-4 steps, making it possible to use diffusion models in near real-time settings.

|

[[open-in-colab]]

|

||||||

|

|

||||||

From the [official website](https://latent-consistency-models.github.io/):

|

[Latent Consistency Models (LCMs)](https://hf.co/papers/2310.04378) enable fast high-quality image generation by directly predicting the reverse diffusion process in the latent rather than pixel space. In other words, LCMs try to predict the noiseless image from the noisy image in contrast to typical diffusion models that iteratively remove noise from the noisy image. By avoiding the iterative sampling process, LCMs are able to generate high-quality images in 2-4 steps instead of 20-30 steps.

|

||||||

|

|

||||||

> LCMs can be distilled from any pre-trained Stable Diffusion (SD) in only 4,000 training steps (~32 A100 GPU Hours) for generating high quality 768 x 768 resolution images in 2~4 steps or even one step, significantly accelerating text-to-image generation. We employ LCM to distill the Dreamshaper-V7 version of SD in just 4,000 training iterations.

|

LCMs are distilled from pretrained models, which requires ~32 hours of A100 compute. To speed this up, [LCM-LoRAs](https://hf.co/papers/2311.05556) train a [LoRA adapter](https://huggingface.co/docs/peft/conceptual_guides/adapter#low-rank-adaptation-lora), which has far fewer parameters to train than the full model. The LCM-LoRA can be plugged into a diffusion model once it has been trained.

|

||||||

|

|

||||||

For a more technical overview of LCMs, refer to [the paper](https://huggingface.co/papers/2310.04378).

|

This guide will show you how to use LCMs and LCM-LoRAs for fast inference on tasks such as text-to-image, image-to-image, and inpainting, and how to use them with other adapters like ControlNet or T2I-Adapter.

|

||||||

|

|

||||||

LCM distilled models are available for [stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5), [stable-diffusion-xl-base-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0), and the [SSD-1B](https://huggingface.co/segmind/SSD-1B) model. All the checkpoints can be found in this [collection](https://huggingface.co/collections/latent-consistency/latent-consistency-models-weights-654ce61a95edd6dffccef6a8).

|

> [!TIP]

|

||||||

|

> LCMs and LCM-LoRAs are available for Stable Diffusion v1.5, Stable Diffusion XL, and the SSD-1B model. You can find their checkpoints on the [Latent Consistency](https://hf.co/collections/latent-consistency/latent-consistency-models-weights-654ce61a95edd6dffccef6a8) Collections.

|

||||||

This guide shows how to perform inference with LCMs for

|

|

||||||

- text-to-image

|

|

||||||

- image-to-image

|

|

||||||

- combined with style LoRAs

|

|

||||||

- ControlNet/T2I-Adapter

|

|

||||||

|

|

||||||

## Text-to-image

|

## Text-to-image

|

||||||

|

|

||||||

You'll use the [`StableDiffusionXLPipeline`] pipeline with the [`LCMScheduler`] and then load the LCM-LoRA. Together with the LCM-LoRA and the scheduler, the pipeline enables a fast inference workflow, overcoming the slow iterative nature of diffusion models.

|

<hfoptions id="lcm-text2img">

|

||||||

|

<hfoption id="LCM">

|

||||||

|

|

||||||

|

To use LCMs, you need to load the LCM checkpoint for your supported model into [`UNet2DConditionModel`] and replace the scheduler with the [`LCMScheduler`]. Then you can use the pipeline as usual, and pass a text prompt to generate an image in just 4 steps.

|

||||||

|

|

||||||

|

A couple of notes to keep in mind when using LCMs are:

|

||||||

|

|

||||||

|

* Typically, batch size is doubled inside the pipeline for classifier-free guidance. But LCM applies guidance with guidance embeddings and doesn't need to double the batch size, which leads to faster inference. The downside is that negative prompts don't work with LCM because they don't have any effect on the denoising process.

|

||||||

|

* The ideal range for `guidance_scale` is [3., 13.] because that is what the UNet was trained with. However, disabling `guidance_scale` with a value of 1.0 is also effective in most cases.
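To make the first note concrete, here is a rough back-of-the-envelope sketch that counts how many samples pass through the UNet to generate one image. It assumes a standard pipeline that doubles the batch for classifier-free guidance and runs 25 steps (an illustrative value inside the 20-30 range mentioned earlier), compared with an LCM at 4 steps and no doubling. The function name is hypothetical, and the count ignores everything except the UNet.

```py
def unet_samples_per_image(num_inference_steps, doubles_batch_for_cfg):
    """Count the samples passed through the UNet to generate one image."""
    batch_multiplier = 2 if doubles_batch_for_cfg else 1
    return num_inference_steps * batch_multiplier


# Standard diffusion with classifier-free guidance (assumed 25 steps).
standard = unet_samples_per_image(25, doubles_batch_for_cfg=True)
# LCM: guidance comes from guidance embeddings, so the batch is not doubled.
lcm = unet_samples_per_image(4, doubles_batch_for_cfg=False)

print(f"standard: {standard}, LCM: {lcm}, ratio: {standard / lcm}")
```

This is only a proxy for cost; measured latency also depends on the scheduler, the VAE, and the hardware.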

|

||||||

|

|

||||||

```python

|

```python

|

||||||

from diffusers import StableDiffusionXLPipeline, UNet2DConditionModel, LCMScheduler

|

from diffusers import StableDiffusionXLPipeline, UNet2DConditionModel, LCMScheduler

|

||||||

@@ -49,31 +50,69 @@ pipe = StableDiffusionXLPipeline.from_pretrained(

|

|||||||

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

||||||

|

|

||||||

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

|

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

|

||||||

|

|

||||||

generator = torch.manual_seed(0)

|

generator = torch.manual_seed(0)

|

||||||

image = pipe(

|

image = pipe(

|

||||||

prompt=prompt, num_inference_steps=4, generator=generator, guidance_scale=8.0

|

prompt=prompt, num_inference_steps=4, generator=generator, guidance_scale=8.0

|

||||||

).images[0]

|

).images[0]

|

||||||

|

image

|

||||||

```

|

```

|

||||||

|

|

||||||

|

<div class="flex justify-center">

|

||||||

|

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/lcm/lcm_full_sdxl_t2i.png"/>

|

||||||

|

</div>

|

||||||

|

|

||||||

Notice that generation uses only 4 steps, far fewer than what's typically used for standard SDXL.

|

</hfoption>

|

||||||

|

<hfoption id="LCM-LoRA">

|

||||||

|

|

||||||

Some details to keep in mind:

|

To use LCM-LoRAs, you need to replace the scheduler with the [`LCMScheduler`] and load the LCM-LoRA weights with the [`~loaders.LoraLoaderMixin.load_lora_weights`] method. Then you can use the pipeline as usual, and pass a text prompt to generate an image in just 4 steps.

|

||||||

|

|

||||||

* To perform classifier-free guidance, batch size is usually doubled inside the pipeline. LCM, however, applies guidance using guidance embeddings, so the batch size does not have to be doubled in this case. This leads to a faster inference time, with the drawback that negative prompts don't have any effect on the denoising process.

|

A couple of notes to keep in mind when using LCM-LoRAs are:

|

||||||

* The UNet was trained using the [3., 13.] guidance scale range. So, that is the ideal range for `guidance_scale`. However, disabling `guidance_scale` using a value of 1.0 is also effective in most cases.

|

|

||||||

|

|

||||||

|

* Typically, batch size is doubled inside the pipeline for classifier-free guidance. But LCM applies guidance with guidance embeddings and doesn't need to double the batch size, which leads to faster inference. The downside is that negative prompts don't work with LCM because they don't have any effect on the denoising process.

|

||||||

|

* You could use guidance with LCM-LoRAs, but it is very sensitive to high `guidance_scale` values and can lead to artifacts in the generated image. The best values we've found are between [1.0, 2.0].

|

||||||

|

* Replace [stabilityai/stable-diffusion-xl-base-1.0](https://hf.co/stabilityai/stable-diffusion-xl-base-1.0) with any finetuned model. For example, try using the [animagine-xl](https://huggingface.co/Linaqruf/animagine-xl) checkpoint to generate anime images with SDXL.

|

||||||

|

|

||||||

|

```py

|

||||||

|

import torch

|

||||||

|

from diffusers import DiffusionPipeline, LCMScheduler

|

||||||

|

|

||||||

|

pipe = DiffusionPipeline.from_pretrained(

|

||||||

|

"stabilityai/stable-diffusion-xl-base-1.0",

|

||||||

|

variant="fp16",

|

||||||

|

torch_dtype=torch.float16

|

||||||

|

).to("cuda")

|

||||||

|

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

||||||

|

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

|

||||||

|

|

||||||

|

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

|

||||||

|

generator = torch.manual_seed(42)

|

||||||

|

image = pipe(

|

||||||

|

prompt=prompt, num_inference_steps=4, generator=generator, guidance_scale=1.0

|

||||||

|

).images[0]

|

||||||

|

image

|

||||||

|

```

|

||||||

|

|

||||||

|

<div class="flex justify-center">

|

||||||

|

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/lcm/lcm_sdxl_t2i.png"/>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

</hfoption>

|

||||||

|

</hfoptions>

|

||||||

|

|

||||||

## Image-to-image

|

## Image-to-image

|

||||||

|

|

||||||

LCMs can be applied to image-to-image tasks too. For this example, we'll use the [LCM_Dreamshaper_v7](https://huggingface.co/SimianLuo/LCM_Dreamshaper_v7) model, but the same steps can be applied to other LCM models as well.

|

<hfoptions id="lcm-img2img">

|

||||||

|

<hfoption id="LCM">

|

||||||

|

|

||||||

|

To use LCMs for image-to-image, you need to load the LCM checkpoint for your supported model into [`UNet2DConditionModel`] and replace the scheduler with the [`LCMScheduler`]. Then you can use the pipeline as usual, and pass a text prompt and initial image to generate an image in just 4 steps.

|

||||||

|

|

||||||

|

> [!TIP]

|

||||||

|

> Experiment with different values for `num_inference_steps`, `strength`, and `guidance_scale` to get the best results.

|

||||||

|

|

||||||

```python

|

```python

|

||||||

import torch

|

import torch

|

||||||

from diffusers import AutoPipelineForImage2Image, UNet2DConditionModel, LCMScheduler

|

from diffusers import AutoPipelineForImage2Image, UNet2DConditionModel, LCMScheduler

|

||||||

from diffusers.utils import make_image_grid, load_image

|

from diffusers.utils import load_image

|

||||||

|

|

||||||

unet = UNet2DConditionModel.from_pretrained(

|

unet = UNet2DConditionModel.from_pretrained(

|

||||||

"SimianLuo/LCM_Dreamshaper_v7",

|

"SimianLuo/LCM_Dreamshaper_v7",

|

||||||

@@ -89,12 +128,8 @@ pipe = AutoPipelineForImage2Image.from_pretrained(

|

|||||||

).to("cuda")

|

).to("cuda")

|

||||||

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

||||||

|

|

||||||

# prepare image

|

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png")

|

||||||

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png"

|

|

||||||

init_image = load_image(url)

|

|

||||||

prompt = "Astronauts in a jungle, cold color palette, muted colors, detailed, 8k"

|

prompt = "Astronauts in a jungle, cold color palette, muted colors, detailed, 8k"

|

||||||

|

|

||||||

# pass prompt and image to pipeline

|

|

||||||

generator = torch.manual_seed(0)

|

generator = torch.manual_seed(0)

|

||||||

image = pipe(

|

image = pipe(

|

||||||

prompt,

|

prompt,

|

||||||

@@ -104,22 +139,130 @@ image = pipe(

|

|||||||

strength=0.5,

|

strength=0.5,

|

||||||

generator=generator

|

generator=generator

|

||||||

).images[0]

|

).images[0]

|

||||||

make_image_grid([init_image, image], rows=1, cols=2)

|

image

|

||||||

```

|

```

|

||||||

|

|

||||||

|

<div class="flex gap-4">

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">initial image</figcaption>

|

||||||

|

</div>

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/lcm-img2img.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">generated image</figcaption>

|

||||||

|

</div>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

</hfoption>

|

||||||

|

<hfoption id="LCM-LoRA">

|

||||||

|

|

||||||

<Tip>

|

To use LCM-LoRAs for image-to-image, you need to replace the scheduler with the [`LCMScheduler`] and load the LCM-LoRA weights with the [`~loaders.LoraLoaderMixin.load_lora_weights`] method. Then you can use the pipeline as usual, and pass a text prompt and initial image to generate an image in just 4 steps.

|

||||||

|

|

||||||

You can get different results based on your prompt and the image you provide. To get the best results, we recommend trying different values for the `num_inference_steps`, `strength`, and `guidance_scale` parameters and choosing the best combination.

|

> [!TIP]

|

||||||

|

> Experiment with different values for `num_inference_steps`, `strength`, and `guidance_scale` to get the best results.

|

||||||

|

|

||||||

</Tip>

|

```py

|

||||||

|

import torch

|

||||||

|

from diffusers import AutoPipelineForImage2Image, LCMScheduler

|

||||||

|

from diffusers.utils import make_image_grid, load_image

|

||||||

|

|

||||||

|

pipe = AutoPipelineForImage2Image.from_pretrained(

|

||||||

|

"Lykon/dreamshaper-7",

|

||||||

|

torch_dtype=torch.float16,

|

||||||

|

variant="fp16",

|

||||||

|

).to("cuda")

|

||||||

|

|

||||||

## Combine with style LoRAs

|

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

||||||

|

|

||||||

LCMs can be used with other style LoRAs to generate styled images in very few steps (4-8). In the following example, we'll use the [papercut LoRA](https://huggingface.co/TheLastBen/Papercut_SDXL).

|

pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")

|

||||||

|

|

||||||

|

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png")

|

||||||

|

prompt = "Astronauts in a jungle, cold color palette, muted colors, detailed, 8k"

|

||||||

|

|

||||||

|

generator = torch.manual_seed(0)

|

||||||

|

image = pipe(

|

||||||

|

prompt,

|

||||||

|

image=init_image,

|

||||||

|

num_inference_steps=4,

|

||||||

|

guidance_scale=1,

|

||||||

|

strength=0.6,

|

||||||

|

generator=generator

|

||||||

|

).images[0]

|

||||||

|

image

|

||||||

|

```

|

||||||

|

|

||||||

|

<div class="flex gap-4">

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">initial image</figcaption>

|

||||||

|

</div>

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/lcm-lora-img2img.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">generated image</figcaption>

|

||||||

|

</div>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

</hfoption>

|

||||||

|

</hfoptions>

|

||||||

|

|

||||||

|

## Inpainting

|

||||||

|

|

||||||

|

To use LCM-LoRAs for inpainting, you need to replace the scheduler with the [`LCMScheduler`] and load the LCM-LoRA weights with the [`~loaders.LoraLoaderMixin.load_lora_weights`] method. Then you can use the pipeline as usual, and pass a text prompt, initial image, and mask image to generate an image in just 4 steps.

|

||||||

|

|

||||||

|

```py

|

||||||

|

import torch

|

||||||

|

from diffusers import AutoPipelineForInpainting, LCMScheduler

|

||||||

|

from diffusers.utils import load_image, make_image_grid

|

||||||

|

|

||||||

|

pipe = AutoPipelineForInpainting.from_pretrained(

|

||||||

|

"runwayml/stable-diffusion-inpainting",

|

||||||

|

torch_dtype=torch.float16,

|

||||||

|

variant="fp16",

|

||||||

|

).to("cuda")

|

||||||

|

|

||||||

|

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

||||||

|

|

||||||

|

pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")

|

||||||

|

|

||||||

|

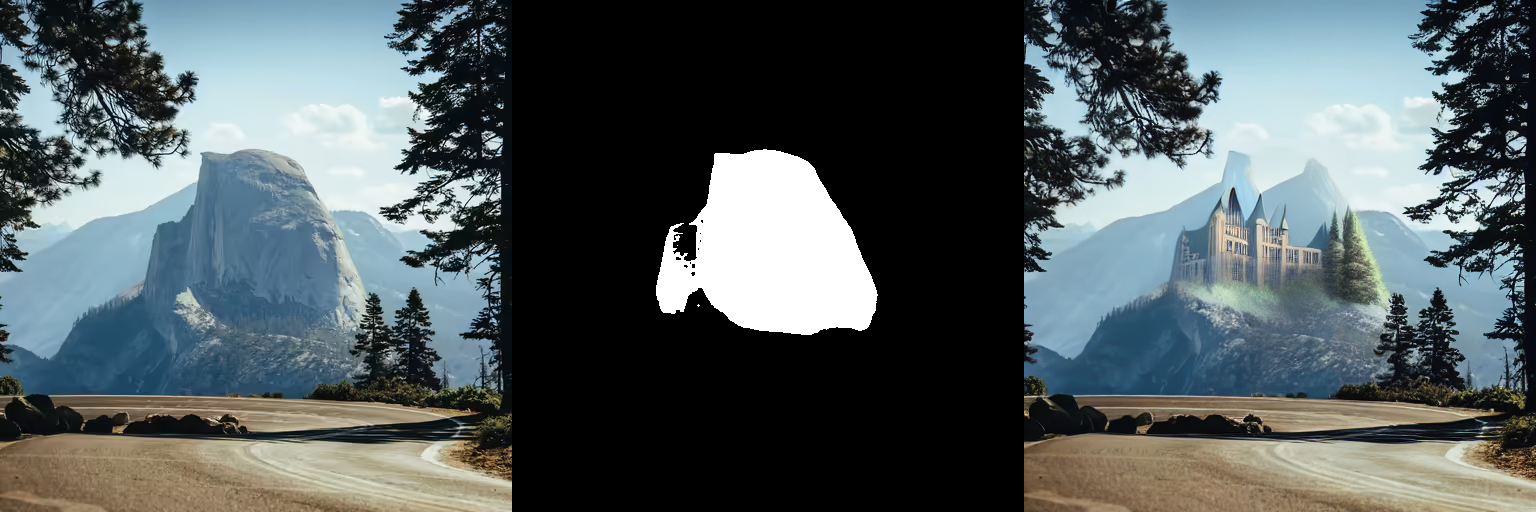

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint.png")

|

||||||

|

mask_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint_mask.png")

|

||||||

|

|

||||||

|

prompt = "concept art digital painting of an elven castle, inspired by lord of the rings, highly detailed, 8k"

|

||||||

|

generator = torch.manual_seed(0)

|

||||||

|

image = pipe(

|

||||||

|

prompt=prompt,

|

||||||

|

image=init_image,

|

||||||

|

mask_image=mask_image,

|

||||||

|

generator=generator,

|

||||||

|

num_inference_steps=4,

|

||||||

|

guidance_scale=4,

|

||||||

|

).images[0]

|

||||||

|

image

|

||||||

|

```

|

||||||

|

|

||||||

|

<div class="flex gap-4">

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">initial image</figcaption>

|

||||||

|

</div>

|

||||||

|

<div>

|

||||||

|

<img class="rounded-xl" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/lcm-lora-inpaint.png"/>

|

||||||

|

<figcaption class="mt-2 text-center text-sm text-gray-500">generated image</figcaption>

|

||||||

|

</div>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

## Adapters

|

||||||

|

|

||||||

|